#1: Rules, Rules, RULES!!

Dan Toomey, Senior Integration Architect at Deloitte Australia, kicked off the session by highlighting the essential role of business rules in software development. He emphasized the significance of managing evolving and complex business rules, advocating for the use of effective tools like Business Rules Management Systems (BRMS) to safeguard code and services.

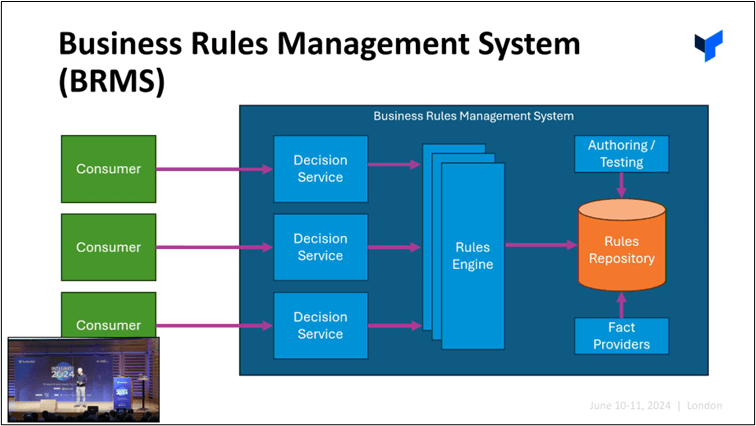

A BRMS includes key components like rule storage, authoring tools, and rules engines, enabling comprehensive rule application across various scenarios.
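To make these components concrete, here is a minimal sketch of a rule store plus a rules engine in plain Python. It is not the Azure rules engine or the Business Rules Composer format; the `Rule` and `RuleEngine` names, the order fields, and the thresholds are all hypothetical and chosen only for illustration.

```python
from dataclasses import dataclass
from typing import Callable


@dataclass
class Rule:
    """One business rule: a condition and the action to take when it holds."""
    name: str
    condition: Callable[[dict], bool]
    action: Callable[[dict], None]


class RuleEngine:
    """Evaluates every stored rule against a fact and fires the matching ones."""

    def __init__(self):
        self.rules = []  # stands in for the rule store

    def add(self, rule):
        self.rules.append(rule)

    def run(self, fact):
        fired = []
        for rule in self.rules:
            if rule.condition(fact):
                rule.action(fact)
                fired.append(rule.name)
        return fired


def apply_discount(order):
    order["discount"] = 0.1


# "Authoring": rules live outside the application code that calls the engine.
engine = RuleEngine()
engine.add(Rule("bulk-discount", lambda o: o["quantity"] >= 100, apply_discount))
engine.add(Rule("flag-large", lambda o: o["total"] > 10_000, lambda o: o.update(review=True)))

order = {"quantity": 120, "total": 5_000}
fired = engine.run(order)
print(fired, order)
```

Because the rules are data handed to the engine, they can be changed or tested without touching the calling service, which is the main point of keeping them in a BRMS.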

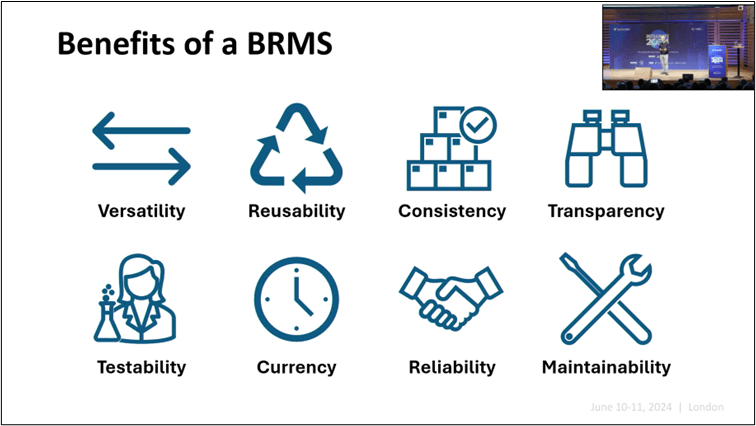

Dan Toomey highlighted how a BRMS offers versatility through quick and cost-effective rule updates, fosters reuse across multiple applications, enables independent testing, supports adaptability to changes, and enhances rule manageability.

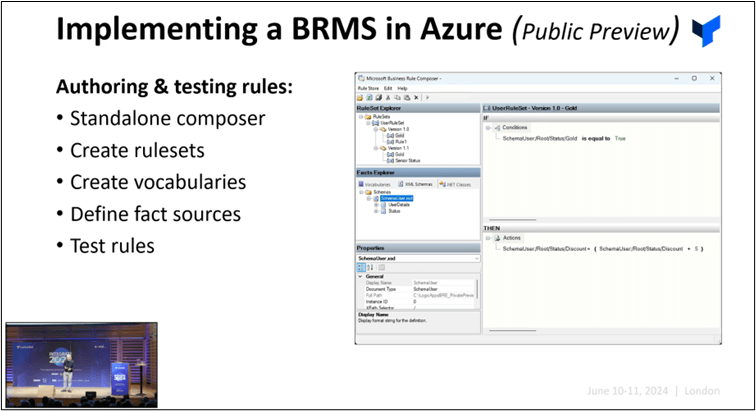

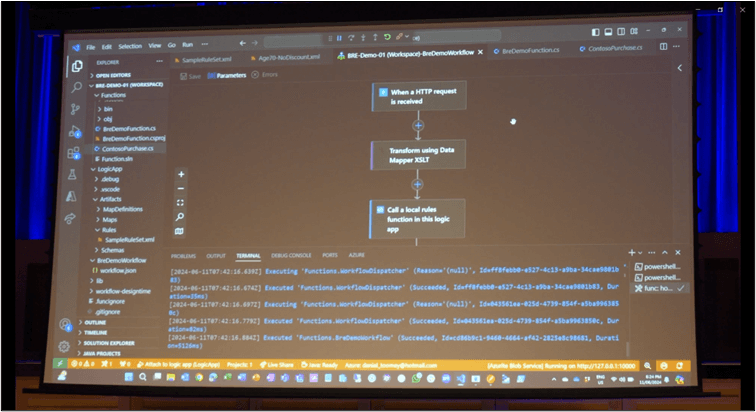

He then demonstrated setting up a BRMS in Azure, starting with an introduction to the Business Rules Composer (BRC). The BRC enables creating and testing rules independently, with user-friendly features like vocabulary support and compatibility with various data sources.

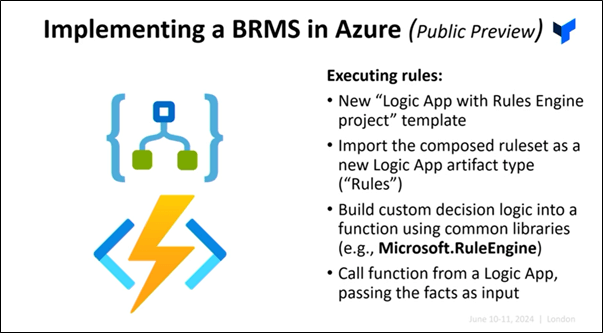

Moving on, Dan explained the execution of rules using logic apps and functions. Microsoft introduced a project template “Logic App with Rule Engine” tailored for rule development and a corresponding artifact type for rules deployment.

Finally, Dan demonstrated the practical use of BRC by configuring rule sets and performing tests.

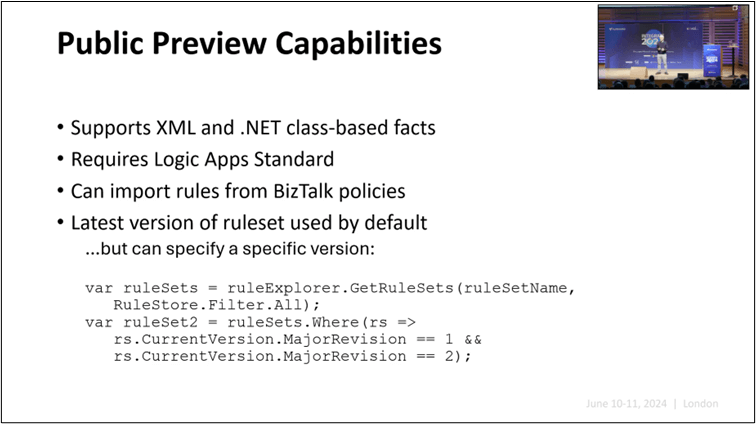

In closing, Dan highlighted BRC’s use of XML, ensuring seamless transition from BizTalk Server to BRC with compatibility and straightforward migration. He also underscored the supported schemas and noted the Business Rules Composer’s ability to manage different versions of rule sets, enabling developers to target specific versions as needed.

#2: The evolution of Azure Message brokers

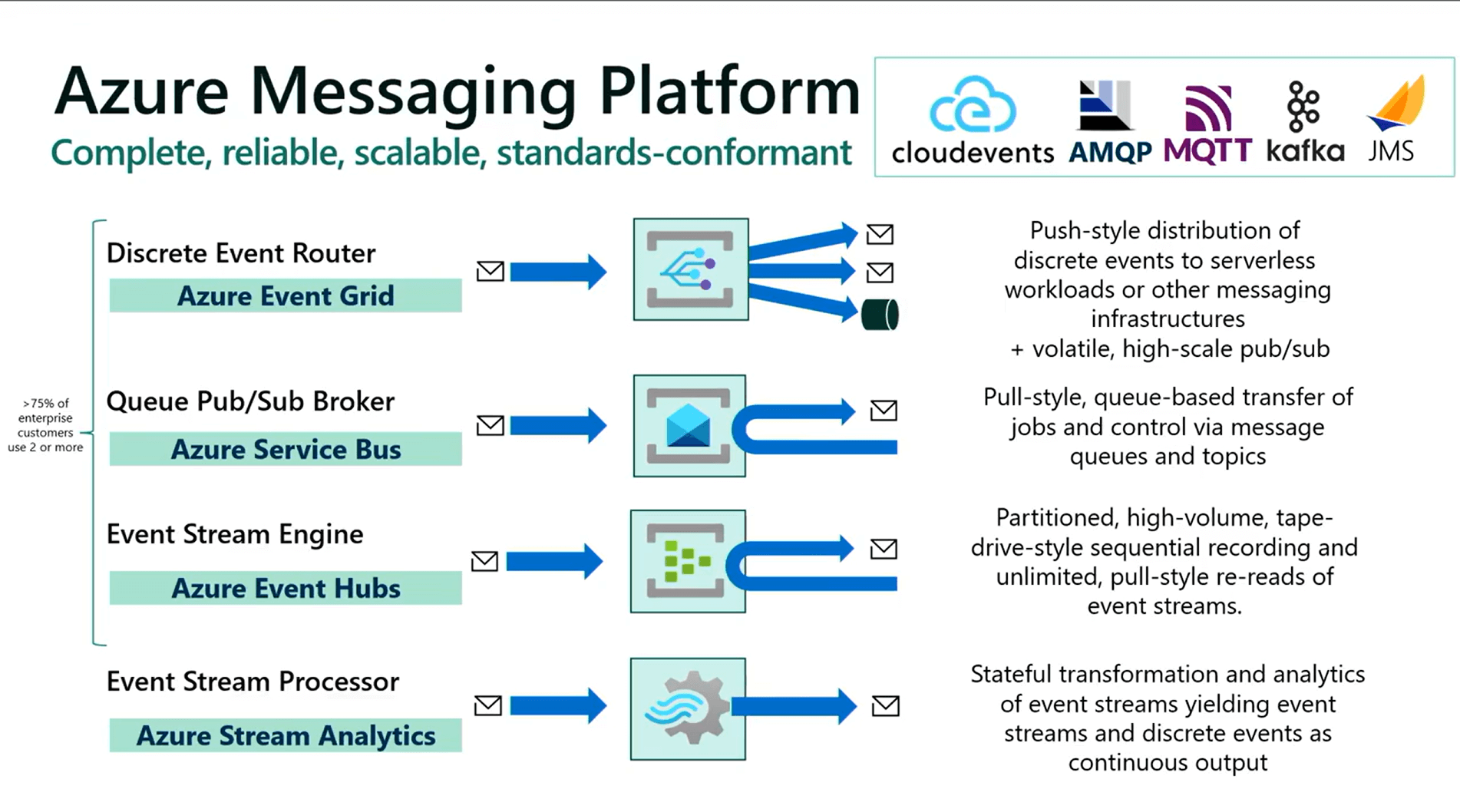

Clemens and Christina from the Microsoft messaging team began the session on Azure messaging, covering Event Grid, Service Bus, and the Messaging Catalog.

Clemens started by emphasizing how these messaging services enable reliable and scalable communication and integration.

Clemens mentioned that the team is working on two parallel initiatives: Azure and Fabric. They are developing PaaS services for developers, and building messaging and metadata foundations for Fabric to provide real-time intelligence for data analysts.

Azure Event Grid

- Pull Delivery: A new model that provides a queue-like structure, allowing messages to be fetched and completed later. Pull delivery is built on top of HTTP and is extremely fast.

- MQTT Communication: Enabling clients to communicate over MQTT.

- Last Will and Testament (LWT): Allows clients to notify the broker about unexpected disconnections.

- Custom Domains: Supports the use of custom domain names combined with Traffic Manager for scenarios like cross-region client mobility.
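The pull delivery model described above (fetch now, complete later) can be sketched with a toy in-memory queue. This is only a model of the idea and not the Event Grid SDK or REST API; the `PullQueue` class, its method names, and the event fields are assumptions made for illustration.

```python
import uuid
from collections import deque


class PullQueue:
    """Toy in-memory model of pull delivery: receive now, acknowledge later."""

    def __init__(self):
        self._ready = deque()
        self._locked = {}  # lock token -> event awaiting acknowledgement

    def publish(self, event):
        self._ready.append(event)

    def receive(self, max_events=1):
        """Hand out up to max_events, each paired with a lock token."""
        batch = []
        while self._ready and len(batch) < max_events:
            event = self._ready.popleft()
            token = str(uuid.uuid4())
            self._locked[token] = event
            batch.append((token, event))
        return batch

    def acknowledge(self, token):
        """Complete a received event so it is never delivered again."""
        return self._locked.pop(token, None) is not None

    def release(self, token):
        """Give a received event back to the queue for redelivery."""
        event = self._locked.pop(token, None)
        if event is None:
            return False
        self._ready.appendleft(event)
        return True


queue = PullQueue()
queue.publish({"id": "1", "type": "order.created"})
queue.publish({"id": "2", "type": "order.created"})

(token1, event1), (token2, event2) = queue.receive(max_events=2)
queue.acknowledge(token1)  # processed successfully
queue.release(token2)      # could not process yet, hand it back
redelivered = queue.receive()
print(redelivered[0][1])
```

The consumer decides when to fetch and when to complete, which is what gives pull delivery its queue-like behaviour compared with push delivery to a webhook.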

Then, he handed over the session to Christina, who spoke about Azure Service Bus and its recent feature releases:

- Batch delete

- Studio integration for entity down

- Integration for CMK with managed HSM support

- Deprecation of TLS 1.0 and TLS 1.1, and enabling of TLS 1.3 for AMQP

Availability zones

Service Bus and Event Hubs are adding availability zones. The migration will be in progress over the next few months and promises improved reliability.

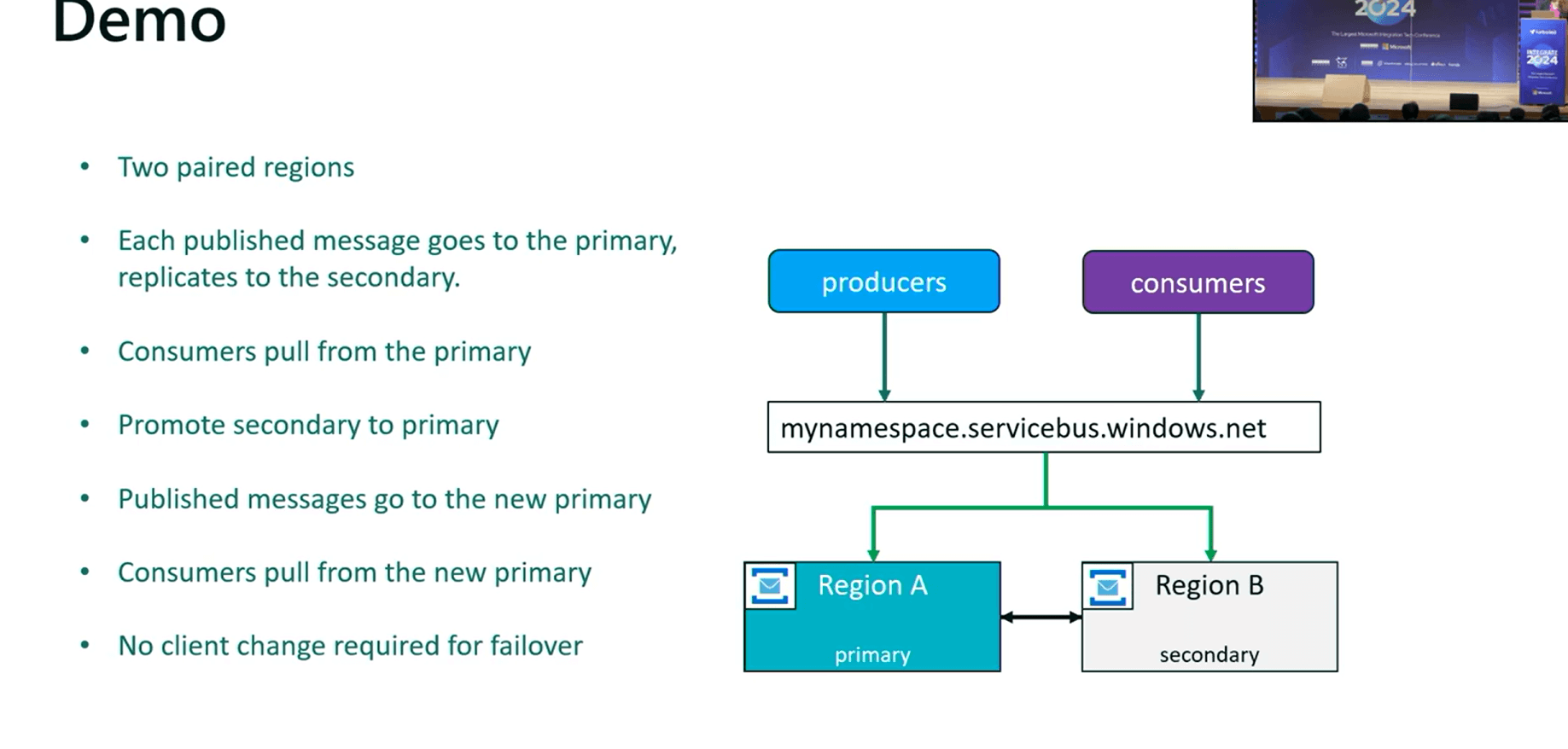

Geo Replication

- A major announcement was made regarding Geo Replication, humorously dubbed “Double Decker Service Bus.”

- This feature enables full data replication across multiple regions, providing robust disaster recovery capabilities.

- The public preview for this functionality will commence soon.

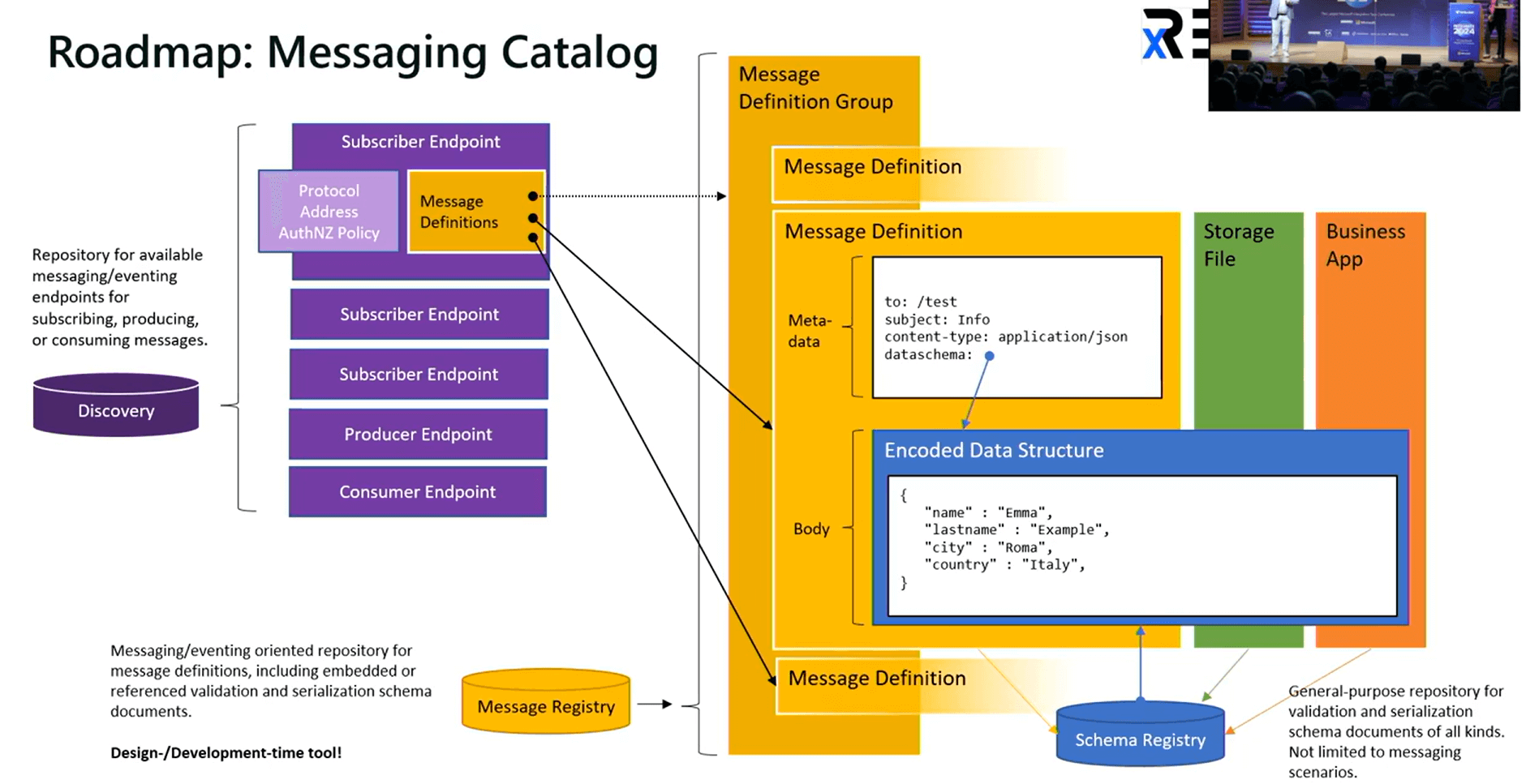

Azure Messaging Catalog

The next intriguing topic discussed was the Messaging Catalog, which streamlines integration by providing a standardized setup of schemas. The structured registries within these catalogs also play a crucial role in facilitating AI initiatives by providing valuable metadata.

#3: The world’s biggest Azure integration mistakes I want you to learn from!

Toon Vanhoutte led a session on Azure integration mistakes, drawing insights from over 100 experts. He showcased real-world use cases, detailing challenges, solutions, and lessons learned.

Here are the use cases presented during the session, each highlighting the problems encountered and the solutions applied:

- In one scenario, handling document uploads promptly was crucial for customer service. An event-driven architecture using Azure Event Grid automated the responses, but serverless loops could strain resources. Filtering out archived messages mitigated this, emphasizing the need for cost control. Key takeaway: perform cost control in various ways and at regular intervals!

- Another case highlighted network security across all Azure services. Challenges arose when networking impacted scalability. Key takeaway: consider scaling when configuring network size!

- A low-code integration initiative faced a security breach due to operations running under user accounts. Key takeaway: integration must run under a system account! Use managed identity, service principals, or system credentials!

- Introducing queuing for decoupling posed challenges because of a mismatch in the Logic Apps and Service Bus integration. Event Grid emerged as a potential solution. Key takeaway: always prototype Logic Apps connectors before architecting solutions with them!

- In 2019, the need for an enterprise integration platform was addressed using Integration Service Environment, which later faced deprecation. Key takeaway: always validate the lifecycle when adopting a new service!

He further emphasized several key areas:

- Resource Consistency to maintain uniformity across environments.

- Cost Management to evaluate and monitor expenses effectively.

- Security & Identity to ensure compliance with IT security requirements.

- Release Management to speed up solution deployment.

- Business Continuity to guarantee availability and robust disaster recovery.

He concluded the session by urging organizations to invest in their Azure governance and adapt to a distributed cloud.

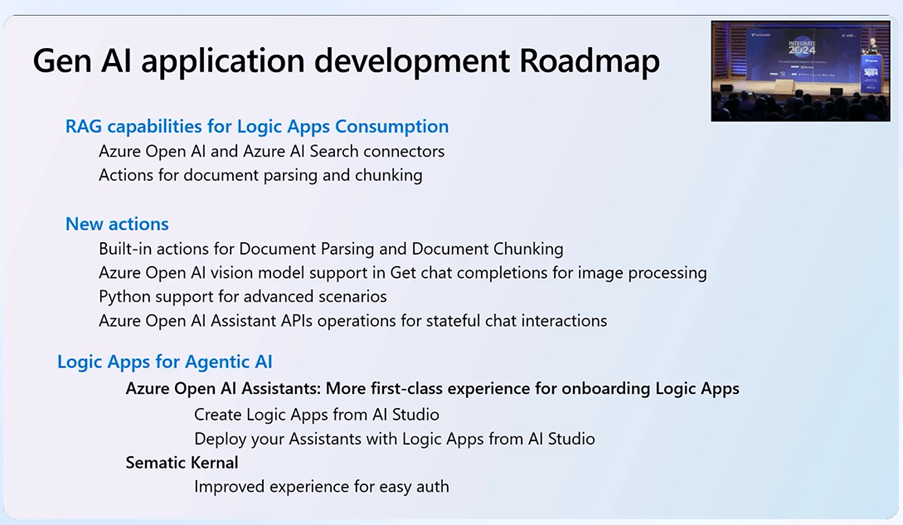

#4: Accelerating generative AI development with Azure Logic Apps

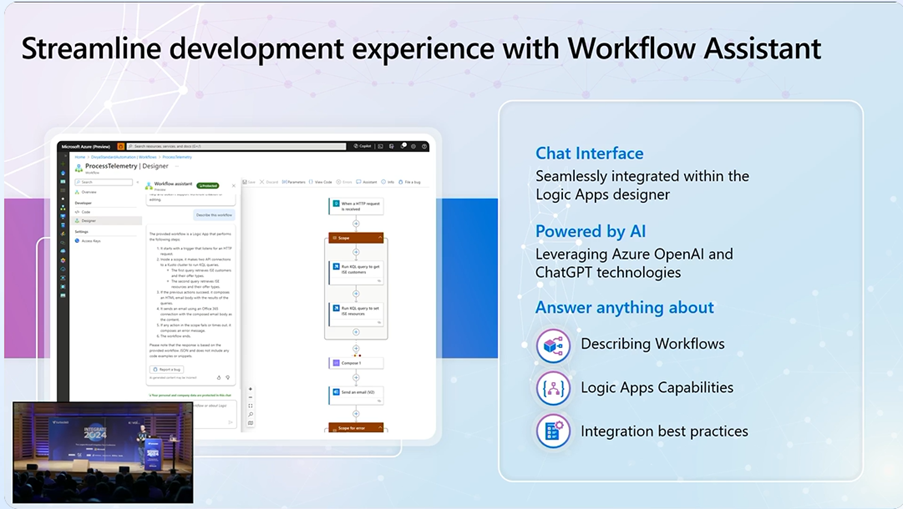

Kent Weare returned with an interesting session on Logic Apps and generative AI. With the buzzword Gen AI everywhere in the integration space, this session was another attraction. Kent kick-started the session with the two strategies that make up the Azure Logic Apps Generative AI strategy: how the workflow assistant can make tasks easier, and how intelligent business applications can be created with AI services.

The Workflow Assistant with the Logic App workflow designer

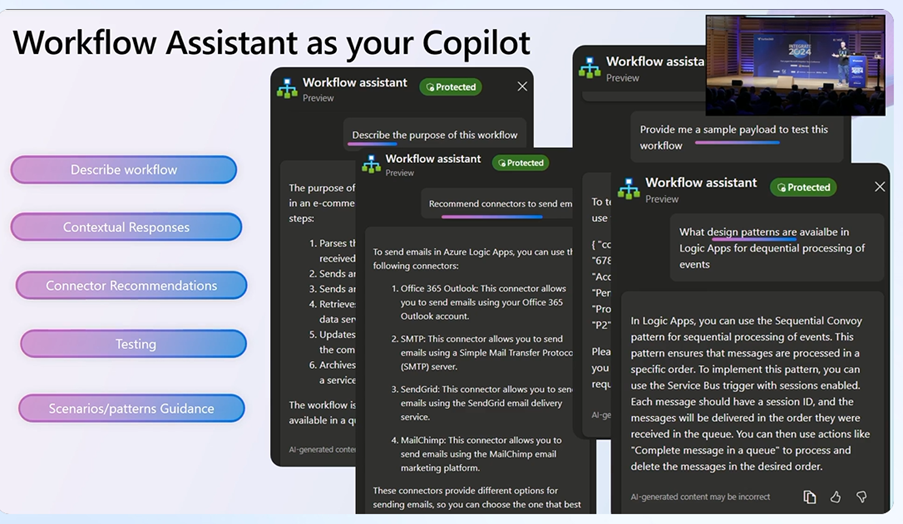

The first strategy was to use the workflow assistant as a copilot. This assistant features the following:

Here are a few interesting examples of how the workflow assistant can be used.

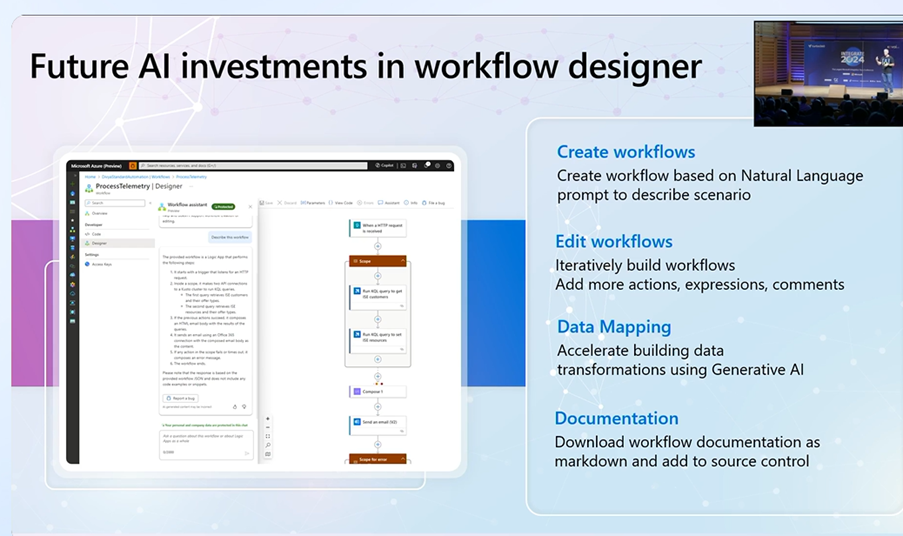

It doesn’t stop there. Kent went on to highlight the future investments planned for the workflow assistant, including data mapping, documenting workflows, adding them to source control, and automating these tasks easily.

Using Gen AI for building interesting business applications

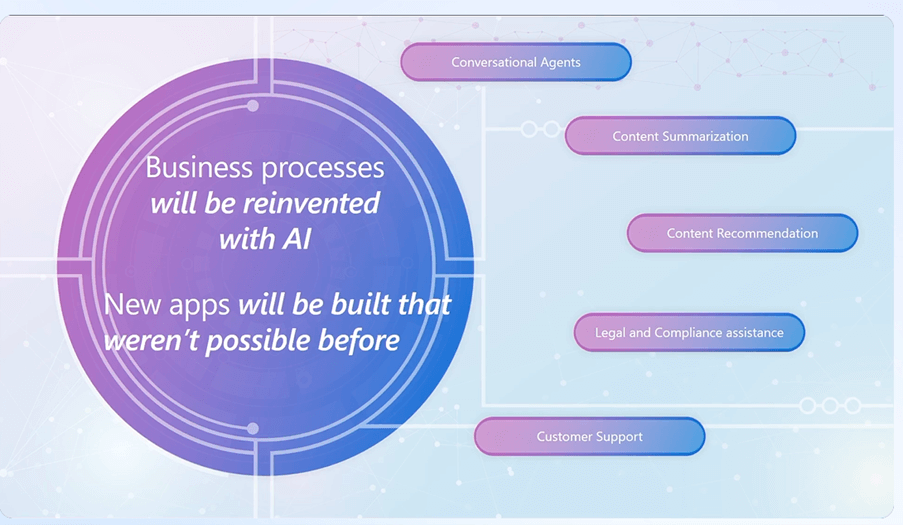

The second strategy was using GenAI to build applications for specific use cases. Integration is all about how different services communicate with each other and how the data moves. With Gen AI, the misconception that something entirely new must be built is broken: highly sensitive data can be consolidated and passed on to various services. Here is how AI comes into play.

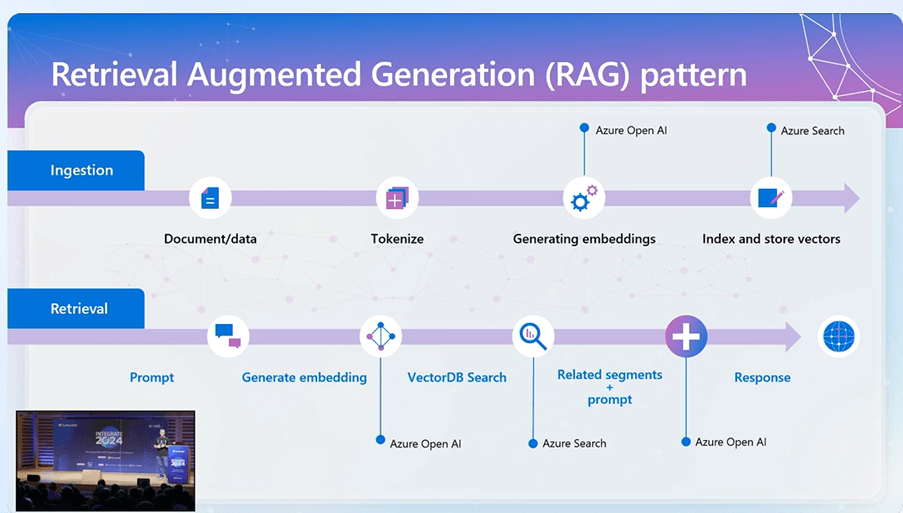

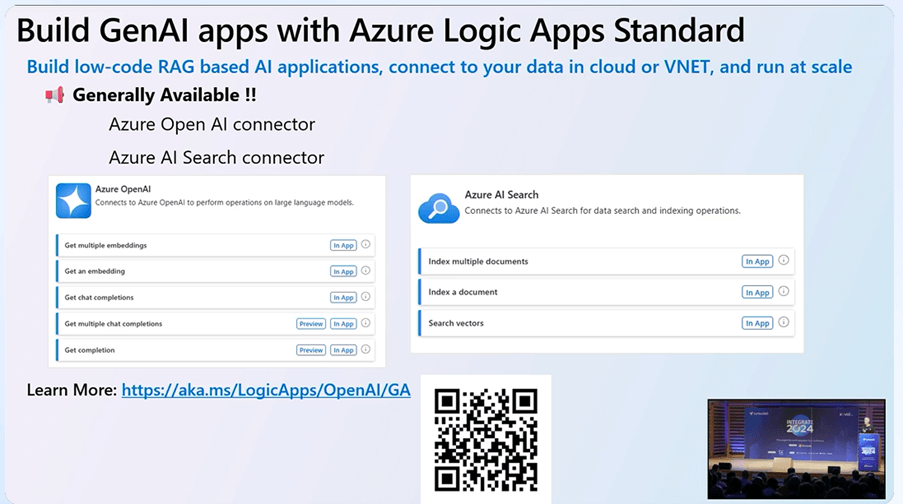

One of the key patterns for Gen AI with Logic Apps is the RAG pattern, which involves two paths:

- Ingestion of data

- Retrieval of data

The data in the ingestion path may be structured or unstructured. It needs to be tokenized and stored as vectors, which is done in Azure Search. This is done with the help of the connectors available in Logic Apps. For retrieval, the operations are again performed with these connectors. This reduces time and complexity compared with a code-first approach.
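The two RAG paths can be sketched in a few lines of plain Python. This is a toy under stated assumptions: `embed` uses simple word counts where a real pipeline would call an embedding model, and the hypothetical `VectorStore` class stands in for a vector index such as the one in Azure Search. The documents and the question are invented for the example.

```python
import math
from collections import Counter


def embed(text):
    """Toy embedding: word counts. A real pipeline would call an embedding model."""
    return Counter(text.lower().split())


def cosine(a, b):
    """Cosine similarity between two sparse word-count vectors."""
    dot = sum(a[word] * b[word] for word in a)
    norm_a = math.sqrt(sum(v * v for v in a.values()))
    norm_b = math.sqrt(sum(v * v for v in b.values()))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0


class VectorStore:
    """Hypothetical stand-in for a vector index."""

    def __init__(self):
        self.items = []  # list of (vector, original text)

    def ingest(self, documents):
        # Ingestion path: vectorize each document and store it.
        for doc in documents:
            self.items.append((embed(doc), doc))

    def retrieve(self, question, top_k=1):
        # Retrieval path: rank stored documents by similarity to the question.
        q = embed(question)
        ranked = sorted(self.items, key=lambda item: cosine(q, item[0]), reverse=True)
        return [doc for _, doc in ranked[:top_k]]


store = VectorStore()
store.ingest([
    "Hard hats are mandatory on the factory floor",
    "Expense reports are due at the end of each month",
])

question = "when are expense reports due"
context = store.retrieve(question)
prompt = f"Answer using this context: {context[0]}\nQuestion: {question}"
print(prompt)
```

The retrieved text is placed in the prompt so that the model answers from the organization's own data rather than only from what it was trained on.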

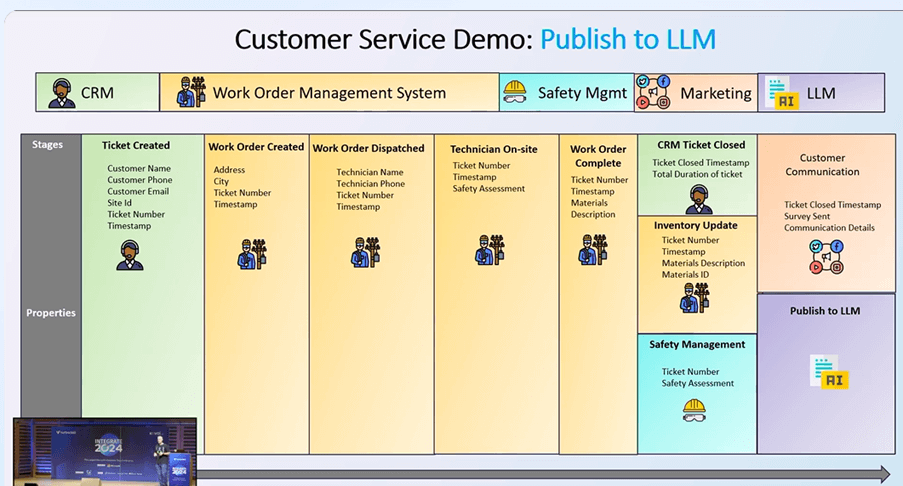

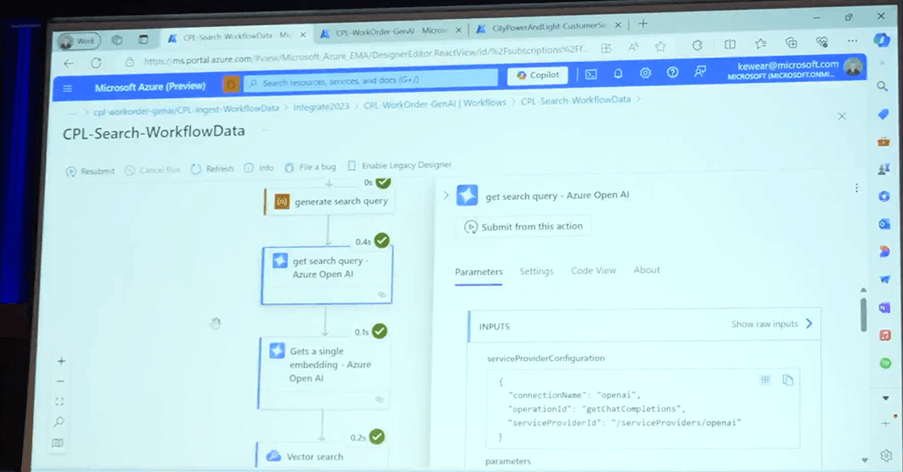

The highlight of the session was a demo of customer service for a safety management system using an LLM. The demo consisted of various workflows built with GenAI. The model can also be enriched with corporate data using connectors.

Here is a glimpse of the GenAI connectors.

Logic Apps as Plugins

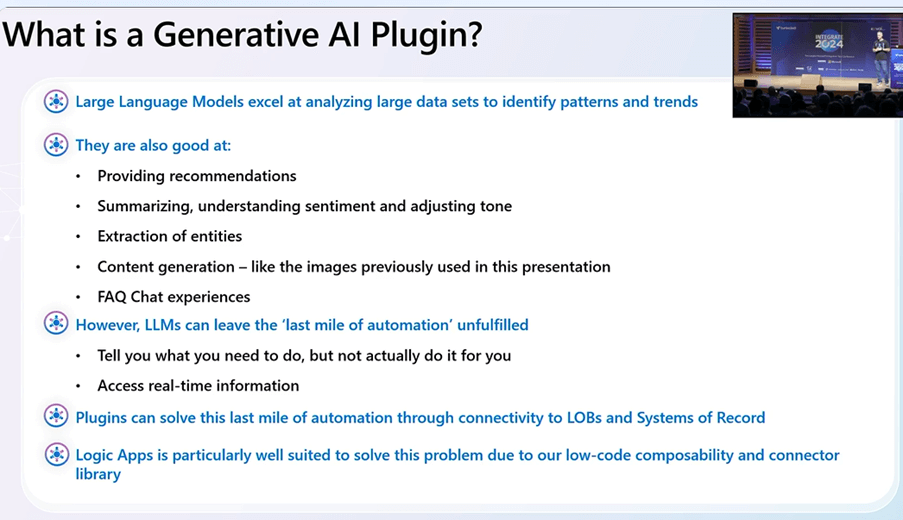

Though LLMs are interesting, they come with challenges: they only understand historical data, or data at a point in time, based on the data they were fed. He explained that plugins are used to complement existing LLMs, with the features below.

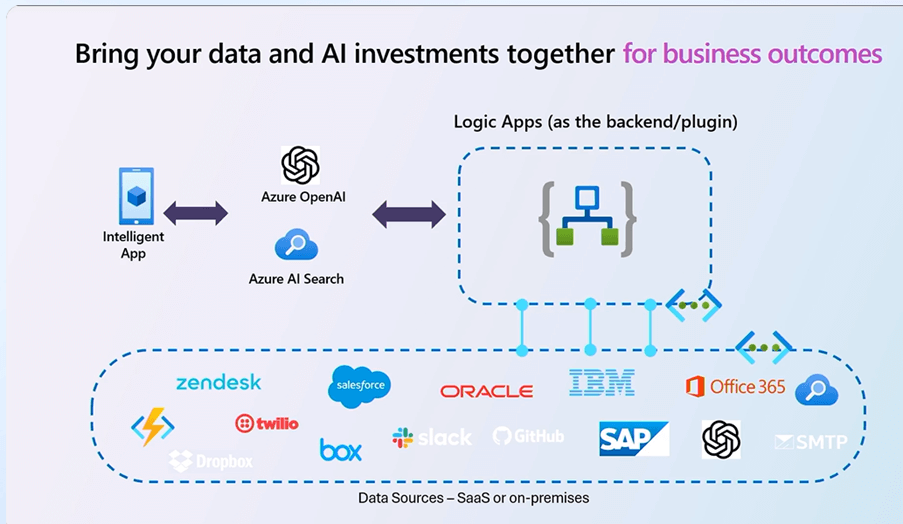

Logic Apps can be used as plugins that transact with different LOB systems through connectors. Connectors and LLMs can go hand in hand to achieve the integration.

This can be achieved using:

- Azure OpenAI Service Assistant in the OpenAI Studio

- Semantic Kernel plugin
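The plugin idea can be illustrated with a small dispatcher: the model asks for a named operation with arguments, and the host runs it and returns the result. This is a sketch only and does not use the Azure OpenAI Assistants or Semantic Kernel APIs. The `get_order_status` function is a hypothetical stand-in for an HTTP call to a Logic Apps workflow, and the request shape and order data are invented.

```python
import json


def get_order_status(order_id):
    """Stand-in for an HTTP call to a workflow that queries a line-of-business system."""
    fake_lob_data = {"A100": "shipped", "A200": "processing"}
    return {"order_id": order_id, "status": fake_lob_data.get(order_id, "unknown")}


# Registry of plugins the model is allowed to call, keyed by name.
PLUGINS = {"get_order_status": get_order_status}


def dispatch(tool_call_json):
    """Run the plugin the model asked for and return its result as JSON text."""
    call = json.loads(tool_call_json)
    plugin = PLUGINS.get(call["name"])
    if plugin is None:
        return json.dumps({"error": f"unknown plugin {call['name']}"})
    return json.dumps(plugin(**call["arguments"]))


# Hypothetical request, shaped like the output of a model that decided to use a plugin.
model_request = '{"name": "get_order_status", "arguments": {"order_id": "A100"}}'
result = dispatch(model_request)
print(result)
```

The result is fed back to the model, which is how a plugin gives it access to current data it was never trained on.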

The session concluded with the roadmap for Gen AI development, including Python support and much more.

The GenAI approach is surely going to be a game changer in the integration space, as it simplifies things even further with built-in actions and an improved experience. With the plugins available, business applications can be built easily, and things can be automated.

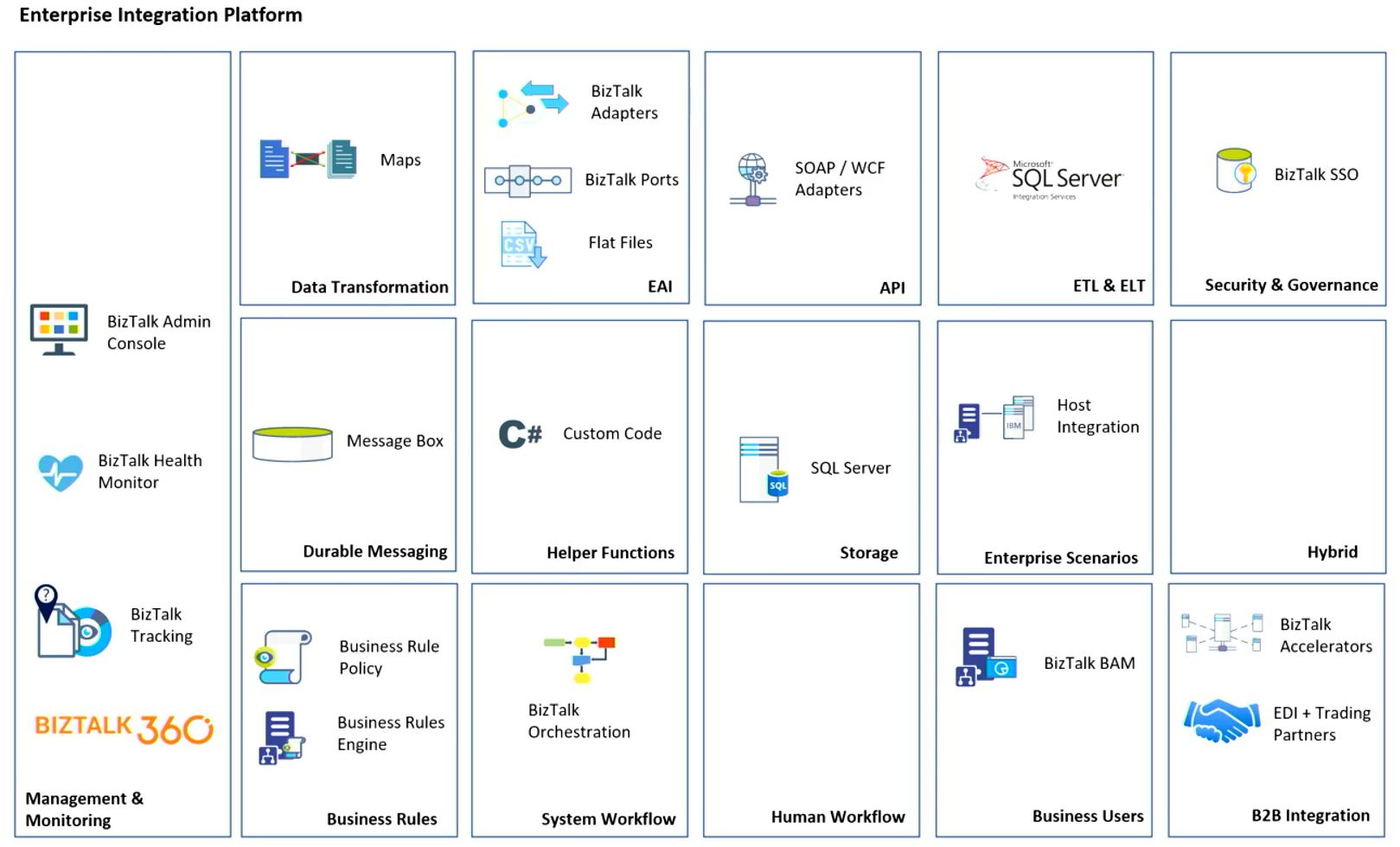

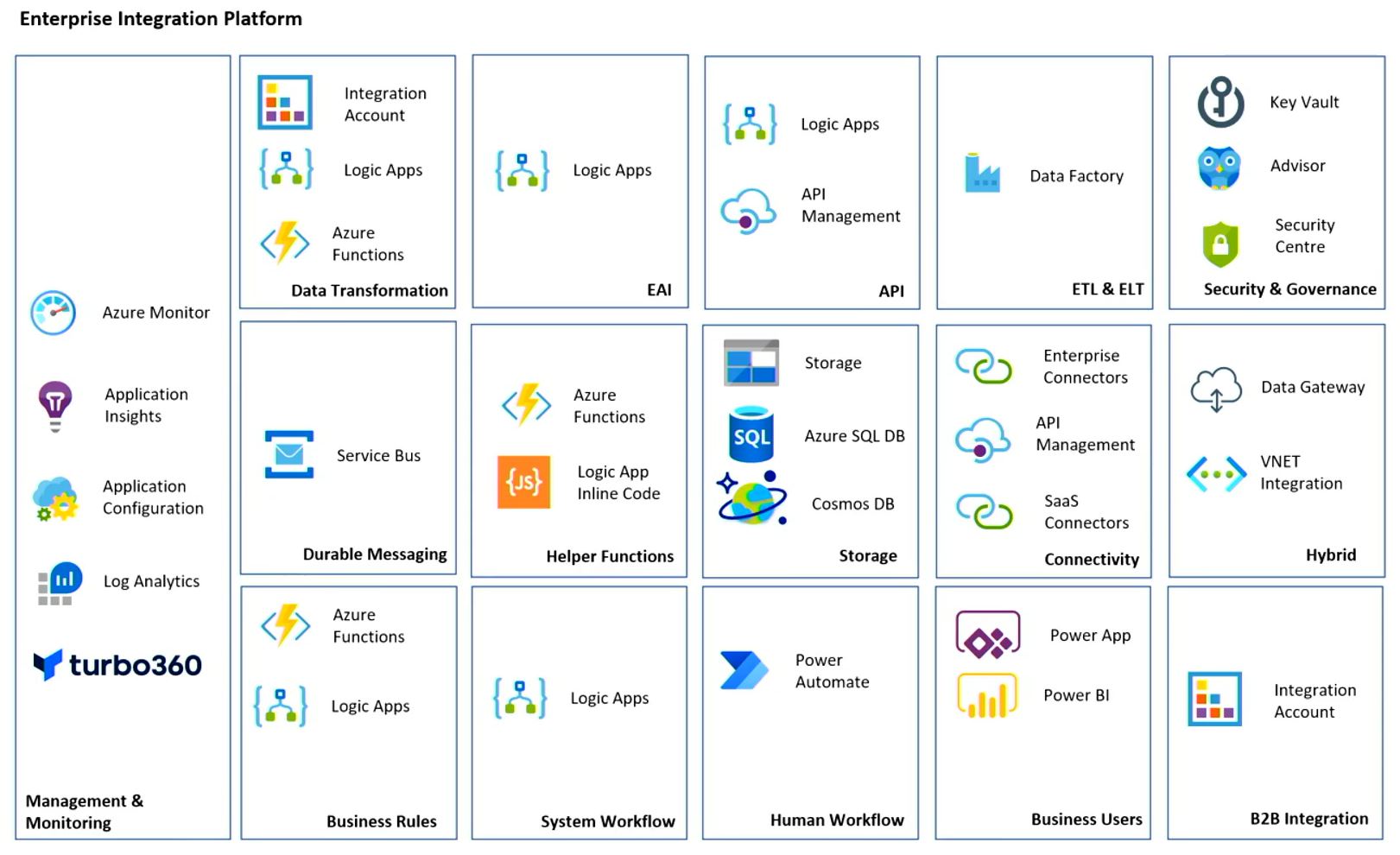

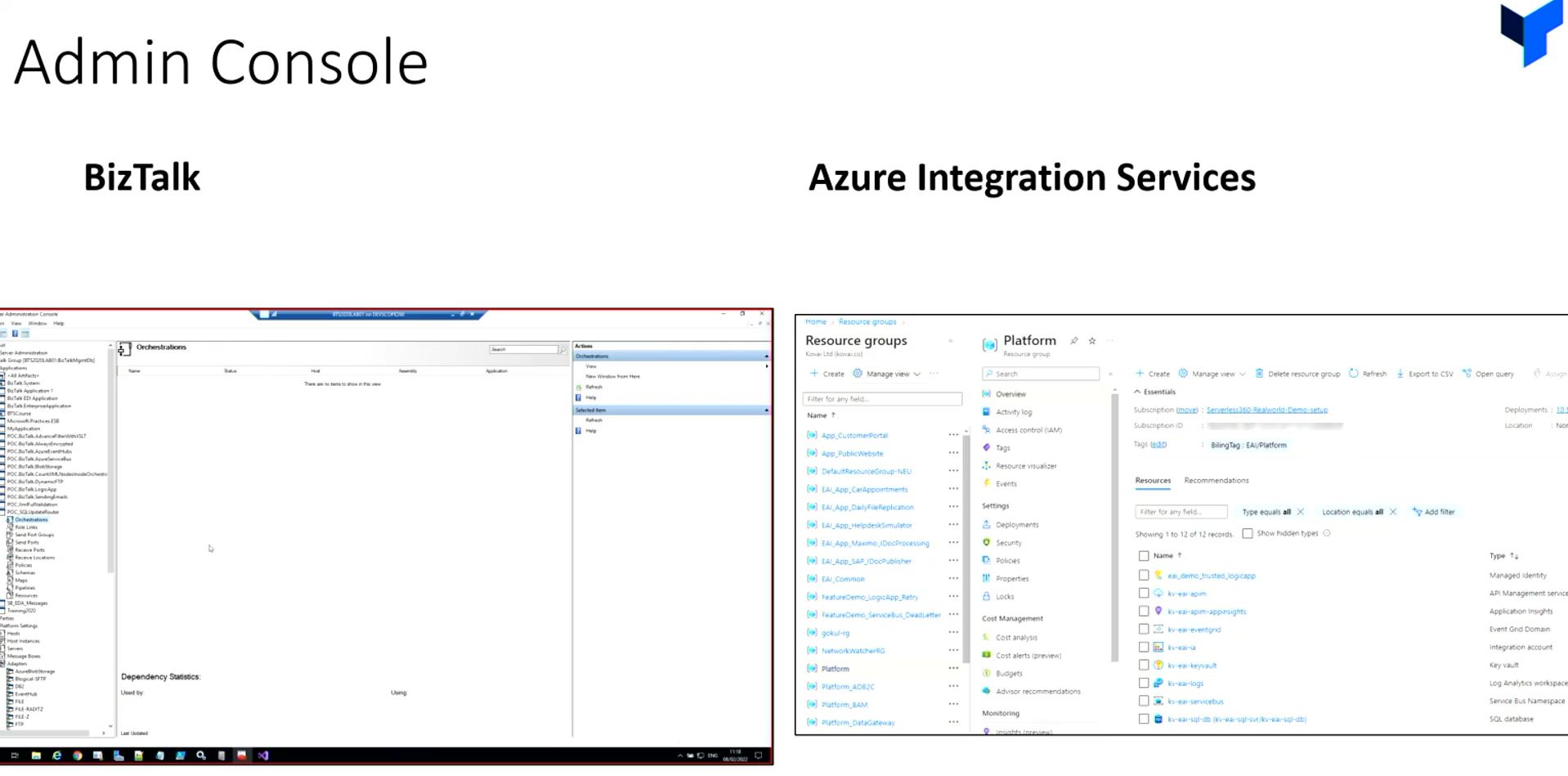

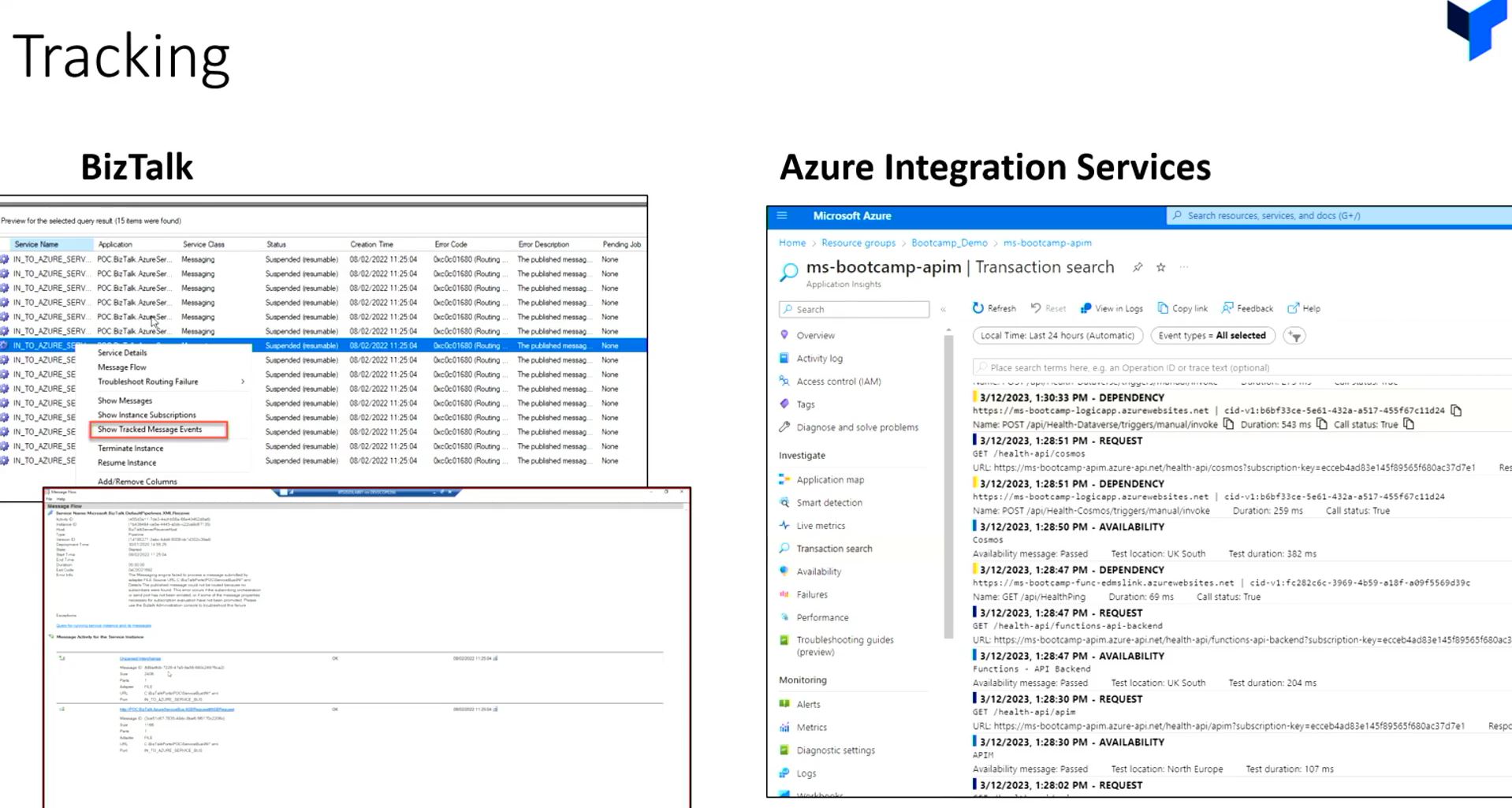

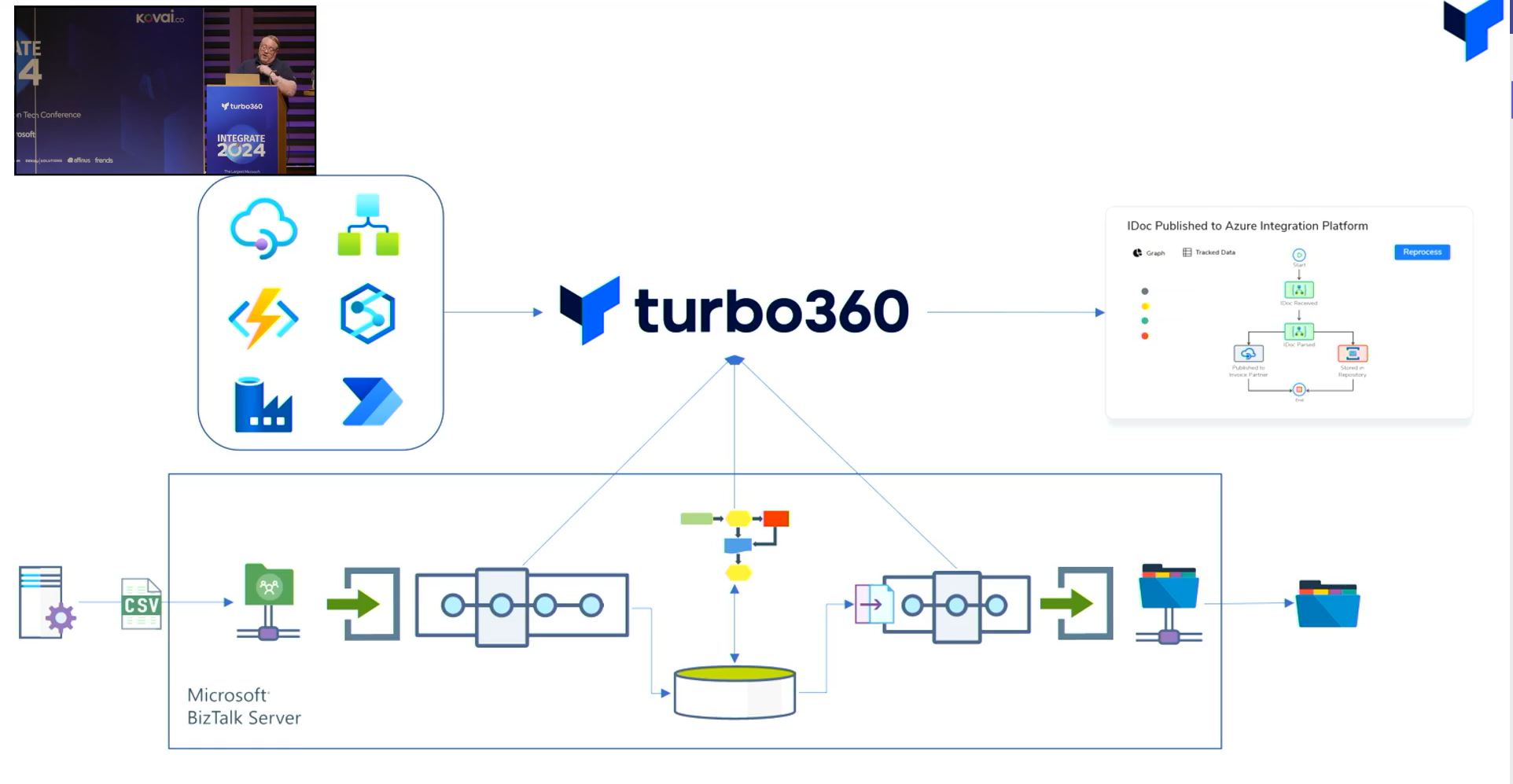

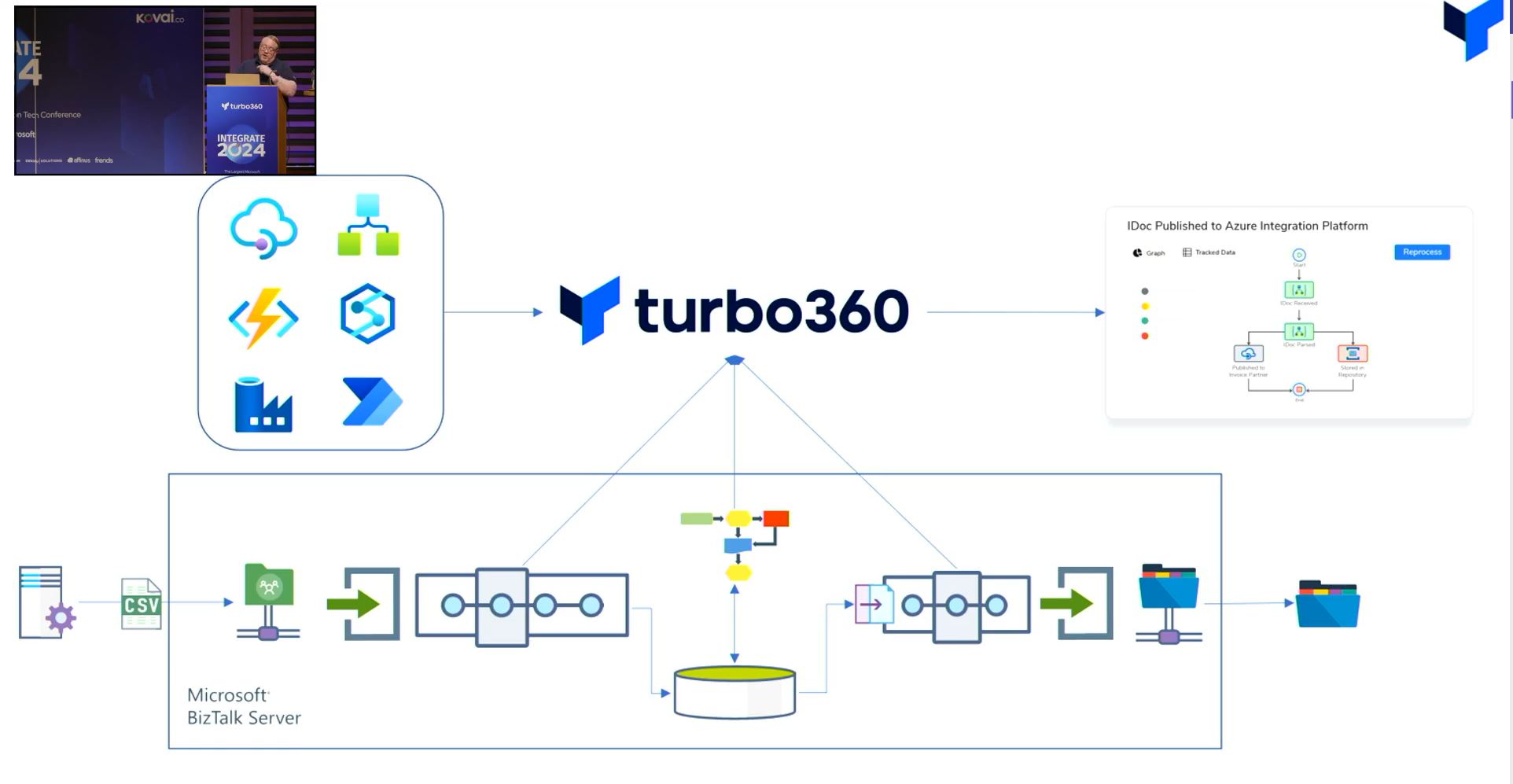

#5: BizTalk to Azure Integration Services – Supercharged by Turbo360

On day 2 of Integrate 2024, Michael Stephenson, Product Owner of Turbo360, started the session with the most awaited topic “BizTalk to Azure Integration Services – Supercharged by Turbo360”.

Michael Stephenson began the topic by comparing the list of resources and services that are supported in BizTalk360 & Turbo360.

Elements of BizTalk360:

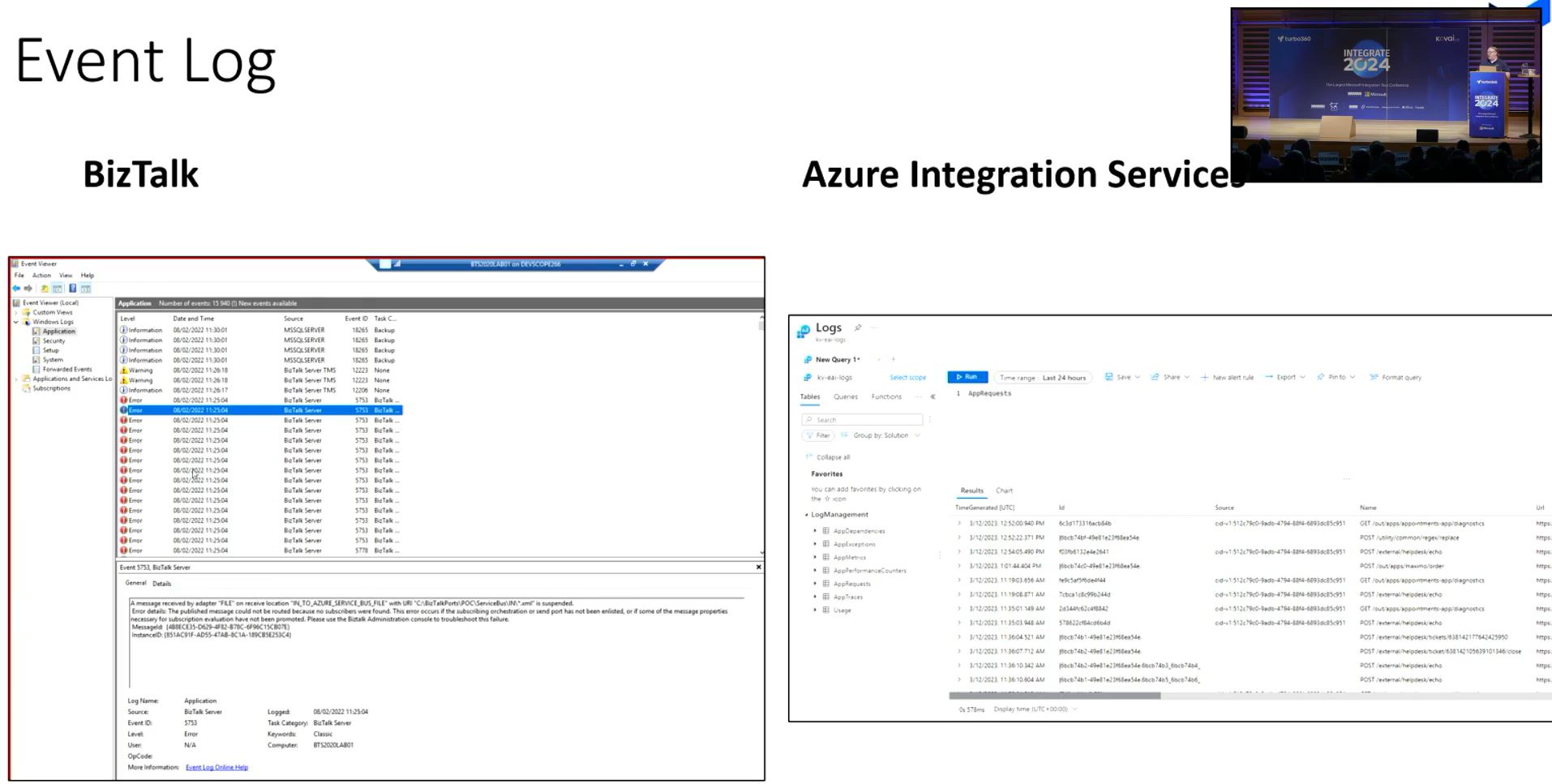

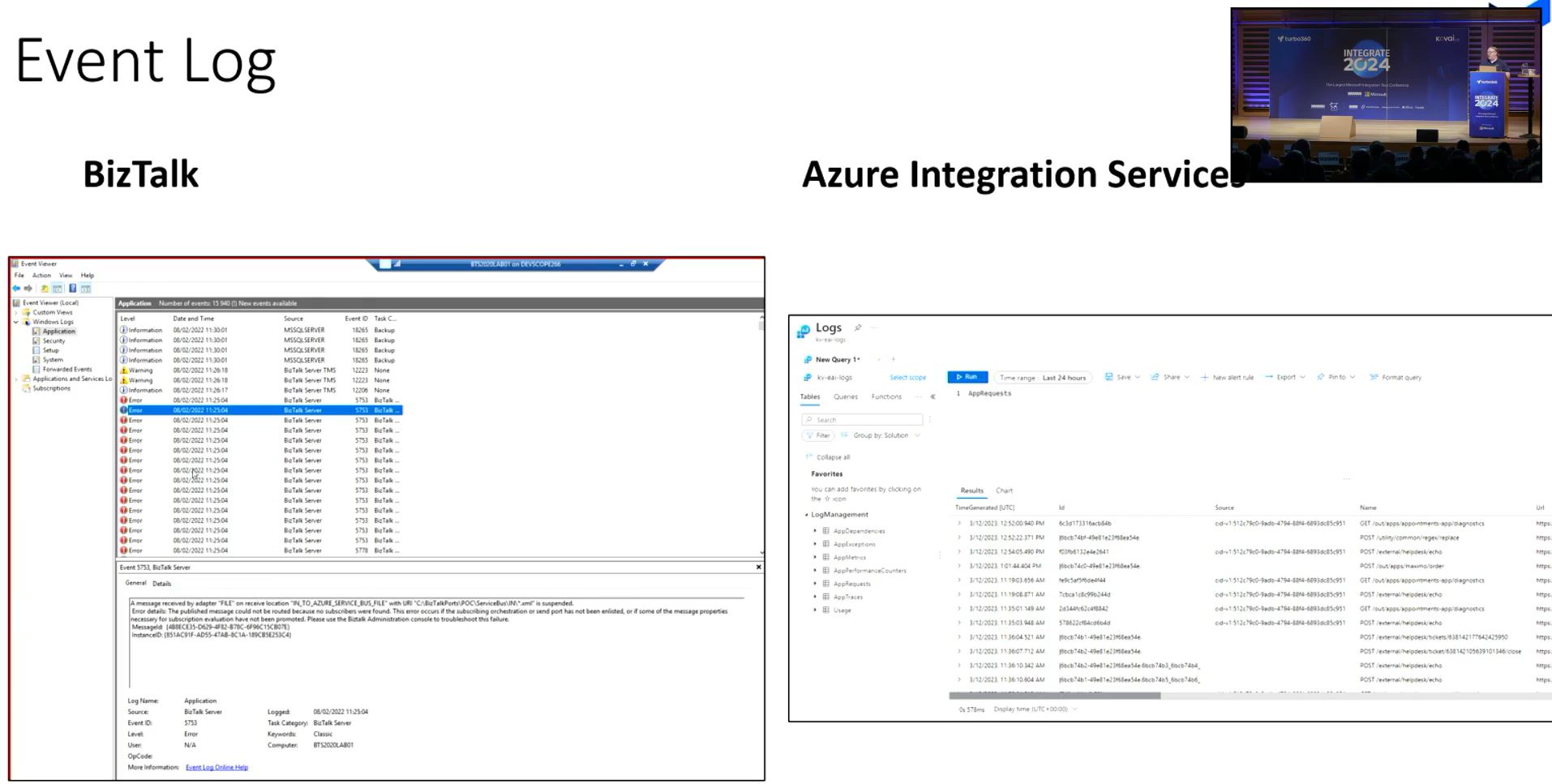

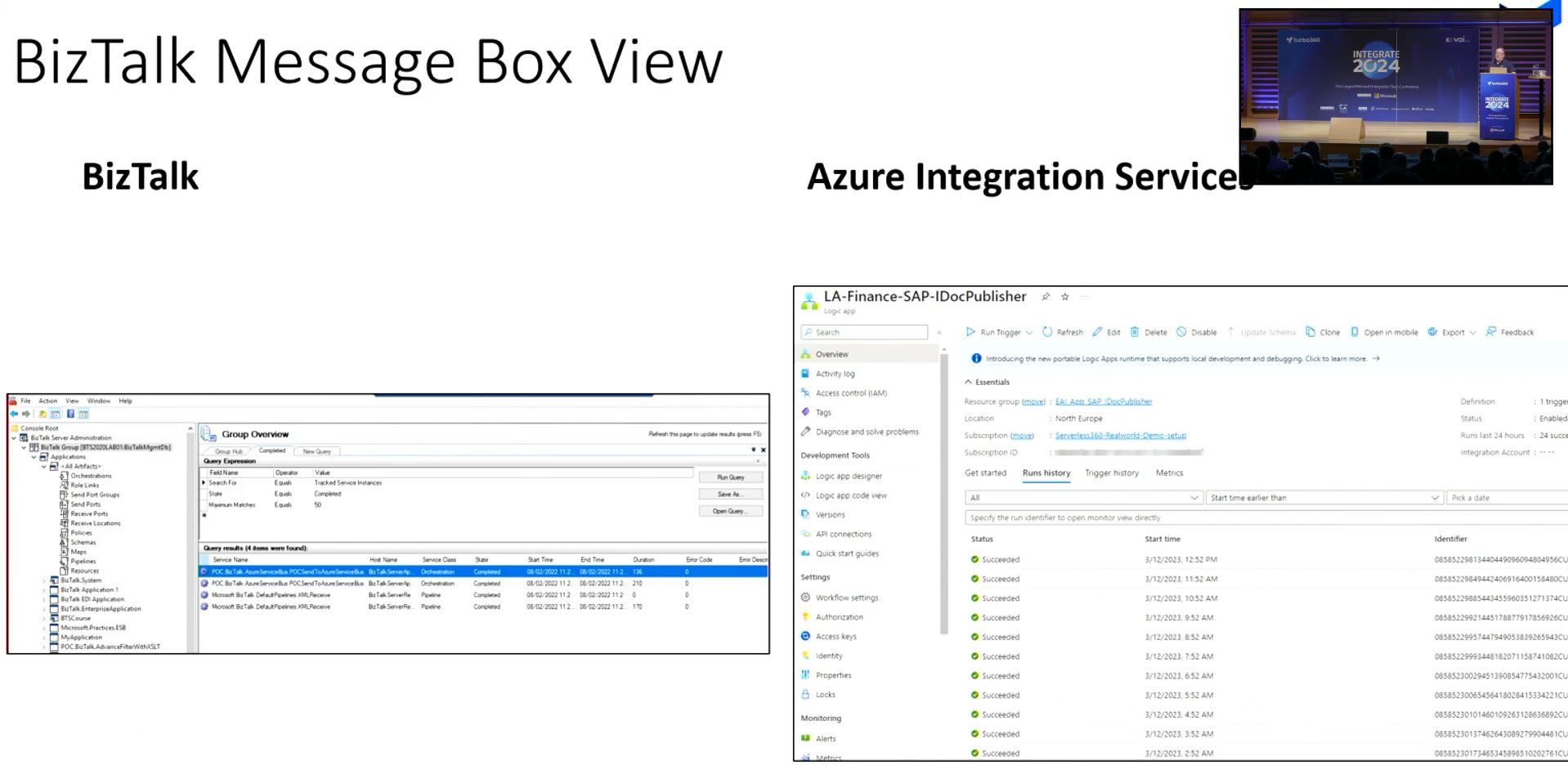

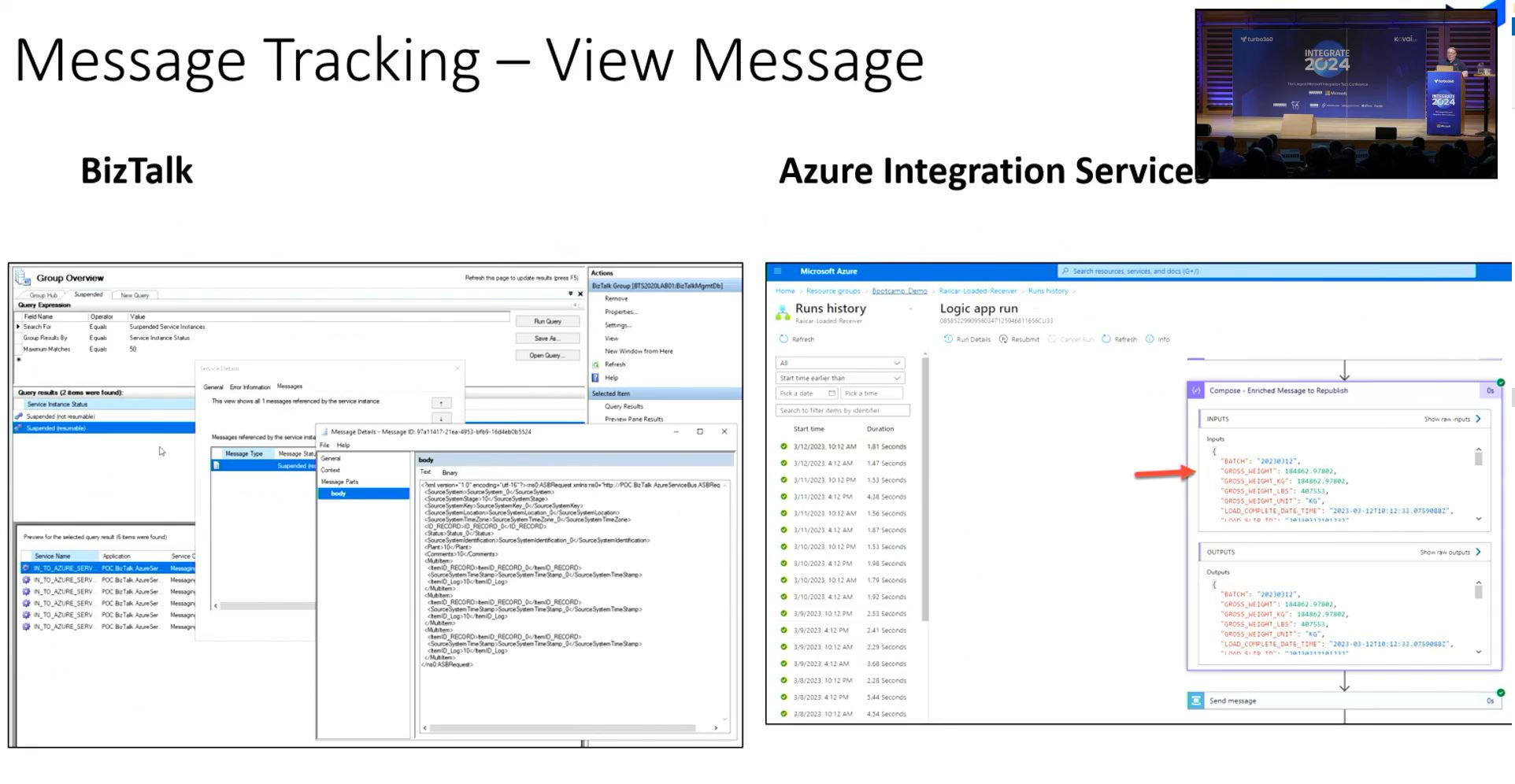

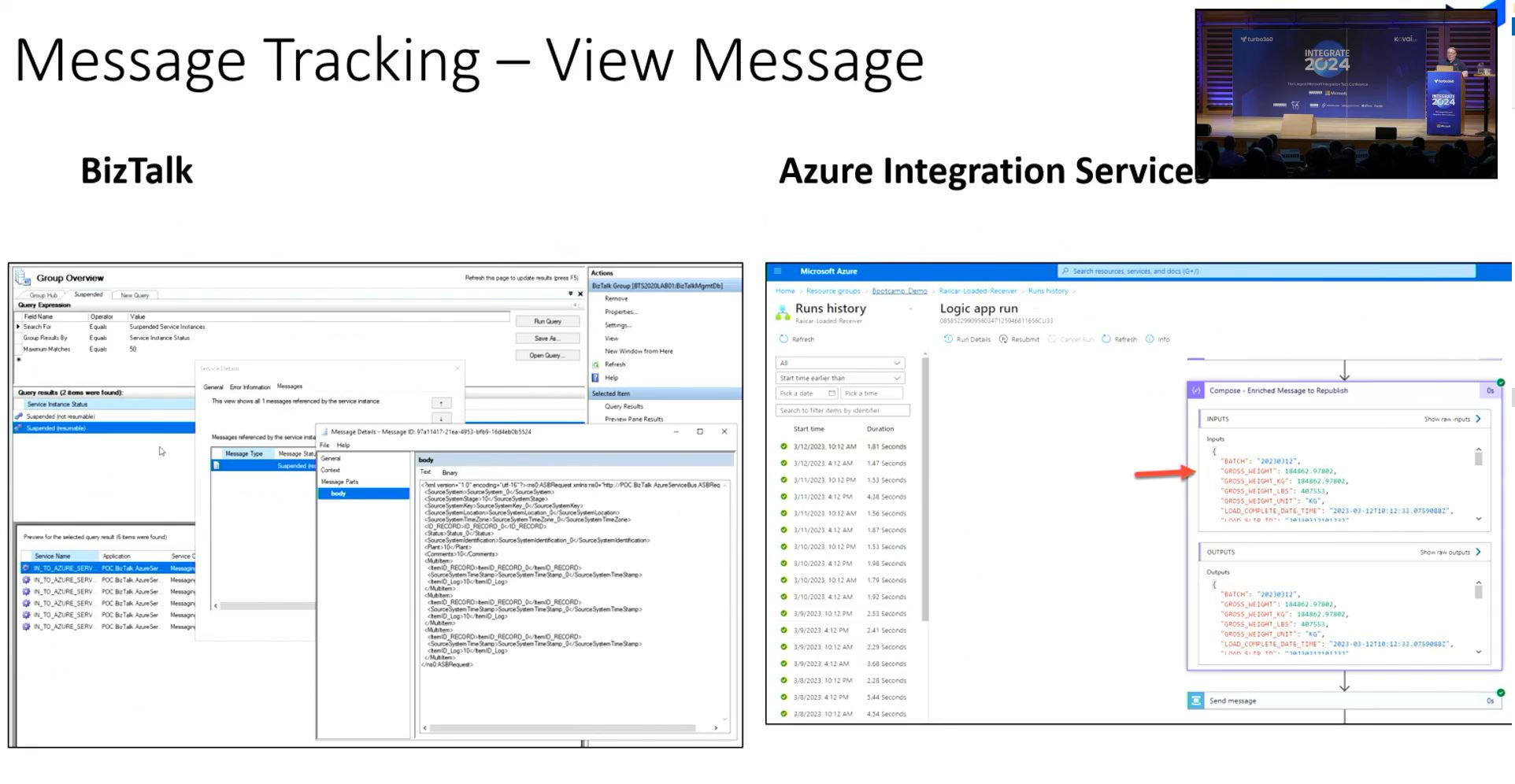

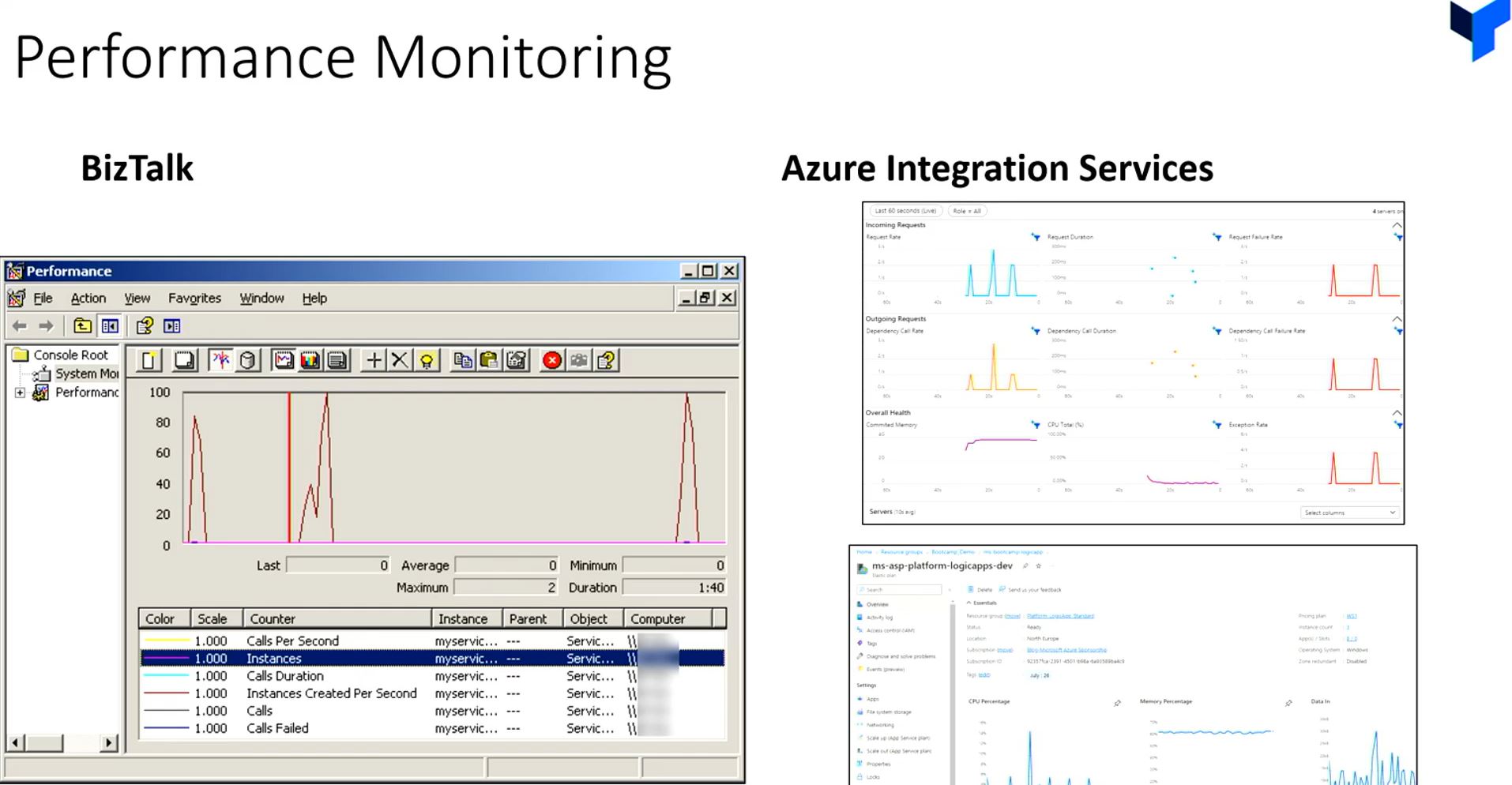

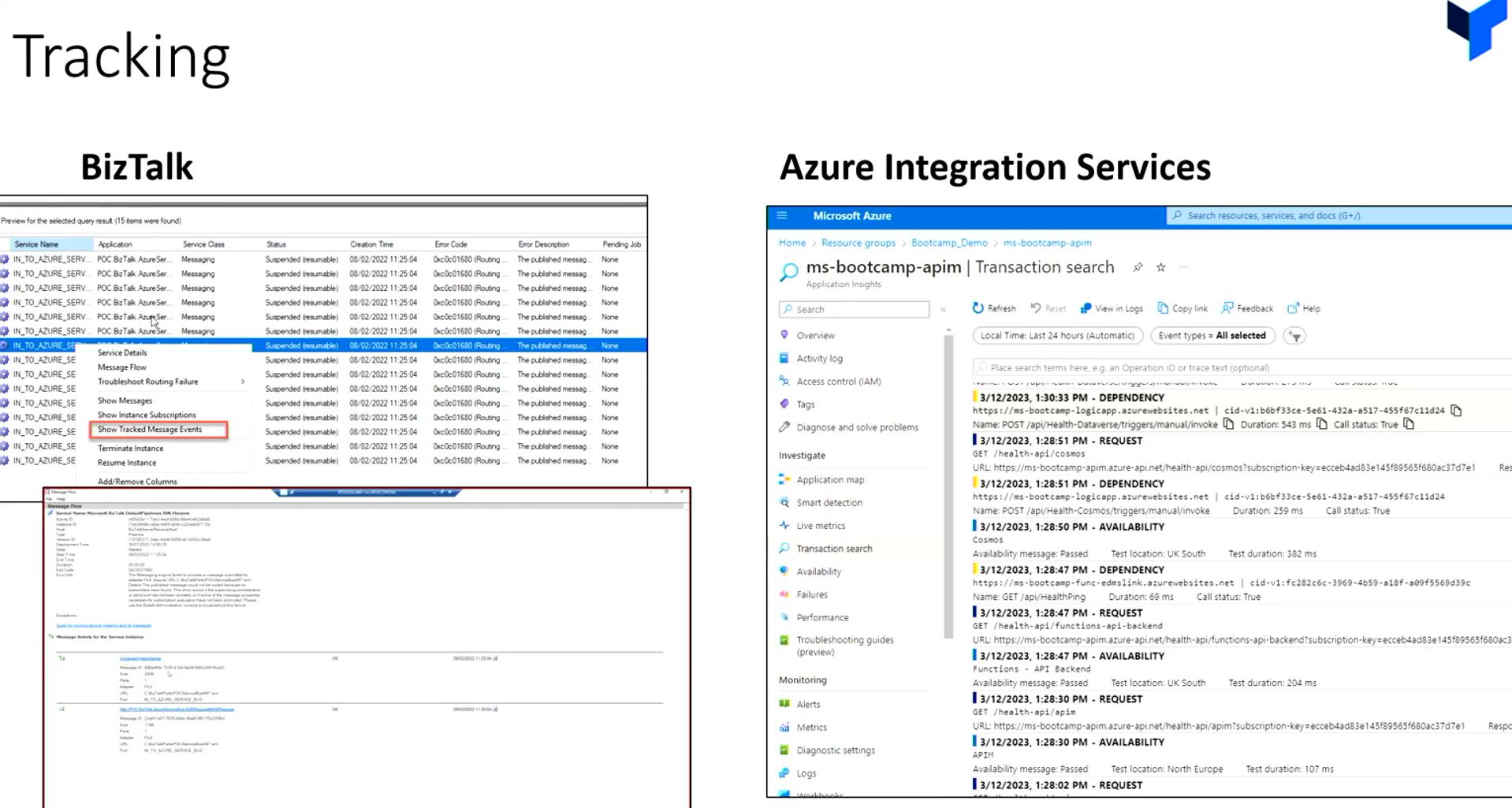

He also compared the features in BizTalk with those in Azure Integration Services, i.e. Admin Console, Event Log, Tracking, Viewing Tracked Messages, Performance Monitoring and Business Activity Monitoring.

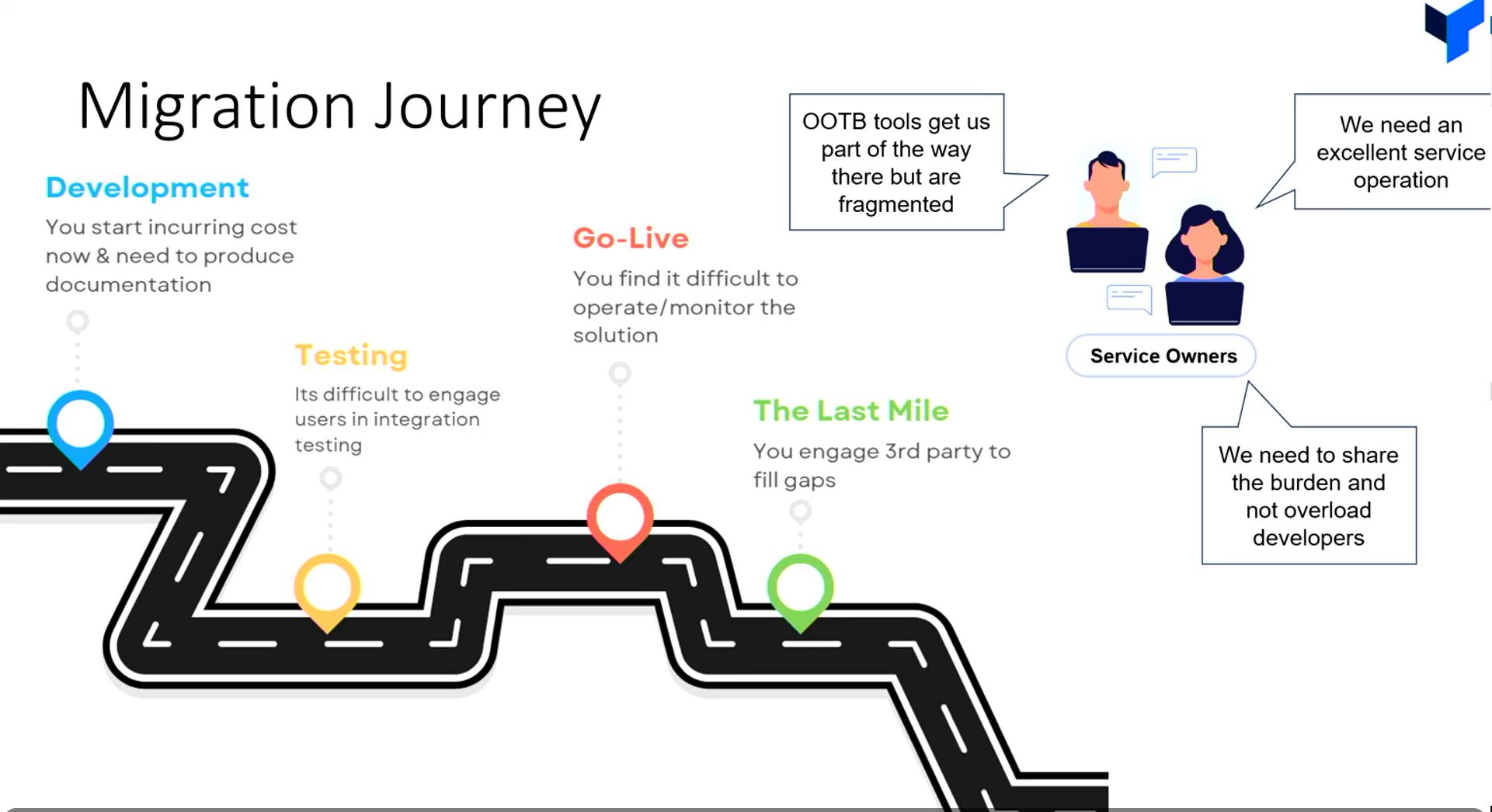

He explained the Migration Journey with an illustration and provided the audience with a list of Takeaways.

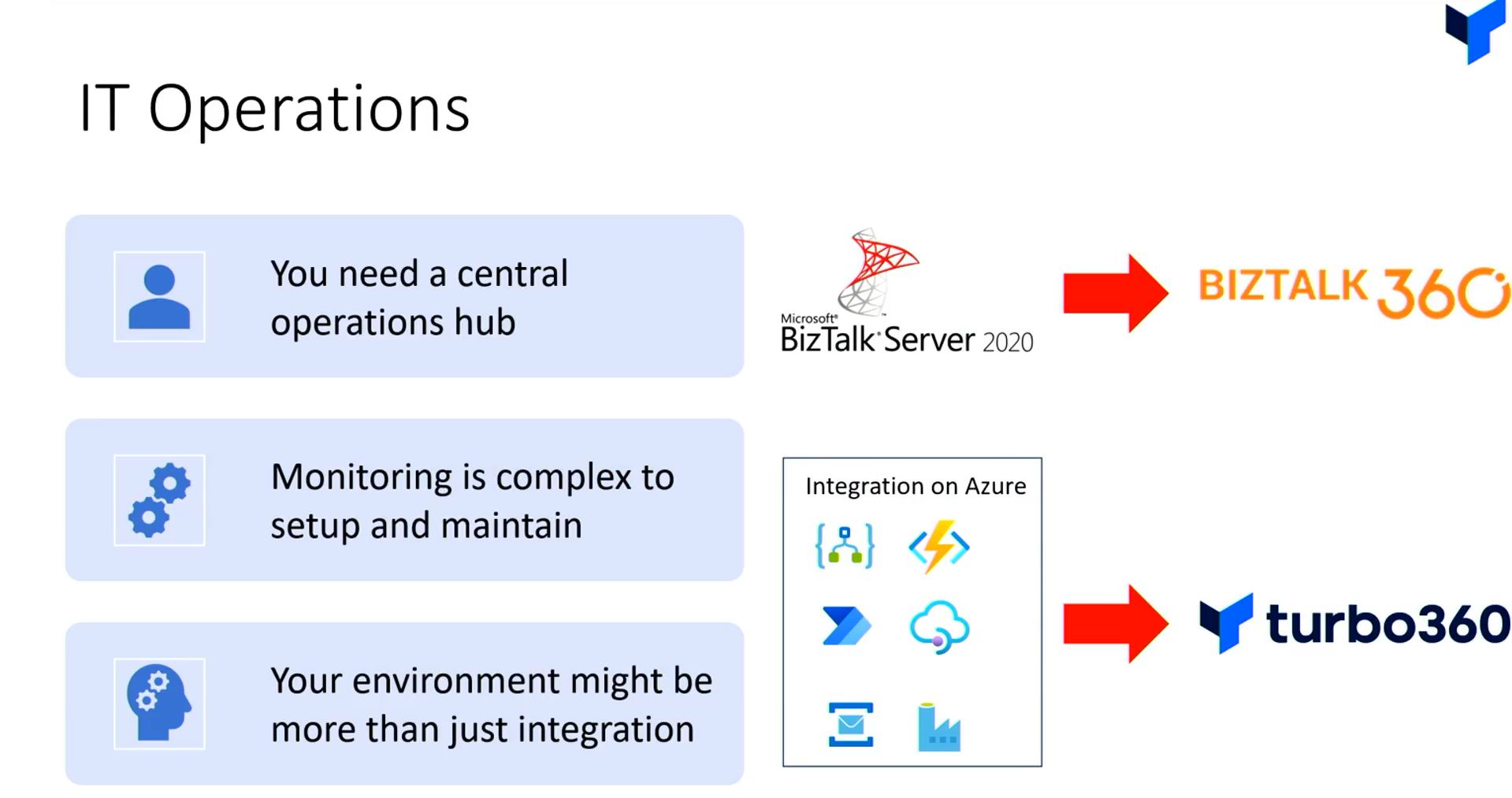

He later started to explain the IT operations involved in BizTalk360 and Turbo360.

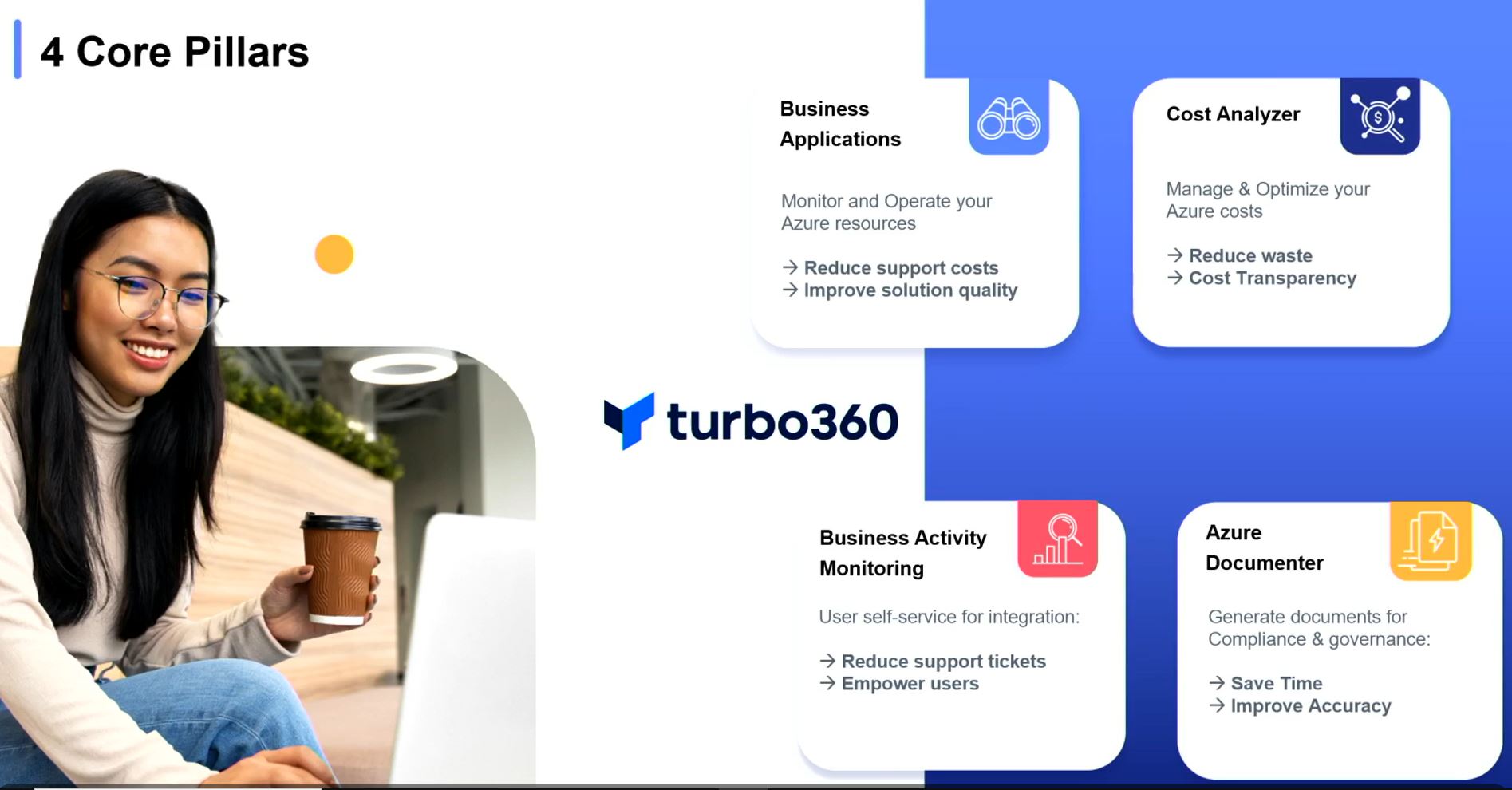

Michael Stephenson ended the session by showcasing the modules of Turbo360, namely:

- Business Application: Create instances of your business applications and manage their Azure resources.

- Business Activity Monitoring: Achieve end-to-end tracking of business process flows across cloud-native and hybrid integrations, and monitor your business flows with ease using pluggable components.

- Cost Analyzer: Analyse, monitor and optimize the cost of multiple Azure subscriptions in a single place.

- Azure Documenter: Generate high-level technical documents in different dimensions for your Azure subscription.

#6: Integrating AI into your healthcare based solutions through Logic Apps

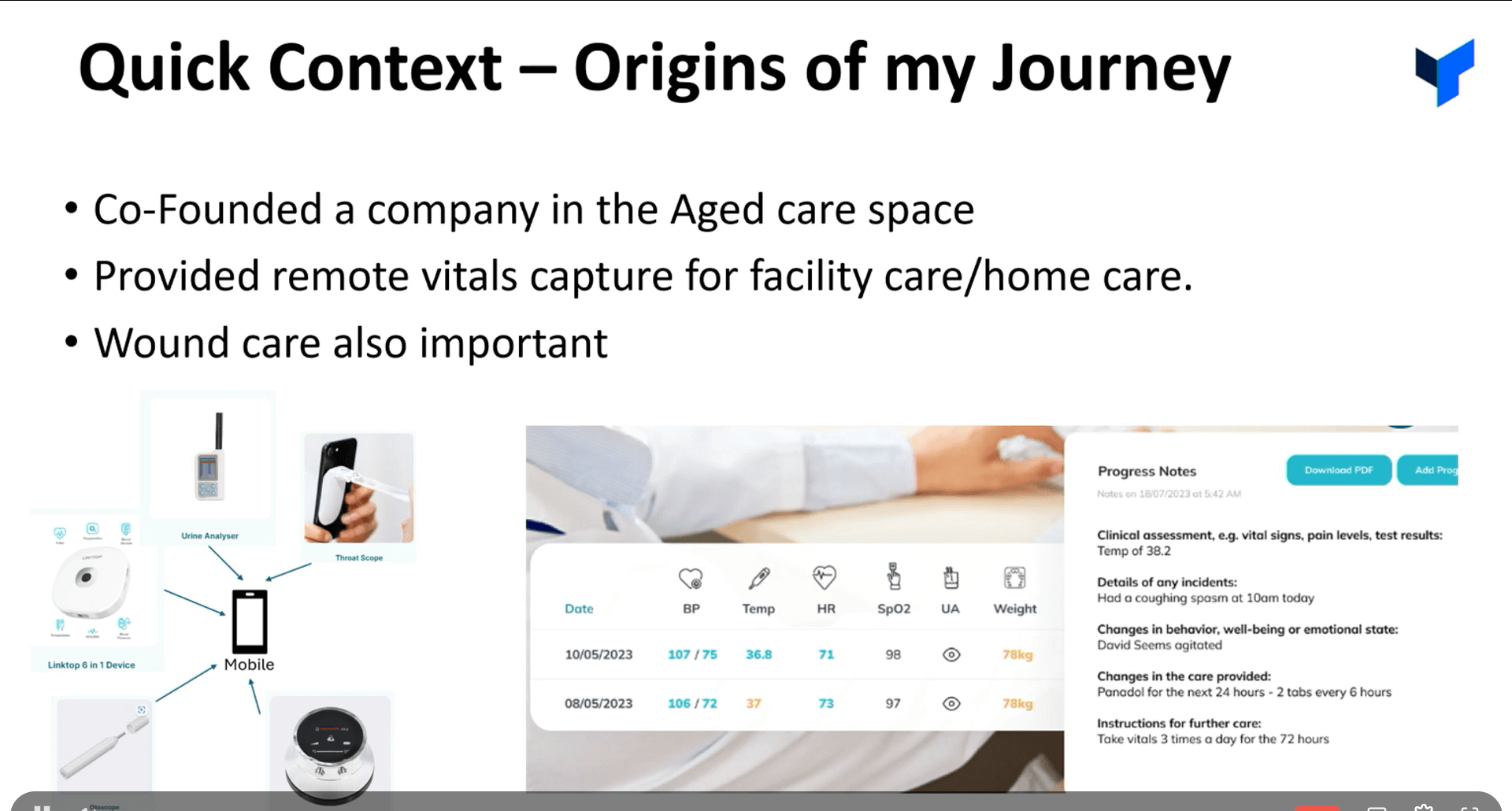

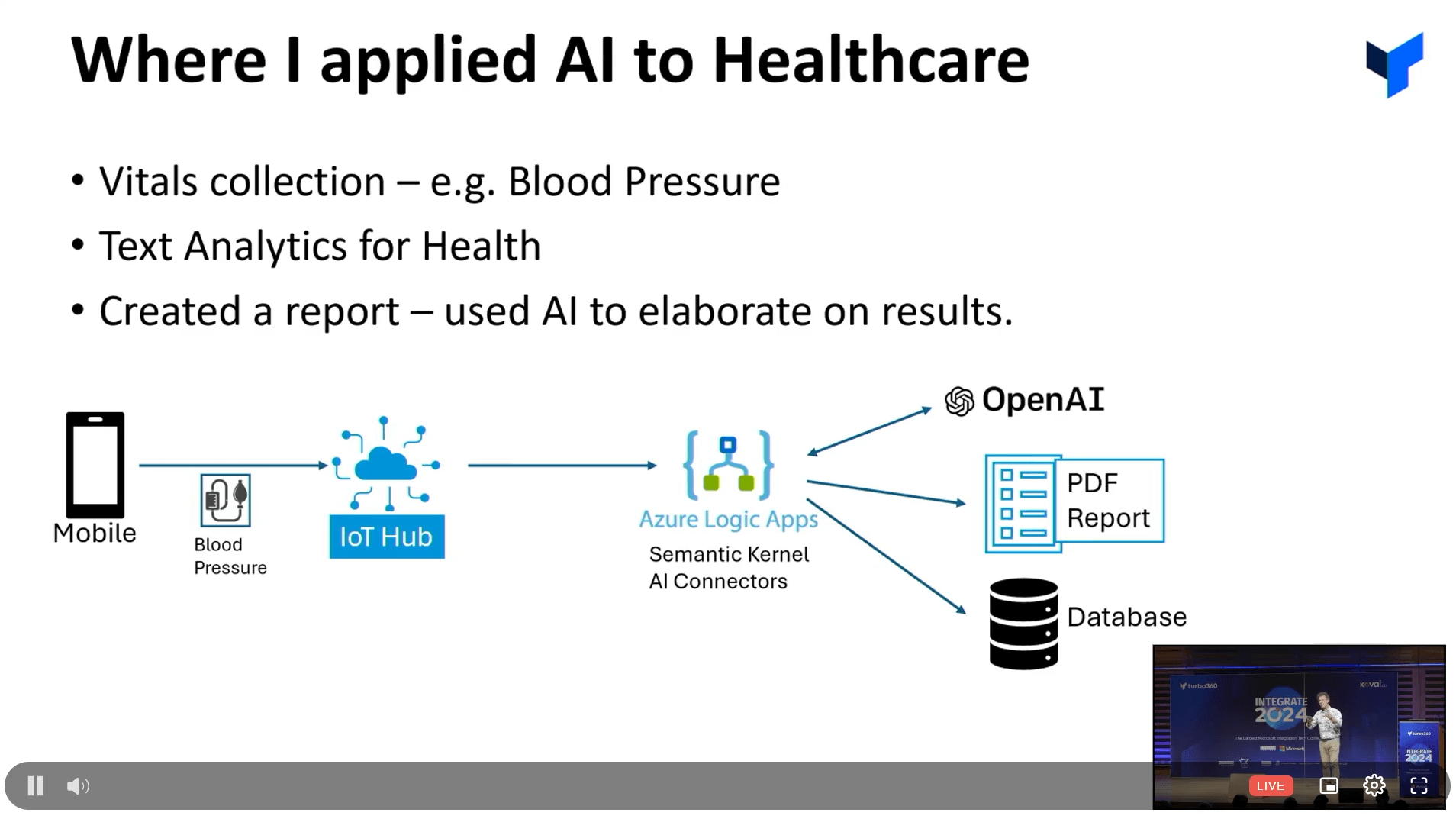

Mick Badran warmly greeted the audience by sharing charming details about his family. He introduced himself as an MVP with over 30 years of experience in integration, expressing his passion for his work. He shared his background in a healthcare startup and highlighted his expertise in key technologies such as Logic Apps, IoT Hub, and Azure OpenAI.

Mick then began the session by sharing the origins of his journey. He co-founded a company in the aged care space, providing remote vitals capture for home care, and shared a few interesting insights about wound care.

He further discussed the advancements in technology and their impact on the healthcare industry, emphasizing the importance of machine learning models in healthcare decision-making processes. He also addressed the challenges and solutions in integrating technology within healthcare, particularly in remote areas.

Mick classified three models on AI basics, starting with:

AI Basics I: He compared AI to a calculator, noting that it has numerous functions and can provide answers to anything we ask, highlighting it with the phrase “knows everything about anything.”

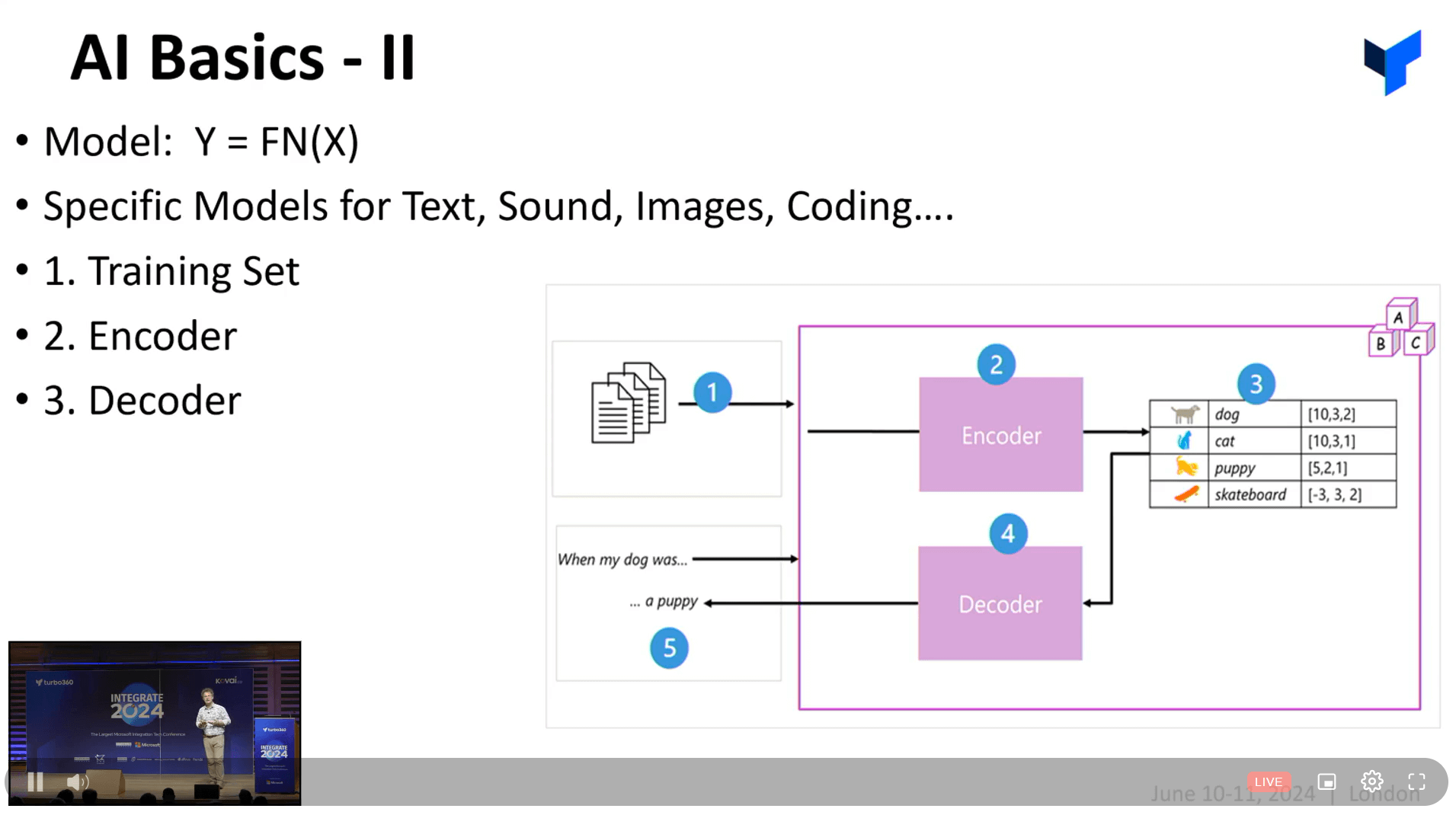

AI Basics II: Mick elaborated on AI models for text, sound, and image capturing, using the example of an encoder and decoder to illustrate their functionality.

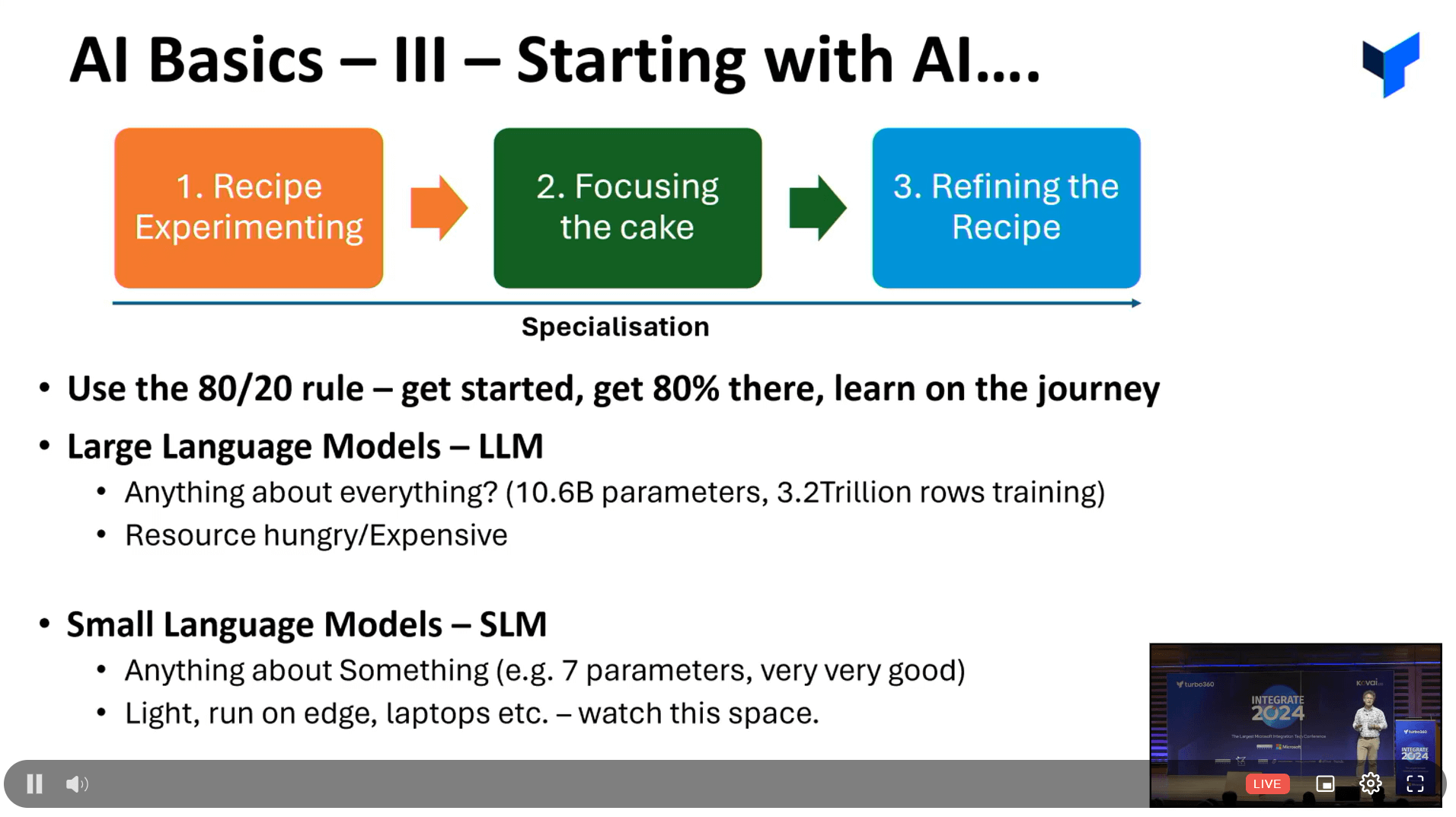

AI Basics III: In the last model, he explained the 80/20 rule and differentiated between large and small language models, discussing their respective pros and cons.

Mick later presented specific examples of how technologies like cognitive services, translation services, and IoT devices are used in healthcare. He described how mobile devices, Bluetooth hubs, and video consultations enhance healthcare access and quality.

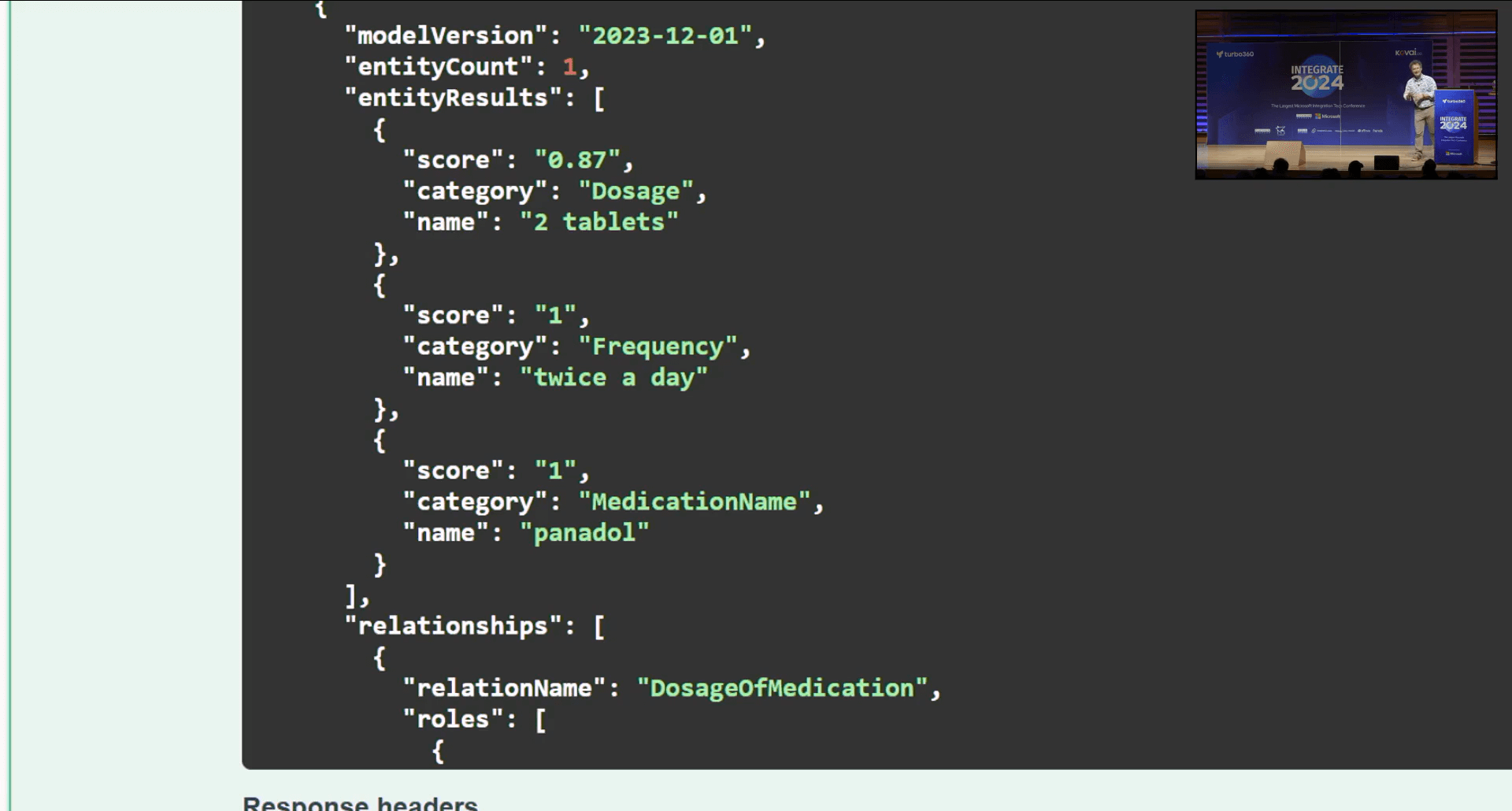

Demo 1

Mick discussed the implementation of Logic Apps and their integration with other services, and demonstrated text and report generation using LM Studio, a tool capable of providing precise details about any medicine. He demonstrated this with a sample demo on the medicine “Panadol.” Additionally, he introduced another tool capable of capturing images with sharp edges and providing information about their sizing, which was quite interesting.

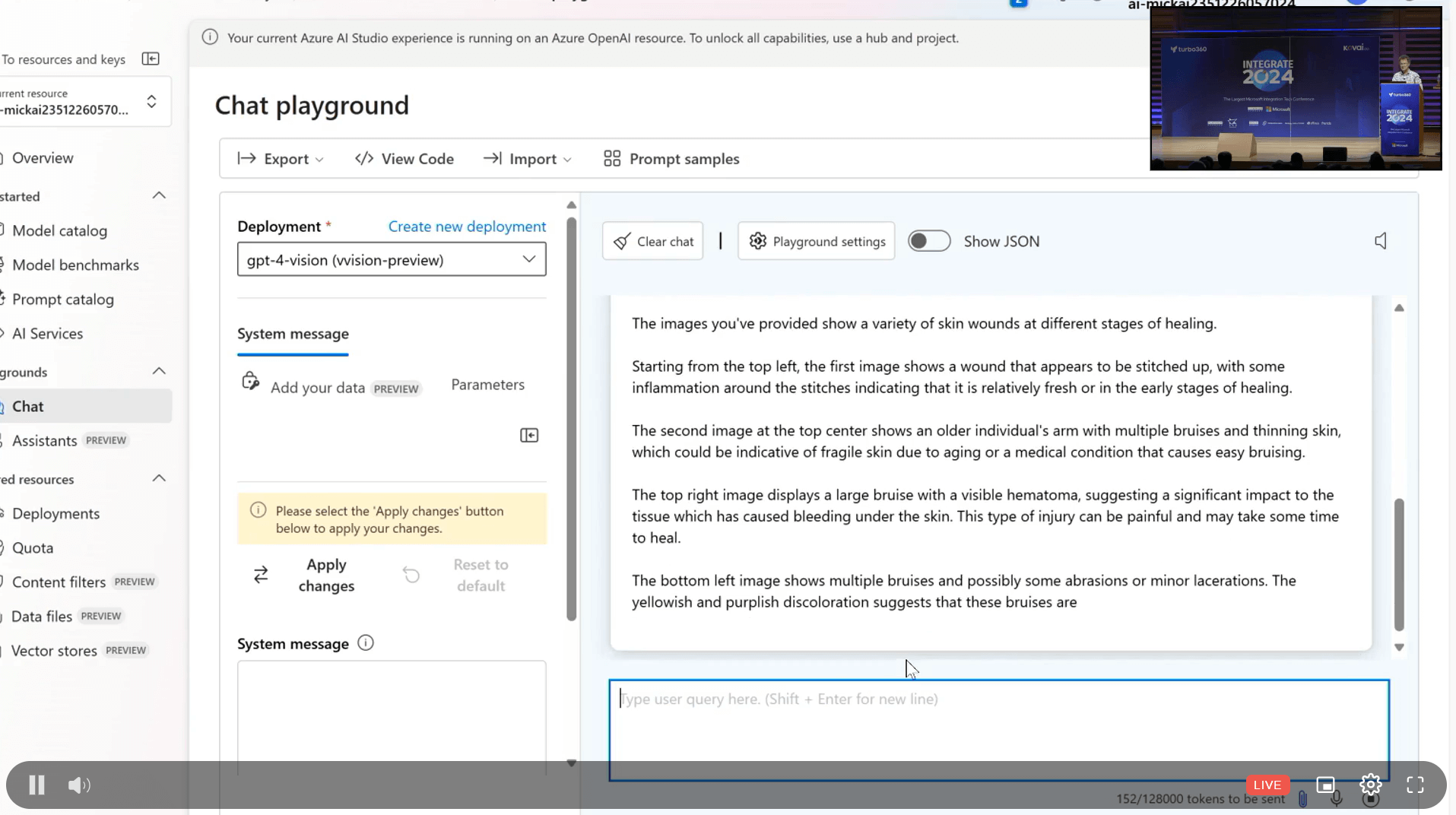

Demo 2

Mick talked about the uses of AI and machine learning in tasks like document indexing, image classification, and video analysis. He linked image classification to wound care, explaining how an uploaded image of a wound can provide insights into the healing process and characteristics of the wound. Additionally, he introduced a bot named MediPortal, built on Logic Apps, that provides insights on various healthcare-related topics.

Finally, Mick concluded the session with a comprehensive summary of his demonstration, ending on a light note by posing a fun riddle.

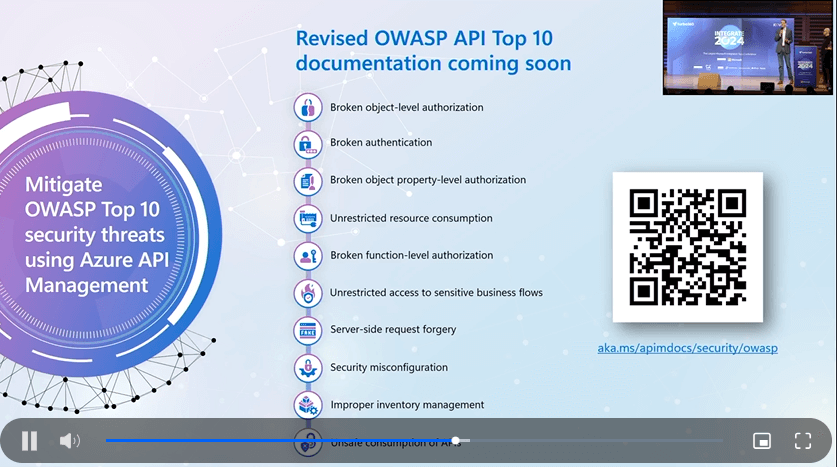

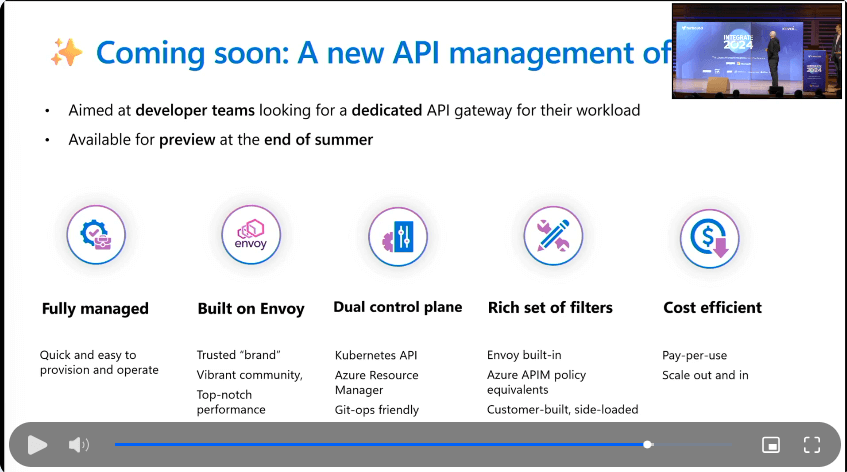

#7: Accelerating Innovation: Exploring Azure API Management’s Latest Advancements and Future Developments

Day 1 of the Integrate event exceeded our expectations, with all the sessions being livelier and the audience experiencing great learning opportunities. On Day 2 of Integrate 2024, two speakers, Mike Budzynski and Vladimir Vinogradsky, took the stage right after the lunch break to discuss the exciting topic Accelerating Innovation: Exploring Azure API Management’s Latest Advancements and Future Developments.

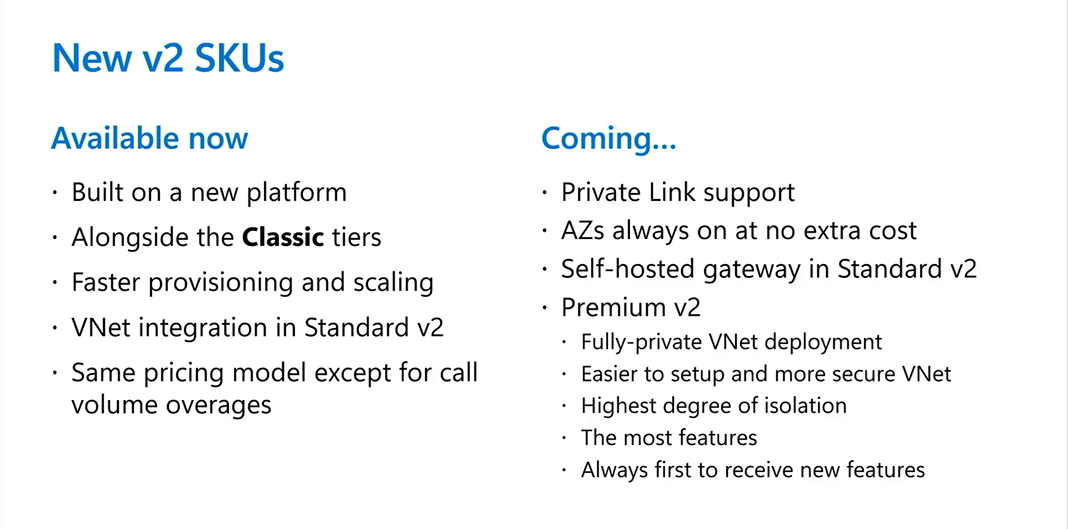

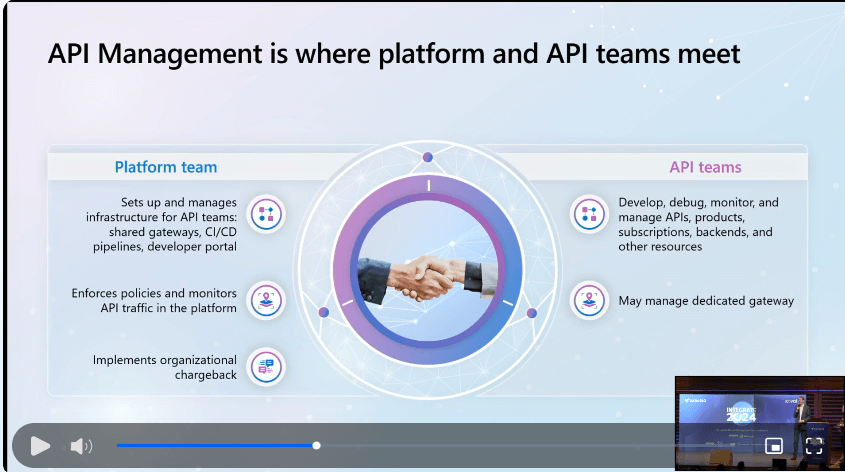

Vladimir began the session by covering the latest developments in API Management and the future developments.

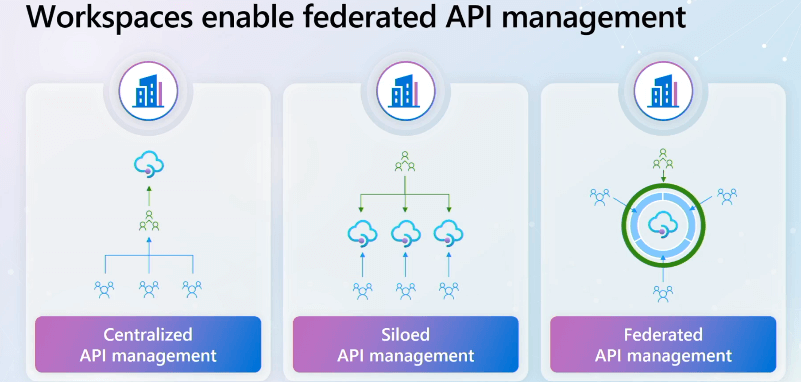

Then Mike delivered a deep dive session on API Management and API teams. He also highlighted the workspaces that enabled federated API management.

What’s Coming Next?

Mike mentioned that workspaces will become generally available in mid-summer. Workspaces will support runtime isolation, enabling:

- Isolation and monitoring of consumption and faults

- Association of a gateway resource with a workspace in API Management

- Independent control plane with networking and scale configuration

- API hostname is unique per workspace per gateway

The speakers showcased the workspace and API security with a live hands-on demo, which greatly impressed the audience.

The session concluded with a discussion about the future of API management and an engaging Q&A session.

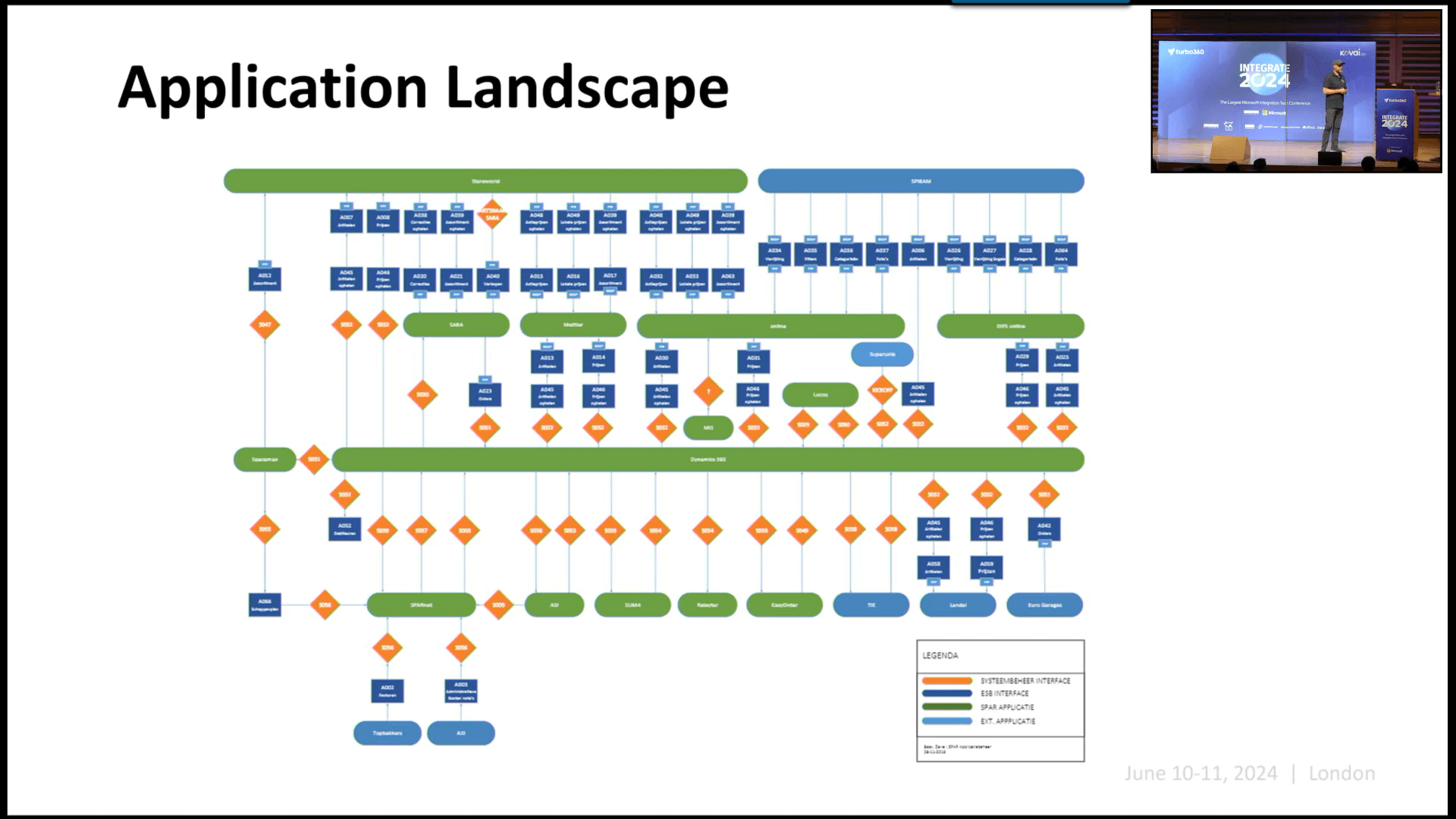

#8: Migrating from a retail on-premise solution to an integration platform in Azure

Steef-Jan is an Integration Architect, Microsoft Azure MVP, blogger, author and frequent public speaker. He got his session off to a humorous and engaging start, as always. He gave a talk about a transformative journey that involved moving from an on-premises retail broker solution to an integration platform hosted in Azure.

He discussed his actual project experience from discovery to proposal, including how to design the platform, start with a single domain, identify solution building blocks, and create road maps.

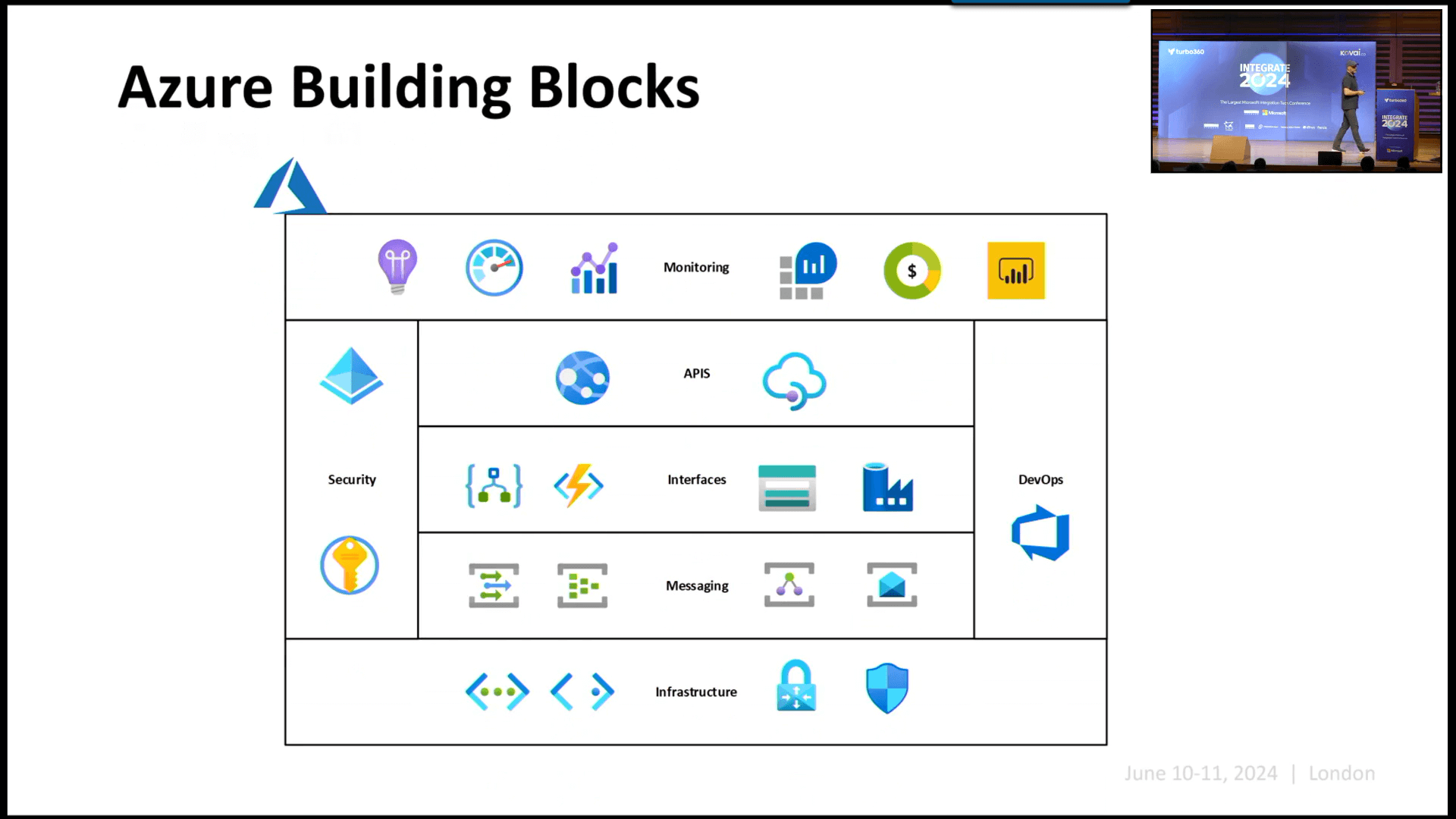

He then shifted the conversation to the difficulties and risks associated with moving from on-premises to Azure building blocks, as well as the ways in which we can use Azure to get around these issues. These issues included monitoring, APIs, security, interfaces, prices, messaging, infrastructure, and DevOps.

Steef-Jan has provided insights on overcoming obstacles and optimizing advantages by outlining and delving into the technical nuances as well as strategic factors involved in this migration process.

Choosing Cloud Migration: Showed and shared the learnings of how Azure Integration Services can seamlessly accommodate NewWay’s functionalities while unlocking the scalability, agility, and cost-efficiency of cloud computing.

He mentioned the PaaS Guidelines,

- Fit for purpose

- Proven (Reference Azure Architecture Center, Azure Well-Architected Framework)

- Scalable

- Compliant

- Testing (Outside-in)

- Documentation

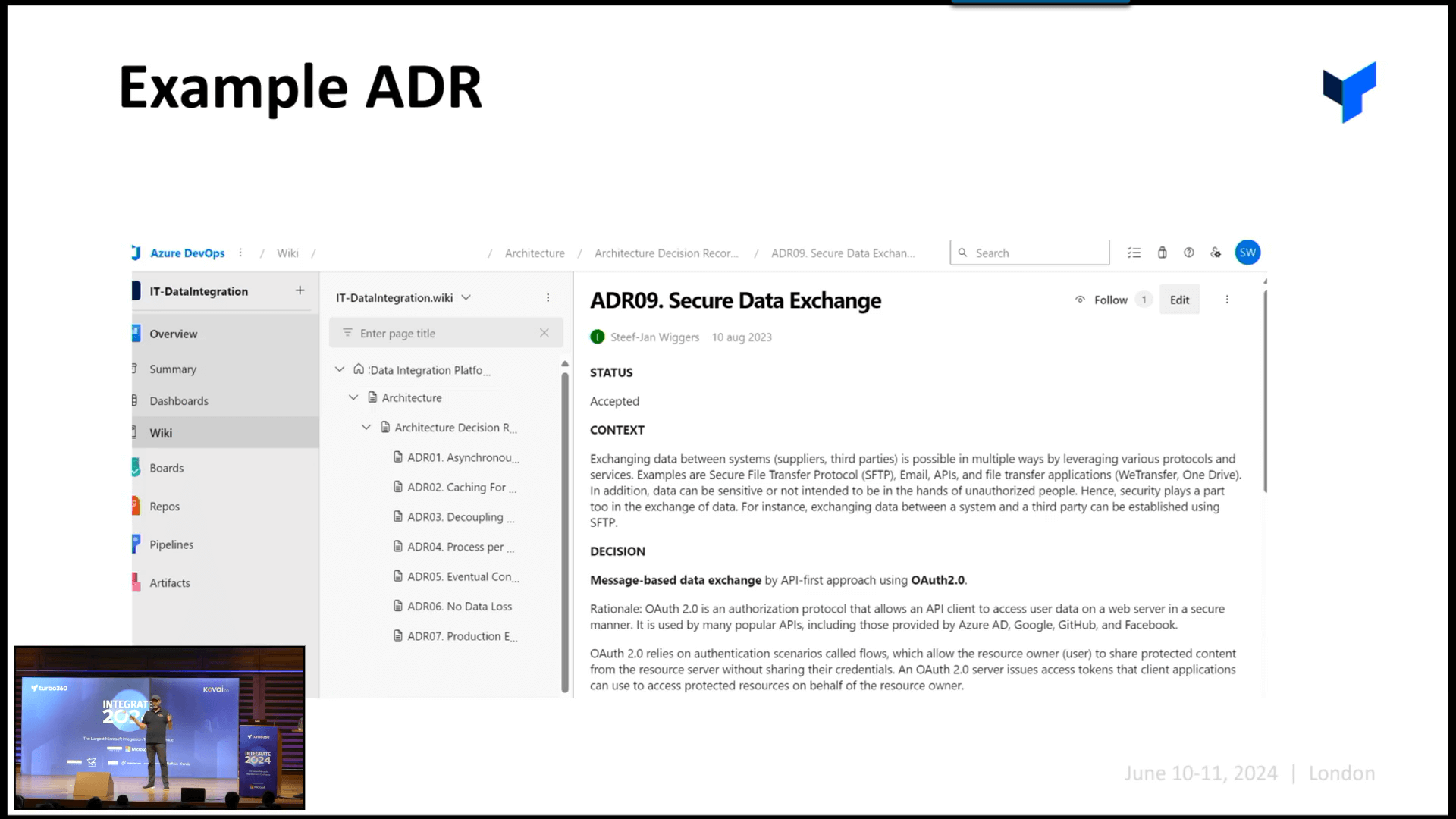

For designing the architecture, Steef-Jan covered Functions over Logic Apps, Azure Redis Cache, storage capabilities, messaging (Event Grid and Service Bus), API Management, deployments (Bicep, not ARM), reuse through shared packages, and Architecture Decision Records (ADR), with a quick ADR example.

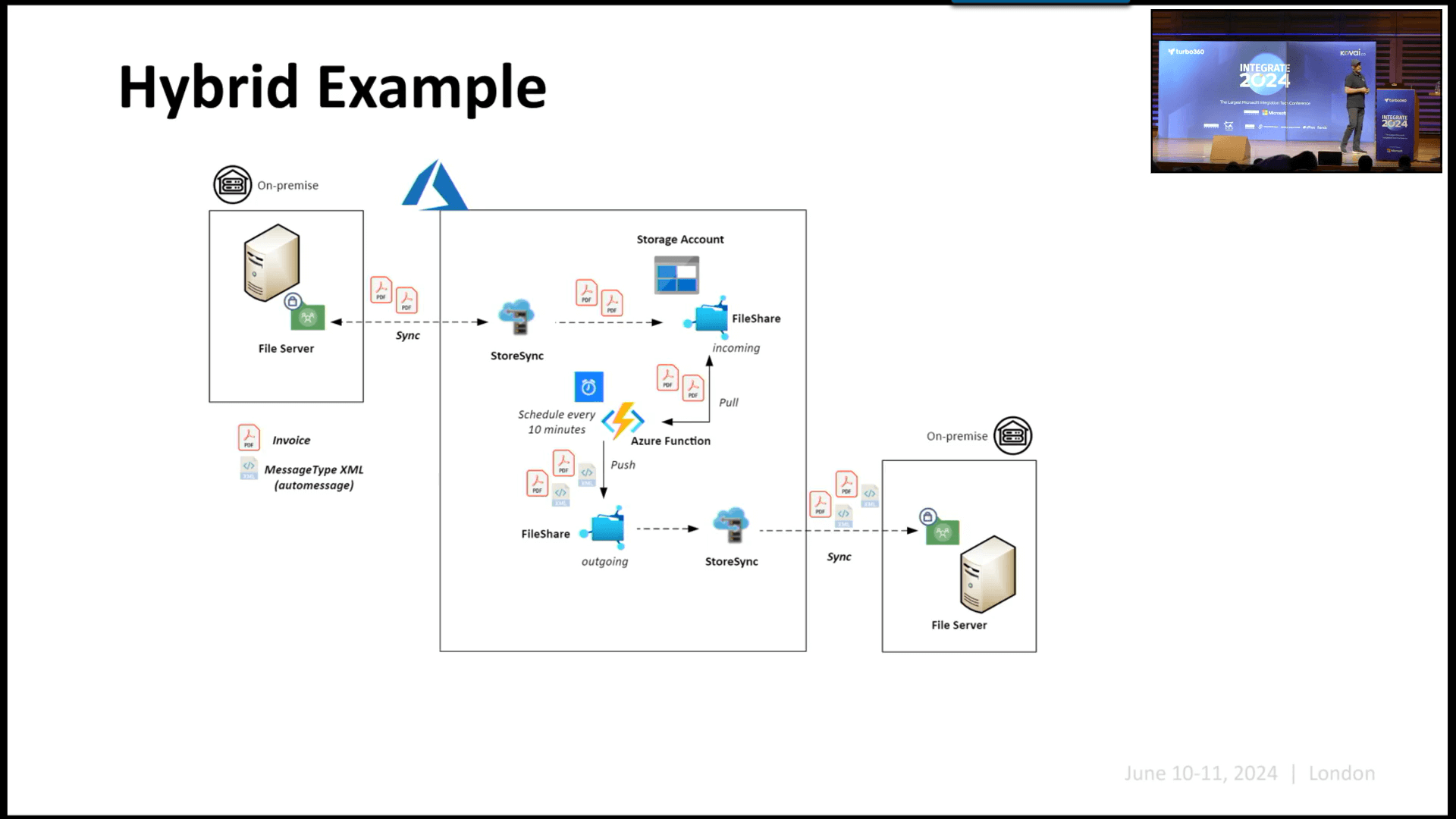

To implement the integration platform, he showed and explained how he used the Integration Pipeline and the Transaction API in a hybrid setup, with an example:

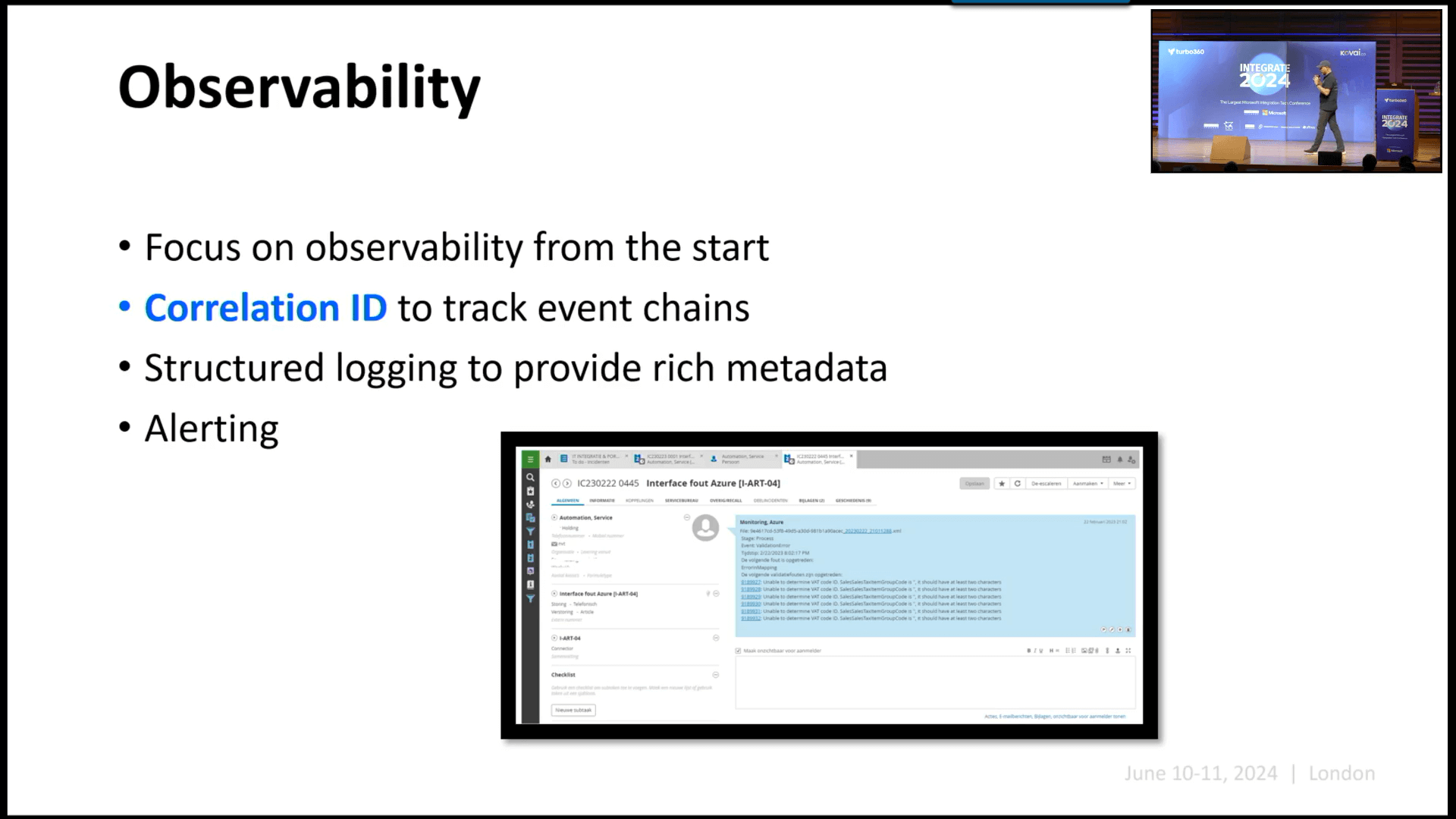

Observability: Steef-Jan explained the challenges of monitoring, from the legacy BizTalk Server using Windows Event Viewer and application logs to Azure domains, APIs, and on-premises systems.

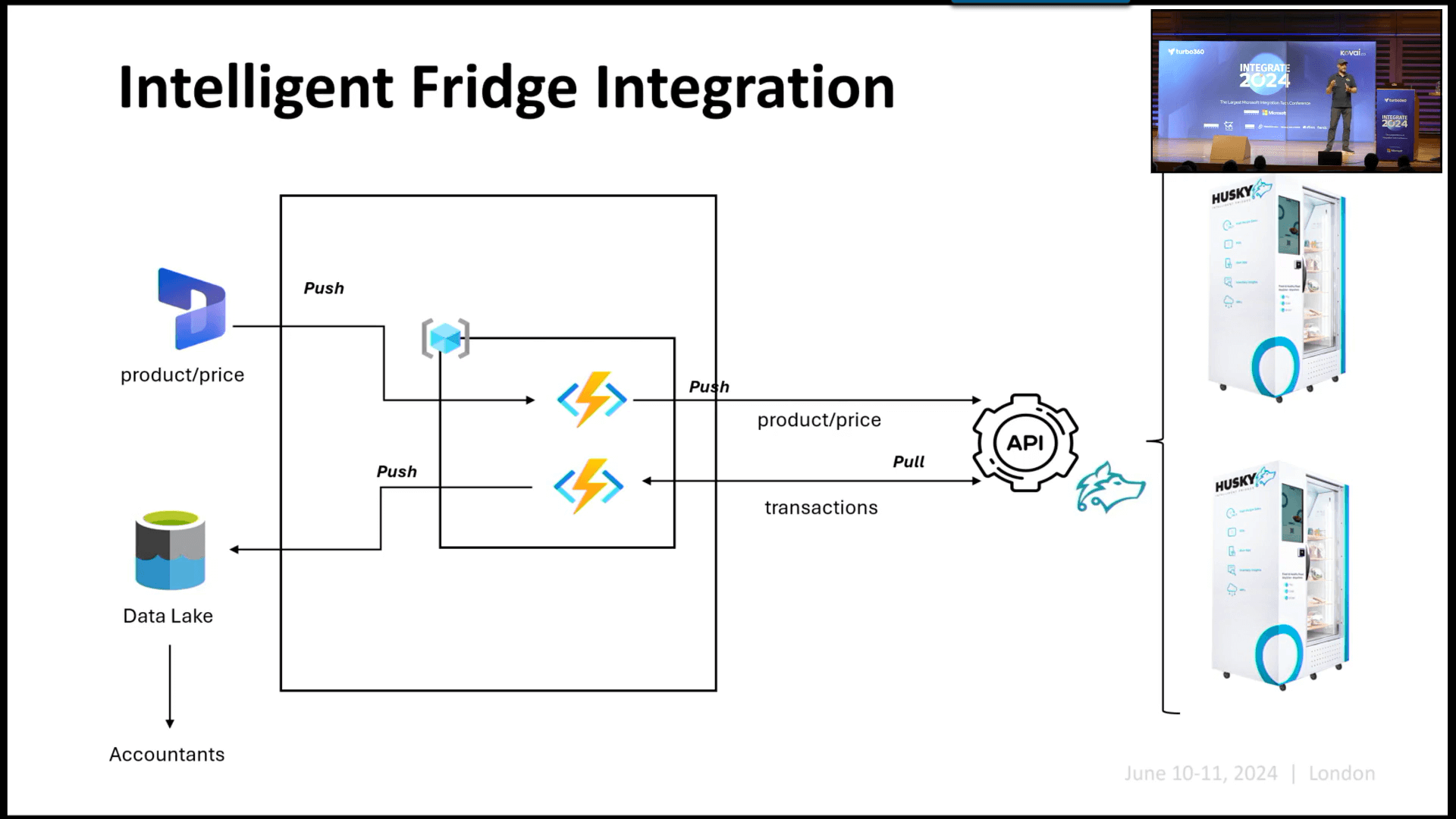

Evolving platform: Lastly, he discussed Azure as an evolving platform, with real-world examples of how the retail industry has developed, such as intelligent fridge integration, teller machines, and digital screens.

Before ending his talk, Steef-Jan showed how the Retail Media Integration was implemented and shared his learnings, which made for a great learning experience.

#9: Intrusion tactics: mastering offensive security in Azure integration

We are almost at the end of Integrate 2024 with just two more sessions. And there could not be a better way to end the event than with banging sessions on integration tech.

Nino Crudele is a Security Manager at Avanade who has contributed heavily to security for integration applications. Nino’s sole purpose in this session was to make everyone better at security.

As always, Nino’s session at Integrate was no joke. Everything discussed in this session is for educational purposes only.

You must learn to attack to master the art of defence

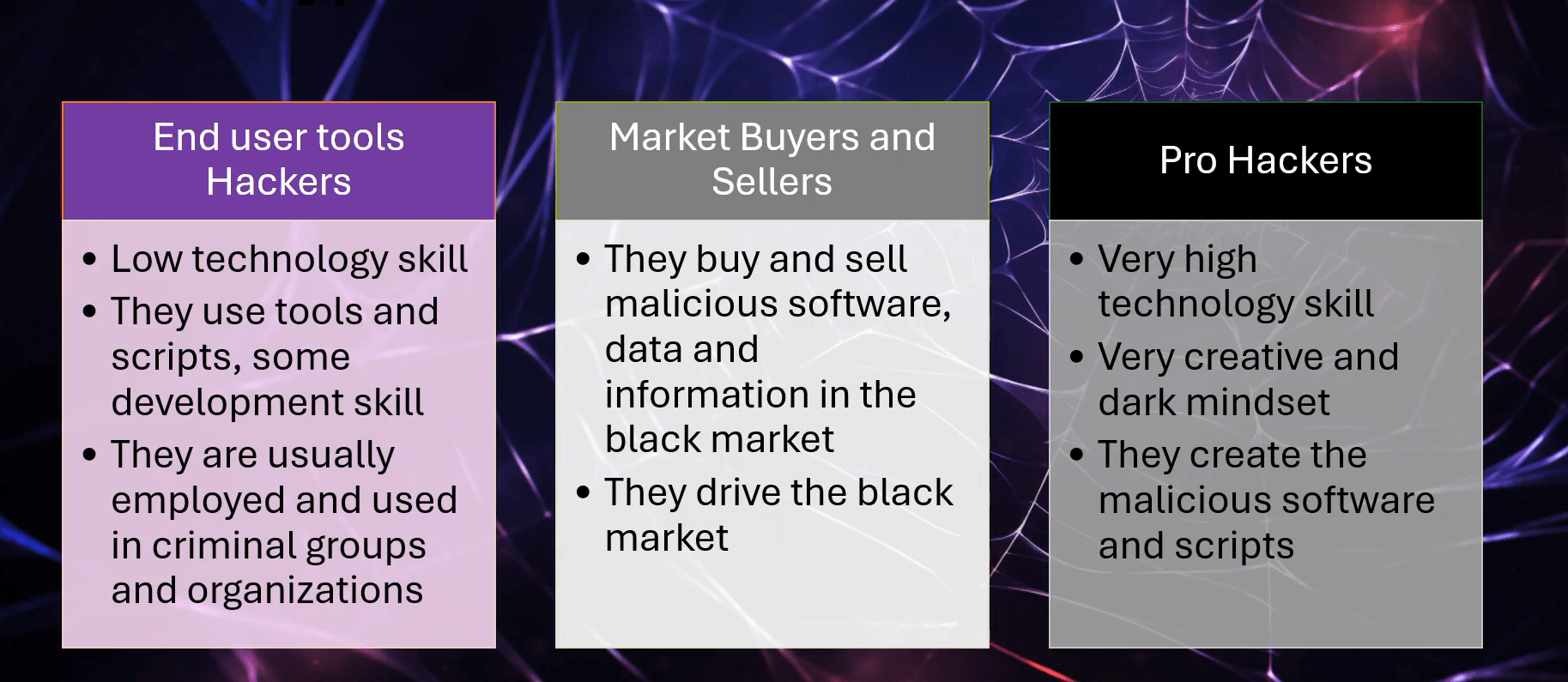

Types of Hackers in the world:

- End-user Tools Hackers – They make use of existing tools or build some basic malware to hack into systems. They are usually employed by companies for testing purposes.

- Market Buyers and Sellers – They deal with millions and billions worth of data/software. These could also be companies buying software to make their systems more secure.

- Pro Hackers – Really technical, with deep knowledge. These are the people who build the malware or tools for both hacking and security.

NOTE: Malware is software commonly used for hacking.

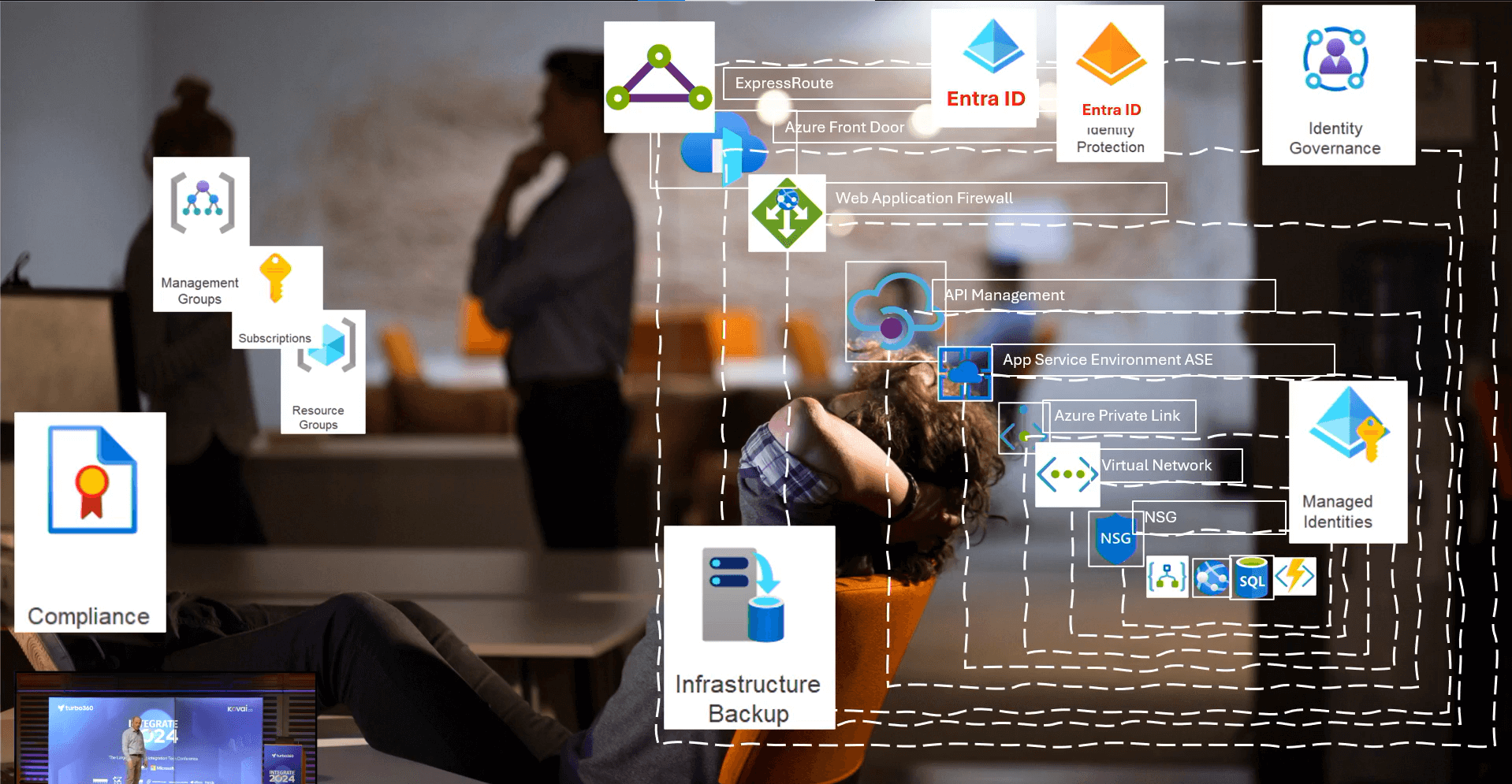

How an integration team typically builds a secure solution:

The integration team receives a request from the business team with the details of the application, and starts to build it. The integration team always aims to build a solution so secure that even James Bond would need a password reset.

Hence the integration team starts adding security components to the solution, like NSG, VNet, Azure Private Link, ASE, APIM, Web Application Firewall, Front Door, ExpressRoute, etc.

Even with all this security in place, a month later the SOC team discovers that company information has been leaked on the dark web.

How was the data breached?

That’s impossible, right? But that’s where the hackers outsmart the developers. Sometimes when we focus fully on the bigger items, we miss the small ones.

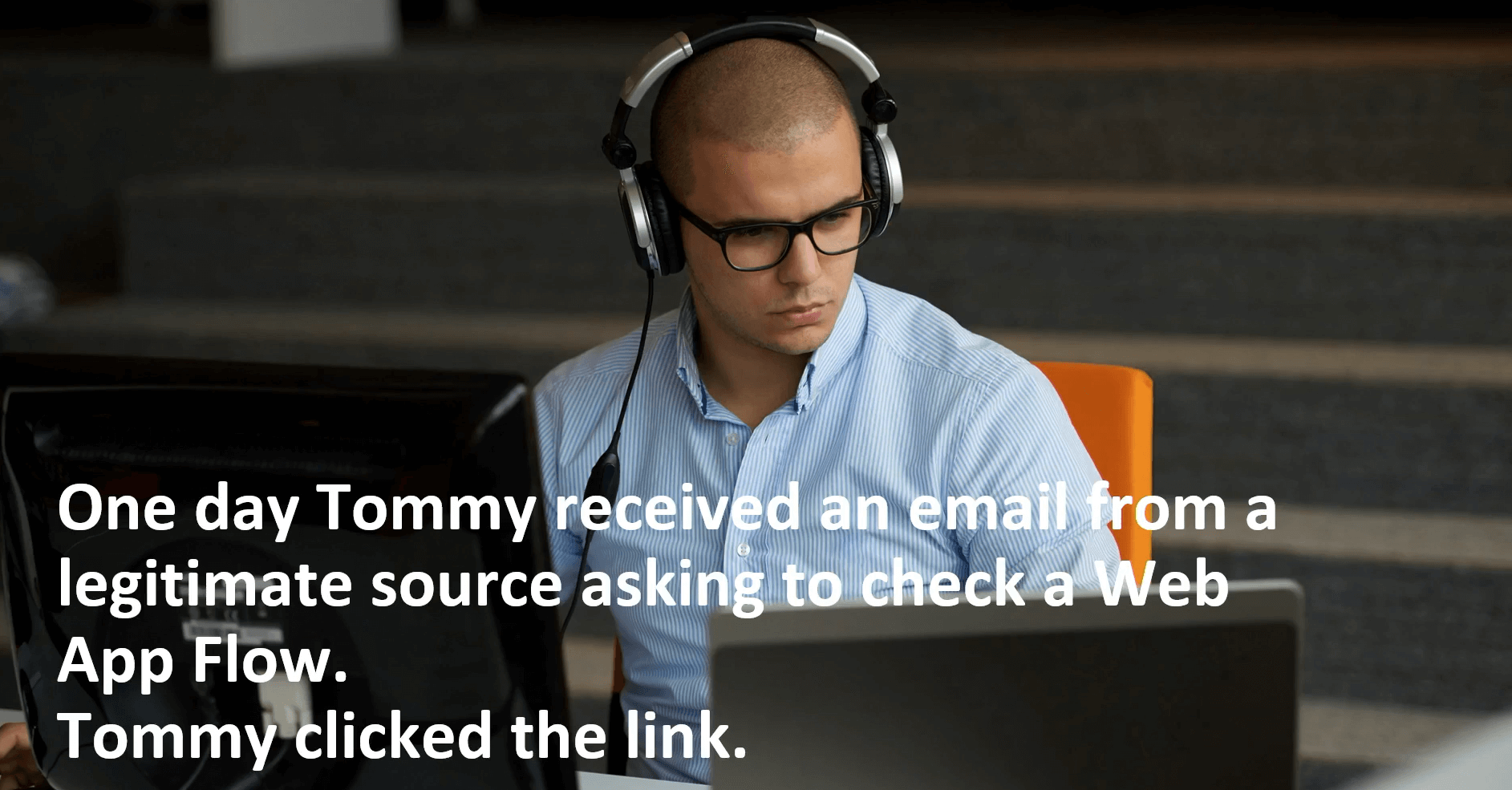

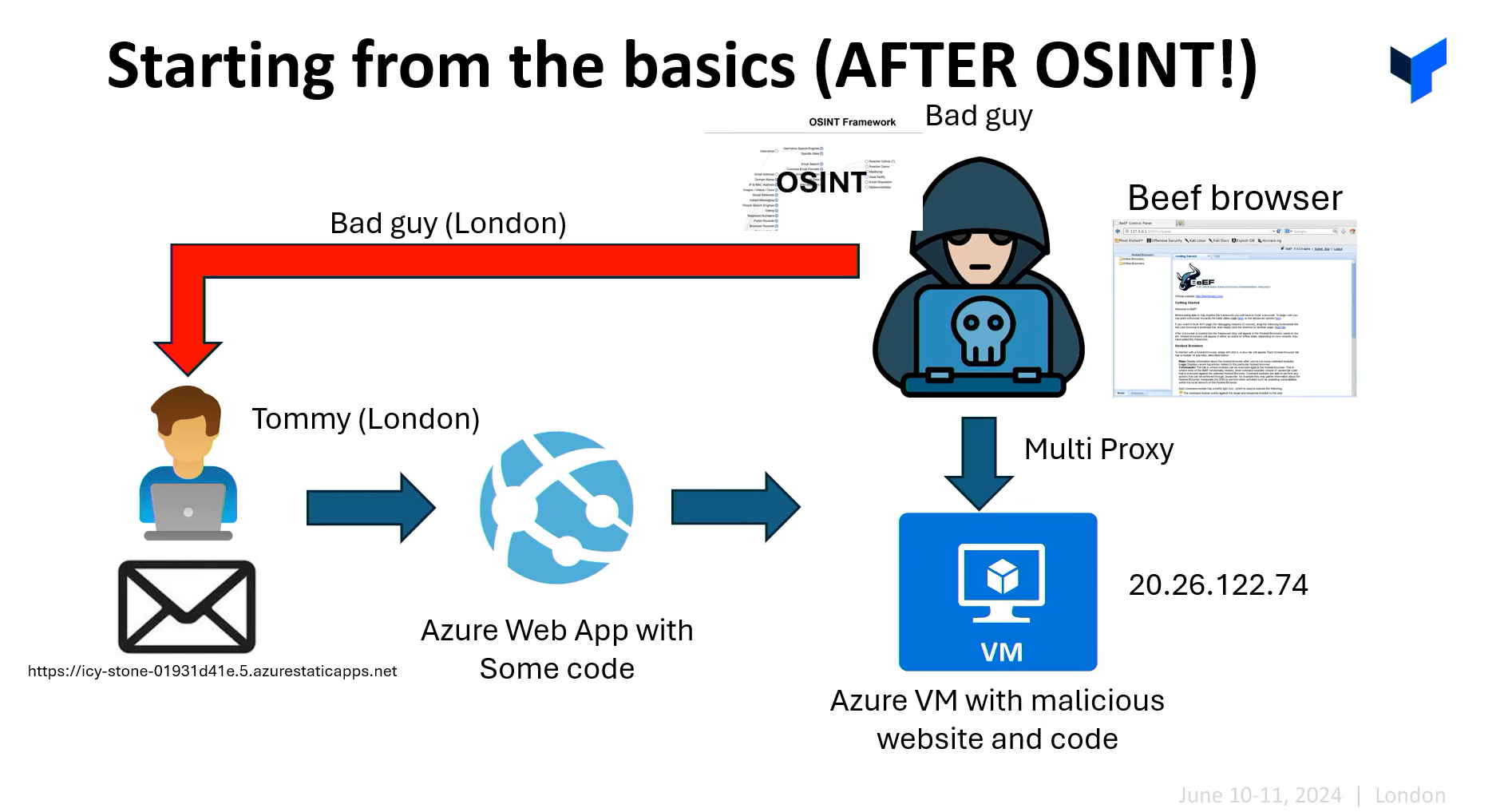

One day Tommy (Integration team) received an email asking him to verify the application, and that’s the end of the story.

Let’s talk about how the hacker actually did it. The hacker does the following:

- Looks for the correct person to initiate the attack

- Opens a free Azure account to look legitimate (as the company is also using Azure)

- Creates the required resources (web apps and VMs)

- Installs all the required malware on the VM

- Sends an email to the target with the webapp’s link

Primary Target for Attackers:

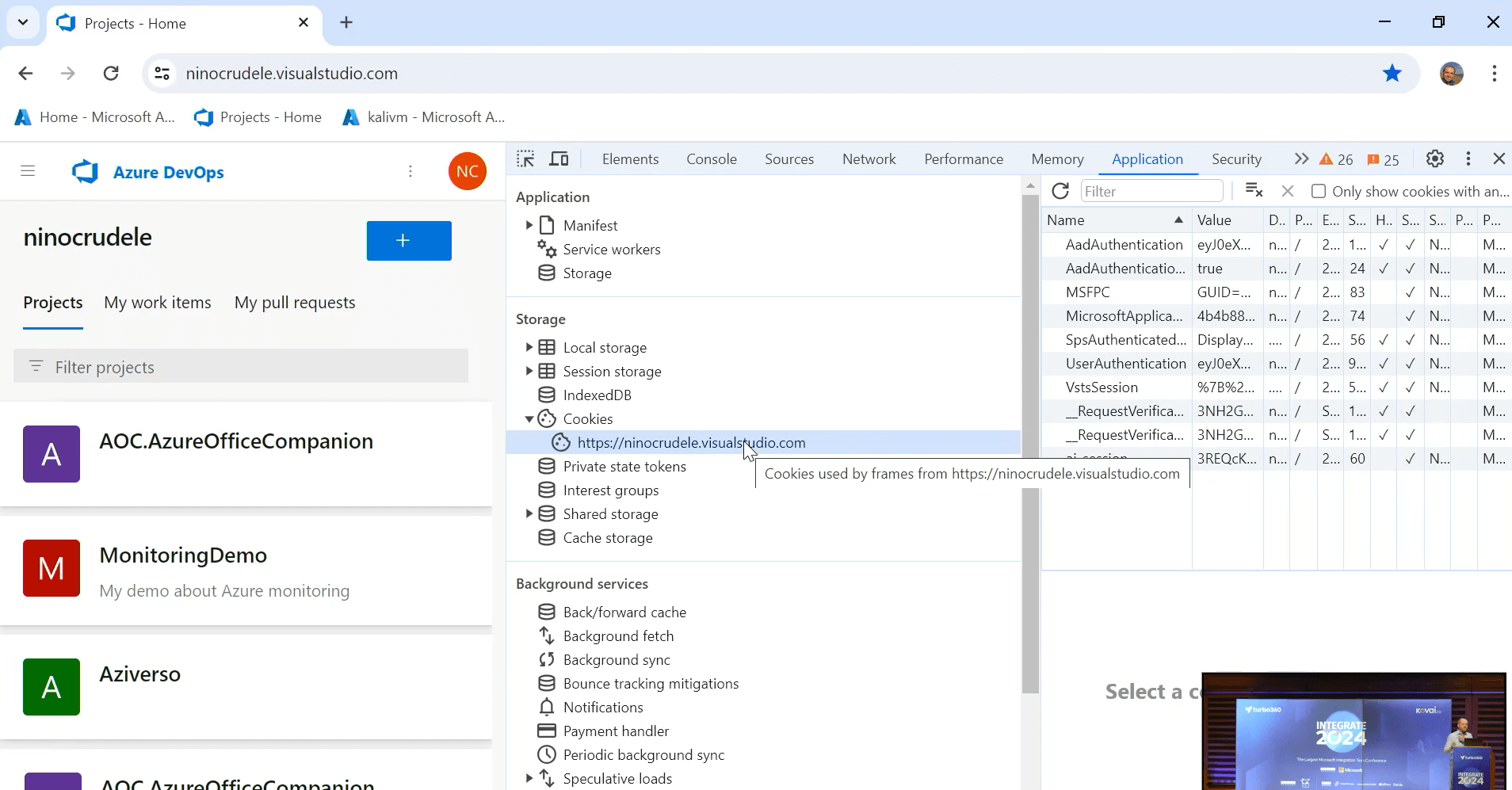

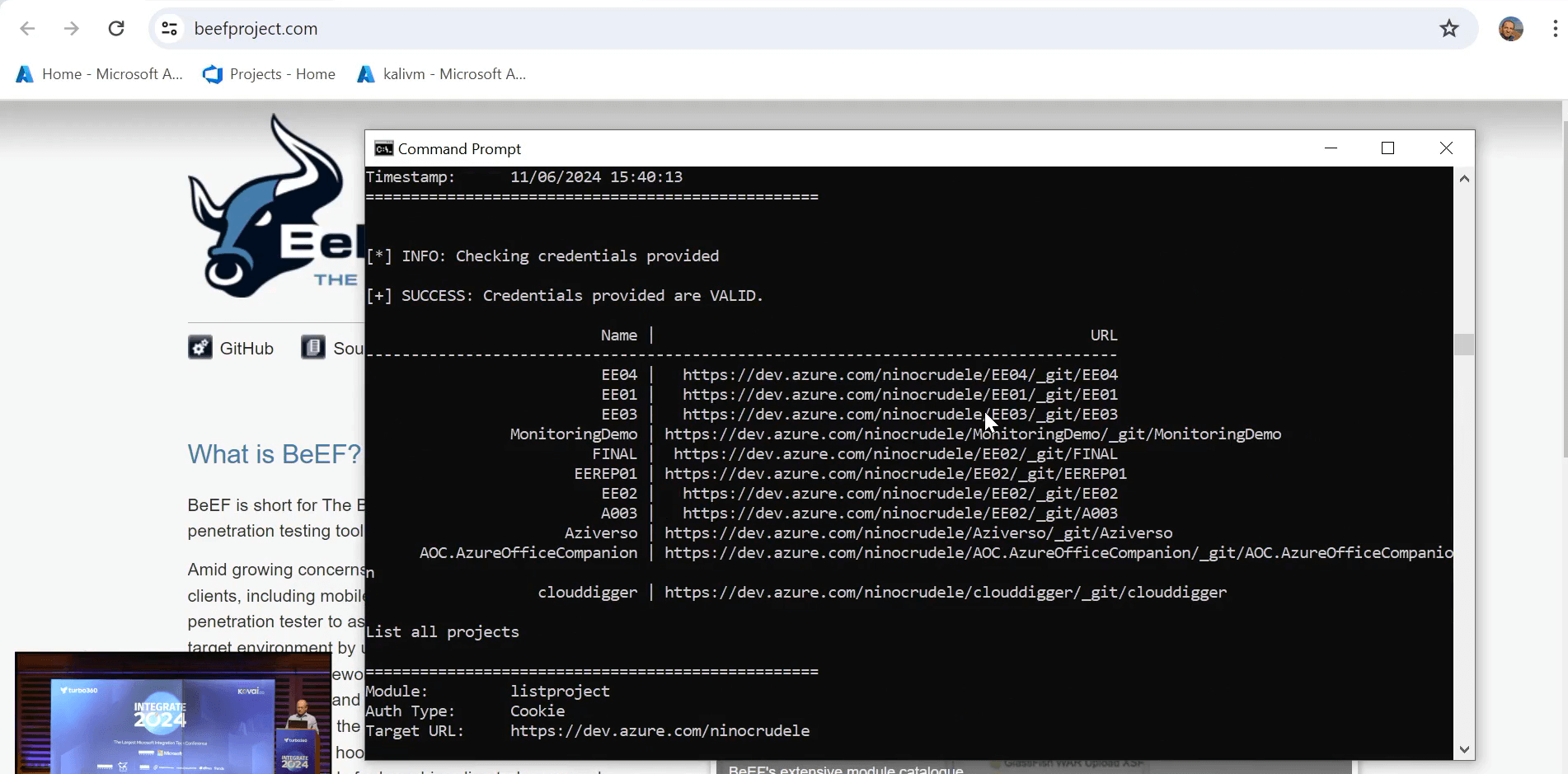

Attackers will usually target DevOps because the cookie in DevOps stores all the passwords for source code access.

BeEF is an application that can be used to attack and retrieve a user’s credentials. But no matter how good the attackers are, they need an entry point into our application, which 90% of the time is via users.

Nino then demoed a few live hacks on his own DevOps environment for better understanding.

2023 Crime Reports:

Though APIs make up 70% of web traffic, 90% of attacks on corporations happen via email. And out of all the crime types, phishing/spoofing complaints are number 1 in volume.

How to protect ourselves from attackers:

The first step is to build a technically secure solution using Azure offerings like NSG, VNet, Azure Private Link, ASE, APIM, Web Application Firewall, Front Door, ExpressRoute, etc. But you also need to understand that only 10% of attacks come from breaching strong security layers, and the remaining 90% come from everything else.

The important message to remember is we are the first and very last line of defence.

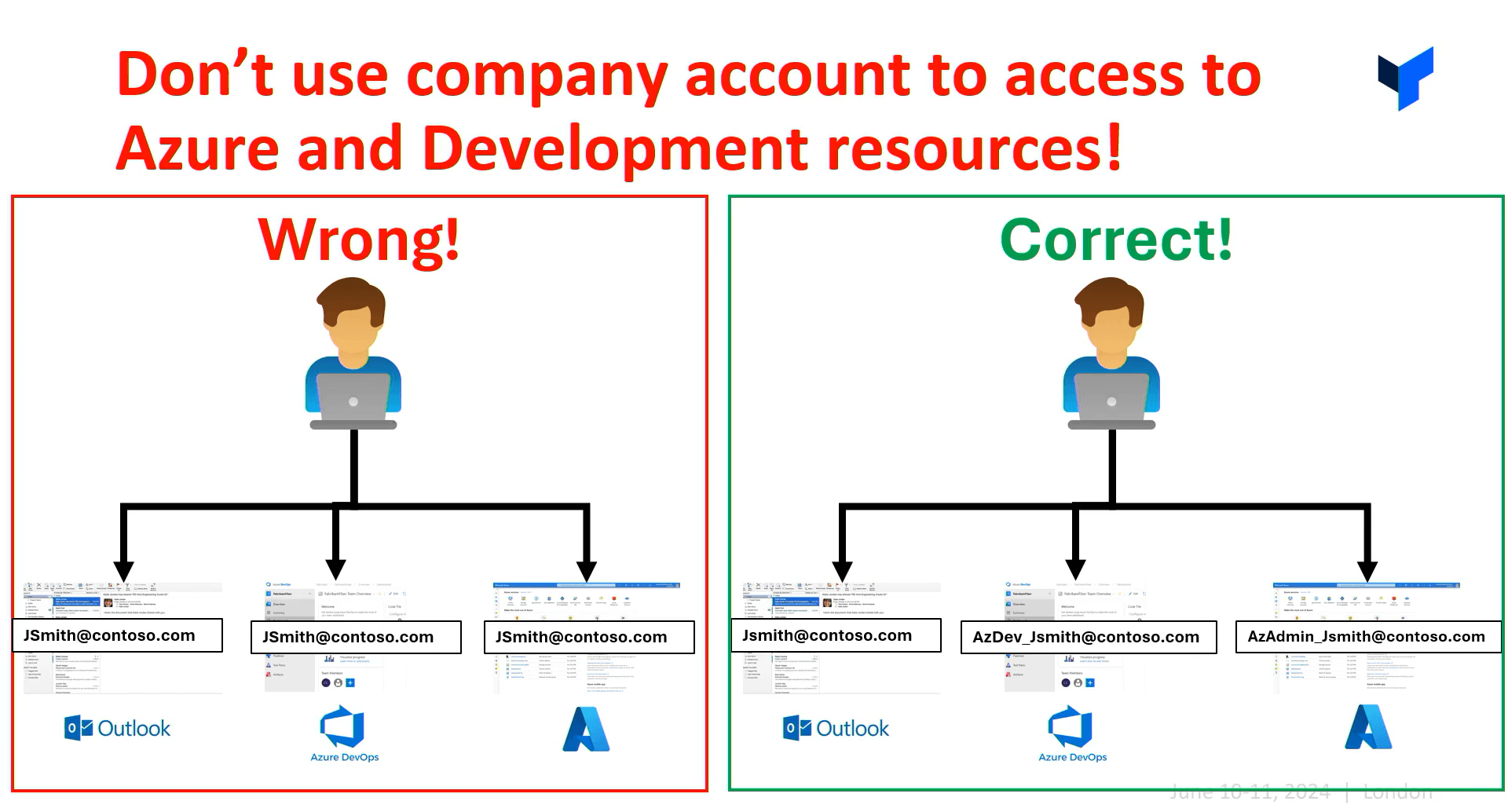

A few best practices:

- Don’t use your company email to log in to development and Azure resources. Use different login credentials for each and every solution.

- Use advanced threat detection with specific user and entity behaviour

- Use clean naming conventions

- Use Integrations to evaluate and automate the intelligent accounts management

- Use Defender for O365 intelligent integration

- Organise specific training with experts

- Organise security games in the company

#10: Azure OpenAI + Azure API Management Better Together

Hello there Techies! It’s the last day at Integrate 2024, and there were more fascinating sessions to witness, including one where Andrei Kamenev and Alexandre Vieira presented “Azure OpenAI + Azure API Management Better Together”.

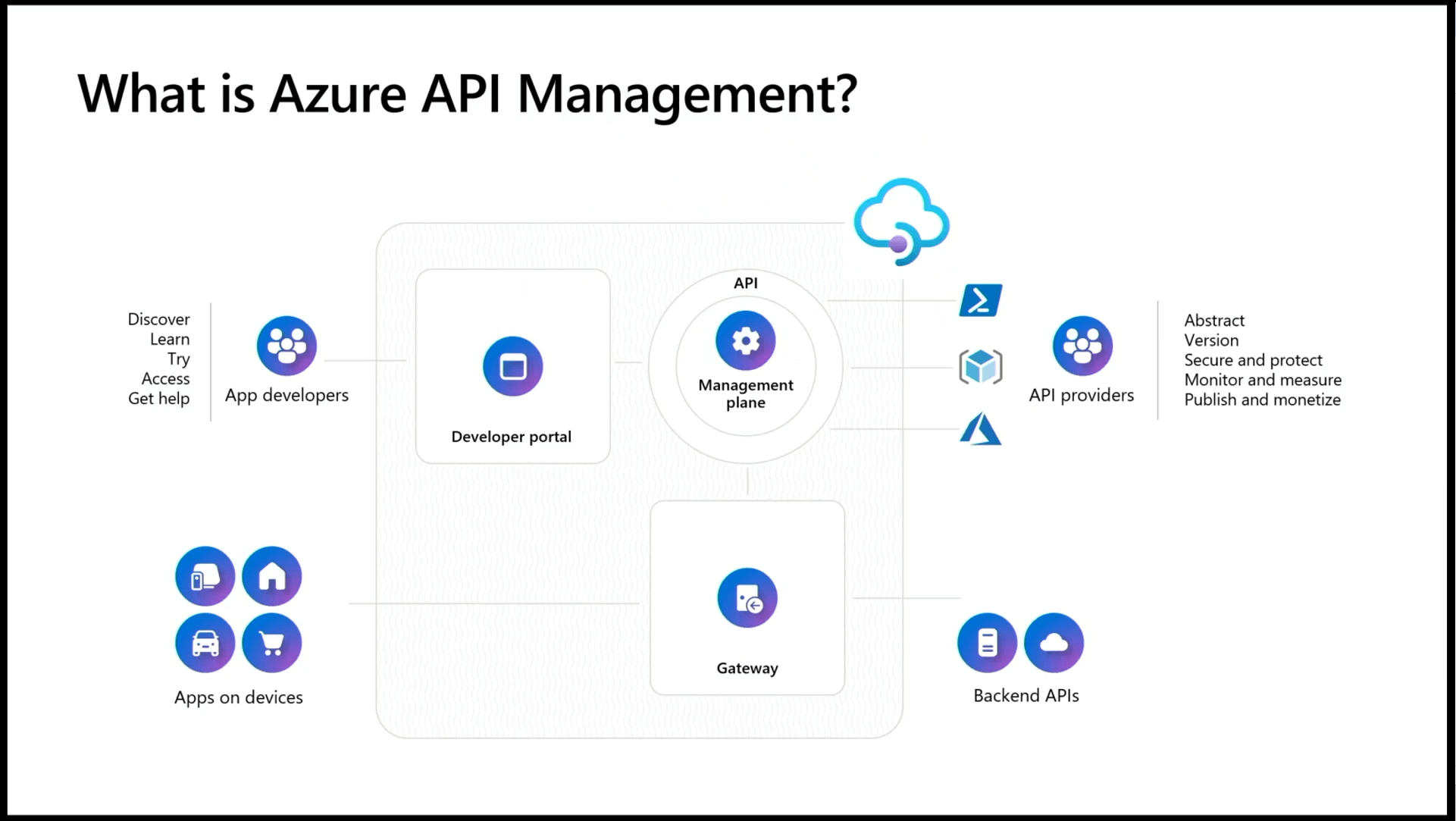

Andrei greeted the audience and introduced himself as a Product Manager at Microsoft, and Alexandre as a Senior Azure Technical Specialist at Microsoft. He then began the session by providing insights into what Azure API Management is, why OpenAI is a must, and how it can be integrated with Azure APIM with secure management and pub APIs.

Topics covered:

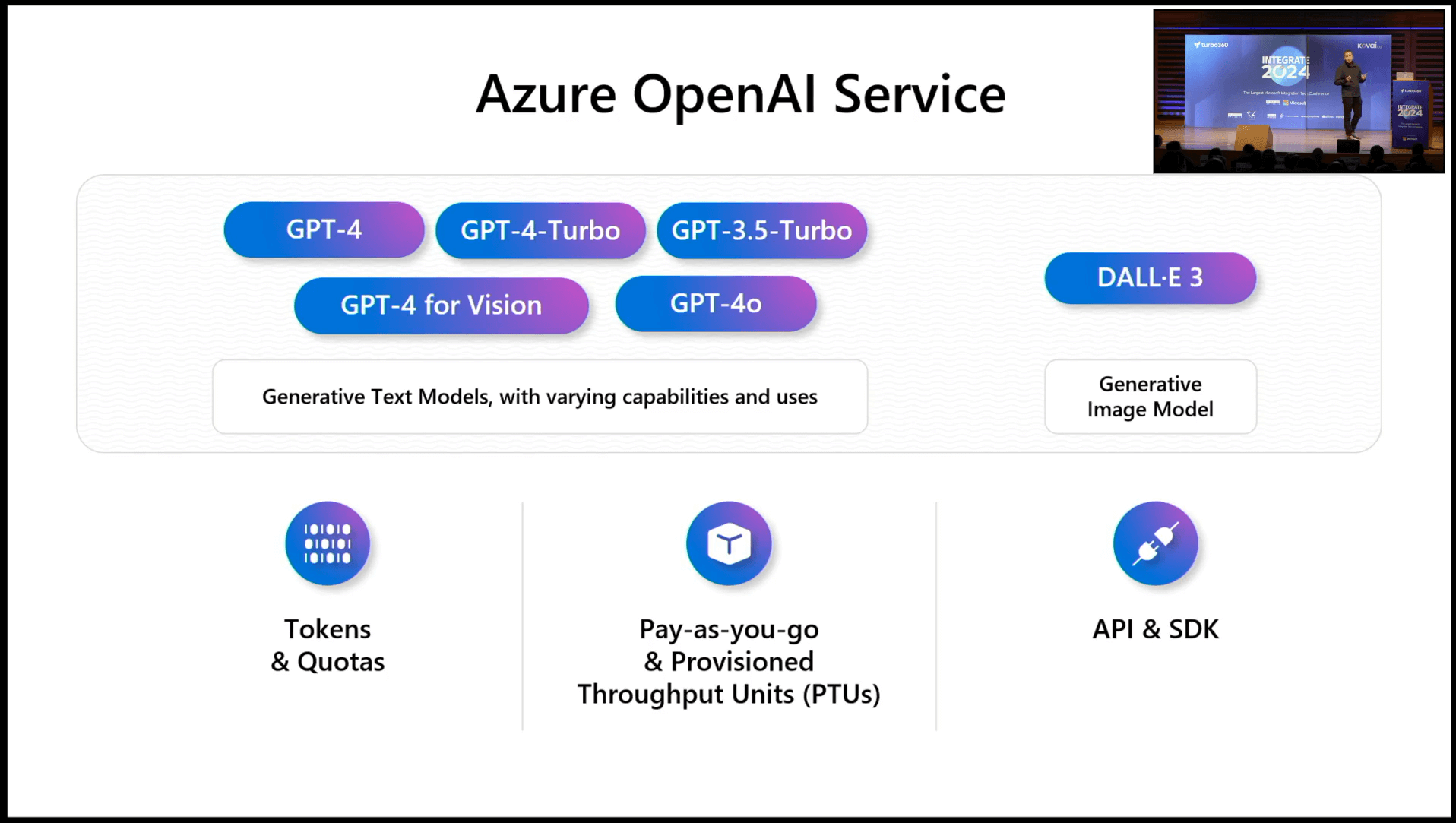

Azure OpenAI Service

- This uses AI models like GPT-4, GPT-4-Turbo, GPT-4 for Vision and GPT-4o, which understand and generate human-like text.

- The DALL-E 3 model generates an image from a text prompt.

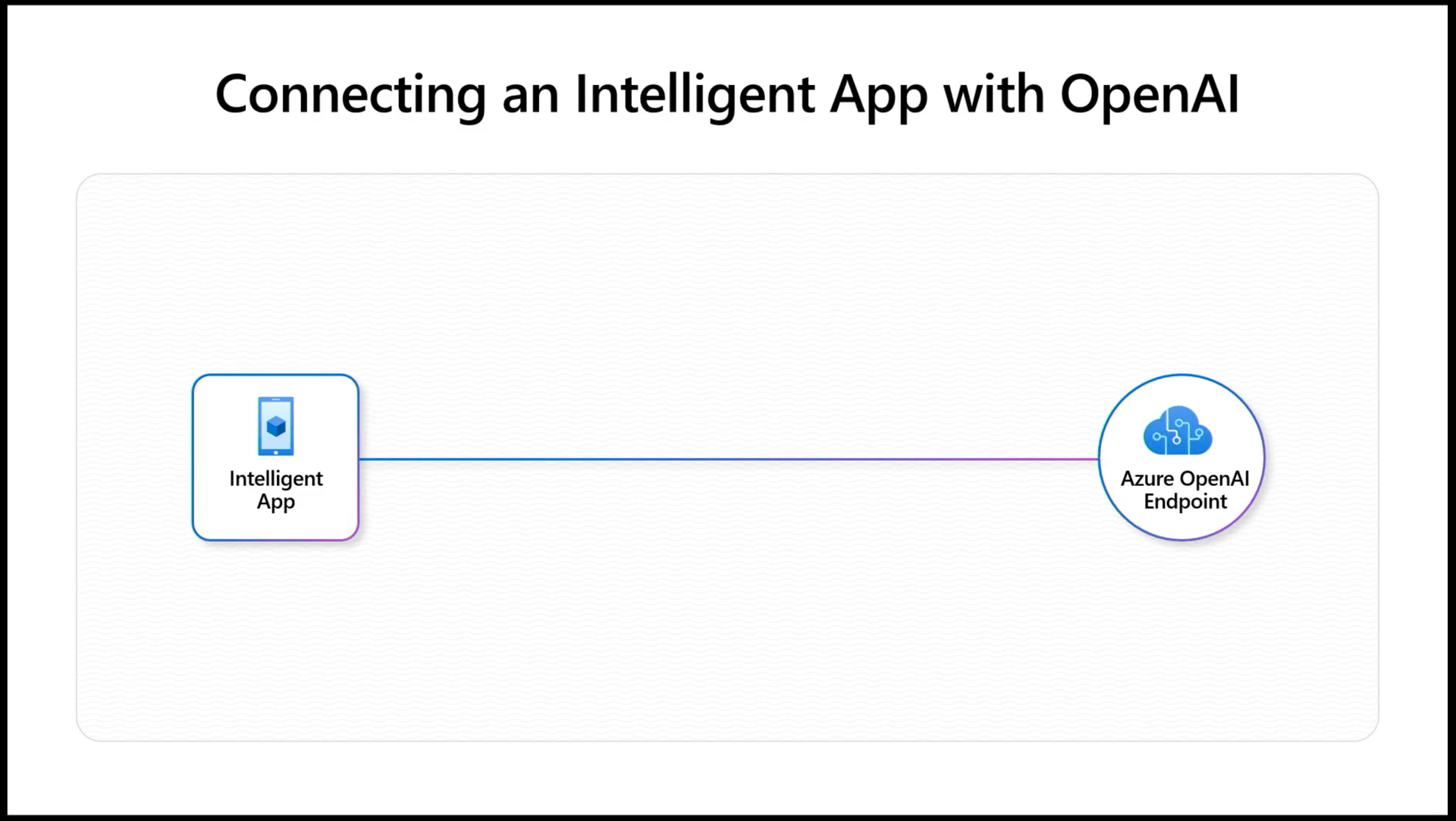

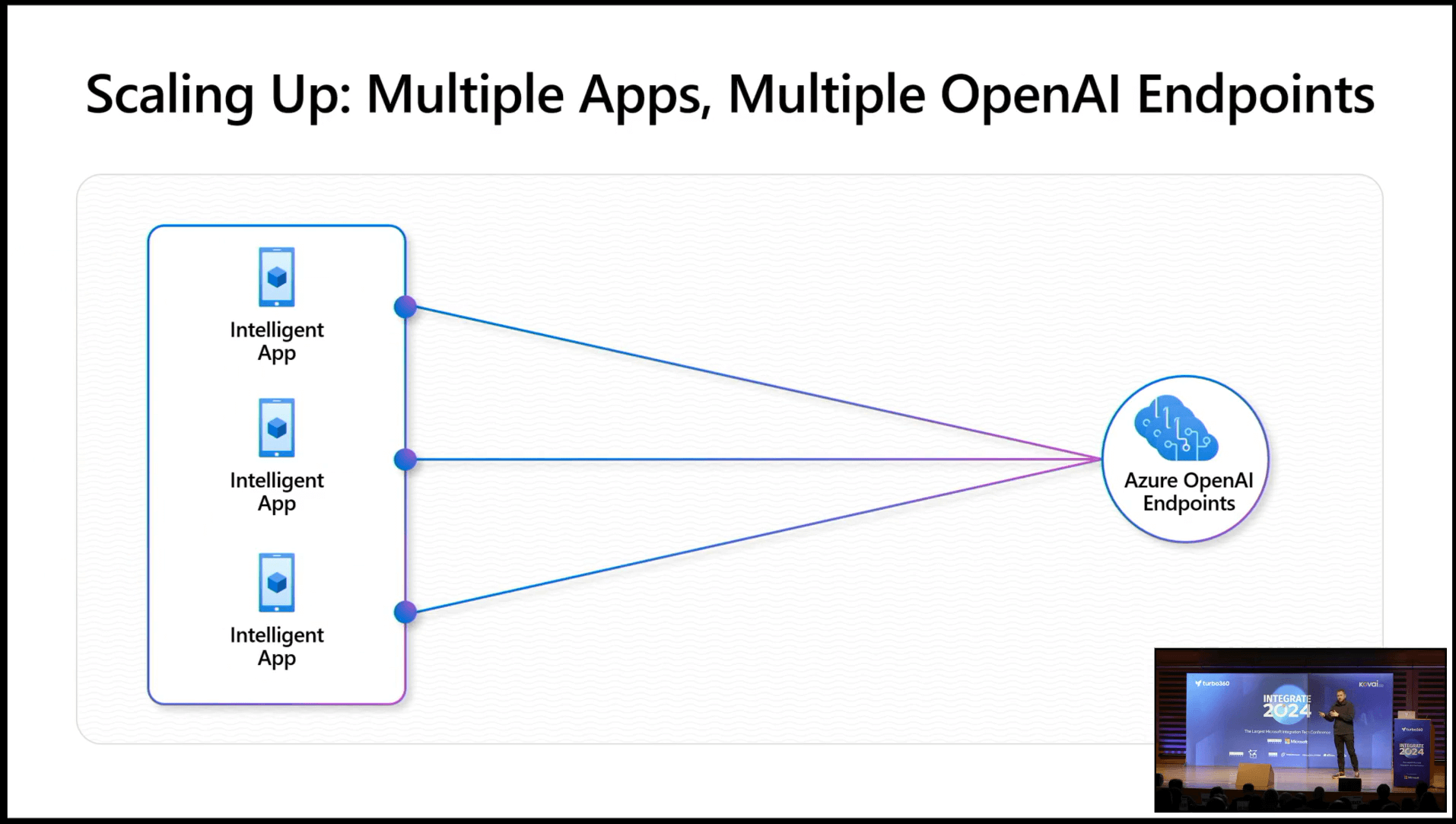

Connecting an App and multiple Apps with OpenAI

Challenges in Scaling up multiple Apps with OpenAI Endpoints

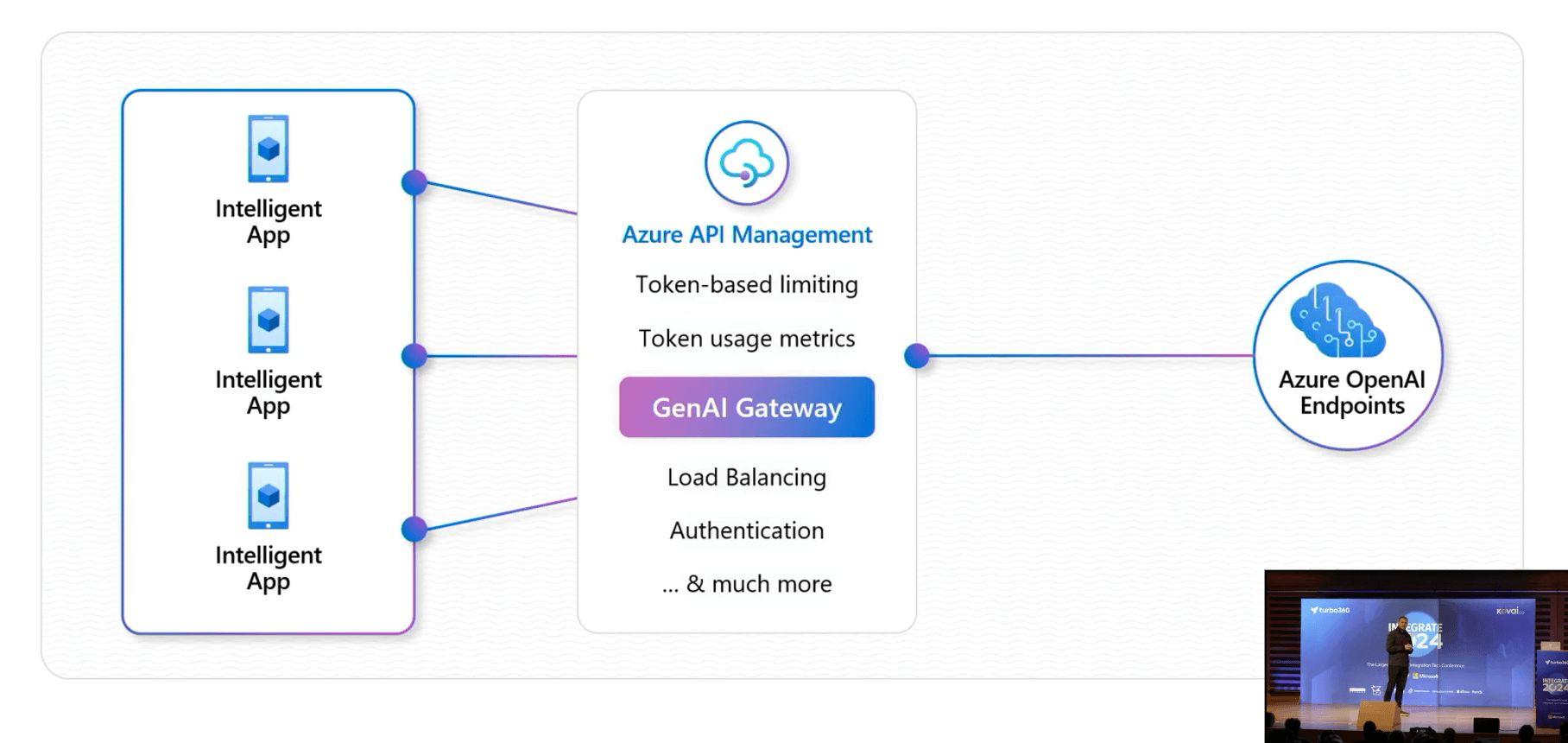

They explained that the solution to the above challenge is a GenAI gateway in Azure API Management, which can connect multiple apps to multiple OpenAI endpoints and help scale them up.

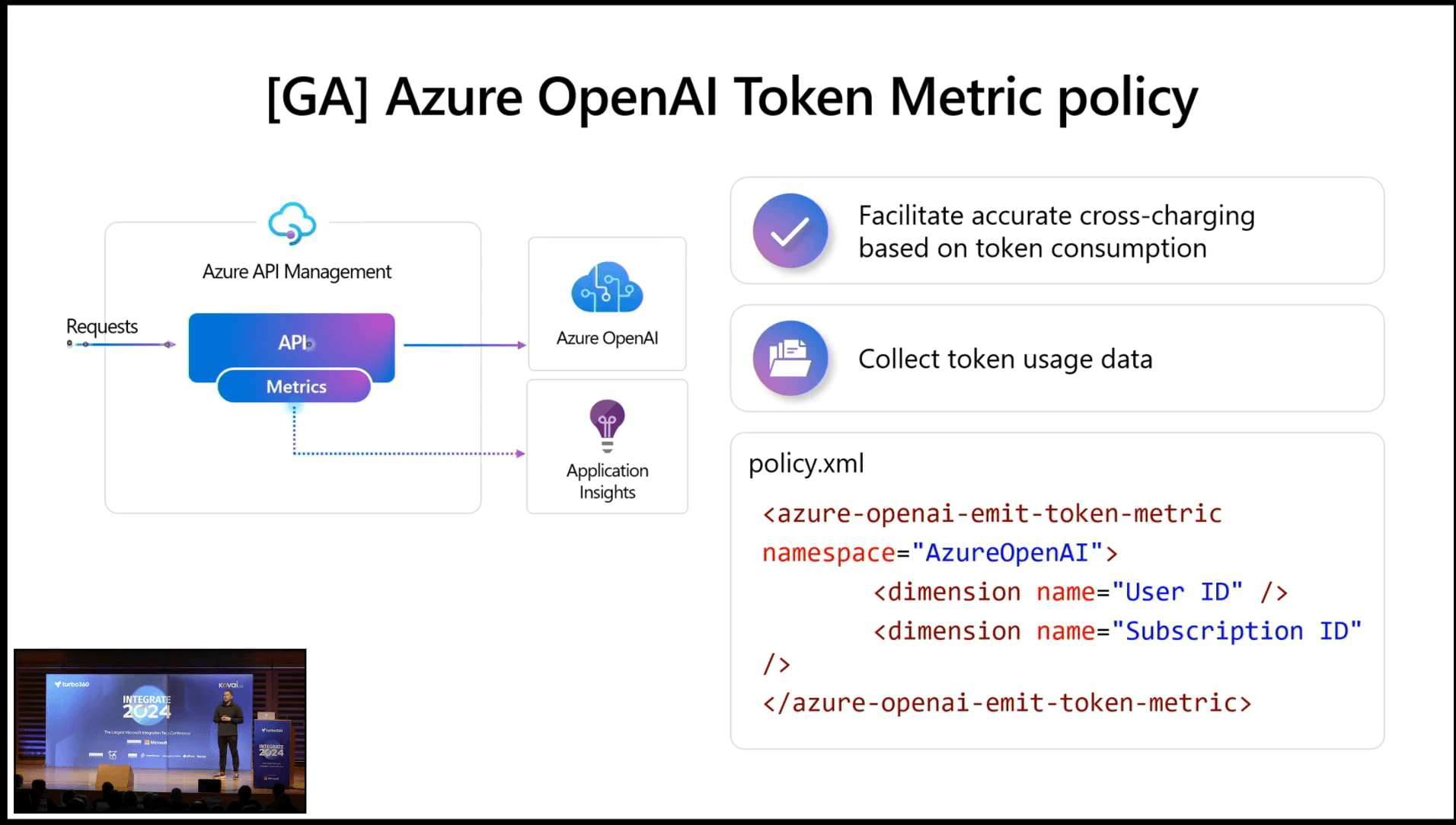

Token Metrics Policy

- Andrei explained that text content is broken into tokens, which are used to measure the input and output of the GPT models.

- Quotas limit the number of tokens a user can consume in a given time frame, expressed as tokens per minute.
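A tokens-per-minute quota can be sketched as a simple fixed-window counter. This is an illustration of the idea only, not how Azure API Management implements its token limit policy; the `TokenQuota` class, the 1000-token limit, and the explicit `now` parameter (used instead of a real clock so the example is deterministic) are all assumptions.

```python
class TokenQuota:
    """Fixed-window limiter: at most `tokens_per_minute` tokens per 60-second window."""

    def __init__(self, tokens_per_minute):
        self.limit = tokens_per_minute
        self.window_start = 0.0
        self.used = 0

    def try_consume(self, tokens, now):
        # Start a fresh window once 60 seconds have elapsed.
        if now - self.window_start >= 60:
            self.window_start = now
            self.used = 0
        if self.used + tokens > self.limit:
            return False  # a gateway would typically reject the call here
        self.used += tokens
        return True


quota = TokenQuota(tokens_per_minute=1000)
first = quota.try_consume(600, now=0.0)    # fits in the window
second = quota.try_consume(600, now=10.0)  # would exceed 1000 in the same window
third = quota.try_consume(600, now=61.0)   # new window, allowed again
print(first, second, third)
```

Tracking one such counter per subscription or per application is what lets a gateway share a single OpenAI deployment fairly between several consumers.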

Demo

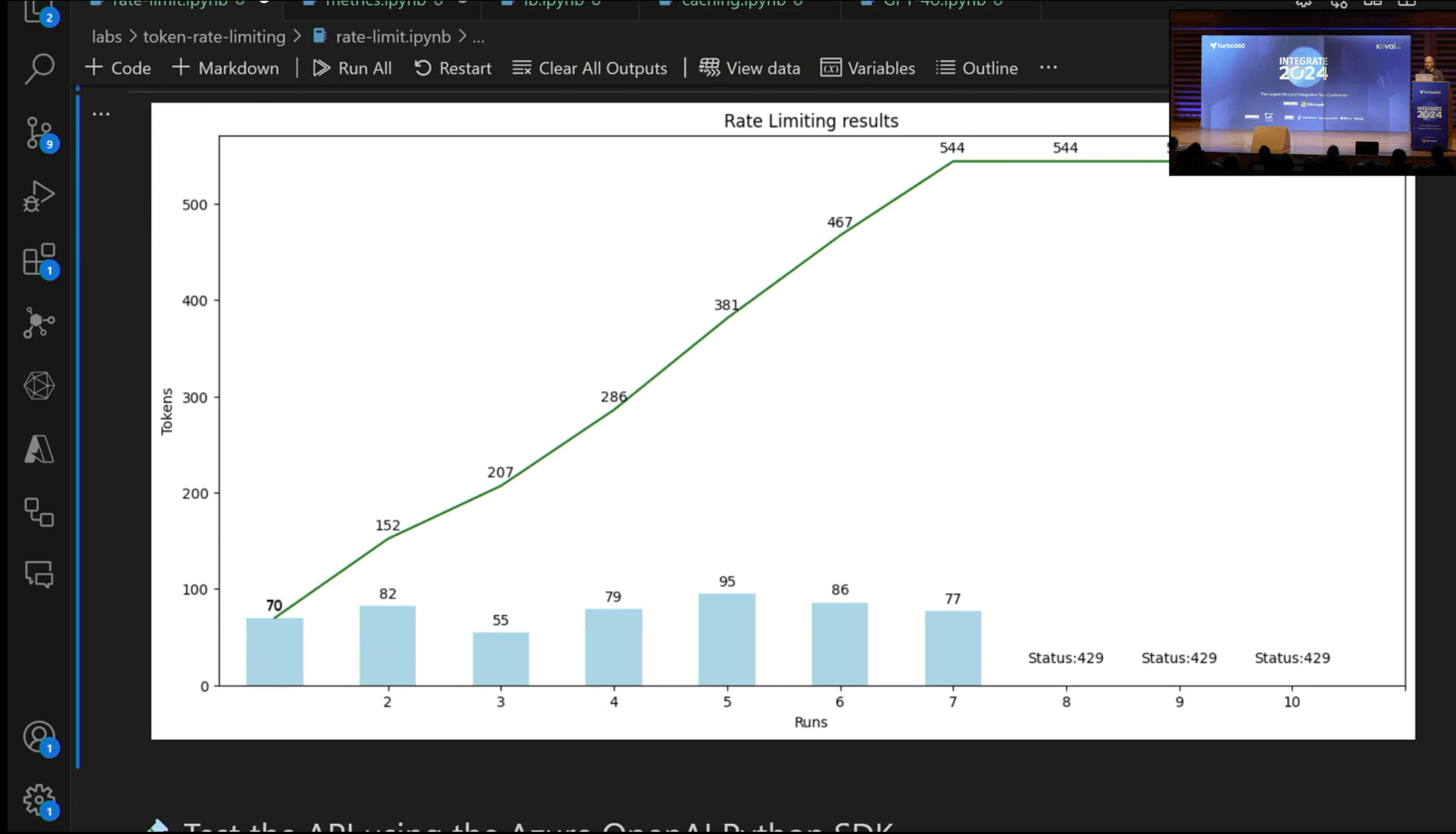

Alexandre showed how to create a new OpenAI service for his API by creating policies for managing token consumption and usage. He also explained how to track token usage with a keyless approach. He then switched gears to show the token rate limit demo, which helps users experiment with their APIs in APIM.

He used the Python SDK for OpenAI in a Jupyter notebook and used the pandas library to generate the graphs.

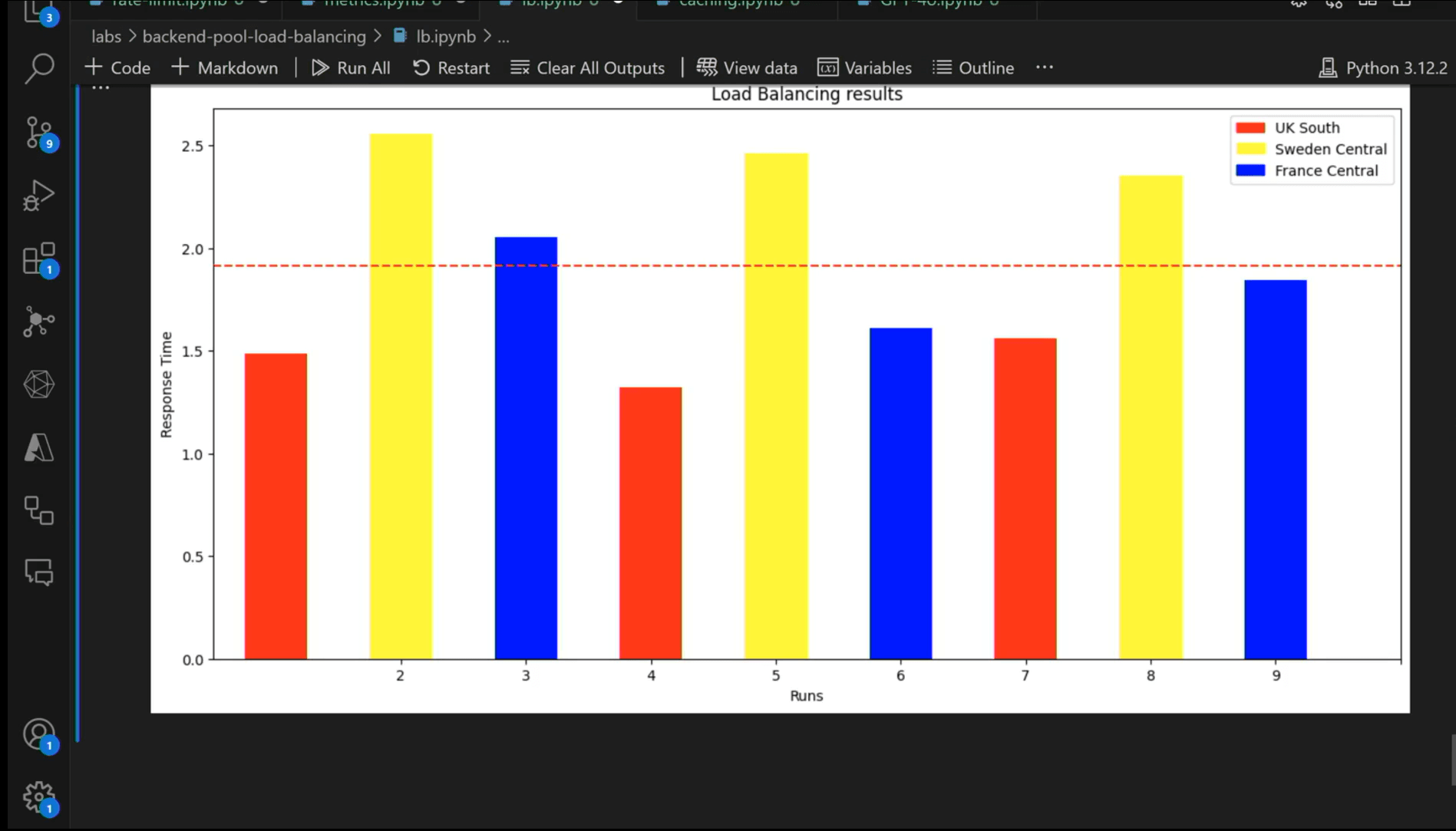

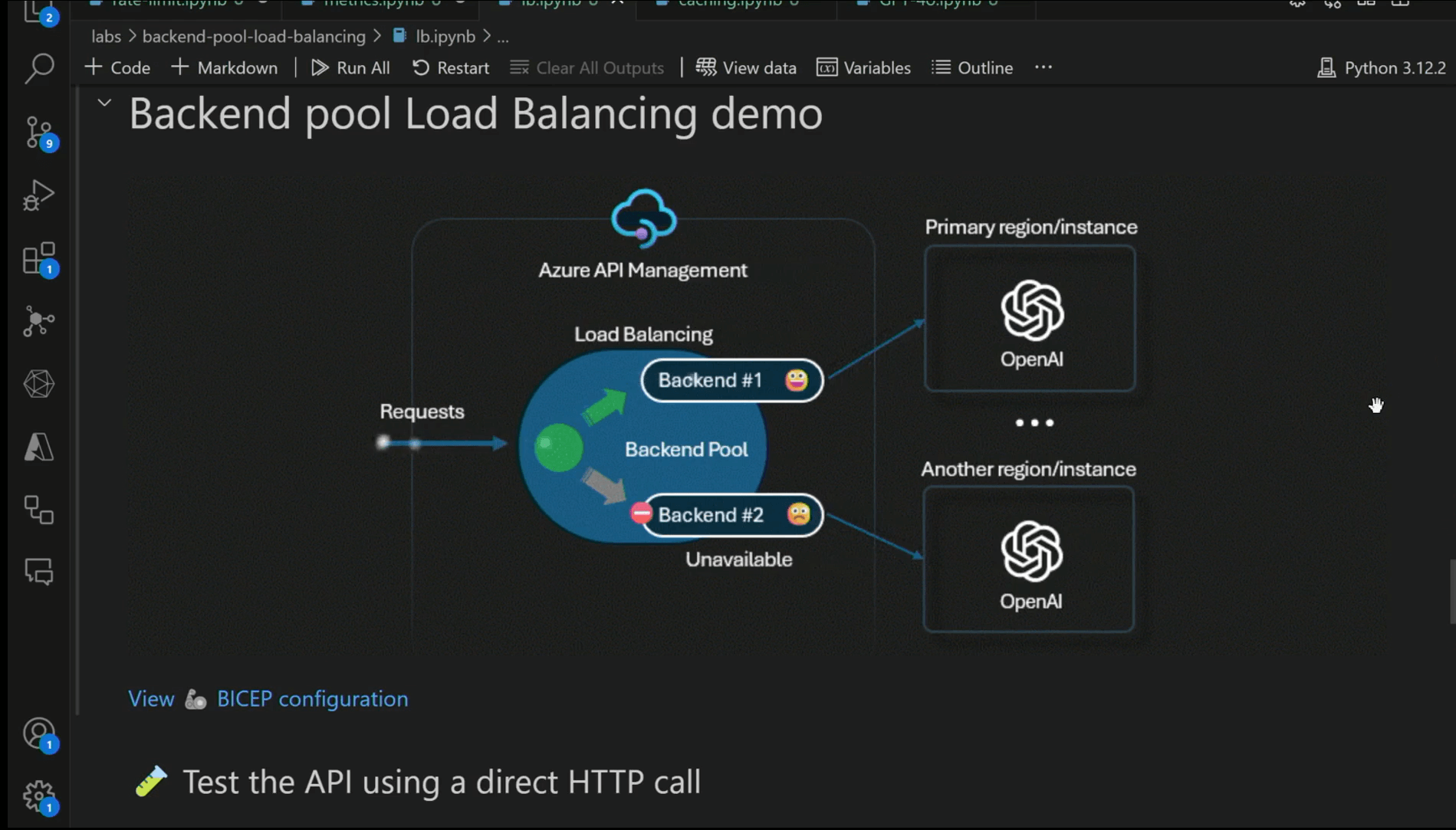

A backend pool load-balancing demo showed how applications in different regions can use load balancing through mechanisms such as round robin and a few others, making it possible to experiment with the policies and, as a by-product, see how they behave across different regions.
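Round-robin selection across a pool of regional backends can be sketched as follows. This is a generic illustration rather than the API Management backend pool implementation; the `BackendPool` class, the region names, and the health-marking behaviour are hypothetical.

```python
import itertools


class BackendPool:
    """Round-robin selection over a fixed set of backends, skipping unhealthy ones."""

    def __init__(self, backends):
        self._backends = list(backends)
        self._cycle = itertools.cycle(self._backends)
        self._unhealthy = set()

    def mark_unhealthy(self, backend):
        self._unhealthy.add(backend)

    def next_backend(self):
        # Try each backend at most once per call so the loop always terminates.
        for _ in range(len(self._backends)):
            candidate = next(self._cycle)
            if candidate not in self._unhealthy:
                return candidate
        raise RuntimeError("no healthy backends available")


pool = BackendPool(["eastus", "westeurope", "australiaeast"])
first_round = [pool.next_backend() for _ in range(4)]

pool.mark_unhealthy("westeurope")  # e.g. the endpoint started throttling
second_round = [pool.next_backend() for _ in range(3)]
print(first_round, second_round)
```

Requests are spread evenly while every backend is healthy, and traffic flows around a backend once it is taken out of rotation.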

Conclusion

Finally, they concluded the session with a comprehensive summary of their demonstration and added that every application will be reinvented with AI and new apps will be built like never before.