It is that time of the year again for the most awaited conference on Microsoft Integration. Here are the highlights of Day 1 at #INTEGRATE 2019 UK.

Table of Contents

- Integrate 2019 – Welcome Speech – Saravana Kumar

- Key Note – Jon Fancey

- Logic Apps Update – Kevin Lam

- API Management: Update – Vlad Vinogradsky

- The Value of Hybrid Integration – Paul Larsen

- Event hubs update – Dan Rosanova

- Service Bus update – Bahram Banisadr

- How Microsoft IT does Integration – Mike Bizub

- Enterprise Logic Apps – Divya Swarnkar

- Serverless real use cases and best practices – Thiago Almeida

- Microsoft Flow – Kent Weare & Sarah Critchley

Integrate 2019 – Welcome Speech – Saravana Kumar

With bright weather outside and a spectacular number of attendees gathered, Saravana Kumar opened the day with the welcome speech. INTEGRATE happens annually, and this year many new people joined the event. The event was initially focused on BizTalk Server and, over the last few years, has expanded its scope to integration technologies across the Microsoft space.

A big thanks to all the participants, speakers and sponsors for making this happen year after year. Your support means a lot to us.

What started with a humble 50 participants in 2012 has now become a premier conference, with close to 500 attendees in its 8th year.

This year the event features 15 speakers from the Microsoft product group in Redmond. With this line-up, if you can’t find your answers here, you probably won’t get a better chance anywhere else.

As a community-focused organization, INTEGRATE is our biggest event, and we also sponsor other initiatives such as:

Integration Monday – a weekly webinar on Microsoft integration technologies presented by MVPs, architects, technology leads and at times by the Microsoft product group. It has now been running for over 140 weeks.

Middleware Friday – A big shout-out to Kent Weare & Steef Jan Wiggers, who have regularly contributed to this initiative by publishing short videos on integration concepts and tips. It recently crossed its 100th episode and is still going.

Both the above initiatives are a part of the Integration User Group.

This year, we launched two new initiatives:

Serverless Notes – A lot is now happening in the serverless space, and we bring content and tips together in a single place.

Integration Playbook – Michael Stephenson, with his 10+ years in the integration space, publishes interesting content about integration architecture patterns, design patterns, etc.

Both knowledge bases are published through our self-service SaaS tool, Document360.

Launching our new Identity

BizTalk360 is our flagship product, and for many years we have been known by this name as our brand. In recent years, we have come up with a few new products like Turbo360, Atomic Scope, and Document360. All these products now have paying customers and are scaling up. It is only right for us to have an umbrella brand that reflects us as a multi-product company, so we leveraged our registered entity name – Kovai Ltd.

Hereafter, we will be known as Kovai.co. You can read our growth story here.

Key Note – Jon Fancey

Who better to set the tone for the next 3 days than Jon Fancey, Principal PM at Microsoft, who delivered a power-packed keynote titled “Beyond Integration”.

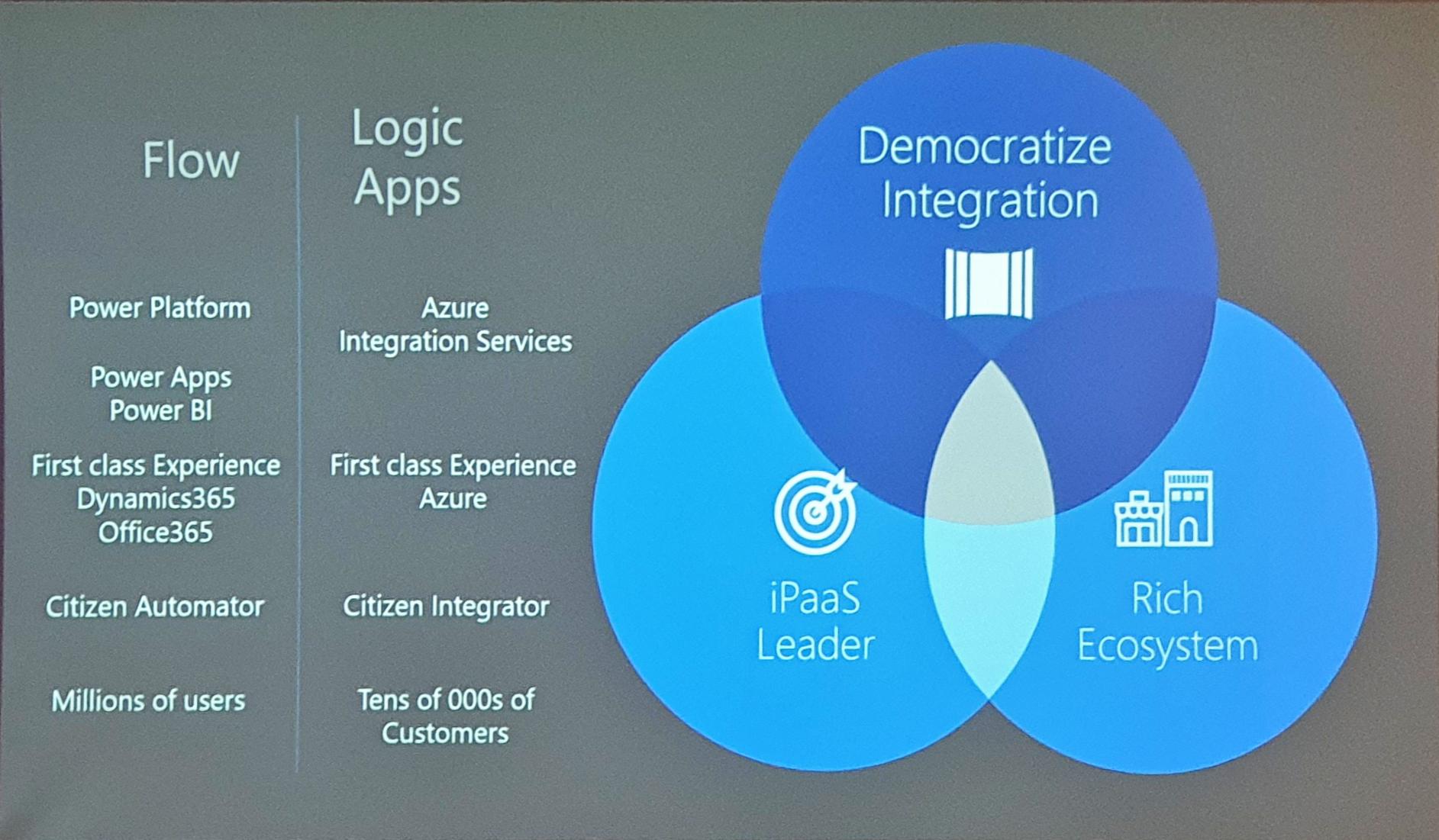

He started by revisiting the 2015 vision set when the Logic Apps team was formed: Microsoft wanted to be a leader in the iPaaS space, not with BizTalk alone but with other products such as SSIS as well, and to build a rich ecosystem around it with significant investment. Above all, they wanted to democratize integration.

In terms of democratization, there are the Logic Apps and Flow teams. Flow, along with the Power Apps platform and Power BI, lets citizen developers report on and integrate data without writing any code. Together they provide a first-class experience with Dynamics, Office 365 and Azure Integration Services (AIS).

In 2017, Microsoft made it into the Gartner Magic Quadrant for iPaaS, and it remains there this year.

Today the iPaaS platform has 300+ connectors for Flow, Logic Apps, Functions, etc. These are not only Microsoft connectors; a lot of connectors have also been open-sourced. He quoted Gartner:

The more you innovate, the more you need to integrate

i.e., the more platforms you have, the more connectivity you need to enable between them.

Initially, integration was focused on BizTalk, which in turn is centered around B2B integration and EDI. Today it has expanded to many more possibilities like IoT, ETL, MFT, etc. Integration is now highly distributed and is moving away from the hub-and-spoke model.

As a part of the keynote, Jon Fancey invited 3 enterprise customers who partnered with Microsoft on their Integration transformation.

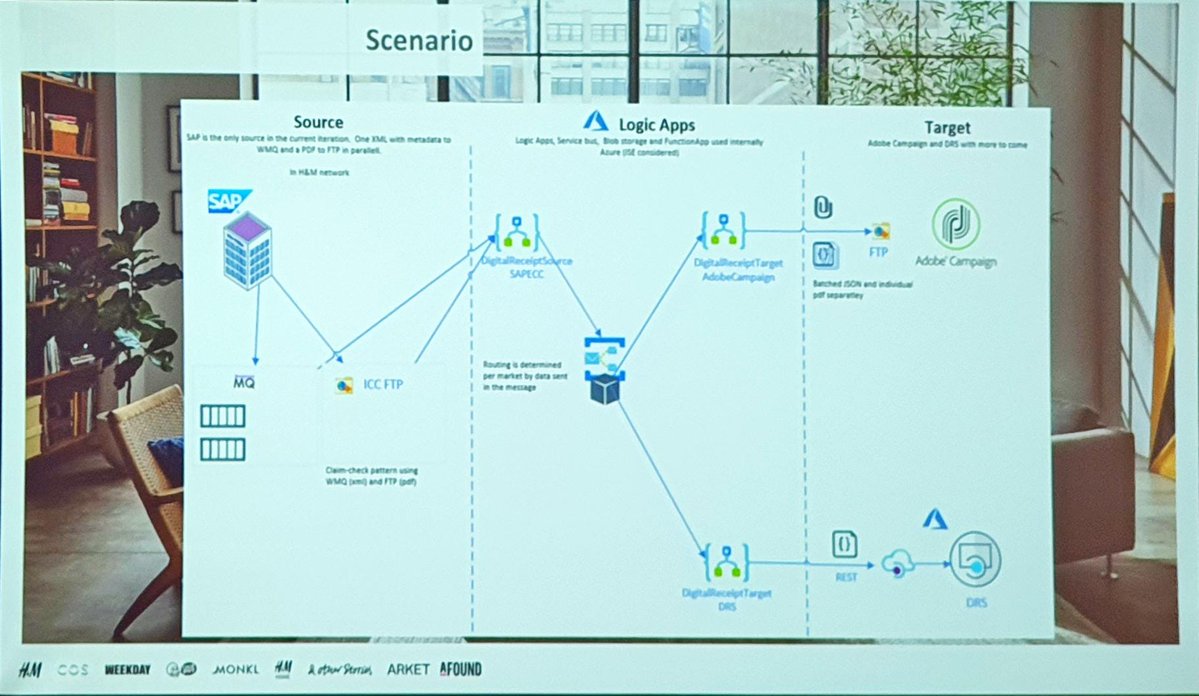

Case Study 01: Ramak Robinson, Area Architect Integration from H&M

She presented the case study of H&M, which has over 5,000 stores and 180,000 employees. She explained how they wanted to transform H&M from an online store into an omnichannel experience, letting customers buy from wherever they are, using any device or channel.

With technology as a core driver of their value system, they aim to be the most loved design group in the world, delivering through technology with a stronger customer focus.

With 630 integrations built on BizTalk, IBM MQ, in-house solutions and Azure Service Bus, they wanted to transition to the cloud and leverage its benefits: retiring old applications or replacing them with SaaS or cloud-native applications.

They started using Integration Service Environments (ISE) to host their Logic Apps, providing a direct connection to on-premises systems without needing the On-Premises Data Gateway, and processing more than 2 million messages per day. They are looking at replacing BizTalk with Logic Apps and Azure Functions.

She also presented a digital receipt solution hosted on Azure, which they are building in close collaboration with Microsoft.

Case Study 02: Daniel Ferreira, SHELL

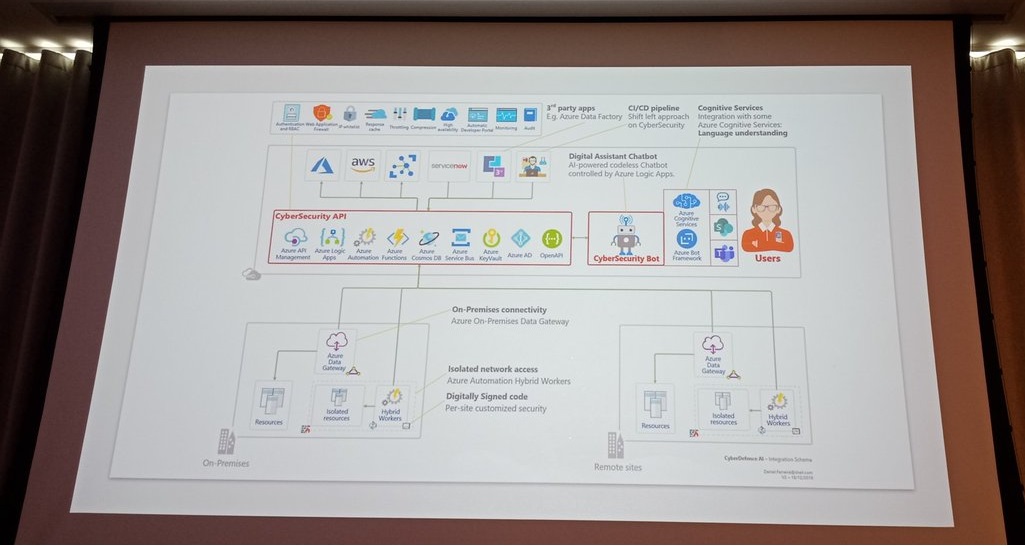

Daniel demonstrated SHELL’s integration transformation, which uses APIs and bots to support their cybersecurity operations. The APIs are managed through API Management, and the entire API backend is built using Logic Apps, making it completely serverless.

One of the reasons to use API Management is its caching feature, which enables faster responses. Their bots are integrated with Microsoft Teams.

Daniel presented an interesting demo of their bot implementation, integrated with Microsoft Teams, built using Logic Apps and managed through Azure API Management.

Case Study 03: Vibhor Mathur, Anglo Gulf Trade Bank (AGTB) project

They wanted to optimize the cost of operations and automate wherever possible. They also wanted an architecture that is lean, scalable and secure. To use PaaS offerings as much as possible while keeping security tight, Logic Apps ISE was used.

The entire platform was a huge integration challenge because they decided to use commercially available products. The whole integration layer is built using Azure Integration Services, including Logic Apps, Service Bus and API Management. Strong engineering principles were followed, using Azure DevOps to manage the repository, run CI/CD pipelines, and produce code quality reports and nice dashboards.

They also implemented Azure Active Directory, which provides multi-factor and SSO authentication, to manage identity. Azure AD B2B is used for partner integration and Azure AD B2C for customers.

Azure Security Centre was used for threat protection. Azure Storage accounts helped make the bank highly available and simulate failover. Observability of the platform was enabled through Log Analytics.

This allowed them to monitor the platform seamlessly, including rules and queries, across IaaS and PaaS.

In short, they wanted to achieve:

Resilience – making the bank’s security more robust with Azure Security Centre and Sentinel.

Relevance – supporting diverse types of organizations and bespoke systems, with a lean team running these integrations.

Ready for the future – exposing APIs so that partners can explore the offering.

Finally, Jon Fancey closed the keynote with a set of questions to the audience that set the context for the rest of the event.

What are you going to integrate, automate, migrate, accelerate, modernize, disrupt, change and create? The tools are now at your disposal.

Logic Apps Update – Kevin Lam

Kevin Lam shared some interesting updates about Logic Apps. He was very excited about #Integrate2019 and kicked off the session with an introduction to the integration world. He also explained how connectors play a major role in Logic Apps.

- You can also create your own custom connectors and publish them to the Azure Marketplace

- In the last 6 months alone, Logic Apps gained 38 new connectors

- That’s so cool! Logic Apps now supports almost 300 connectors

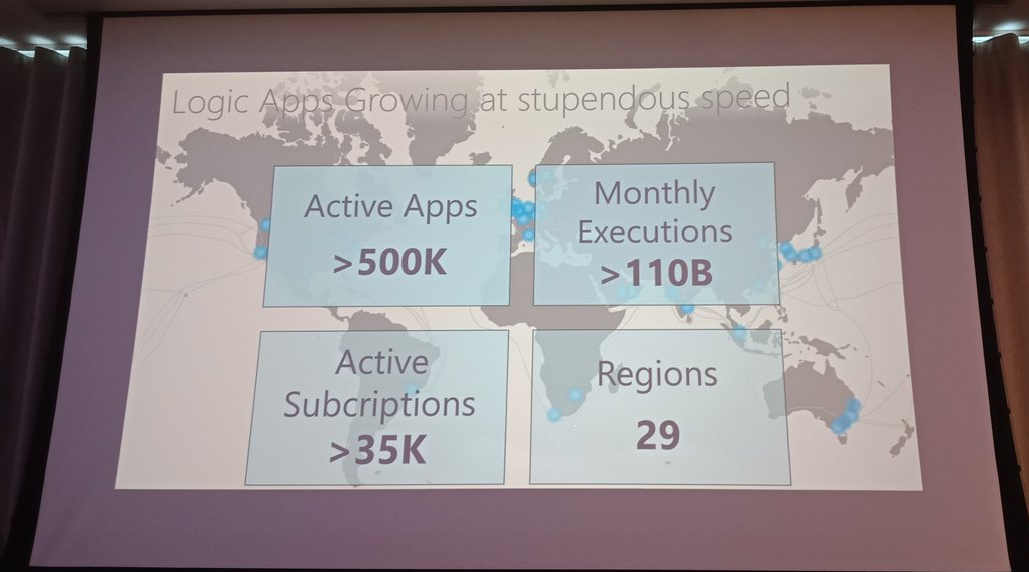

Logic Apps is growing all around the world: it is available in 29 regions, with a large number of active apps up and running.

35,000 subscriptions use Logic Apps, with half a million active logic apps running in the service and 4 billion actions executed every day. The team also plans to expand to 10 more regions soon.

Integration with Logic Apps

Logic Apps helps with integration through five key capabilities. Fasten your seat belts! Let’s explore them with Kevin.

Service Orchestration

Designer or Code – You can create logic apps using designer view or code view.

Concurrency Control – You can use concurrency control for push triggers.

Scheduling – Schedule your logic apps to run at specific business hours.

Error Handling – Try/catch patterns help you handle errors efficiently.

Long Running – You can perform long running tasks with Durable Functions.

Message Handling

Message Protocol – It supports many protocols such as SOAP, SMTP, etc.

Message Types – Message types such as JSON, XML and plain text are supported.

Communication Pattern – Your logic app can be designed with an async or sync pattern.

Message Architectural Pattern – It is the same as Sequential Convoy in BizTalk

Data Wrangling – It maps your data from one format to another format.

B2B – It allows you to monitor and manage your B2B Logic Apps.

Monitoring

History – You can view the run history and trigger history.

Diagnostics – It helps you to check the status of the run and you can resubmit the failed run from the Azure portal.

Tracking – You can track your business data using log analytics.

Instance Management – You can also efficiently manage your instance from the portal.

Security

RBAC – Admins can configure roles for different users as required.

Auditing – Each user action in the portal is captured.

Static IP addresses & IP communications – You can efficiently secure your environment using these IP addresses.

Roll access keys – You can regenerate (roll) access keys for your specified roles.

Encrypted at rest – All your messages are properly encrypted at rest.

What’s new and upcoming in Logic Apps

Service Orchestration

What’s New?

- Sliding Window trigger

- Static Results
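The Sliding Window trigger processes data in contiguous, non-overlapping time windows and, if runs were missed, goes back and covers the skipped windows. As a rough illustration of that windowing logic (not the trigger’s actual implementation), here is a minimal sketch; the function name `sliding_windows` and the example times are hypothetical.

```python
from datetime import datetime, timedelta
from typing import Iterator, Tuple

def sliding_windows(start: datetime, now: datetime,
                    interval: timedelta) -> Iterator[Tuple[datetime, datetime]]:
    """Yield every complete [window_start, window_end) interval from start up to now.

    Windows are contiguous and non-overlapping, so if several intervals elapsed
    since the last run (e.g. after downtime), all of them are yielded in order.
    """
    window_start = start
    while window_start + interval <= now:
        window_end = window_start + interval
        yield window_start, window_end
        window_start = window_end  # next window starts exactly where this one ended

if __name__ == "__main__":
    start = datetime(2019, 6, 3, 9, 0)
    now = datetime(2019, 6, 3, 10, 20)
    for ws, we in sliding_windows(start, now, timedelta(minutes=30)):
        print(ws.time(), "->", we.time())
```

The incomplete 10:00 to 10:30 window is not emitted until it has fully elapsed, which is what keeps the windows free of gaps and overlaps.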

What’s Next?

- Type-handling

- Create with config and templates

- Download parameterized template

Message Handling

What’s New?

- RosettaNet

- EDI core connectors

- Blockchain

What’s Next?

- Service Bus with claim-check

- AMQP action
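The claim-check pattern mentioned above stores a large payload in external storage and sends only a small reference (the "claim check") through the broker; the receiver uses that reference to fetch the payload. The in-memory sketch below only illustrates the pattern: a dict stands in for blob storage, a `queue.Queue` stands in for a Service Bus queue, and the threshold and function names are hypothetical, not how the upcoming Logic Apps feature is implemented.

```python
import queue
import uuid
from typing import Dict

# Hypothetical stand-ins: a dict plays the role of blob storage and a Queue
# plays the role of a Service Bus queue.
blob_store: Dict[str, bytes] = {}
bus: "queue.Queue[dict]" = queue.Queue()

# Assumed size limit in bytes above which the payload is parked in storage.
CLAIM_CHECK_THRESHOLD = 256 * 1024

def send(payload: bytes) -> None:
    """Send small payloads inline; store large ones and send only a claim check."""
    if len(payload) <= CLAIM_CHECK_THRESHOLD:
        bus.put({"body": payload})
    else:
        key = str(uuid.uuid4())
        blob_store[key] = payload
        bus.put({"claim_check": key})

def receive() -> bytes:
    """Take the next message and, if it carries a claim check, fetch the payload."""
    msg = bus.get()
    if "claim_check" in msg:
        # Redeem the claim check and delete the stored payload (a design choice;
        # a real system might keep it until processing succeeds).
        return blob_store.pop(msg["claim_check"])
    return msg["body"]

if __name__ == "__main__":
    send(b"small order")
    send(b"x" * (CLAIM_CHECK_THRESHOLD + 1))
    print(receive())        # b'small order'
    print(len(receive()))   # 262145
```

The broker only ever carries small messages, so message size limits stop being a concern for large documents.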

Monitoring

What’s New?

- Batch trigger tracking

What’s Next?

- Azure Advisor

- Resubmit from action

Security

What’s New?

- System-assigned managed identities

What’s Next?

- Secret inputs/outputs

- Connector Multi-auth

- User-assigned managed identities

- Connections with managed identities

- OAuth request trigger

Kevin then demoed creating a logic app for a simple Service Bus scenario. You won’t believe it: creating the logic app was faster than ordering a pizza. In the connector, you can specify security settings if necessary.

Developer Support

One of the major tools used to create logic apps is Visual Studio 2019. You can define your logic app via an ARM template and deploy it straight to Azure from Visual Studio 2019. Cloud Explorer also makes deployment easier.

Visual Studio Code, a lightweight code editor, also helps you create logic apps via ARM templates.

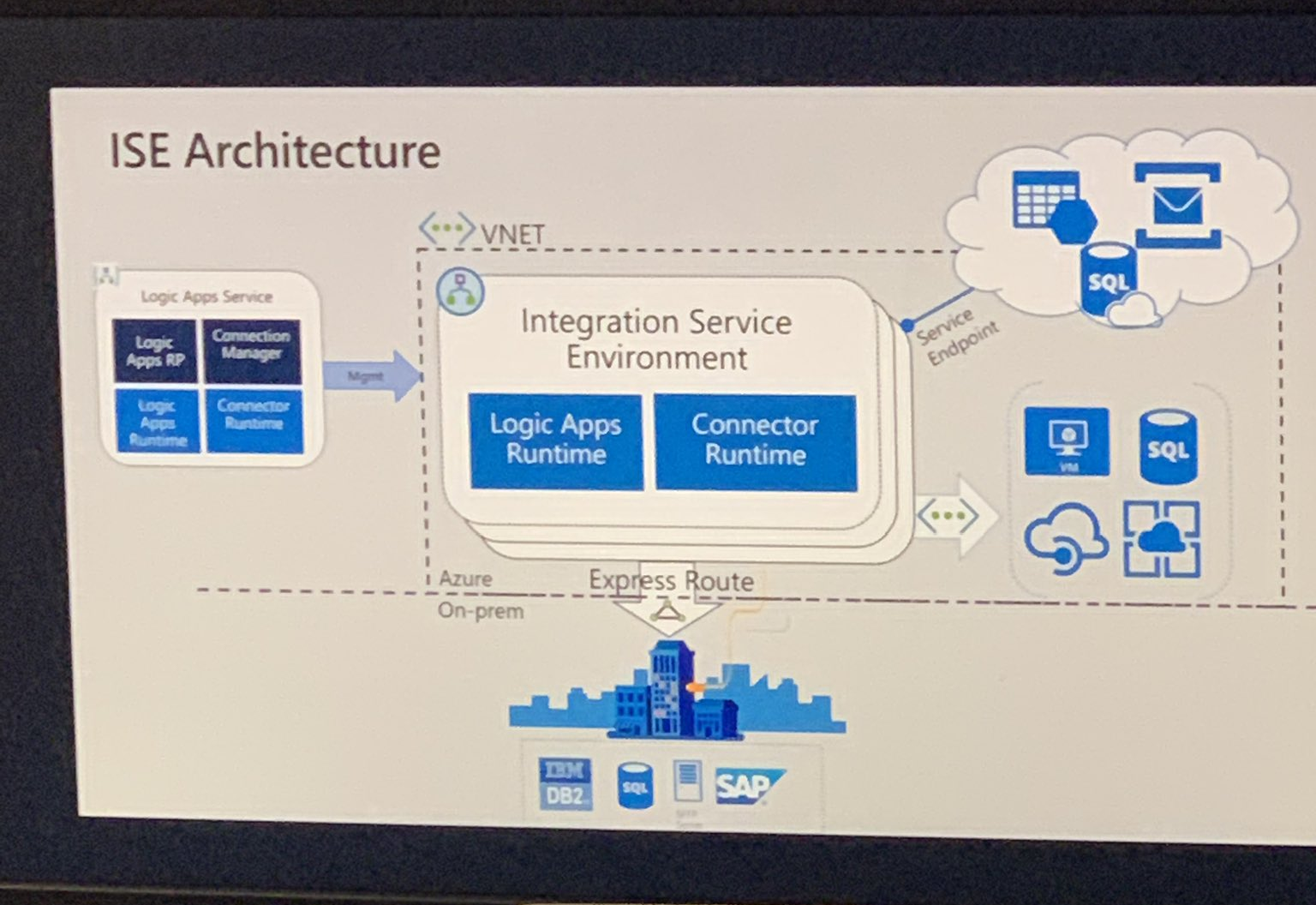

Integration Service Environment (ISE)

ISE is a private, dedicated environment for running your logic apps. It gives you your own private static IPs, which can be accessed efficiently. He also briefly explained the ISE architecture.

ISE supports lots of connectors and helps you manage your custom connectors efficiently. You can have your own SSO, and you can scale up instances as needed. These private dedicated environments can be multi-tenanted as per user requirements. You can create an ISE from VS2019 and easily deploy the environment to Azure.

API Management: Update – Vlad Vinogradsky

Vlad started the session by explaining the API Management service, which API providers use to expose APIs to app developers. APIM consists of three components: a gateway, a management portal with the configuration database, and a developer portal. API providers work with the management plane and the API Management service through several interfaces. APIM works as a façade, providing one place to manage access and control API usage.

APIM is integrated with other Azure services and capabilities such as observability, the networking stack, virtual networking, Azure AD, Event Hubs, etc.

Recent developments

- Policies – Policies encapsulate API behavior and can be configured at different scopes. A policy is a collection of statements executed sequentially on the request or response of an API. The team has added a new supporting element for encrypted documents, which enables both simple and advanced validations.

- Managed Identities – A very good way to authorize services to each other in Azure. You can now enable managed identities in API Management, and policies related to managed identities have been released for authorizing calls to backend services.

- Protocol Settings for Managed Identities

- HTTP/2 support on the client-facing side of the gateway

- Bring Your Own Cache (BYOC) – You can bring your own external cache, such as Redis Cache. Using an external cache helps overcome a few limitations of the built-in cache, and all the policies remain unchanged.

- Subscriptions – You no longer have to associate a user with a key. If you want to create a key for an app in your organization, there is no need for a user: just create a key and start using it to access the API. You can also enable tracing on any key you want; by default, only the admin subscription has tracing enabled.

- Observability – It is now more symmetric: you can configure the same settings for both Azure Monitor and App Insights, enable or disable settings for the whole API tenant, conserve resources by turning on sampling, specify additional headers to log, and more.

- API Management Functions – You can now specify which functions to expose without exposing all the functions in the Function App.

- Consumption Tier – GA last week. Unlike other Azure API Management tiers, the consumption tier exposes serverless properties. It runs on a shared infrastructure, can scale down to zero in times of no traffic and is billed per execution.

- DevOps Resource Kit – An open-source project that provides guidance and components to support the creation and/or extraction of APIs from an API Management instance deployed to Azure. You can find the resource kit in its official GitHub repository.
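Since policies are collections of statements executed sequentially on the request and on the response, the ordering can be pictured as a small pipeline: inbound policies run in order, then the backend is called, then outbound policies run in order. This is a plain-Python model of that ordering only, not APIM’s policy XML or engine; the policy functions and the key value are hypothetical, while `Ocp-Apim-Subscription-Key` is APIM’s default subscription-key header name.

```python
from typing import Callable, Dict, List

Request = Dict[str, str]
Response = Dict[str, str]

def run_pipeline(request: Request,
                 inbound: List[Callable[[Request], Request]],
                 backend: Callable[[Request], Response],
                 outbound: List[Callable[[Response], Response]]) -> Response:
    """Apply inbound policies in order, call the backend, then apply outbound policies in order."""
    for policy in inbound:
        request = policy(request)
    response = backend(request)
    for policy in outbound:
        response = policy(response)
    return response

# --- Hypothetical policies, for illustration only ---

def require_subscription_key(req: Request) -> Request:
    # Reject the call early if the (hypothetical) expected key is missing.
    if req.get("Ocp-Apim-Subscription-Key") != "demo-key":
        raise PermissionError("401: missing or invalid subscription key")
    return req

def add_correlation_id(req: Request) -> Request:
    # Return a new dict so the caller's request is not mutated.
    return {**req, "x-correlation-id": "abc123"}

def strip_server_header(resp: Response) -> Response:
    # Hide backend implementation details from API consumers.
    return {k: v for k, v in resp.items() if k != "Server"}

def echo_backend(req: Request) -> Response:
    return {"Server": "backend-01", "body": "hello " + req["x-correlation-id"]}
```

Because an inbound policy can short-circuit (here by raising), the backend is never called for unauthorized requests, which is one reason a façade like APIM sits in front of the services.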

Upcoming Developments

- New Developer portal – The team has been working on it for the last 6 months. You can learn more about it in Mike Budzynski’s session tomorrow (API Management: deep dive – Part 2)

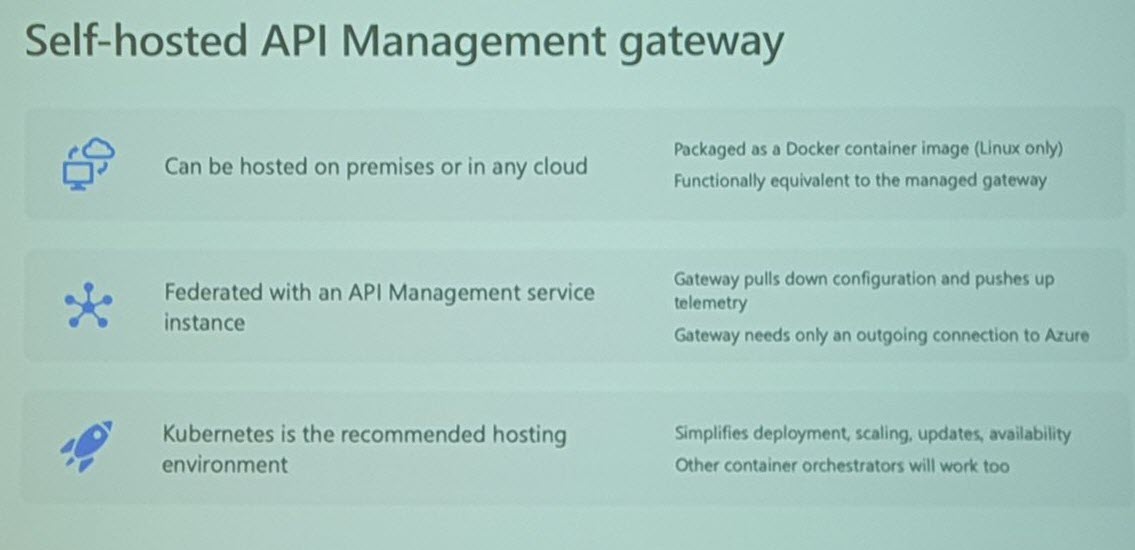

- Self-hosted API Management gateway – API Management has been a cloud-only service, meaning all its components are deployed in the cloud. However, 80-90% of enterprise customers have both cloud and on-premises environments. To address this, the team is making the gateway component deployable in your own environments, both on-premises and in the cloud, so you can manage all your APIs in one place. A preview will be available in the summer.

The Value of Hybrid Integration – Paul Larsen

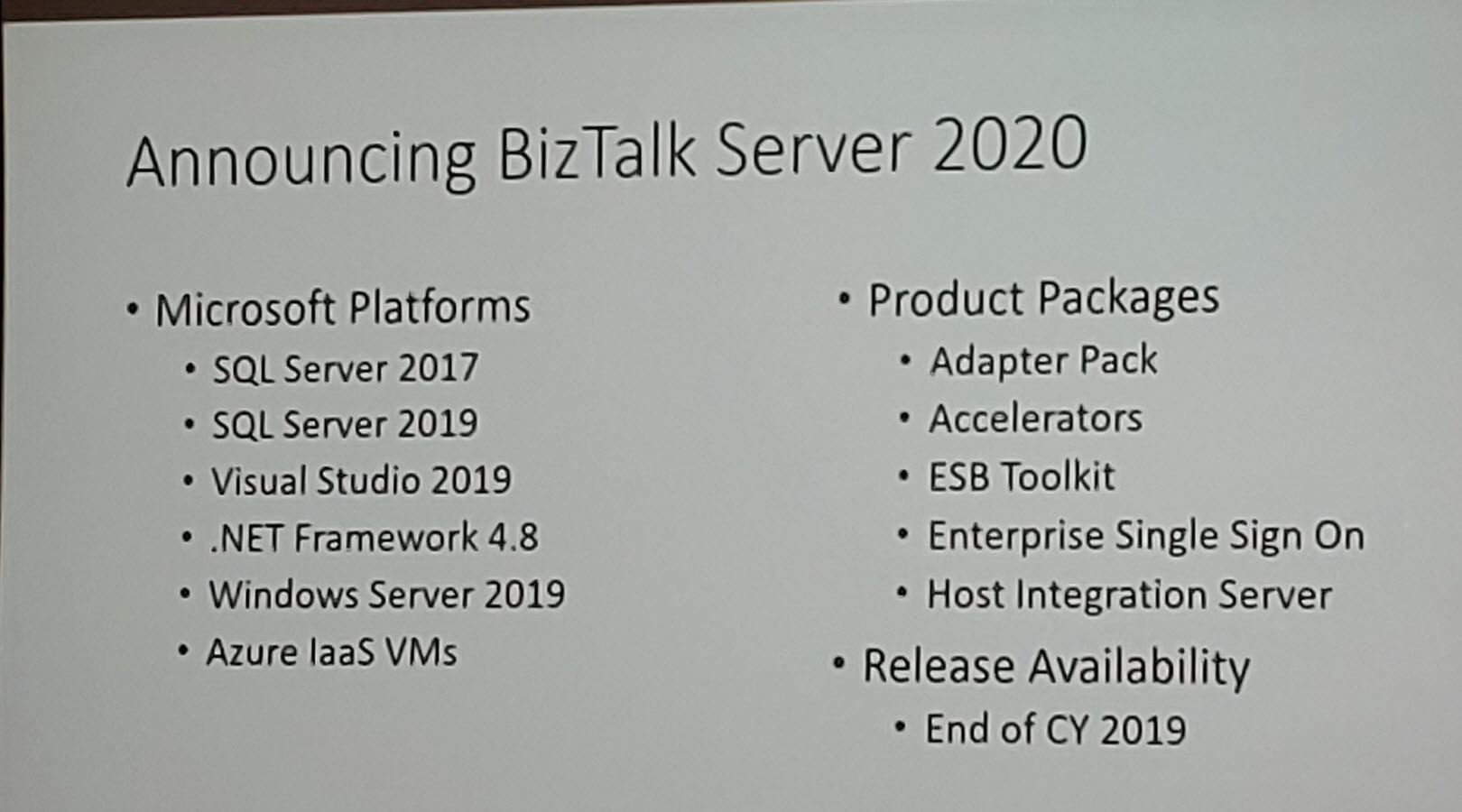

Paul started his session “The Value of Hybrid Integration” by explaining the legacy of BizTalk Server and illustrating the meaning of the word “hybrid” with some examples. He then made the big announcement of BizTalk Server 2020, to be released at the end of 2019.

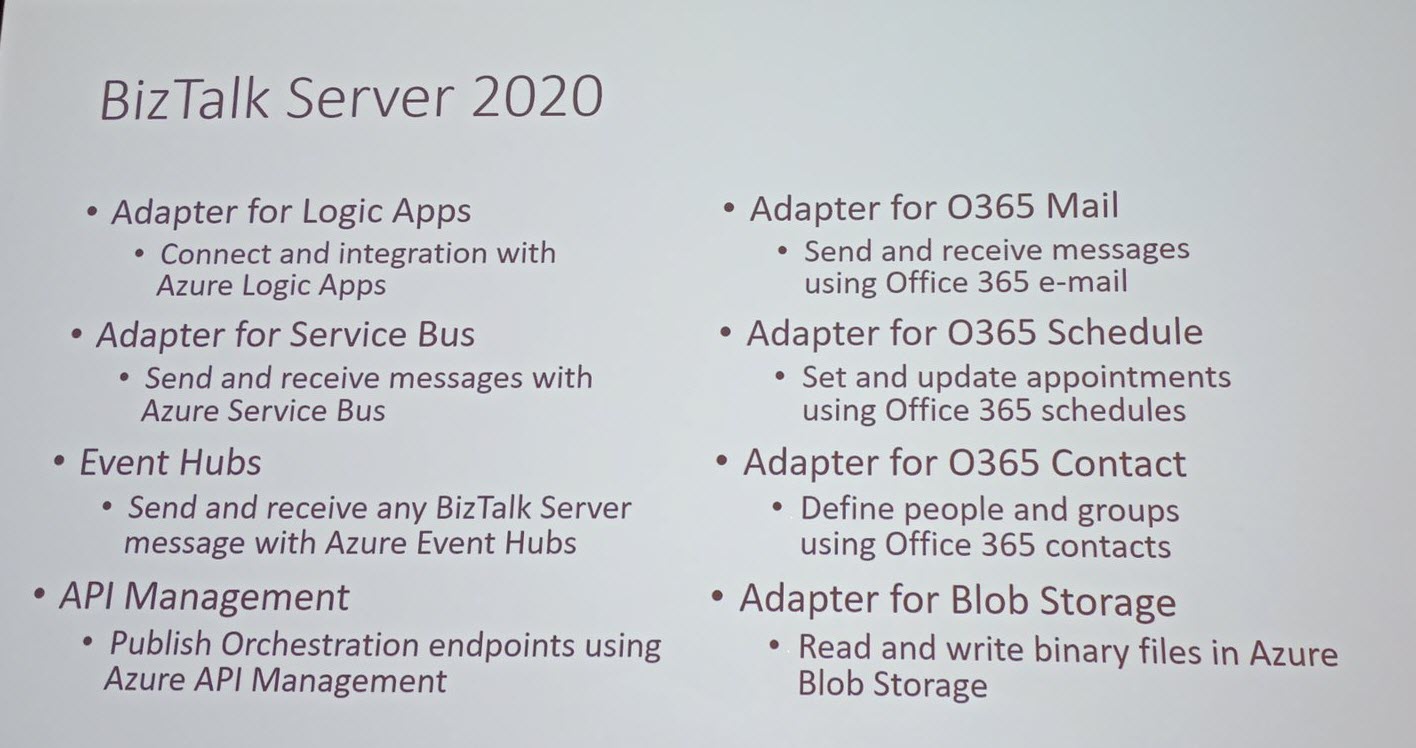

He explained the integration of BizTalk Server with the cloud through adapters for Logic Apps, Service Bus, Event Hubs, API Management, O365 Mail, O365 Schedule, O365 Contact and Blob Storage (Dev).

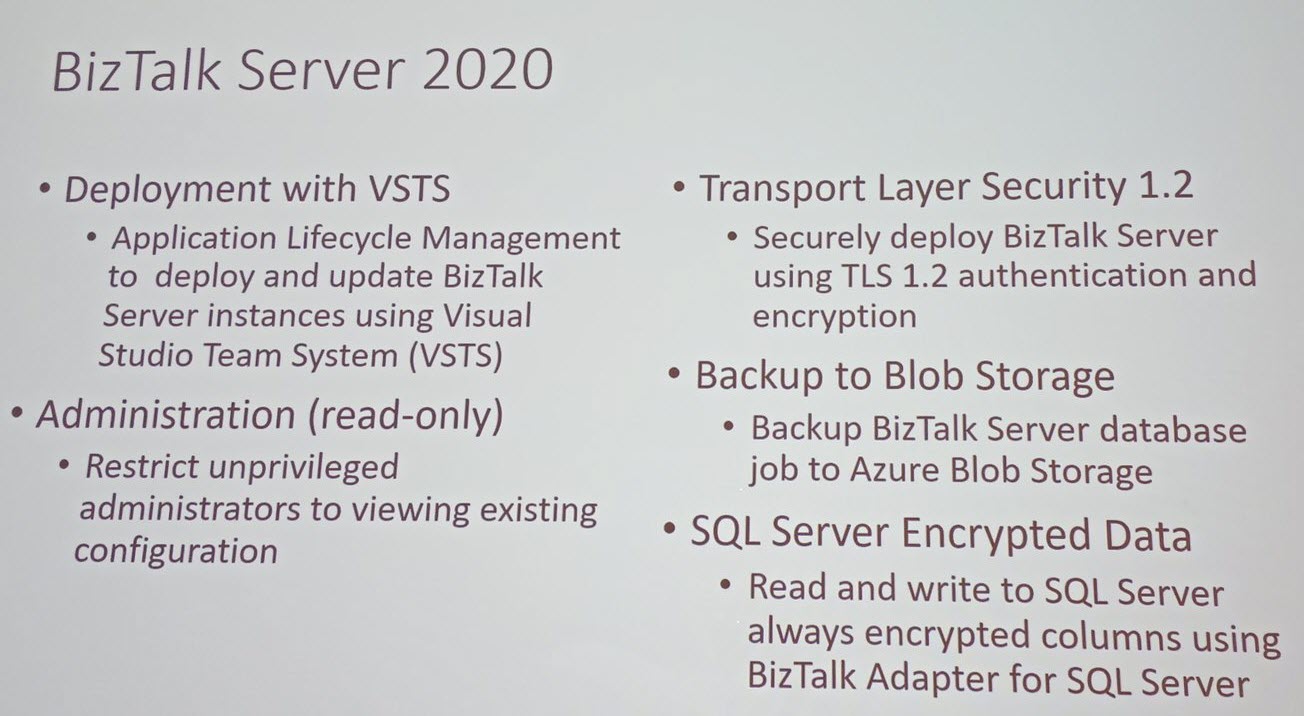

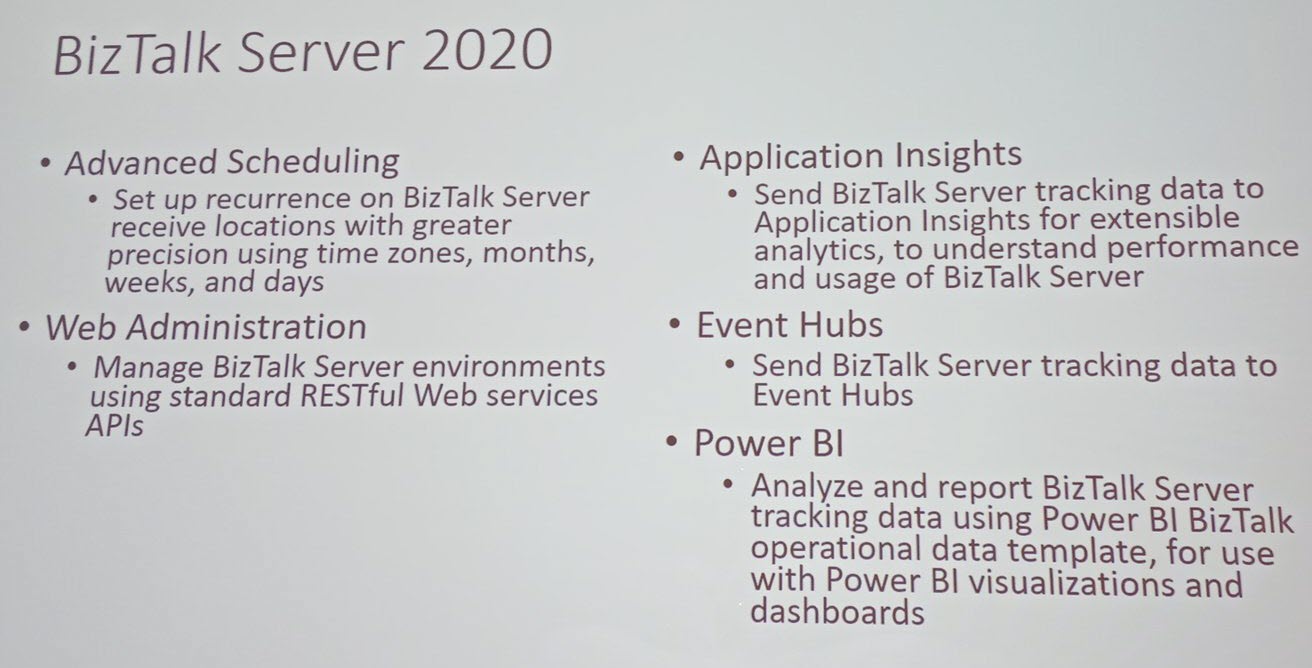

BizTalk Server 2020 can also deploy BizTalk Server instances using VSTS, back up to Blob Storage, and more. It also integrates with App Insights, Event Hubs, Power BI, etc.

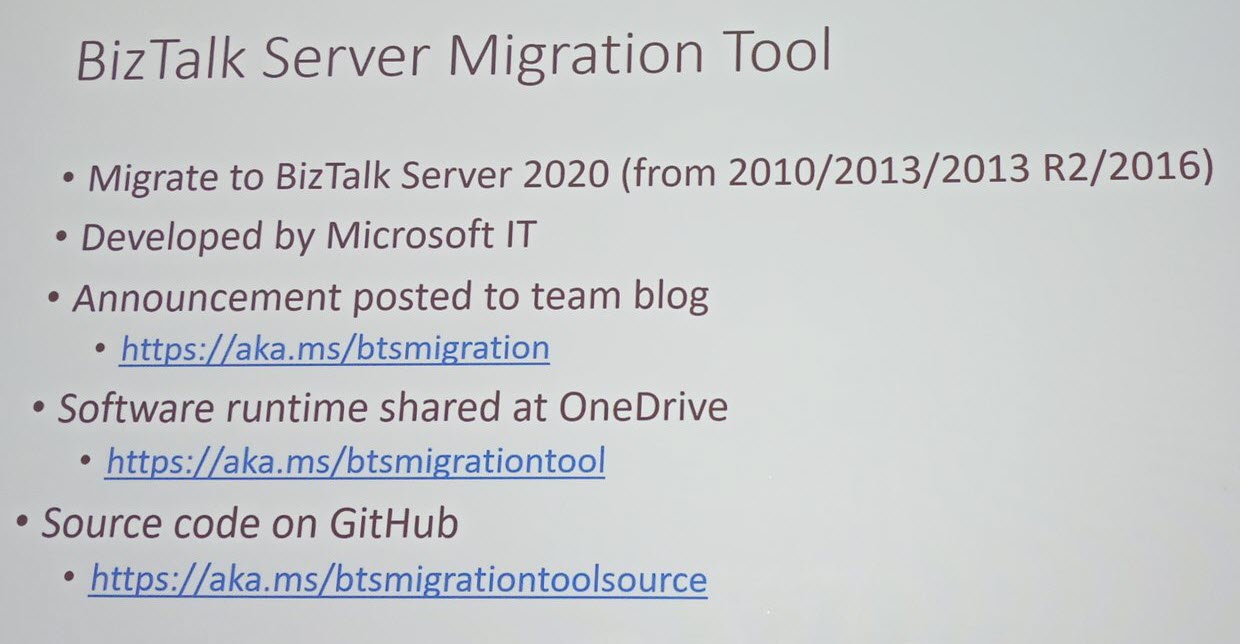

After covering the adapters and integrations, he announced the BizTalk Server Migration tool and Host Integration Server 2020. The migration tool helps dehydrate the configuration of an old BizTalk Server and rehydrate it onto a new Azure IaaS environment or VM.

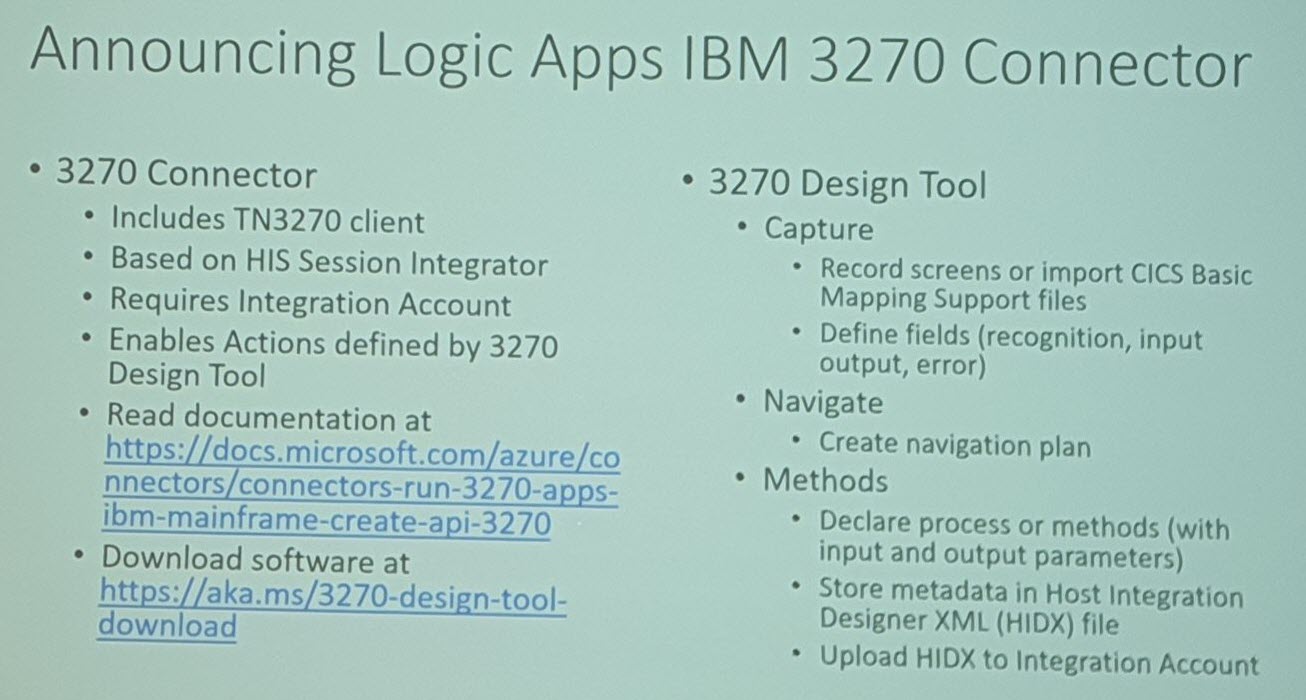

He also announced the Logic Apps connector for 3270 screens, now available in preview, along with a 3270 design tool, and explained where 3270 screens are used and how the 3270 connector can be used.

Following these announcements, he demonstrated the integration of IBM mainframe programs with an Azure cloud application using the 3270 Logic Apps connector.

After the demo, he made a couple more announcements: CICS and IMS screen clients for the 3270/5250 connectors, and VSAM and DDM .NET clients for IBM file systems. Finally, he ended his session by sharing the roadmap and his contact information.

Event hubs update – Dan Rosanova

Dan Rosanova, Group Principal Program Manager at Microsoft and product owner for Azure Messaging, brought the latest updates on Event Hubs.

Dan started his session by describing the earliest messaging pattern, the queue. He explained the fundamentals of how a queue works and highlighted two of its important aspects:

- Server-side cursor

- Competing Consumer Model
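The two queue properties above can be illustrated with a minimal stdlib sketch, not Service Bus itself: the queue acts as the server-side cursor, and because each read removes the message, competing consumers split the work so every message is processed exactly once. The function name `competing_consumers` is hypothetical.

```python
import queue
import threading
from collections import defaultdict
from typing import Dict, Iterable, List, Tuple

def competing_consumers(messages: Iterable[int],
                        n_consumers: int = 2) -> Tuple[Dict[str, List[int]], int]:
    """Distribute messages from one queue across competing consumer threads.

    The queue itself tracks what has been consumed (the server-side cursor):
    once a consumer takes a message it is gone, so each message is handled
    by exactly one consumer. Returns the per-consumer work and the queue size left.
    """
    q: "queue.Queue[int]" = queue.Queue()
    for m in messages:
        q.put(m)
    processed: Dict[str, List[int]] = defaultdict(list)
    lock = threading.Lock()

    def worker(name: str) -> None:
        while True:
            try:
                msg = q.get_nowait()  # destructive read
            except queue.Empty:
                return
            with lock:
                processed[name].append(msg)
            q.task_done()

    threads = [threading.Thread(target=worker, args=(f"consumer-{i}",))
               for i in range(n_consumers)]
    for t in threads:
        t.start()
    for t in threads:
        t.join()
    return dict(processed), q.qsize()
```

Adding more consumers increases throughput, but no two consumers ever see the same message.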

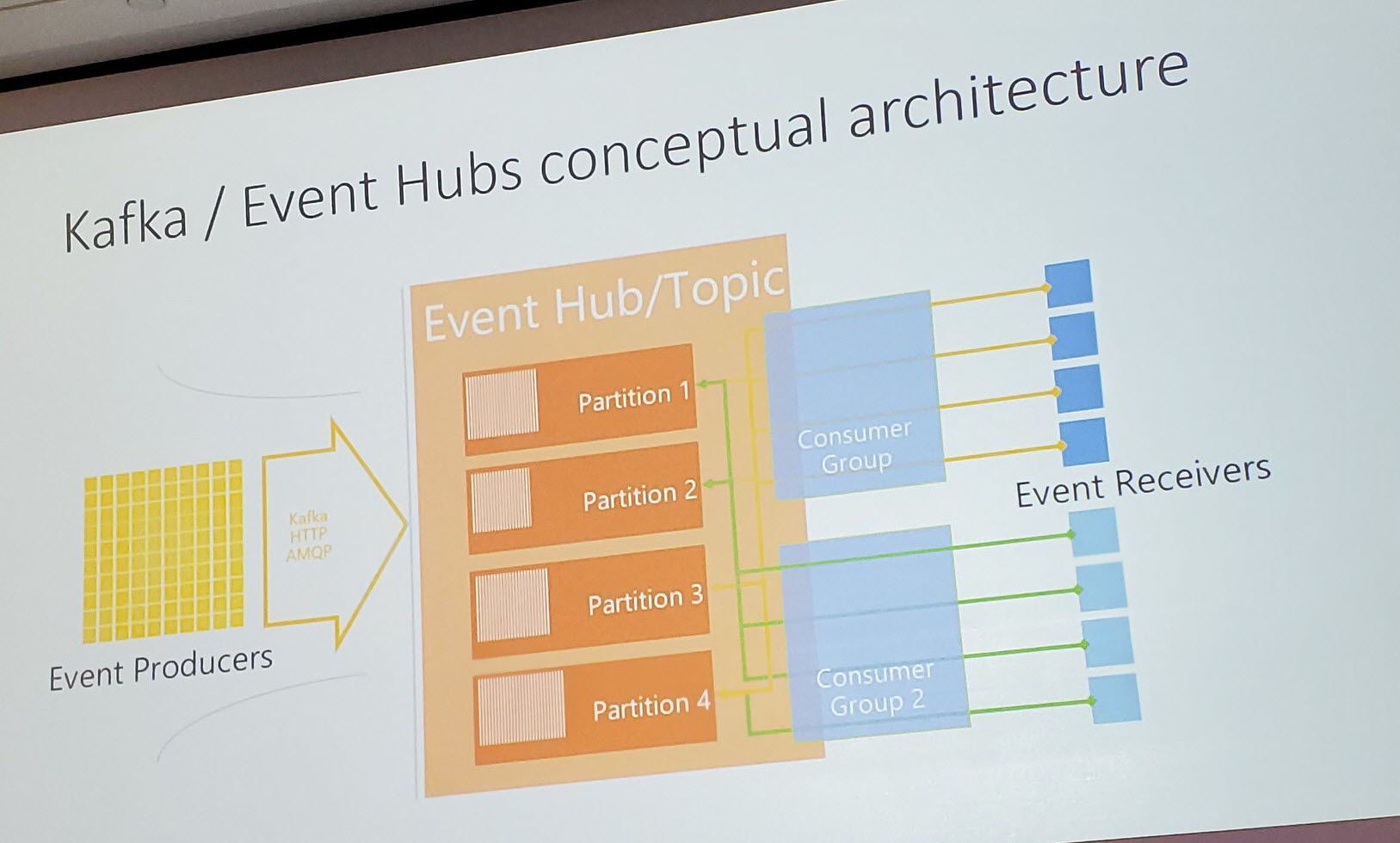

He then explained how Event Hubs and Apache Kafka differ from queues, using the analogy of a cassette, where a stream can be recorded and played back again and again. He used the same analogy to explain Event Hubs partitions.

He walked through the conceptual architecture of Kafka/Event Hubs and how Event Hubs works, highlighting some cool aspects such as:

- Client-side cursor

- Multiple readers can read the same data, since reads are non-destructive
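By contrast with a queue, Event Hubs and Kafka keep an append-only log per partition, and each reader tracks its own offset (the client-side cursor), so reads are non-destructive and a stream can be replayed like a cassette. The sketch below models that idea with hypothetical class names; assigning partitions by a CRC32 hash of the partition key is a simplification, since Event Hubs’ actual hashing is internal to the service.

```python
import zlib
from typing import Dict, List

class PartitionedLog:
    """Append-only log split into partitions; reading never removes events."""

    def __init__(self, partition_count: int) -> None:
        self.partitions: List[List[str]] = [[] for _ in range(partition_count)]

    def append(self, partition_key: str, event: str) -> int:
        # Same key -> same partition (stable hash), which preserves per-key ordering.
        p = zlib.crc32(partition_key.encode()) % len(self.partitions)
        self.partitions[p].append(event)
        return p

class Reader:
    """Each reader owns its cursor (an offset per partition): a client-side cursor."""

    def __init__(self, log: PartitionedLog) -> None:
        self.log = log
        self.offsets: Dict[int, int] = {p: 0 for p in range(len(log.partitions))}

    def read(self, partition: int) -> List[str]:
        start = self.offsets[partition]
        events = self.log.partitions[partition][start:]
        self.offsets[partition] = start + len(events)  # only this reader's cursor moves
        return events

    def rewind(self, partition: int, offset: int = 0) -> None:
        # Replay from an earlier position, like rewinding a cassette.
        self.offsets[partition] = offset
```

Two readers over the same log each get the full stream, and either can rewind without affecting the other.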

A few cool things that come with Event Hubs:

- PaaS Service

- SSL & TLS all the time

- IP Filtering and VNet

- AAD – Managed Identity

- Auto Inflate – scaling up

- Geo Disaster-recovery

- Capture

Dan explained what they built with Event Hubs Capture and how it works:

- Batching on real-time stream

- Policy-based Control

- Uses the Avro format

- Fully integrated with Azure – Raises Event Grid Events, Trigger Functions, Logic Apps

- Does not impact the Throughput

- Offload batch processing from the real-time stream

- Works with Auto Inflate
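Capture batches the real-time stream into files, flushing when either a time window or a size window is reached, whichever comes first. The sketch below models only that flush rule; the class name and limits are hypothetical, and writing Avro files to storage is omitted (a list stands in for the captured files).

```python
from typing import List, Optional

class CaptureWindow:
    """Accumulate events and flush a batch when the size OR time window is reached."""

    def __init__(self, max_bytes: int, max_seconds: float) -> None:
        self.max_bytes = max_bytes
        self.max_seconds = max_seconds
        self.batch: List[bytes] = []
        self.batch_bytes = 0
        self.window_start: Optional[float] = None
        self.flushed: List[List[bytes]] = []  # stand-in for files written to storage

    def add(self, event: bytes, now: float) -> None:
        if self.window_start is None:
            self.window_start = now  # the window opens with the first event
        self.batch.append(event)
        self.batch_bytes += len(event)
        self._maybe_flush(now)

    def tick(self, now: float) -> None:
        """Called periodically so a quiet stream still flushes on the time window."""
        self._maybe_flush(now)

    def _maybe_flush(self, now: float) -> None:
        if not self.batch or self.window_start is None:
            return
        size_hit = self.batch_bytes >= self.max_bytes
        time_hit = now - self.window_start >= self.max_seconds
        if size_hit or time_hit:  # whichever comes first
            self.flushed.append(self.batch)
            self.batch, self.batch_bytes, self.window_start = [], 0, None
```

Because batching happens off to the side of the live stream, downstream batch jobs read the captured files instead of competing with real-time readers.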

He then showed how to set up Capture in the Azure portal, which is a straightforward configuration, and shared that 20% of customers use the feature, a number expected to grow in the coming days.

He pointed out two primary reasons to use Capture:

- To have a copy of the data

- Use cheap Storage

Dan then moved on to Apache Kafka, mentioning a few services that use Kafka internally: telemetry tools like Splunk and IBM QRadar, the Azure messaging stack, and applications built on the Event Sourcing pattern.

He then mentioned four different ways to run Kafka in Azure:

- DIY with IaaS

- Marketplace Offering

- Clustered Option

- PaaS Service

He walked through the steps of setting up Kafka yourself, which is a fairly lengthy configuration.

He then enabled Kafka on Event Hubs, which is a one-click process, and explained how the team pulled it off. He also described how they started with Event Hubs.

Dan then shared some super cool stats for Azure Event Hubs, focusing on availability, which stood at an impressive 99.9998%, and explained how they achieved it.
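To put an availability figure like 99.9998% in perspective, it helps to convert it into the downtime it allows. A small helper (the name is ours) does the arithmetic over a 365-day year; the 99.9% and 99.99% values are included only for comparison and are not claims about any Azure SLA.

```python
MINUTES_PER_YEAR = 365 * 24 * 60  # 525,600, ignoring leap years

def allowed_downtime_minutes(availability_percent: float,
                             period_minutes: float = MINUTES_PER_YEAR) -> float:
    """Return the maximum downtime implied by an availability percentage over a period."""
    return period_minutes * (1 - availability_percent / 100)

if __name__ == "__main__":
    for a in (99.9, 99.99, 99.9998):
        print(f"{a}% -> {allowed_downtime_minutes(a):.2f} minutes/year")
```

At 99.9998%, that works out to roughly 1.05 minutes, or about 63 seconds, of downtime per year.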

Finally, he showed some charts of real-time IoT Hub cluster performance and spoke about the load balancing improvements that were recently made.

Service Bus update – Bahram Banisadr

Premium Service Bus Namespaces

The main update announced for Azure Service Bus is Geo Disaster Recovery. Geo Disaster recovery is available only for Premium Namespaces.

Existing Standard namespaces can be migrated to Premium using the Standard-to-Premium in-place upgrade, which works as follows:

- Create a Premium Namespace within the same region

- Sync the metadata of both namespaces

- On successful migration, receiver clients disconnect and reconnect on their own, which is handled by the client retry policies.

- Finally, the connection string of the existing Standard namespace points to the Premium namespace.

A demo showed the migration of an existing Standard namespace to a Premium namespace.

Enterprise Features

The enterprise features announced are Virtual Network service endpoints and firewall rules. These allow users to restrict access to Premium Service Bus namespaces based on virtual network setup or IP range. They are available in PowerShell and ARM templates.

In the portal, you can choose between two options: All networks or Selected networks. Virtual network rules take precedence, followed by firewall rules. With Selected networks, specific IP addresses and virtual network configurations can be set up.
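The access decision can be sketched as follows, assuming that with Selected networks a request is allowed if it comes from an allowed virtual network subnet (checked first, as described above) or from a public IP inside an allowed range. This models the decision only, not the Service Bus implementation; the function name, subnet identifier and IP ranges are hypothetical.

```python
import ipaddress
from typing import Iterable, Optional

def is_allowed(source_ip: str,
               source_subnet: Optional[str],
               allowed_subnets: Iterable[str],
               allowed_ip_ranges: Iterable[str],
               selected_networks_only: bool = True) -> bool:
    """Decide whether a client may reach the namespace.

    - "All networks": everything is allowed.
    - "Selected networks": virtual network rules are checked first, then IP firewall rules.
    """
    if not selected_networks_only:
        return True
    # 1. Virtual network rule: traffic arriving via a service endpoint from an allowed subnet.
    if source_subnet is not None and source_subnet in set(allowed_subnets):
        return True
    # 2. IP firewall rule: public source IP inside an allowed CIDR range.
    ip = ipaddress.ip_address(source_ip)
    return any(ip in ipaddress.ip_network(cidr) for cidr in allowed_ip_ranges)
```

For example, `is_allowed("203.0.113.42", None, ["app-vnet/workers"], ["203.0.113.0/24"])` is allowed by the IP rule even though the client is outside the virtual network.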

Managed Service Identity and RBAC

Managed Service Identity can be set up by following these steps:

- Leverage Azure Active Directory

- Set up a Service Identity

- Assign a role. For Service Bus, there is a built-in role called Azure Service Bus Data Owner.

- Configure this in the App.config of your client.

- This is how Managed Service Identity is used with Azure Service Bus

SDK Updates

.Net and Java

A major release was done for the Java and .NET SDKs, which recently gained management support, WebSocket support and transaction support.

A Node.js SDK is currently available, and a Python SDK will be available soon.

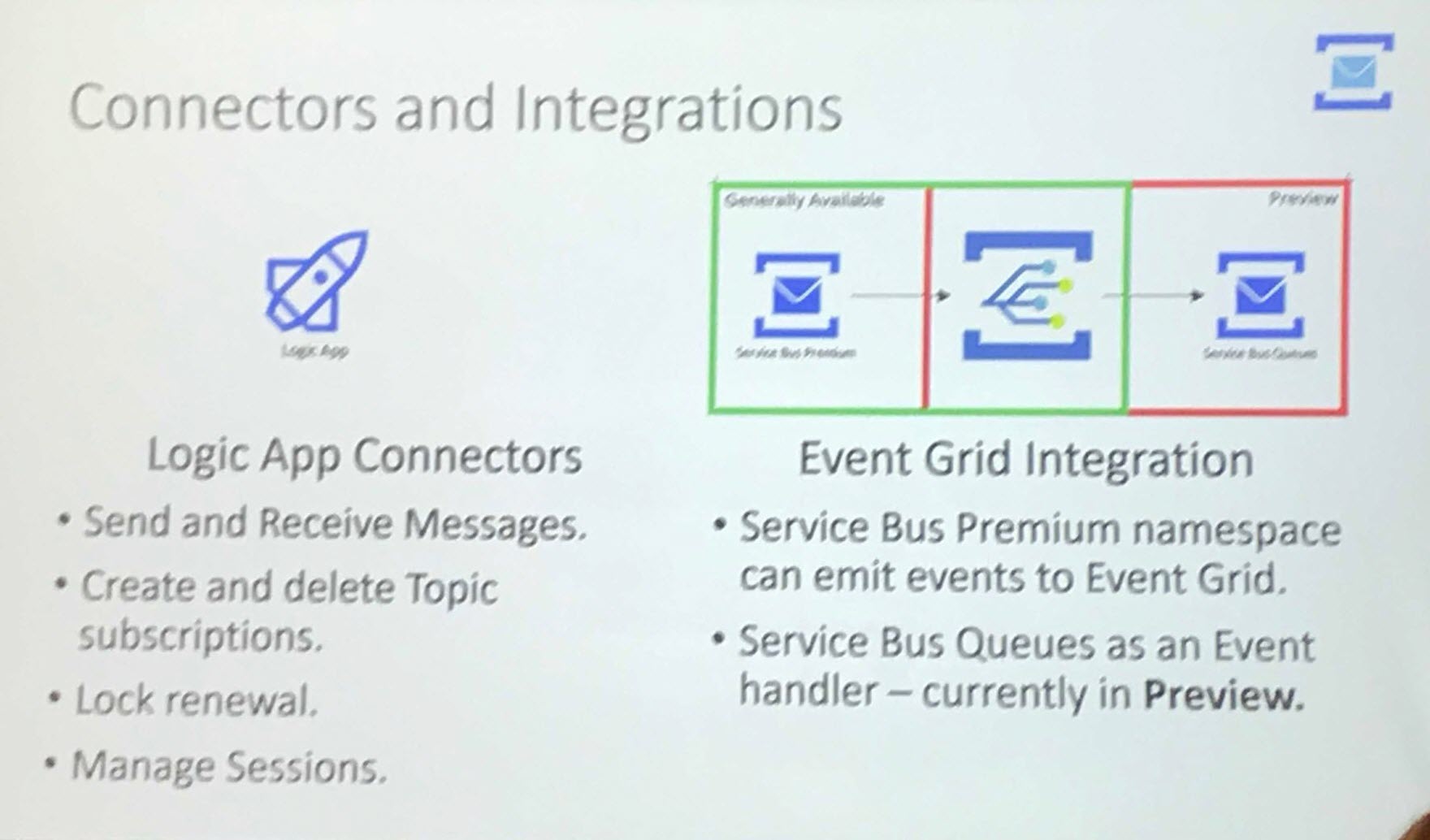

Connectors and Integrations

The currently available Logic Apps connectors for Service Bus can be used to:

- Send and receive messages

- Create and delete topic subscriptions

- Renew locks on long-running messages

- Manage sessions

Event Grid Integration

Service Bus Premium namespaces have been emitting events to Event Grid for a long time now. Service Bus queues have now been added as an Event Grid event handler, in preview.

Data Explorer for Service Bus

A browser-based tool offering the capabilities of Service Bus Explorer will be released soon.

This will be available within the Queue or Topic Subscription in Azure Portal. The capabilities that will be available are

- Send, receive and peek messages from Queue

- Send messages to Topic, receive and peek messages from Topic Subscriptions

- Access Dead-Letter messages

- View Messages and Message Content

Additional updates

The maximum number of messaging units has been increased to 8; you can now choose 1, 2, 4 or 8 messaging units.

Private endpoint support is on the roadmap.

They will also be adding BYOK (bring your own key). Server-side encryption at rest is already in place; with BYOK, customers can add their own keys to Key Vault and use them for encryption.

How Microsoft IT does Integration – Mike Bizub

Mike Bizub (Migration to Logic Apps and Microsoft Azure BizTalk Services) and Vignesh Sukumaran, Software Engineer from the Core Services Engineering team, presented this session.

The session looked at considerations for migrating from BizTalk to Azure Integration Services. Mike started with a recap of their B2B approach. Many businesses dealing with large integration volumes are moving from BizTalk Server to PaaS.

- Different types of protocols and standards must be considered

- Create reusable components and leverage them across different lines of business

- Segment inbound and outbound message traffic at the gateway

- Analyze messages to understand the protocols

- Arrive at a conceptual view for moving to PaaS

A series of logic apps was created for EDIFACT, X12 and other processes. The message metadata was analyzed to design these logic apps, and this analysis laid the groundwork for the transformation.

Composable Architecture

The architecture is built on the concept of routing, with a series of sources and destinations. Logic apps act as the adapters in the processes, and endpoint-specific logic apps make them reusable.

Patterns such as pass-through, publish/subscribe, etc. are each exposed through a pattern-specific API, which in turn calls the corresponding logic app.

Telemetry

On the hot path, telemetry captures exceptions from each logic app process, pushes them to Event Hubs through a service, and forwards them to a ticketing system and App Insights.

Event Hubs and OMS are used to manage the warm path, which then feeds into the hot path.

ALM and DevOps

The teams are distributed across Redmond and Hyderabad. ALM and DevOps practices are used to manage the code repository, CI/CD pipelines and deployments. They define policies for code reviews and security risks, follow compliance checks, and run unit and functional tests. This helps them deploy faster.

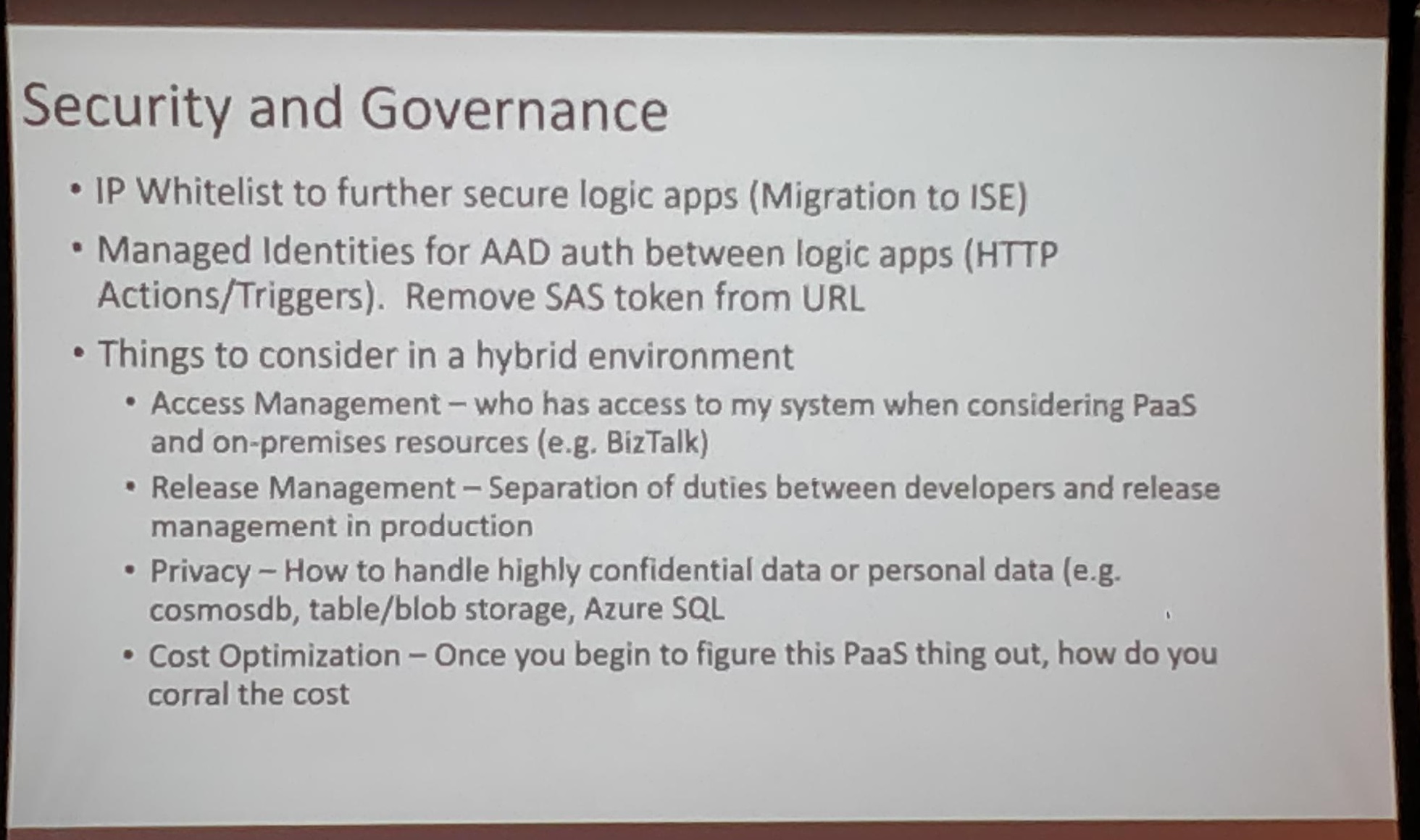

Security and Governance

- Migrating to ISE for security and whitelisting IPs

- Be careful about how and where metadata is created and managed, along with SAS tokens and secrets. Managed identity can be used to control access to the metadata

- In a hybrid environment, governance, and managing access to the system in particular, is very important

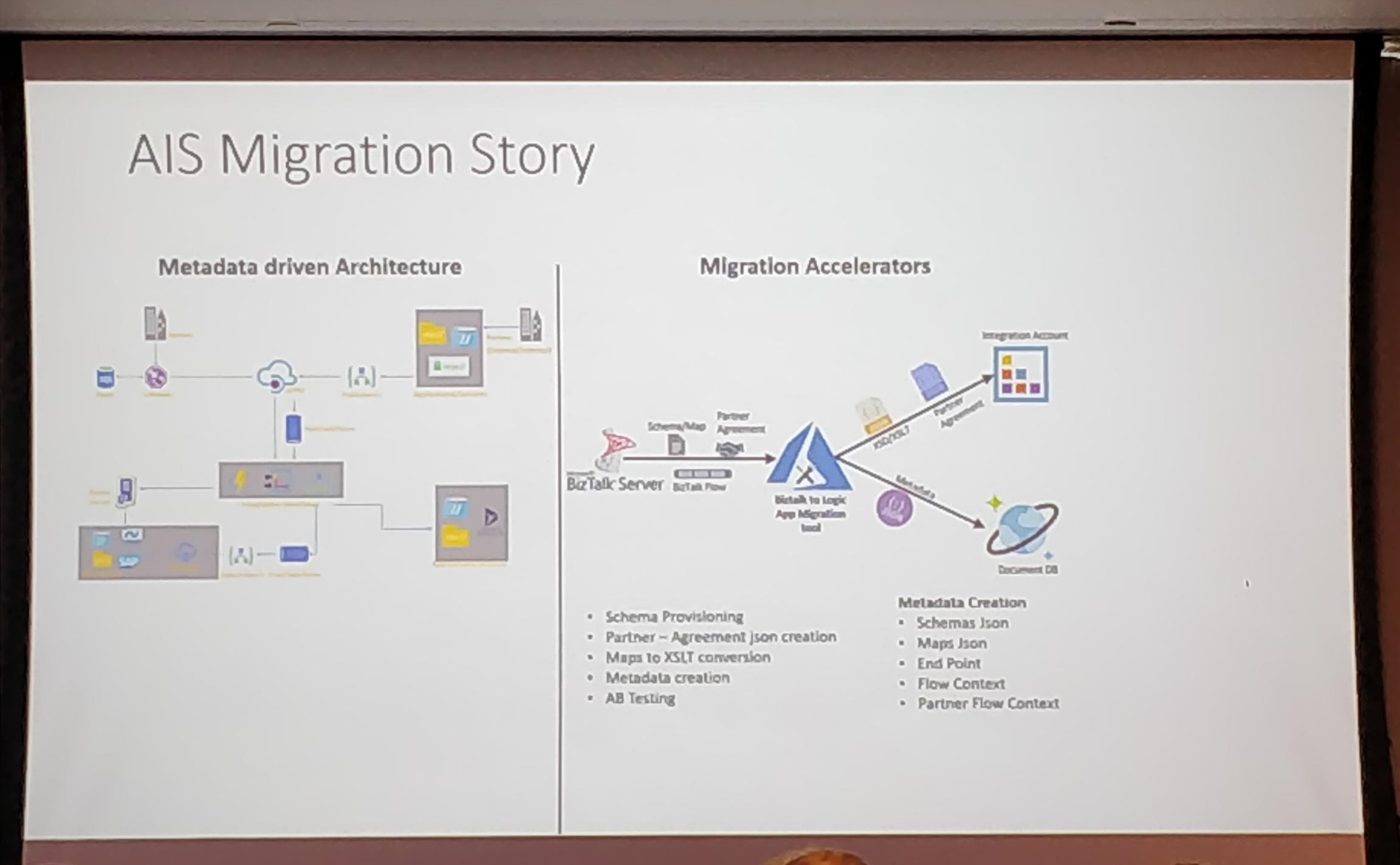

AIS Migration Story

Vignesh explained the metadata-driven architecture used to migrate from BizTalk to PaaS with the help of accelerator tools: an integration account with around 20 logic apps that can handle both B2B and A2A scenarios. Customizations are possible with Functions, and metadata fuels the migration.

Metadata is the binding configuration that defines the message type, schema, map, the transformation to apply, and the endpoint the message must flow through.
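Metadata as binding configuration can be pictured as a lookup table, keyed by partner and message type, that tells one generic pipeline which schema, map and endpoint to use. This is a minimal sketch of that idea, not the accelerator’s actual data model: all partner names, schema and map names, field names and endpoint URLs are hypothetical, and the maps are plain Python functions standing in for integration account maps.

```python
from dataclasses import dataclass
from typing import Callable, Dict, Tuple

@dataclass(frozen=True)
class Binding:
    """Metadata for one (partner, message type) pair."""
    schema: str    # schema name; validation against it is omitted in this sketch
    map_name: str  # which map (transformation) to apply
    endpoint: str  # where the transformed message should flow

# Hypothetical metadata store keyed by (partner, message type).
BINDINGS: Dict[Tuple[str, str], Binding] = {
    ("contoso", "X12_850"): Binding("X12_850_Schema", "X12_850_to_PO", "https://erp.example.com/orders"),
    ("fabrikam", "EDIFACT_ORDERS"): Binding("ORDERS_Schema", "ORDERS_to_PO", "https://erp.example.com/orders"),
}

# Hypothetical maps; in the described architecture, maps live in an integration account.
MAPS: Dict[str, Callable[[dict], dict]] = {
    "X12_850_to_PO": lambda msg: {"po_number": msg["order_id"]},
    "ORDERS_to_PO": lambda msg: {"po_number": msg["doc_number"]},
}

def route(partner: str, message_type: str, message: dict) -> Tuple[str, dict]:
    """Generic pipeline: look up metadata, apply its map, return (endpoint, output)."""
    binding = BINDINGS[(partner, message_type)]  # KeyError if no binding is configured
    output = MAPS[binding.map_name](message)
    return binding.endpoint, output
```

Onboarding a new partner then means adding metadata rather than building a new pipeline, which is what makes the small set of reusable logic apps sufficient.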

Migration accelerators help migrate partner artifacts and schemas. Not only artifacts but also orchestrations can be migrated in a single click. The accelerators convert BizTalk definitions into a form that AIS integration accounts understand.

With these accelerators, migrating a transaction that would take 5 days can be done in 3 hours.

Enterprise Integration Disaster Recovery

For a highly available system, a DR implementation is a must. They use an active-passive DR model, with primary and secondary regions handled through Traffic Manager. Both runtime sync and artifact sync are practiced, and scripts are available to divert traffic from the primary to the secondary region.
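Traffic Manager’s priority routing sends traffic to the highest-priority healthy endpoint, which is one common way to express an active-passive setup. The sketch below shows only that selection rule, under the assumption that lower numbers mean higher priority; the endpoint names are hypothetical, and in practice health comes from Traffic Manager’s probes rather than a flag.

```python
from dataclasses import dataclass
from typing import List, Optional

@dataclass
class Endpoint:
    name: str
    priority: int  # lower number = higher priority (1 = primary)
    healthy: bool  # stand-in for the result of a health probe

def pick_endpoint(endpoints: List[Endpoint]) -> Optional[Endpoint]:
    """Return the healthy endpoint with the best (lowest) priority, or None if all are down."""
    healthy = [e for e in endpoints if e.healthy]
    return min(healthy, key=lambda e: e.priority) if healthy else None
```

While the primary is healthy the secondary receives no traffic; when the primary’s probe fails, the same rule selects the secondary without any change to clients.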

For the open-source accelerator tools, demos and scripts, visit this GitHub page.

Enterprise Logic Apps – Divya Swarnkar

Divya Swarnkar started her session on how effectively enterprise integration can be done with Logic Apps, which offers lots of connectors to solve B2B requirements.

She explained enterprise integration with an interesting grocery store scenario:

- Enterprise integration concepts can be applied in a grocery store to increase profit.

- Business process tools such as SAP and Dynamics 365 track business data and send it to the maintenance team.

- The maintenance team can then analyze that data and act on the results.

She walked through this scenario with a live demo using Logic Apps and SAP. Logic Apps has a connector for IoT through which the necessary data can be received from any IoT device, and these are mainly connected using integration accounts.

She also used maps, which can be created and deployed using Visual Studio 2019. Logic apps also produce telemetry data; if you want to analyze it, you can push it to Log Analytics.

New Improvements

Various improvements have been made in the enterprise integration world, such as:

- Now the integration account limit has been increased from 500 to 1000.

- A new version of AS2 Connector has been launched.

- Monitoring has been introduced for batch activities.

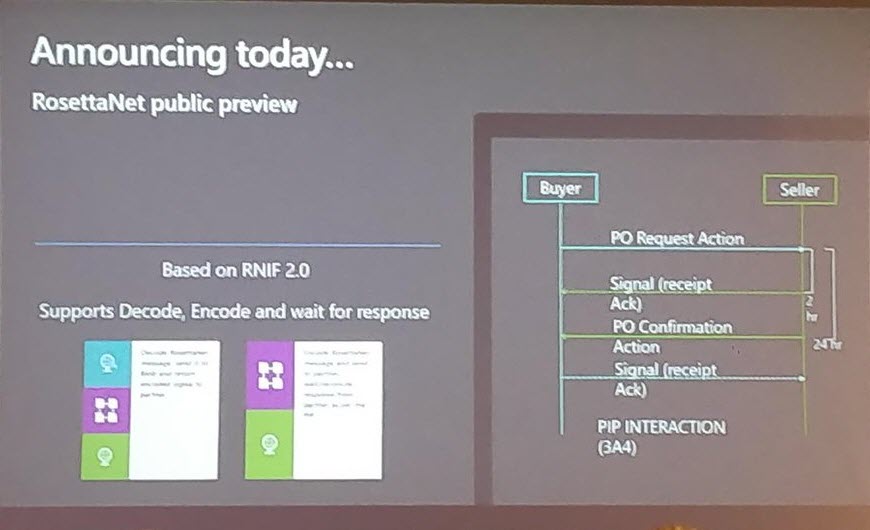

Announcing today!

An important announcement was made at Integrate 2019 for Logic Apps: RosettaNet is now available in public preview! It supports encode, decode and waiting for a response, which will be very useful in B2B scenarios.

She jumped into a demo showing some real use cases of RosettaNet. Predefined templates are already available in Logic Apps for both encode and decode. When you select a template, some of the connectors need PIP configurations; you can either create a new PIP configuration or map to an existing one.

Coming soon!

Some of the exciting things to expect soon are:

- Gateway across multiple subscriptions

- Ability to search by object name

- Ability to send multiple transactions from SAP to Logic Apps

- Adaptive chunking

- SAP Connector for ISE (Integration Service Environment)

- EIP tools for VS2019

- More Core connectors

- Performance Improvements

Future Improvements

Some of the future improvements they are aiming for are:

- Extended support for healthcare

- More enterprise connectors based on requirements

- Configuration store

- A standard integration account for developers

Serverless real use cases and best practices – Thiago Almeida

This session focused on how various organizations use serverless to solve their business problems.

The session began with a definition of serverless: a service can be considered serverless if it has the following characteristics:

- Scalable

- Reliable

- Event-based

- Infrastructure is hidden

- Code or Workflows based

- Auto scalable

- Micro billable

He discussed the file batches challenge in detail: an organization needs to combine all the files in the same batch into a single JSON file.

Some of the proposed solutions were:

- Load the files, or their metadata, into a database and add a timer trigger that checks whether all files in the batch are available to process

- When each file arrives, query storage to check whether all files in the batch are available to process

- Use Service Bus with session-enabled queues or topic subscriptions. The batch ID can be sent as the session ID and the number of files added to the message header

- Use Azure Durable Functions to orchestrate the processing of files with the same batch ID

- Use Logic Apps and Function Apps with the batch trigger
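Taking the Service Bus sessions option as an example: the batch ID travels as the session ID and the expected file count in a message property, so a receiver can gather one session’s messages and merge them into a single JSON document once the count is reached. The in-memory sketch below models that logic only and does not use the Service Bus SDK; the class name and the `file_count` property name are hypothetical.

```python
import json
from collections import defaultdict
from typing import Dict, List, Optional

class BatchAggregator:
    """Group file messages by session (batch) ID and emit one JSON document per complete batch."""

    def __init__(self) -> None:
        self.pending: Dict[str, List[dict]] = defaultdict(list)

    def on_message(self, session_id: str, properties: dict, body: dict) -> Optional[str]:
        """Store the file; when all files of the batch have arrived, return the merged JSON."""
        self.pending[session_id].append(body)
        expected = int(properties["file_count"])  # hypothetical header carrying the batch size
        if len(self.pending[session_id]) < expected:
            return None  # batch still incomplete
        files = self.pending.pop(session_id)
        return json.dumps({"batch_id": session_id, "files": files})
```

Sessions guarantee that one receiver handles all messages of a given session, which is what makes keeping this per-batch state in a single place safe.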

He demonstrated how a Brazilian customer’s invoice payment scenario was solved using Durable Functions. Fujifilm’s business problem was solved using the Cognitive Services Face API, with the CI/CD and unit tests they wanted for the implementation. IoT Hub, Event Hubs and Event Grid were used to solve the problem of Johnson Controls, which uses thermostats. Azure Storage blob events delivered through Event Grid were used to track blob usage across multiple customers.

The Azure Functions Premium plan can scale up and down dynamically, so it can be used without dropped messages or delays.

A combination of Logic Apps and Service Bus can be used in solving a Purchase order scenario.

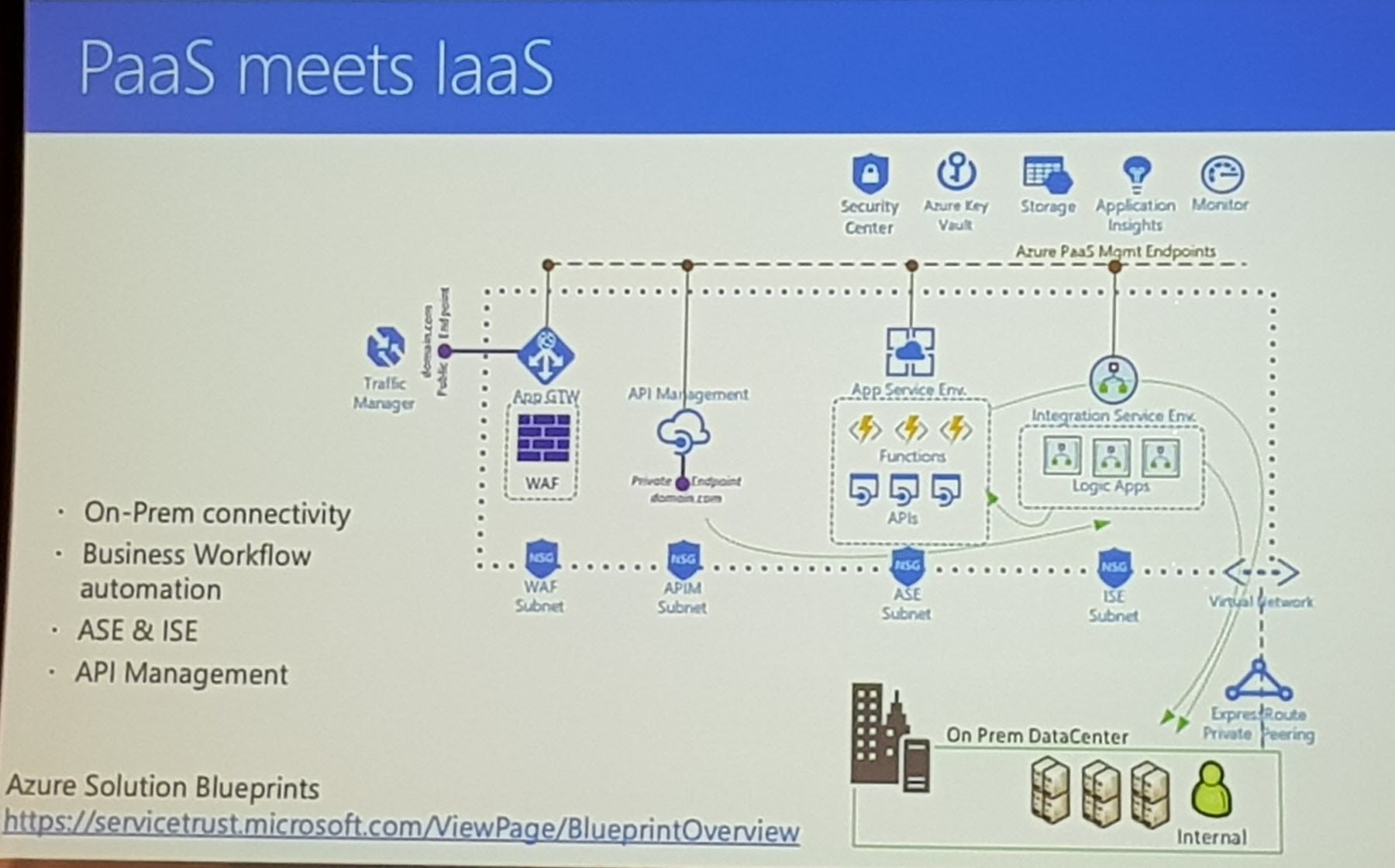

PaaS Meets SaaS

PaaS can be used alongside SaaS through the following features:

- On-Prem connectivity

- Business Workflow Automation

- ISE and ASE

- API Management

Microsoft Flow – Kent Weare & Sarah Critchley

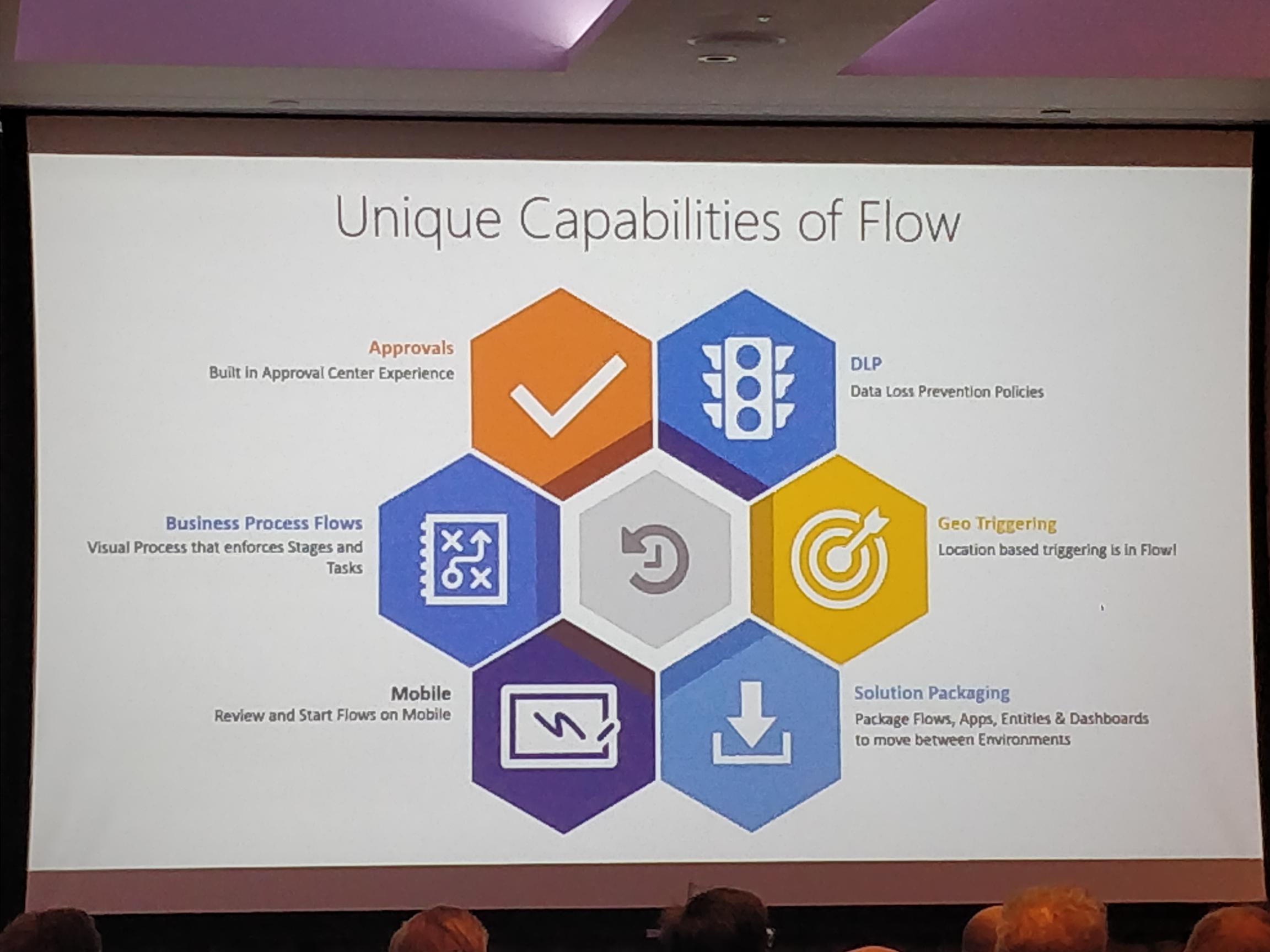

In the last session of the day, Kent Weare and Sarah Critchley took the stage with a session on “Empowering organization to do more with Microsoft Flow”. Flow helps non-developers automate their workflows across different apps and services without having to write a single line of code.

Here are some key points discussed by Kent on Microsoft Flow;

- There are a lot of services available in the integration space. Microsoft Flow is becoming more competitive at scale and serves as a global SaaS solution.

- Microsoft Flow is used in 200 countries across the globe. It is a no-code approach that can reduce custom development by 70%.

Some of the unique capabilities:

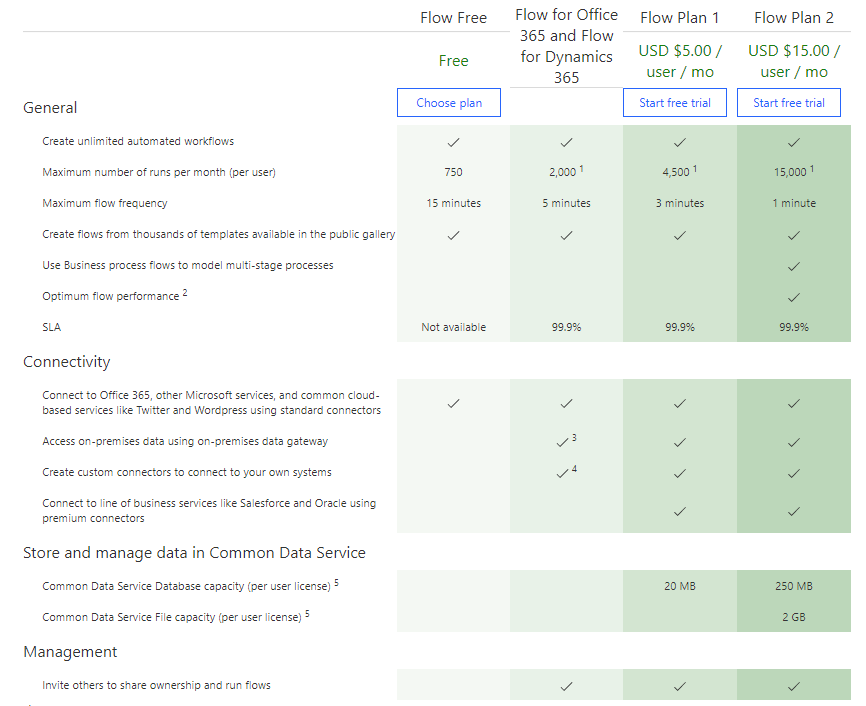

The above picture represents the recent changes to the licensing model for Microsoft Flow.

Dynamics 365:

Sarah took over the rest of the session, discussing Dynamics 365 services, which are managed by Microsoft and primarily used in sales, marketing, finance, etc.

Power Apps:

Sarah gave an overview of Power Apps, which at its core is a Platform as a Service. It allows you to create mobile apps that run on Android, iOS and Windows (Modern Apps), as well as in almost any internet browser. It also makes designing UI very easy and lets you create model-driven apps.

The session concluded with some key takeaways on Microsoft Flow and Dynamics 365.

That’s a wrap on our summary of Day 1 at INTEGRATE 2019, and here’s a glimpse of Day 1 in pictures. We are already looking forward to the Day 2 and Day 3 sessions. Thanks for reading! Good night from Day 1 at INTEGRATE 2019, London.

Integrate 2019 Day 2 and 3 Highlights

Integrate 2019 Day 2 Highlights

Integrate 2019 Day 3 Highlights

This blog was prepared by

Arunkumar Kumaresan, Balasubramaniam Murugesan, Kuppurasu Nagaraj, Mohan Nagaraj, Nishanth Prabhakaran, Ranjith Eswaran, Suhas Parameshwara