Table of Contents

- Scripting a BizTalk Server installation – Samuel Kastberg

- BizTalk Server Fast & Loud Part II: Optimizing BizTalk – Sandro Pereira

- Changing the game with Serverless solutions – Michael Stephenson

- Adventures of building a multi-tenant PaaS on Microsoft Azure – Tom Kerkhove

- Lowering the total cost of ownership of your Serverless solution with Turbo360 – Michael Stephenson

- Microsoft Integration, the Good the Bad and the Chicken Way – Nino Crudele

- Creating a Processing Pipeline with Azure Function and AIS – Wagner Silveira

Scripting a BizTalk Server installation – Samuel Kastberg

After two days of INTEGRATE 2019 spent largely on the cloud, Samuel Kastberg, Senior Premier Field Engineer at Microsoft, kick-started the first session of the last day: Scripting a BizTalk Server installation.

Installing and configuring BizTalk Server is not straightforward and can take a lot of time. Scripts cause fewer errors than a manual installation, and the same script can be run to set up different environments, which lowers both time and cost.

What you should script:

- Things you can control

- Things you want to be the same over and over again

- Good candidates

  - Windows features

  - Provisioning VMs in Azure (if the environment needs to be created in Azure)

  - BizTalk features and group configurations

  - MSDTC settings, hosts, host instances

  - Visual Studio, SQL Server

  - Your favorite monitoring tool

- Bad candidates

  - Things that are going to change over time

Samuel advised considering the following points before you start:

- Decide what your main drivers are. Repetition and control are the drivers for automation, for example standardized developer machines, disaster recovery preparation, and test environments

- Decide what your baseline is and document it; think about what could change in 6 months or a year

- Document the execution process; scripting is not a replacement for documentation

- Set a timeframe for your work

Good Practices

- The main code should orchestrate the process – Create functions for tasks

- Name scripts to show the order in which you are going to run them

- Write a module for common functions which you are going to run over and over (Logging, Prompts, Checking, etc.)

- Use a common timestamp for generating files

- Debugging is a good friend (step through the script before you run it)

- Be moderate with error handling

- Add reminders to information sources
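
The practices above can be sketched in a few lines. Python is used here purely for illustration (the session itself pointed to WMI and PowerShell for the real work), and every step name, function name, and log format below is hypothetical.

```python
from datetime import datetime

# One timestamp shared by every file this run generates (hypothetical naming scheme).
RUN_STAMP = datetime.now().strftime("%Y%m%d-%H%M%S")


def artifact_name(step: str, extension: str = "log") -> str:
    """Build a file name that carries the shared run timestamp."""
    return f"{RUN_STAMP}_{step}.{extension}"


def log(messages: list, step: str, text: str) -> None:
    """Common logging helper; a real module would also write to a file."""
    messages.append(f"[{RUN_STAMP}] {step}: {text}")


# Each task is its own function; the bodies are placeholders.
def install_windows_features(messages: list) -> None:
    log(messages, "01-windows-features", "installing (placeholder)")


def install_biztalk(messages: list) -> None:
    log(messages, "02-biztalk-install", "installing (placeholder)")


def configure_biztalk(messages: list) -> None:
    log(messages, "03-biztalk-config", "configuring (placeholder)")


def main() -> list:
    """The main code only orchestrates; the numbered steps show the run order."""
    messages = []
    for task in (install_windows_features, install_biztalk, configure_biztalk):
        task(messages)
    return messages


if __name__ == "__main__":
    for line in main():
        print(line)
```

Keeping the logging helper and the timestamp in one shared place means every generated file from a single run can be matched up afterwards.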

Windows feature installation

- Choose the tool that suits your taste

- Server Manager

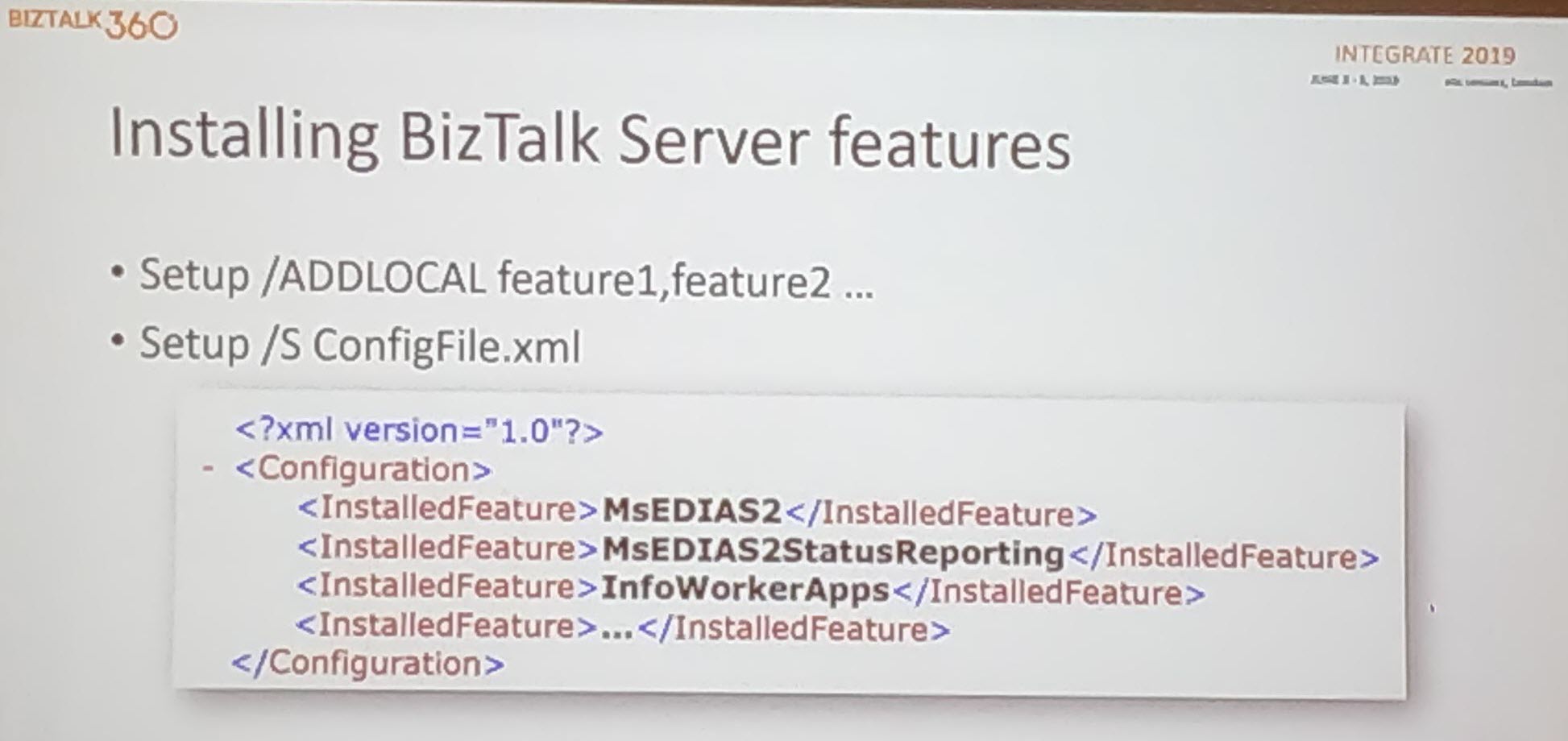

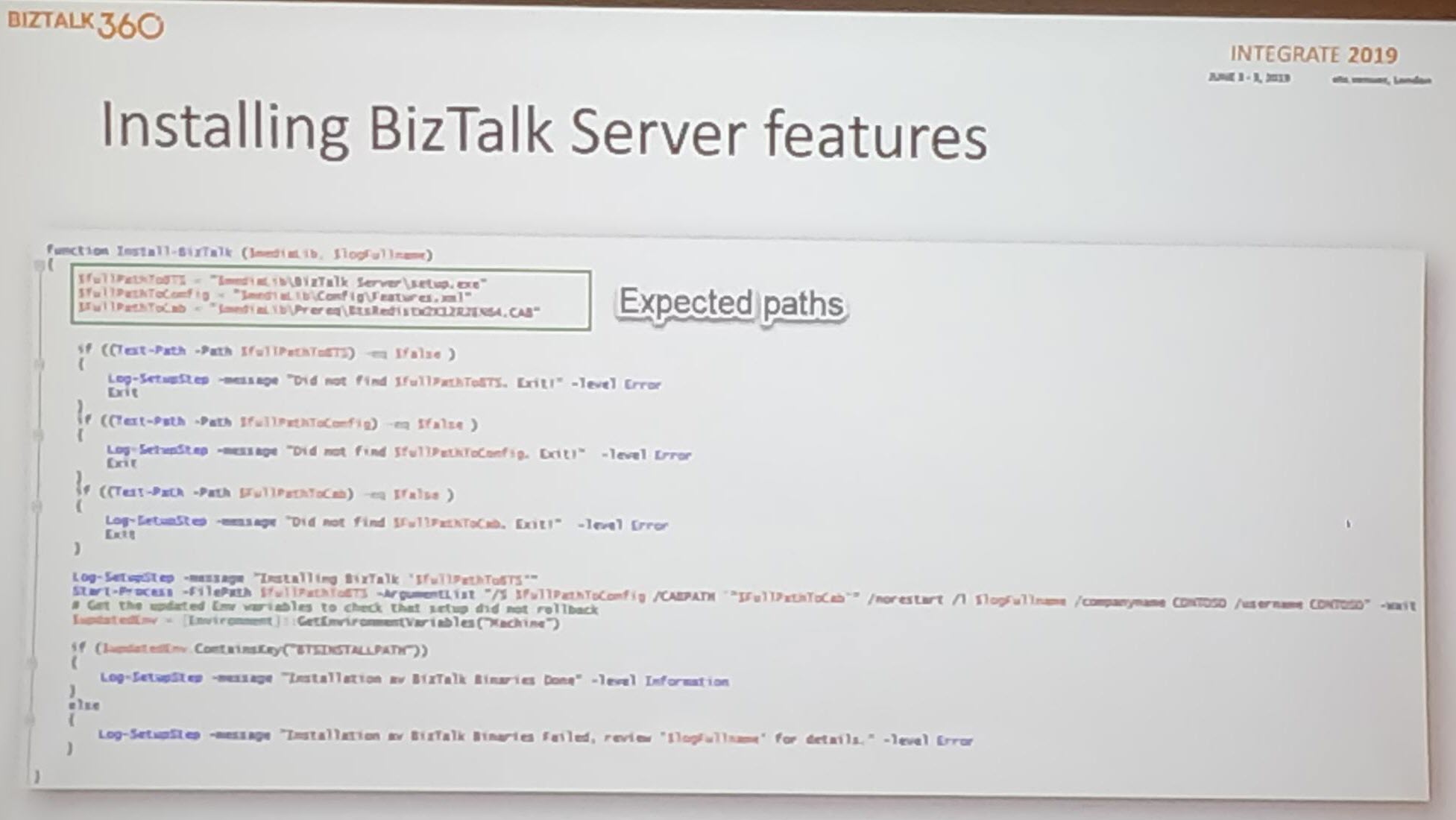

BizTalk Server Feature Installation

Setting up the BizTalk Server product consists of installation and configuration. Installation adds the binaries to the system. Configuration creates or joins a BizTalk group and enables and configures other features such as the Rules Engine, BAM and EDI.

Setup/AddLocal – Export configuration .xml file (Select the required features).

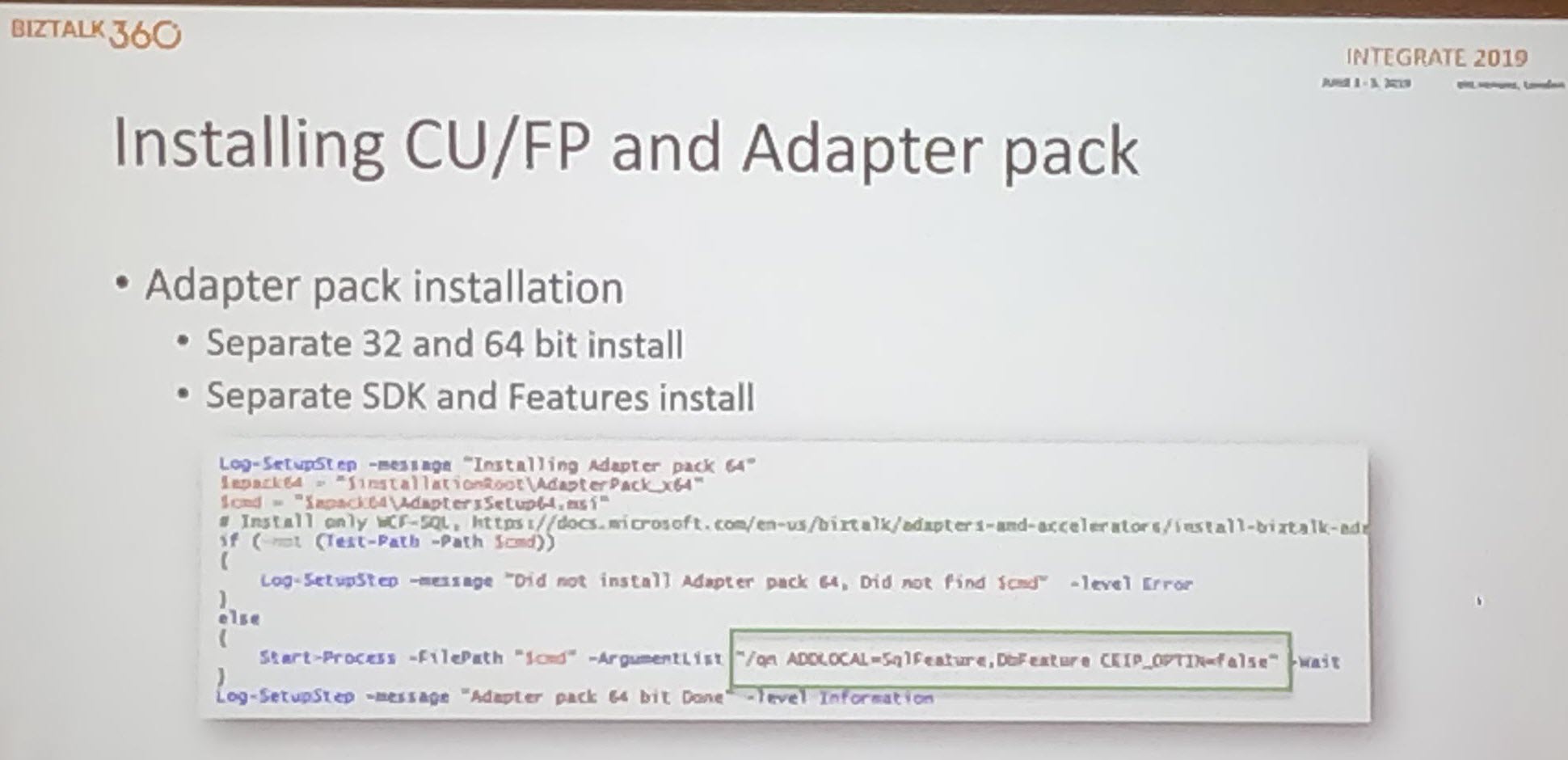

Installing CU/Adapter pack – More complex

Configuration File – Used for providing required parameters

- Consists of feature element – Each one maps to specific sections in the configuration tool

- Each feature contains one or more questions – Answer attribute selected = True

- Export from config.exe

- Edit the export to be a template – Fill in the real values at configuration time. You need one file when creating a group
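
As a minimal sketch of the template idea, the exported file can carry placeholders that are filled in just before configuration runs. The XML fragment below is a heavily simplified, hypothetical stand-in and not the real schema of the exported configuration file; the placeholder names and server values are assumptions too.

```python
from string import Template

# Hypothetical, heavily simplified fragment; NOT the real schema of the exported file.
TEMPLATE_XML = Template(
    '<Feature Name="Group">\n'
    "  <Server>$sql_server</Server>\n"
    "  <Database>$mgmt_database</Database>\n"
    "</Feature>\n"
)


def render_config(values: dict) -> str:
    """Fill the template; substitute() raises KeyError if a value is missing."""
    return TEMPLATE_XML.substitute(values)


if __name__ == "__main__":
    # Example values for one environment (made up for illustration).
    print(render_config({"sql_server": "SQLDEV01", "mgmt_database": "BizTalkMgmtDb"}))
```

Failing loudly on a missing value is deliberate here: a half-filled configuration file is harder to diagnose than a script that stops early.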

Configure hosts and host instances – Use WMI or the PowerShell provider

Create handlers – Use WMI and PowerShell

Handling secrets – Use KeePass or Key Vault. KeePass is the option to use when no internet access is available.

Run the script in your environment!

BizTalk Server Fast & Loud Part II: Optimizing BizTalk – Sandro Pereira

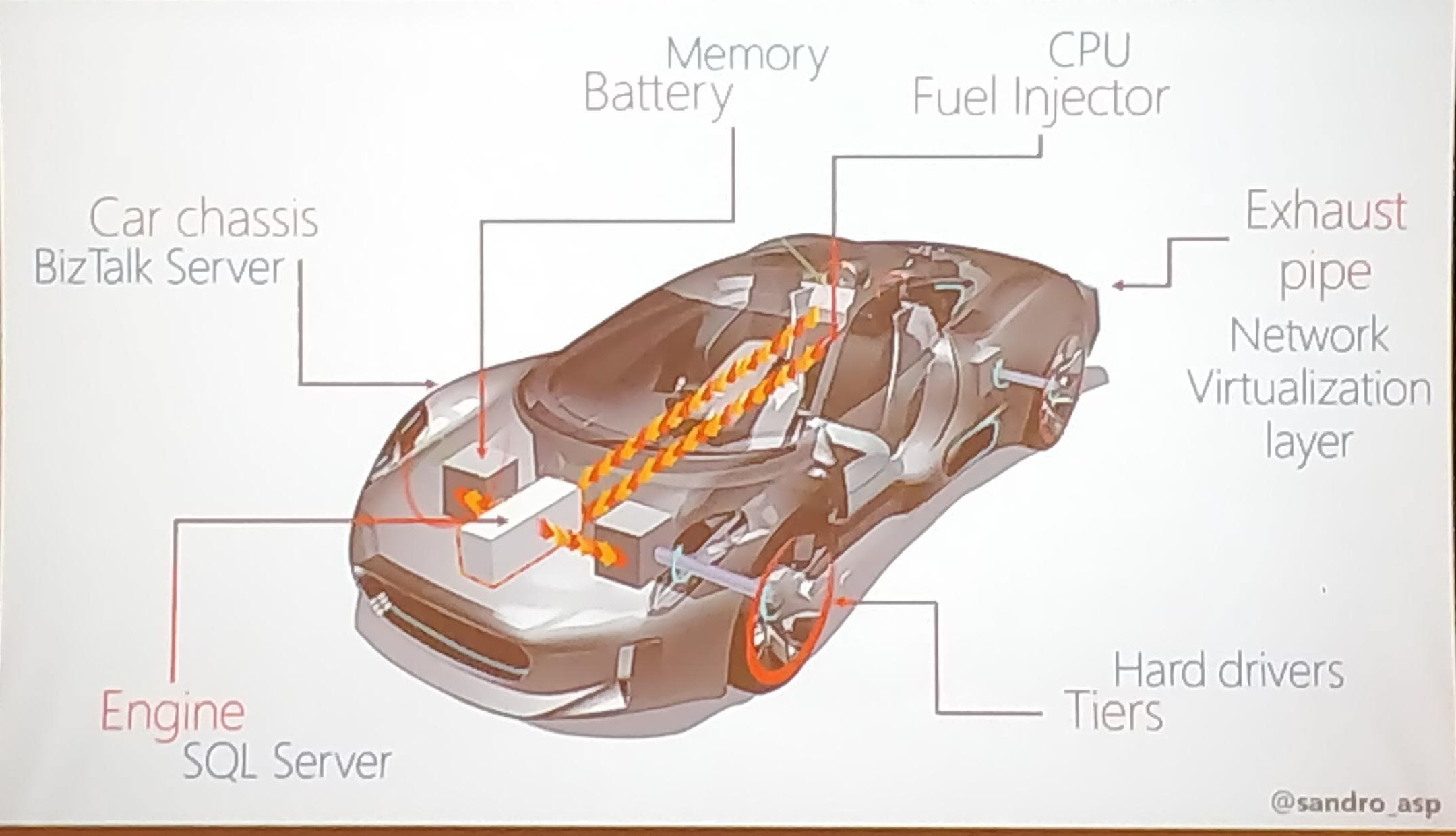

Sandro Pereira, as usual, set the theme of the session by comparing BizTalk Server performance to different types of vehicles: Formula 1 cars, BMWs, and trucks.

Performance with BizTalk Server

He explained the different parameters that affect BizTalk Server performance, using the way a car functions as an analogy:

- Memory

- Network

- Disk Space

- BizTalk Server

- SQL Server

He shared several tips to optimize performance:

- What is your requirement?

To improve the performance of the BizTalk environment, choose the right infrastructure for your business requirements.

- Message Processing (Slow Down)

BizTalk message processing can slow down when message volumes are very high. In that case, use queues to process the messages.

- Techniques to optimize the performance

Sandro explained the different techniques that can be used to fix a performance issue:

- Observation

- Analysis

- Apply Fixes

- Redesign the system

If an existing BizTalk solution has become a performance bottleneck, you can suggest redesigning it to meet the business requirements and to leave room to scale for future challenges.

- Move to Historic Data (Tracking)

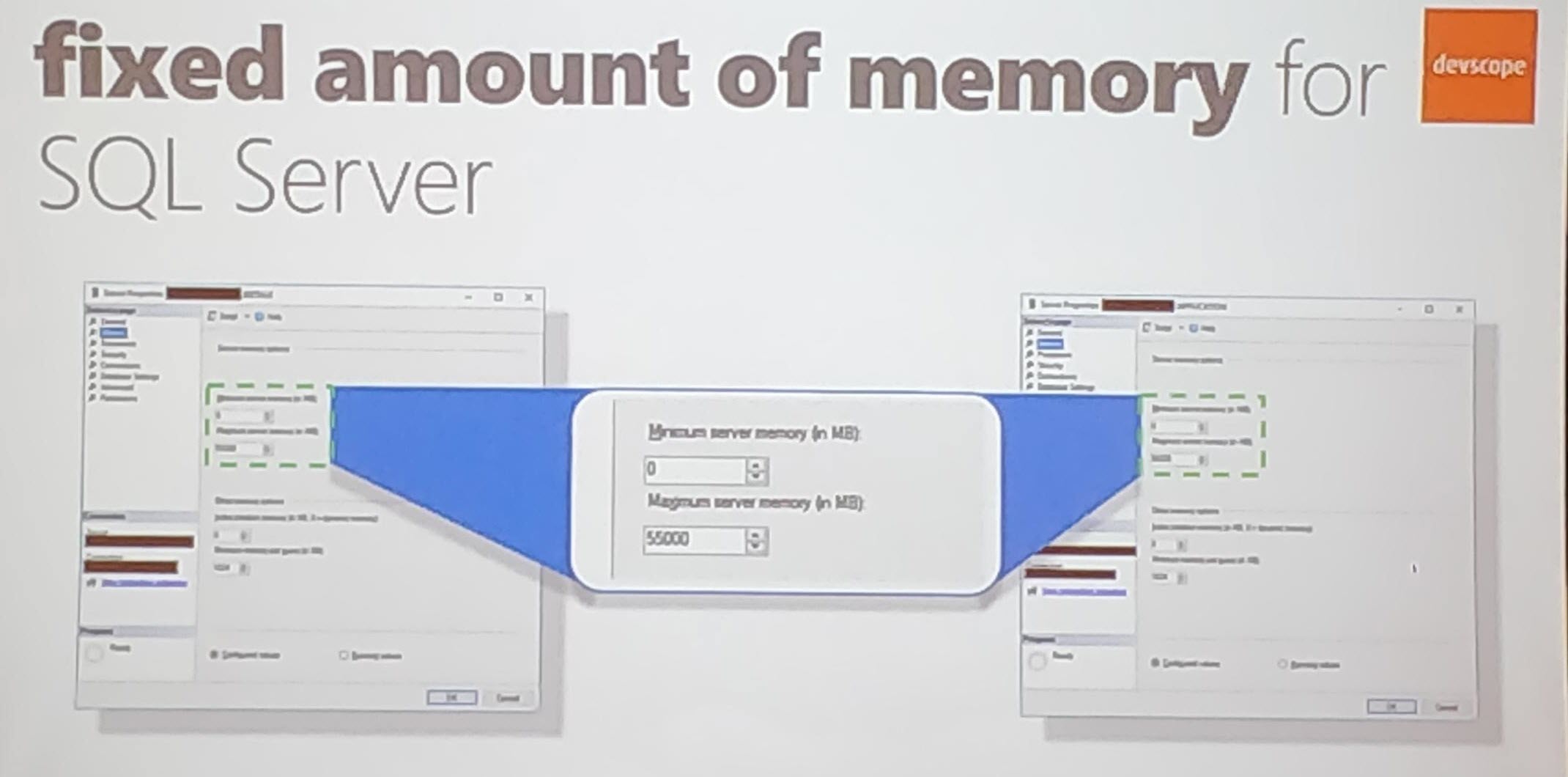

Sandro explained how to manage historical data. Storing important data matters, but use the minimum tracking necessary to avoid database and disk performance issues.

- SQL Server Memory optimization

Use the SQL Server memory configuration to optimize message-processing performance.

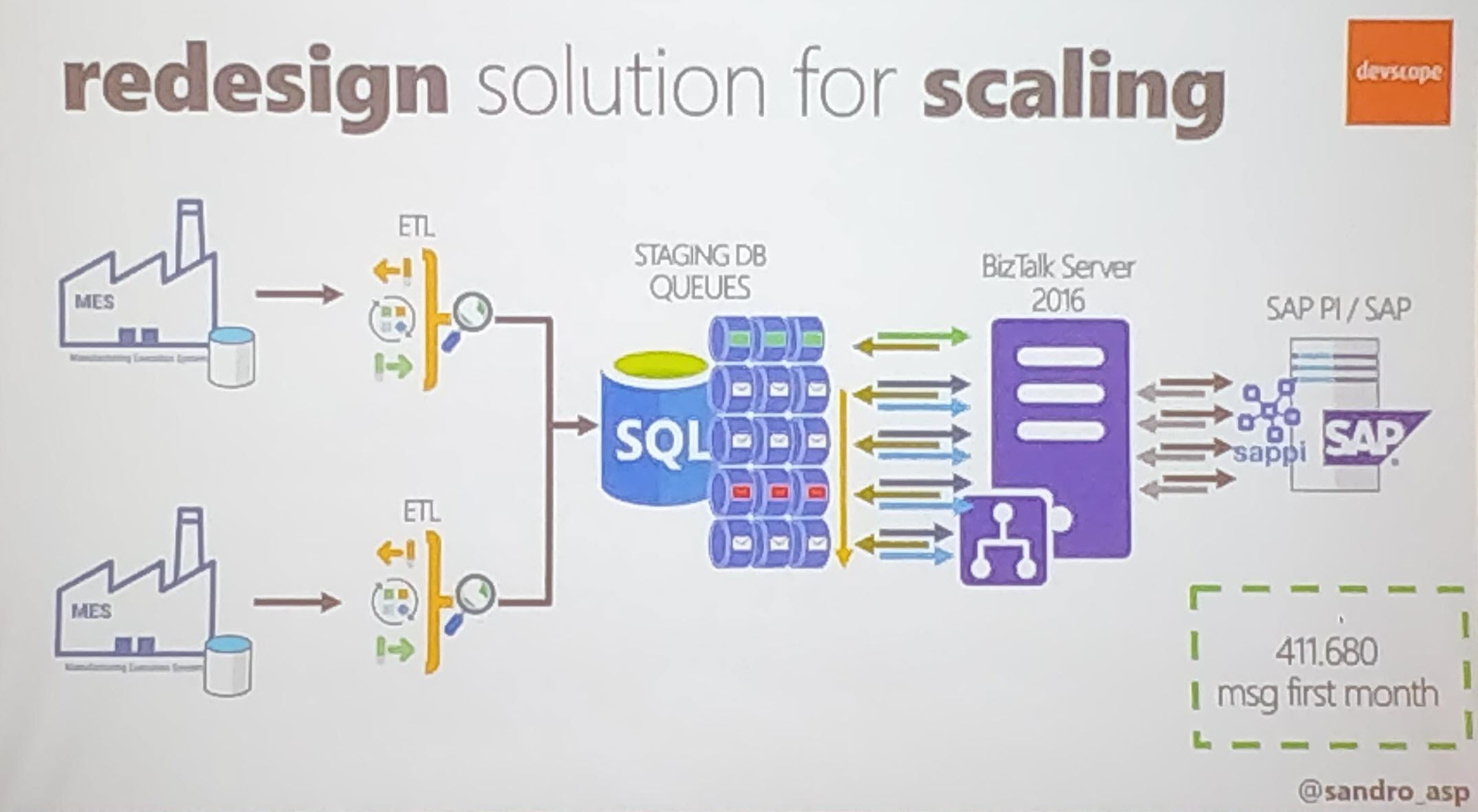

Sandro then walked through two real-world solutions and how their performance was improved:

Cork Sector World Leader

He explained how the BizTalk team scaled the solution when performance took a hit in one of the manufacturing sections:

- Follow the sequences based on the number of messages (5 million messages)

- Dividing the process – Parallel process (Queues)

- Scaling up the SQL Server Tier

- Move data to Historic (Enable the Minimum tracking)

Banking System

Sandro explained the solution they delivered for a banking system, using an account-opening scenario as the example. He described the techniques used in this banking solution:

- Azure

- API Management

- Composite Orchestrations

Processing the data to open a bank account took more than one minute. He explained the factors that affected performance:

- Network/LDAP

- Composite Orchestration Expression shape

- Resources Unload (Slow First hit)

The account-creation process was fine-tuned with:

- Improving warm-up by recycling BizTalk and IIS

- Memory

- IIS Application Pool

- MQ Agent Recycling

He then explains various solutions to optimize performance;

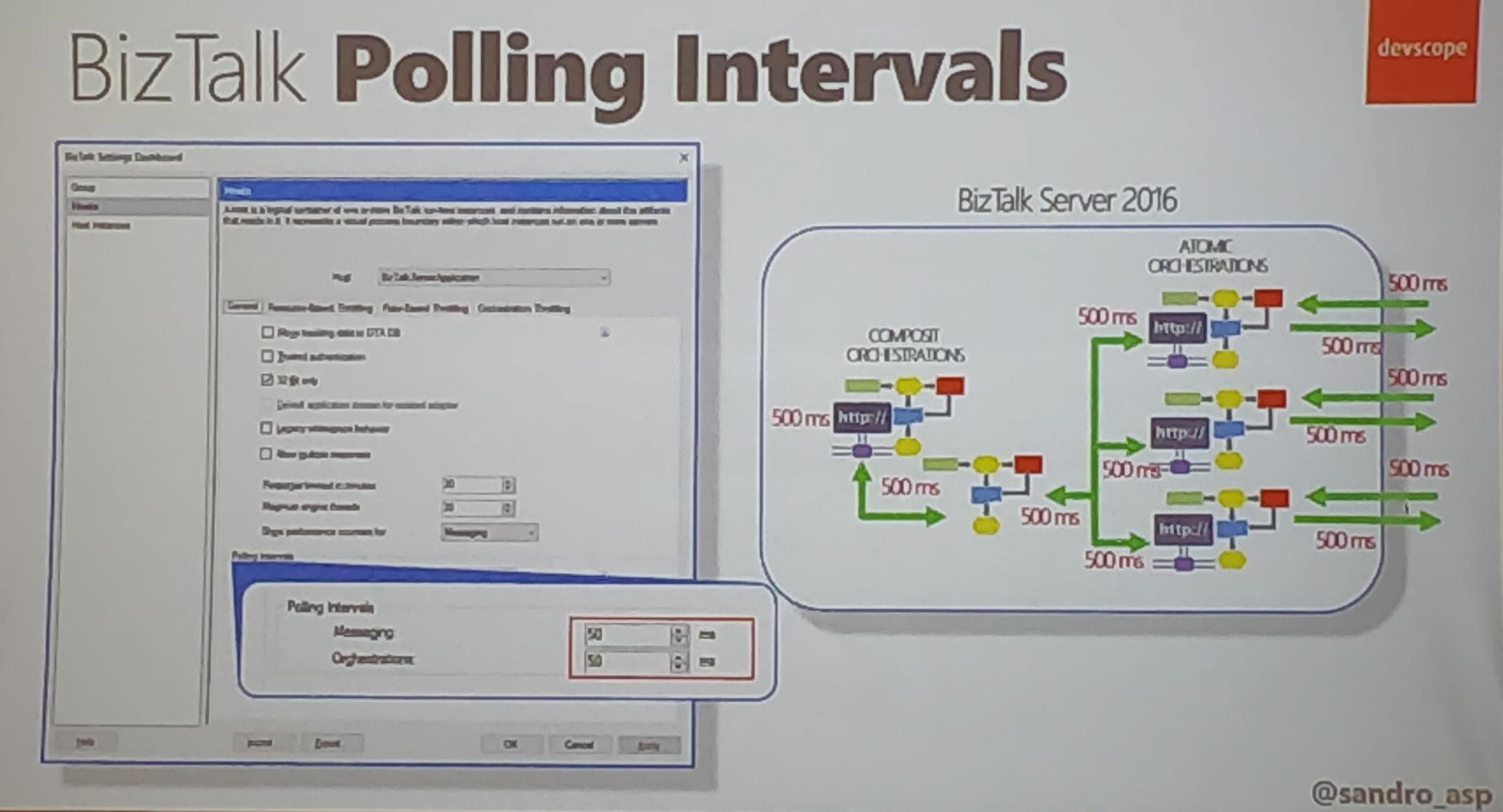

- BizTalk polling intervals in the MQ Agent (be careful when setting the polling interval)

- Tune the performance with configurations

- Orchestration Dehydration (Composite Orchestration)

- SQL Affinity – Maximum number of memories

- Priority in BizTalk: set the send port priority (10 is the lowest, 1 the highest)

Changing the game with Serverless solutions – Michael Stephenson

Michael Stephenson introduced himself as a cloud & integration freelancer as well as Microsoft MVP for more than 10 years.

The main theme of this talk is to explain various components involved in building a real-world application using serverless solutions.

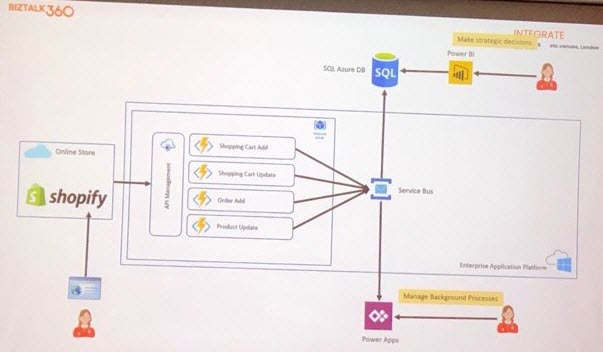

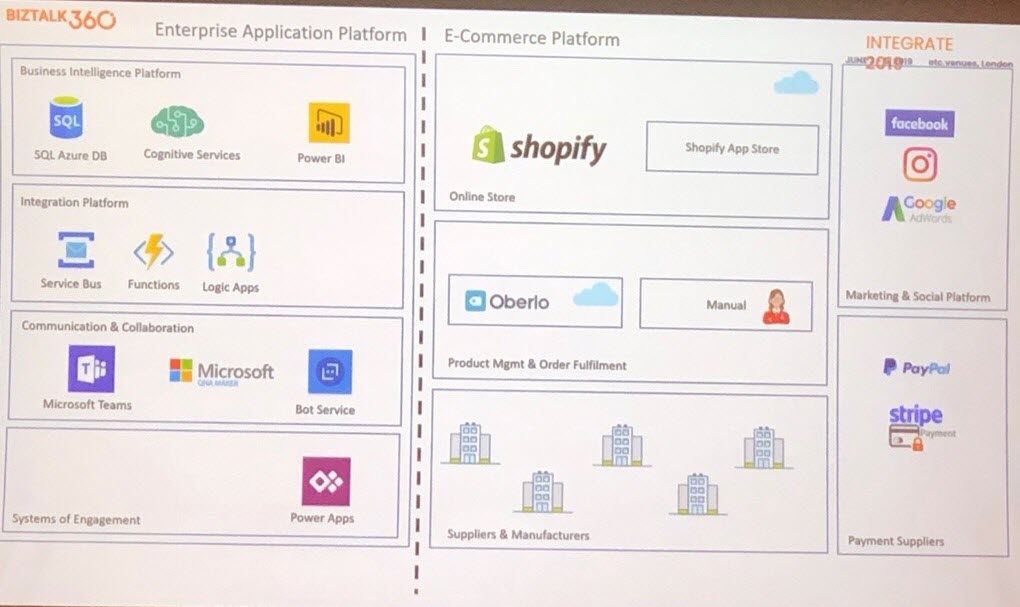

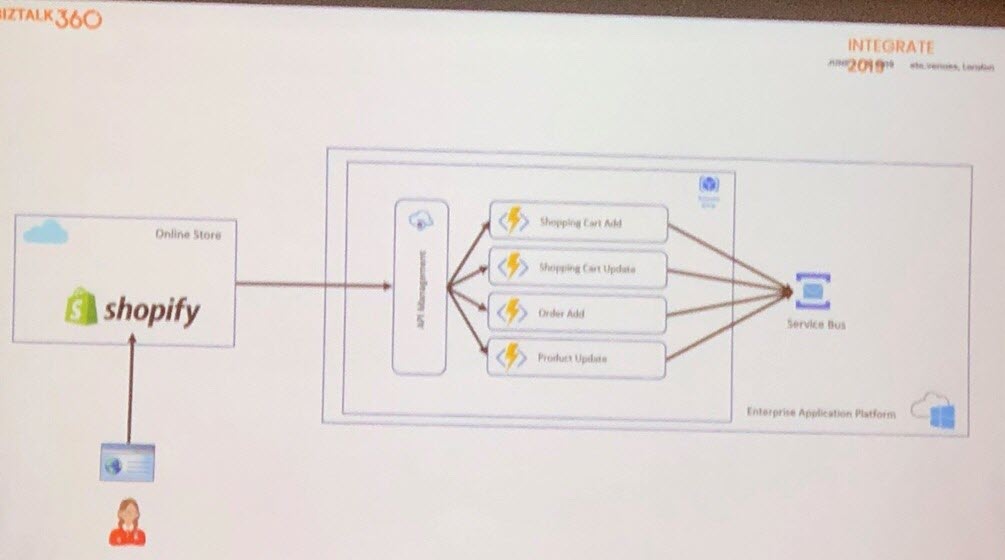

Mike gave a synopsis at the very beginning of the talk. The idea was to discuss the serverless components and solutions used to build a shopping cart called Shopify.

He discussed how the cart uses Application Insights to capture client-side statistics. With that information, the issues customers face can be found and fixed.

Principles

The talk continued with the principles Mike applies when building a solution for his talks:

- What are the common business problems?

- Can I do some cool demos for talks?

- Can I make a story comparable to the real world?

To compare with a real-world example, Mike explained the components of Shopify.

Serverless components

Shopify has been built with various components like API Management, SQL database, Power BI, Power Apps, etc.

He explained the flow of data from the user endpoint through the various storage, processing and notification services involved.

Shopify deals with things like shop management, managing supplies in the background, handling payments through PayPal or Stripe, supply chain scenarios, and social media engagement.

Demo of webhooks

Mike showed a demo of how a webhook is used in the application.

Webhooks are triggered when the Service Bus receives a message. Only a few lines of code are needed to set up the message-handling scenarios.

The received data can then be investigated with the help of Power BI.

Most Popular products

The most popular products can be found from, for example, the products that are added to the cart or searched for in the portal. This information is stored using Logic Apps.

It can then be processed to provide analytics, such as the most popular product in the last two weeks.
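
A "most popular in the last two weeks" query boils down to filtering events by date and counting them. The sketch below assumes a hypothetical event shape (a product name plus a timestamp); it says nothing about how the demo itself stored or queried its data.

```python
from collections import Counter
from datetime import datetime, timedelta


def most_popular(events: list, now: datetime, days: int = 14, top: int = 3) -> list:
    """Count cart/search events per product inside the time window."""
    cutoff = now - timedelta(days=days)
    counts = Counter(e["product"] for e in events if e["at"] >= cutoff)
    return counts.most_common(top)


# Hypothetical events: product added to cart or searched for, with a timestamp.
EVENTS = [
    {"product": "mug", "at": datetime(2019, 6, 1)},
    {"product": "mug", "at": datetime(2019, 6, 3)},
    {"product": "shirt", "at": datetime(2019, 6, 2)},
    {"product": "shirt", "at": datetime(2019, 5, 1)},  # outside the window
    {"product": "cap", "at": datetime(2019, 5, 10)},   # outside the window
]

if __name__ == "__main__":
    print(most_popular(EVENTS, now=datetime(2019, 6, 5)))
```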

Service Bus Cognitive Service helps to find the pattern and helps to show the related products based on user input and matching the user query with offsets.

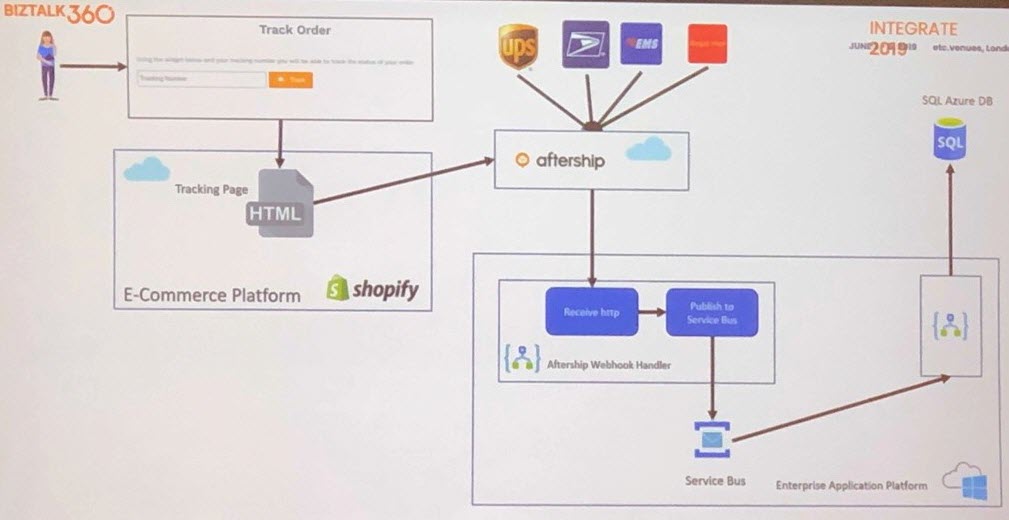

Shipping data

Tracking the order is the key feature in any shopping portal. Aftership can be integrated into the ecosystem to keep us up to date with the shipping status.

Once the shipping is started the customer can use a web application to track the order.

For example, if an order is going to take longer to deliver, predicting that and informing the customer early helps keep them engaged with the product.

Using Logic App triggers and webhooks, a notification can easily be sent to various communities in Microsoft Teams.

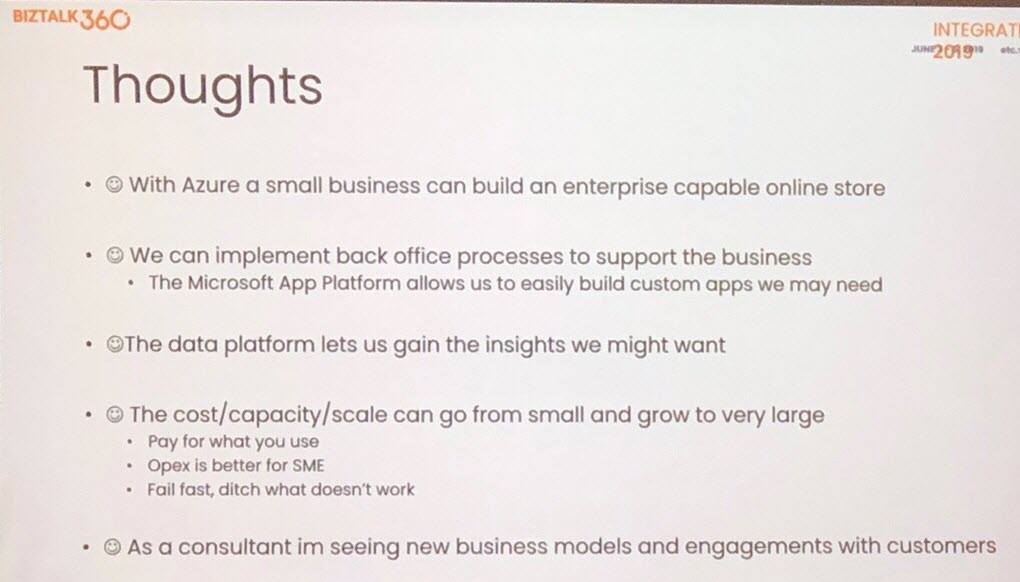

Thoughts

At the end of the talk, Mike shared his thoughts on building a business solution with Serverless.

Adventures of building a multi-tenant PaaS on Microsoft Azure – Tom Kerkhove

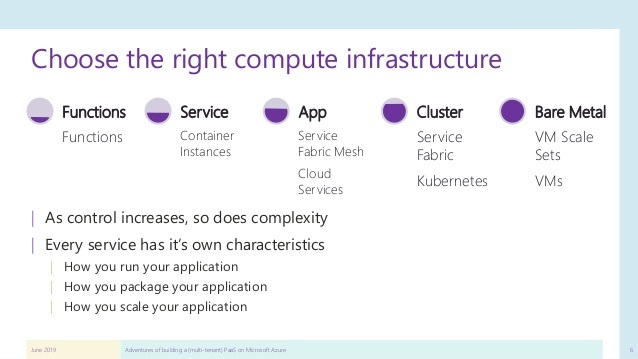

Tom Kerkhove, Azure Architect at Codit, kicked off his session with scale. There are two types of scaling: scale up/scale down and scale out/scale in. Compute options range from Functions, which need little management, to more heavily managed options such as VMs and bare metal. This is a reminder that the more control we have, the more complexity we take on.

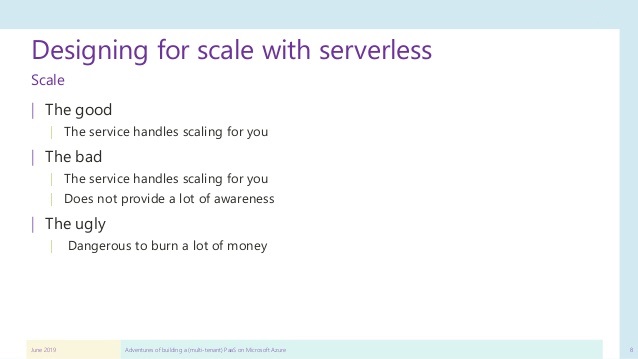

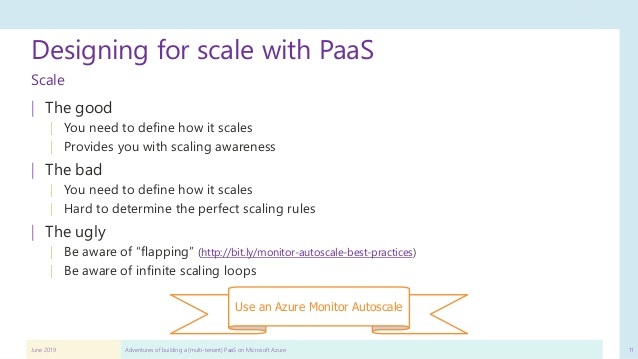

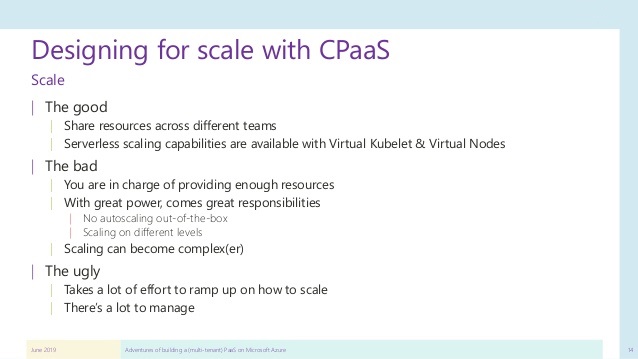

Tom advised on how to design for scale with:

- Serverless

- PaaS

- CPaaS

His slides portrayed the good, the bad, and the ugly of scaling design on Serverless, PaaS, and CPaaS.

Next, Tom shared his analysis of auto-scaling on serverless services, along with some tips.

Tenancy:

He shared some ideas on choosing the right tenancy model, which is very important for scaling:

- Choosing a sharding strategy – Spread the data across smaller databases (e.g. in an Elastic Pool). With shard managers and better cost efficiency, sharding is a good approach.

- Determining tenants – Determine which tenant is consuming your services.
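
To make the sharding idea concrete, here is a minimal sketch of resolving a tenant to the database that holds its data. The database names, the pinned tenant, and the hash-based fallback are all assumptions for illustration; this is not the shard manager Tom described, only the general shape of such a lookup.

```python
import hashlib

# Hypothetical shard (database) names; these could live together in one elastic pool.
SHARDS = ["tenants-db-0", "tenants-db-1", "tenants-db-2"]

# Explicit overrides, in the spirit of a shard map: a large tenant pinned to its own database.
PINNED = {"contoso": "tenants-db-contoso"}


def shard_for(tenant_id: str) -> str:
    """Return the database that holds a tenant's data."""
    if tenant_id in PINNED:
        return PINNED[tenant_id]
    # Stable hash so the same tenant maps to the same shard in every process.
    digest = hashlib.sha256(tenant_id.encode("utf-8")).digest()
    return SHARDS[int.from_bytes(digest[:4], "big") % len(SHARDS)]


if __name__ == "__main__":
    for tenant in ("contoso", "fabrikam", "northwind"):
        print(tenant, "->", shard_for(tenant))
```

Note that plain modulo hashing reshuffles tenants whenever the number of shards changes, which is one reason an explicit shard map is often preferred in practice.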

Monitoring:

On monitoring, he recommended training your developers to use their own toolchain and automated tests, because monitoring should be a shared responsibility.

Here are some good practices he suggested for monitoring:

- Enrich your telemetry

- Health checks

- Handling alerts

- Write Root cause analysis (RCA)

Webhooks:

For webhooks, he suggested several points to follow:

- Provide good DNS names for your services

- Do not reduce your API security because of a 3rd party

- Always route webhooks through an API gateway

- Provide user-friendly webhooks

Embrace Change:

Finally, he showed how they ship their product with DevOps, and he concluded with the sentence “We live in a world of constant change, do change”.

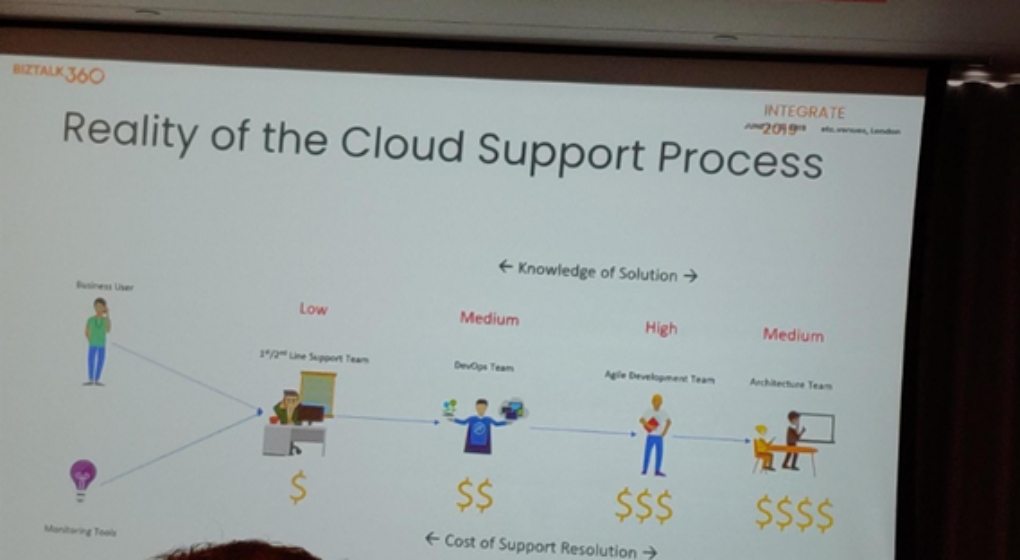

Lowering the total cost of ownership of your Serverless solution with Turbo360 – Michael Stephenson

Michael Stephenson, freelance cloud specialist and architect, started his session on how Turbo360 reduces the total cost of ownership of your serverless solution. Let’s see what Microsoft does to reduce its total cost.

- Microsoft provides a lot of services like Function Apps, Service Bus, Logic Apps and they ensure that the core platforms do their job in an effective manner

- The DevOps team should ensure that they divide the roles based upon resources in different regions

- This can be easily done using Turbo360 by the powerful concept called Composite Application and the support team can be assigned based upon the entities associated in a respective composite application

- Using democratization, you can make functions available for less experienced or less skilled people. However, there is a chance where a less skilled support person can break your existing business solution

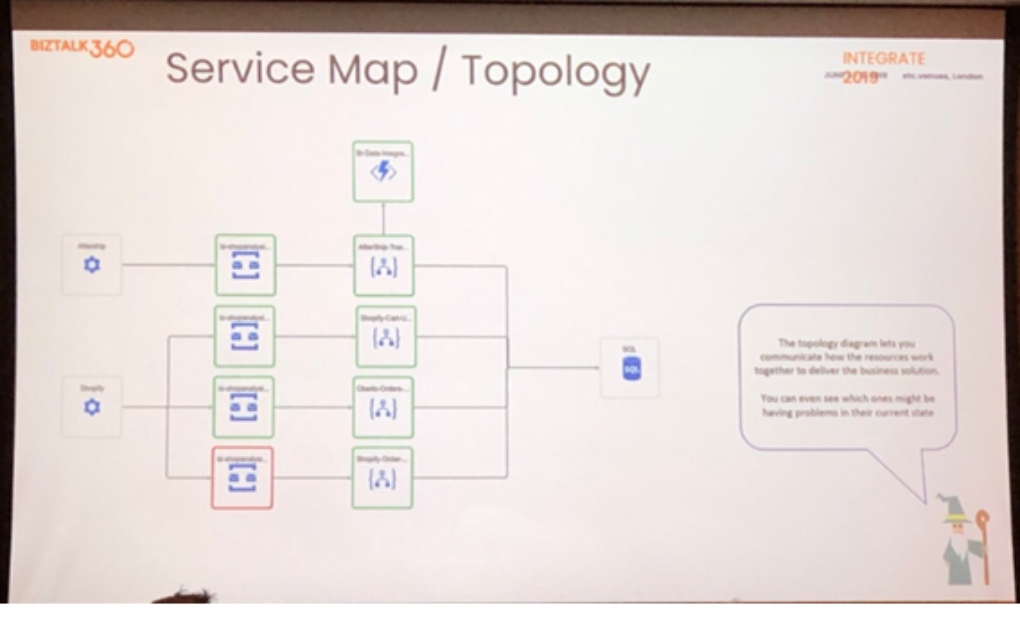

Service Map/Topology in Turbo360

The Topology feature will be very useful to create a topology diagram that represents the business orchestration of your composite application. The diagram can be created with the entities that are part of a composite application.

BAM in Turbo360

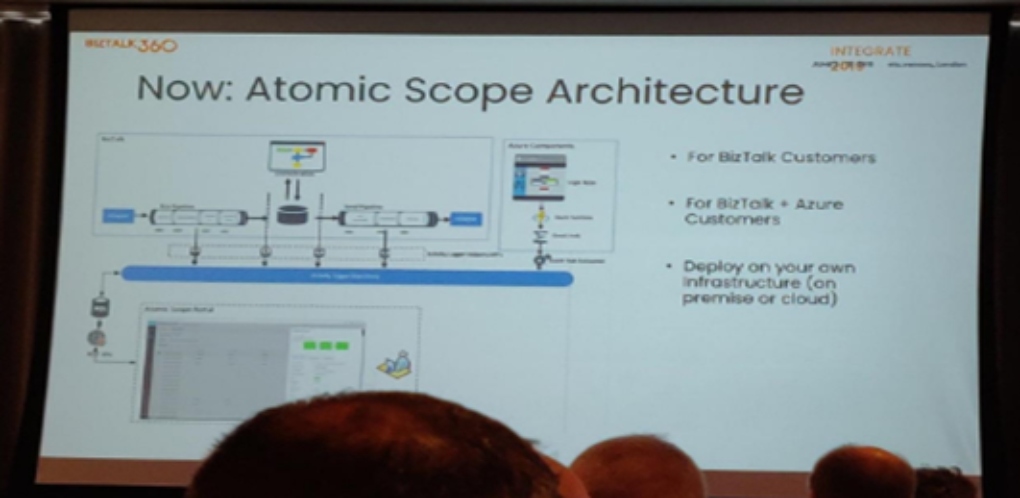

Atomic Scope is an end-to-end monitoring tool for Azure Integration Services with rich business context. It supports three types of solutions:

- BizTalk only solution

- Azure only solution

- Hybrid Integration solution

The below image depicts the current architecture of Atomic Scope.

This is where Turbo360 became even more powerful: we had the idea of integrating Atomic Scope with Turbo360, so we decided to bring the tracking capability into Turbo360.

- You are now able to configure business processes in Turbo360 portal

- You also get a simplified SaaS provisioning (we set up and manage Atomic Scope infrastructure behind the scenes)

- Many customers were asking for a combo of these two products and we think this is the right way to do it.


Key Features to democratize support in Turbo360

Some of the key features that democratize support are,

- Composite Application – Group your entities effectively

- Service Map / Topology – Represent your business orchestration of respective composite application

- Activities – Schedule activities to send messages to queues/topics, etc. and be able to purge blobs

- Monitoring – You can make use of the different types of monitors available in Turbo360 to monitor your Azure entities

- BAM – Get an end to end visibility of your business process

- Governance Auditing – Maintains logs of the user activities in the system

Microsoft Integration, the Good the Bad and the Chicken Way – Nino Crudele

Nino Crudele is back to INTEGRATE with great charm, energy and his signature style in presenting sessions. This year he is a Certified Ethical Hacker as well. Congrats to him!

He started the session by noting his connection with Paolo Salvatori, Principal Program Manager at Microsoft and a good friend of his, recalling their days working together on BizTalk.

He shared his experiences of leading a cloud team adopting Azure. He still considers BizTalk a top option for complex on-premises and hybrid mediation.

Adopting Azure comes with its own challenges, and the session covered how to avoid side effects when moving to Microsoft Azure.

The real challenge is not the Technology, but to embrace the Azure Governance – which is a massive and complex topic. Governance is everything – without using Azure Governance, you cannot even think of using Microsoft Azure.

Avoid Chicken way – don’t struggle, be brave

Azure Scaffold, the principle behind Microsoft Azure, has been in transition and now includes Security, Cost Management, Identity & Access, Automate, Templates & DevOps, and Monitor & Alert.

What exactly is a Management group

Once an agreement is established with Microsoft, an account is created with a Management Group. Within a Management Group, you can create subscriptions, create resource groups and then tags.

Policies in Management Group

- Do not try to manage resources directly from the Management Group.

- Organize subscriptions in management groups, then configure security and cost

- Policies can be disabled and enabled whenever required

How to organize the Roles

Managing roles is very important. There are multiple roles, such as Owner, Reader, and Contributor. Owner should be used for delegation only.

Global Admin is the god of Azure governance and can see whatever happens. Do not give this access to anybody for a long time; use Privileged Identity Management to grant it for a limited time.

APIs are more powerful than the Azure portal. A massive framework is built to make Azure operate through APIs. Cost is the number one problem in Azure. You can have a lot of fancy and tech-savvy tools like Power BI with analytics, but the finance team loves Excel and wants to keep things simple.

Nino suggests organizing costs at the department and project level.

What the Finance department will care about

- Totals by Department

- Totals by Location

Departments or projects may be distributed in multiple regions.
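
The totals Finance cares about are simple group-by sums once each cost row carries a department and a location. The rows and field names below are made up for illustration; a real export would have its own column names.

```python
from collections import defaultdict

# Hypothetical cost rows; the field names are assumptions, not a real export format.
ROWS = [
    {"department": "Finance", "location": "westeurope", "cost": 120.0},
    {"department": "Finance", "location": "northeurope", "cost": 30.5},
    {"department": "Sales", "location": "westeurope", "cost": 75.0},
]


def totals_by(rows: list, key: str) -> dict:
    """Sum the cost column per distinct value of `key`."""
    totals = defaultdict(float)
    for row in rows:
        totals[row[key]] += row["cost"]
    return dict(totals)


if __name__ == "__main__":
    print(totals_by(ROWS, "department"))
    print(totals_by(ROWS, "location"))
```

Because one department can be spread over several regions, the same rows are summed twice along different keys rather than nested.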

Getting a discount from Microsoft

Use the price sheet from the Azure portal. Match the cost against usage and try to negotiate a discount for specific services rather than for everything.

Security

This is important, and there are a lot of tools, such as Burp Suite, Nmap, Snort, Metasploit, Wireshark, and Logstalgia.

He suggests having a dedicated team and resources to manage security. It is probably good to outsource, but it is best to use a different company each time for pen testing and security maintenance.

Managing Networking is the Core

Plan your IP schemas and centralize security. Peering of VNets may be required. A good practice is to use a centralized firewall such as FortiGate.

He then touched upon another problem: DDoS attacks. DDoS protection can be enabled in the Security Center.

Logstalgia helps to analyze how packets travel around a network as they hit web pages, endpoints, etc. A DDoS attack is visualized well.

Use Naming Standards

It is a must in Azure. He suggested a tip to use Excel agent to create VMs in Azure with desired naming standards.

Lack of Consolidation

Managing all of this may need more knowledge and support. If your company has an Enterprise Agreement, support is free. Create a support ticket in the portal; you get not only technical support but also advisory from Microsoft.

There are a lot of options in Azure, each with pros and cons.

One such possibility is continuous deployment through Automation, ARM templates, and PowerShell. An ARM template may have security issues in terms of script injection. He suggests not trusting anybody, as there is always a possibility that a support engineer could inject scripts into PowerShell. The advice: hashing is integrity. Any change to a PowerShell script should raise an alert so that execution can be stopped.
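
"Hashing is integrity" can be sketched as comparing a script's current hash with the hash recorded when it was approved, and refusing to run it on a mismatch. The function names and the sample script text are hypothetical; this is an illustration of the idea, not Nino's tooling.

```python
import hashlib


def sha256_of(content: bytes) -> str:
    """Hex digest of the script content."""
    return hashlib.sha256(content).hexdigest()


def is_unchanged(content: bytes, approved_hash: str) -> bool:
    """True only if the content still matches the hash recorded at approval time."""
    return sha256_of(content) == approved_hash


if __name__ == "__main__":
    approved_script = b"Write-Host 'deploy'"           # content at review time
    approved_hash = sha256_of(approved_script)

    tampered_script = approved_script + b"; Remove-Item x"
    for name, body in (("approved", approved_script), ("tampered", tampered_script)):
        if is_unchanged(body, approved_hash):
            print(name, "-> safe to execute")
        else:
            print(name, "-> hash mismatch, raise an alert and stop")
```

The approved hash has to be stored somewhere the script's editors cannot also change, otherwise the check proves nothing.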

Advanced Troubleshooting

You need to check how the firewall is configured and who is using which ports. You cannot use the Azure portal for all of this.

He has collected some 2000+ APIs that he uses for troubleshooting. He can pass subscription details as a parameter and get all the details.

Created heuristic view

Nino emphasized the need to visualize this data and the correlations within it. He shared a tip on how to present the data collected from all these APIs in a sensible form in Excel for further analysis.

Documentation is very important

He also built a tool, Aziverso.com, that brings cost management into Microsoft Office. Another tool, Cloudock, provides better documentation for the cloud architecture.

He presented this valuable session in a fun-filled manner for the audience to be well engaged throughout.

Creating a Processing Pipeline with Azure Function and AIS – Wagner Silveira

The session started with a case study comparing how a solution was built a year earlier with the options available for the same solution now. One of his clients needed a solution that would process a large volume of EDI messages, enrich them with data from a SQL Server database in the cloud, and push them into a big data repository for reporting and data mining. The solution had to allow control of retries and notification for failed messages, including visibility of where a single message was in the entire process.

Here are some of the requirements in detail;

- The message would need to be received via a secure HTTP interface.

- A copy of the message should be stored for auditability.

- After the message was successfully received, the process should guarantee the delivery of the message.

- An invalid message should be rejected at the beginning of the process.

- The solution should scale without requiring much intervention

- The operations team should be able to trace the process and understand where a single message was in the process and be notified in case of any failures.

- The process should allow for extended validation and extra processing steps. It should also allow the big data repository or the processing of the data to be replaced without a huge impact on the system.

The logical design of the requirement had components like Inbound API, Inbound Processing pipelines (Logic Apps, Service Bus, Integration Accounts), Outbound Processing Pipeline (Logic Apps, Service Bus), Staging repository, External Repository, Outbound API, Big Data Repository and finally App Insights.

Reality checks from the initial logical design

- The message sizes were too big to use Service Bus as the messaging repository

- The volume of messages and the number of steps required to process them would imply a sizeable bill for Logic Apps, which could be hard to swallow

- EDIFACT Schema was not available

- Higher Operational cost

- Lack of end to end monitoring

The reality checks called for an updated solution. It used Azure Functions with .NET components in the inbound and outbound processing pipelines, and a storage account together with Service Bus for the claim check pattern.

Difference between the two solutions

- Azure Functions

  - EDIFACT support via a .NET package

  - Claim check pattern to use Service Bus

  - Ability to cut the cost with dedicated instances

- Azure Storage

  - Payload is under control for the claim check pattern

- Application Insights

  - End-to-end traceability using the correlation ID

  - Track a message through the entire process

  - Allow exceptions to be captured the same way

So, the key components are Claim check patterns and Application Insights. He then showed a quick demo of the updated solution.
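
The claim check pattern can be sketched without any Azure SDK: the large payload goes to storage, and only a small reference (plus the correlation ID) travels through the queue. The dictionary and deque below are in-memory stand-ins for a storage account and Service Bus, and all names are hypothetical.

```python
import uuid
from collections import deque

blob_store = {}   # stands in for a storage account container
queue = deque()   # stands in for a Service Bus queue or topic


def send_with_claim_check(payload: bytes, correlation_id: str) -> str:
    """Store the payload, then enqueue only a small reference to it."""
    blob_name = f"{correlation_id}/{uuid.uuid4()}"
    blob_store[blob_name] = payload
    queue.append({"correlation_id": correlation_id, "blob_name": blob_name})
    return blob_name


def receive_with_claim_check() -> tuple:
    """Dequeue the reference and redeem it for the full payload."""
    message = queue.popleft()
    return message["correlation_id"], blob_store[message["blob_name"]]


if __name__ == "__main__":
    send_with_claim_check(b"x" * 1_000_000, "corr-1")
    correlation_id, payload = receive_with_claim_check()
    print(correlation_id, len(payload))
```

Carrying the correlation ID on the small message is what lets each processing step log against the same ID for end-to-end tracing.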

Exception management and retries on Functions

- Catch blocks using a notify-and-throw pattern

- Leveraged Functions and the Service Bus peek-lock pattern

- Adjusted max delivery count on Service Bus

- Messages that failed were delivered to Dead Letter Queues
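
The interplay of notify-and-throw, redelivery, and dead-lettering can be simulated in a few lines. This is an in-memory sketch, not Service Bus itself: the delivery count of 3 is an assumed value (the session only said the count was adjusted), and the function names are hypothetical.

```python
from collections import deque

MAX_DELIVERY_COUNT = 3  # assumed value; the source only says the count was adjusted


def notify(message: dict, error: Exception) -> None:
    """Placeholder for logging or alerting before the error is rethrown."""
    message.setdefault("errors", []).append(str(error))


def handle(message: dict, processor) -> None:
    """Notify-and-throw: record the failure, then let it propagate."""
    try:
        processor(message)
    except Exception as error:
        notify(message, error)
        raise


def pump(queue: deque, dead_letters: list, processor) -> None:
    """Tiny stand-in for peek-lock delivery: a failed message is redelivered
    until its delivery count is exhausted, then it is dead-lettered."""
    while queue:
        message = queue.popleft()
        message["delivery_count"] = message.get("delivery_count", 0) + 1
        try:
            handle(message, processor)      # no exception means the message is completed
        except Exception:
            if message["delivery_count"] >= MAX_DELIVERY_COUNT:
                dead_letters.append(message)
            else:
                queue.append(message)       # lock abandoned, message is redelivered


if __name__ == "__main__":
    def always_fails(message: dict) -> None:
        raise ValueError("invalid segment")

    dlq = []
    pump(deque([{"id": 1}]), dlq, always_fails)
    print(dlq)
```

Rethrowing after notifying matters: swallowing the exception would complete the message and it would never reach the dead letter queue.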

Dead letter Queue Management

- Logic Apps polling of the subscriptions' dead letter queues was reduced to every 6 hours

- Each subscription DLQ could have its own logic, such as:

  - Reprocessing count

  - Resubmission logic

  - Notification logic

- All DLQ messages go to different blob storages with email notifications

- Send the error blob storage notifications

1 year later

If the same solution were built today, a year later, what options would be available? Among the new technologies, here are some that suit this requirement:

- Integration Service Environment – with options for on-premises

- Azure Durable Functions

- Event Grid

In this case, they still thought Functions would be a good fit, as they allow more flexibility.

Newly released features now have more impact. Some of the important features released in the last year are:

- Azure Functions Premium

- Integrated support for Key Vault

- Integrated support for MSI

- Virtual network support + service endpoints

Lessons Learned

- Review the fine print – know the limitations and the project volume and match that with available technologies

- Operational cost is a design consideration

- Make the best of each technology – Understand the price of each technology vs how well they fit together

- Think about the big picture – Don’t focus on short-term needs – spend more time on design

Summary

- Weigh the technology options available

- Think about the operational cost of your integrations

- Understand how your solution fits into the big picture

- Understand the components' roadmap

Integrate 2019 Day 1 and 2 Highlights

Integrate 2019 Day 1 Highlights

Integrate 2019 Day 2 Highlights

This blog was prepared by

Arunkumar Kumaresan, Pandiyan Murugan, Mohan Nagaraj, Nishanth Prabhakaran, Saranya Ramakrishnan, Senthil Palanisamy, Suhas Parameshwara