In the previous post “How to deal with large Service Bus messages”, we touched on message size and how to reduce it when a message exceeds the maximum allowed by the underlying service. Each of the approaches discussed has its pros and cons.

Reduce the message size

This is the most naïve approach. In the real world, reducing message size is not always possible. For instance, assume the maximum allowed message size is 64 KB. The data and metadata all have to fit within 64 KB. This is possible for simple messages. For more complex messages, or messages that contain business data you cannot reduce to the desired size, this approach is unrealistic.

Use optimal serialization

Serialization can influence message size, but it cannot fix the problem. Serializing a message as JSON rather than XML will reduce the overall payload size to some extent, but not drastically. You can reduce it further by selecting a binary serialization format. The trade-off is the readability of the message. Even a binary serializer cannot compact messages beyond a certain level. If binary data such as a PDF file is sent along with a message, you can compress the PDF, but you cannot miraculously reduce it from a few megabytes to the 64 KB we assume to be our message limit.
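To make the point concrete, here is a minimal sketch that compares the JSON and XML sizes of the same message. The `OrderPlaced` type, its properties, and the 64 KB constant are assumptions made up for this illustration, and the serializers used (`System.Text.Json` and `XmlSerializer`) are just two readily available choices.

```csharp
using System;
using System.IO;
using System.Text;
using System.Text.Json;
using System.Xml.Serialization;

// Hypothetical message type, used only for this comparison.
public class OrderPlaced
{
    public string OrderId { get; set; } = "";
    public string Customer { get; set; } = "";
    public decimal Total { get; set; }
    public string Notes { get; set; } = "";
}

public static class SerializationSizes
{
    // The message size limit assumed throughout this post.
    public const int QueueLimitBytes = 64 * 1024;

    // Size in bytes of the message serialized as UTF-8 JSON.
    public static int JsonSize(OrderPlaced message) =>
        JsonSerializer.SerializeToUtf8Bytes(message).Length;

    // Size in bytes of the message serialized as UTF-8 XML.
    public static int XmlSize(OrderPlaced message)
    {
        var serializer = new XmlSerializer(typeof(OrderPlaced));
        using var stream = new MemoryStream();
        using (var writer = new StreamWriter(stream, new UTF8Encoding(false)))
        {
            serializer.Serialize(writer, message);
        }
        // ToArray still works after the writer has closed the stream.
        return stream.ToArray().Length;
    }

    public static void Main()
    {
        var small = new OrderPlaced { OrderId = "42", Customer = "Contoso", Total = 12.5m };
        var large = new OrderPlaced
        {
            OrderId = "43",
            Customer = "Contoso",
            Total = 12.5m,
            Notes = new string('x', 100_000) // payload that cannot be trimmed
        };

        Console.WriteLine($"small: JSON {JsonSize(small)} bytes, XML {XmlSize(small)} bytes");
        Console.WriteLine($"large: JSON {JsonSize(large)} bytes, limit {QueueLimitBytes} bytes");
    }
}
```

JSON comes out smaller than XML for the small message, but the large message stays over the limit whichever format is chosen.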

Use the claim check pattern

The pattern is implemented by storing the message data in a persistent store and passing a reference to the persisted data in the message. The receiver is responsible for retrieving the message data from that store and reconciling it with the message.
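Stripped of any particular service, the idea can be sketched in a few lines. In this sketch a dictionary stands in for the persistent store and an in-memory queue stands in for the messaging service. Both are assumptions for illustration only, as are the `Send` and `Receive` names.

```csharp
using System;
using System.Collections.Generic;

public static class ClaimCheckSketch
{
    // In-memory stand-ins (assumptions for illustration): a dictionary plays
    // the persistent store and a queue plays the messaging service.
    private static readonly Dictionary<string, string> Store = new Dictionary<string, string>();
    private static readonly Queue<string> Messages = new Queue<string>();

    // Sender side: persist the body, then send only the reference (the claim).
    public static void Send(string body)
    {
        var claim = Guid.NewGuid().ToString();
        Store[claim] = body;
        Messages.Enqueue(claim);
    }

    // Receiver side: read the claim from the message, then fetch the body.
    public static string Receive()
    {
        var claim = Messages.Dequeue();
        return Store[claim];
    }

    public static void Main()
    {
        Send("A large message body");
        Console.WriteLine(Receive()); // prints "A large message body"
    }
}
```

The message travelling through the queue is only the GUID, so its size stays constant no matter how large the stored body is.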

Choosing a messaging service

To continue with the scenario defined above, we’ll use a messaging service whose message size limit is close to the one used in the example: Azure Storage Queues (ASQ).

Azure Storage Queues is one of the veteran Azure services. Part of the Storage service, it offers basic queuing capabilities and can store millions of messages, up to the total capacity limit of the Storage account used. The service limits the message body to 64 KB. It does not allow message metadata in the form of headers. The message body is the payload, and senders and receivers have to agree upon its serialization and format.

Choosing a persistence store

With Azure Storage Queues selected as the messaging service, Azure Storage Blobs is the logical choice for the persistence store. While there are possible alternatives, Blob storage is cheap, conveniently associated with the same storage account used for Storage Queues, and offers a very generous blob size (up to 4.77 TB). No message should be this large, but it’s comforting to know there’s enough space if it is needed.

With the two services selected and in place, the next step is to write the code that implements the pattern.

Message flow without claim check pattern

Sending messages

Before implementing the claim check pattern, let’s have a look at the base code required to send a message using the Azure Storage Queues service. The code requires the WindowsAzure.Storage NuGet package.

// parse the storage account from the connection string

var cloudStorageAccount = CloudStorageAccount.Parse(connectionString);

// create cloud queue client

var client = cloudStorageAccount.CreateCloudQueueClient();

// get a reference to the queue named “receiver” that we’ll send messages to

var queue = client.GetQueueReference("receiver");

// in case queue doesn’t exist, create it

await queue.CreateIfNotExistsAsync();

// create a message

var message = new CloudQueueMessage("A message to send");

// send it

await queue.AddMessageAsync(message);

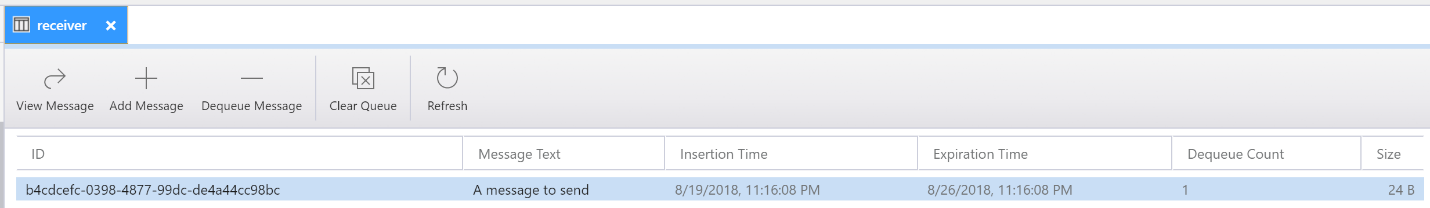

Using the code above, a message is successfully sent to the “receiver” queue, where it waits until it is received. Note that by default, a message is stored for seven days only. This is also known as the message time-to-live, or TTL.

To store messages for longer than seven days, the TTL parameter has to be specified when sending messages.
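As a rough sketch, the TTL can be passed through the longer `AddMessageAsync` overload of the same package. The helper name `SendWithTtlAsync` and the 30-day value are made up for this example, and it assumes a library and service version recent enough to accept TTLs over seven days.

```csharp
using System;
using System.Threading.Tasks;
using Microsoft.WindowsAzure.Storage.Queue;

public static class TtlExample
{
    // Hypothetical helper: sends a message that should be kept for 30 days
    // instead of the default seven.
    public static Task SendWithTtlAsync(CloudQueue queue, string body)
    {
        var message = new CloudQueueMessage(body);

        return queue.AddMessageAsync(
            message,
            TimeSpan.FromDays(30), // time-to-live
            null,                  // initial visibility delay: visible immediately
            null,                  // queue request options: defaults
            null);                 // operation context: none
    }
}
```

With the `queue` reference from the sending snippet, the call would be `await TtlExample.SendWithTtlAsync(queue, "A message to send");`.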

Receiving messages

// parse the storage account from the connection string

var cloudStorageAccount = CloudStorageAccount.Parse(connectionString);

// create cloud queue client

var client = cloudStorageAccount.CreateCloudQueueClient();

// get a reference to the queue named “receiver” that we’ll receive messages from

var queue = client.GetQueueReference("receiver");

// in case queue doesn’t exist, create it

await queue.CreateIfNotExistsAsync();

// receive a message (GetMessageAsync returns null when the queue is empty)

var message = await queue.GetMessageAsync();

// use message body

Console.WriteLine(message.AsString);

// delete it

await queue.DeleteMessageAsync(message);

Implementing the claim check pattern

Now let’s add the claim check implementation. There are several ways to implement it. The simplest is to replace the message body with the name of the blob used to store the original message body. We’ll take the code created earlier to send and receive messages and modify it to introduce a simple claim check.

Sending messages

// queue code from the previous sender snippet, omitted

// blob client to access Blob storage

var blobClient = cloudStorageAccount.CreateCloudBlobClient();

// using container name agreed upon by sender and receiver parties

var container = blobClient.GetContainerReference("blobs");

// in case container doesn’t exist, create it

await container.CreateIfNotExistsAsync();

// message body (assuming it’s more than 64KB)

var messageBody = "A message to send";

// blob name to be used to store message body

var blobName = Guid.NewGuid().ToString();

// upload message body to Storage blob rather than send as a message to allow more than 64KB

var blob = container.GetBlockBlobReference(blobName);

await blob.UploadTextAsync(messageBody);

// send message with blob name rather than actual content

var message = new CloudQueueMessage(blobName);

await queue.AddMessageAsync(message);

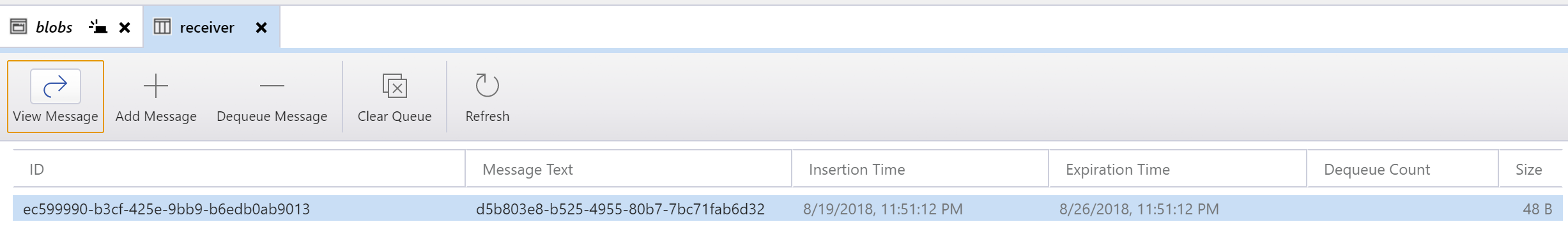

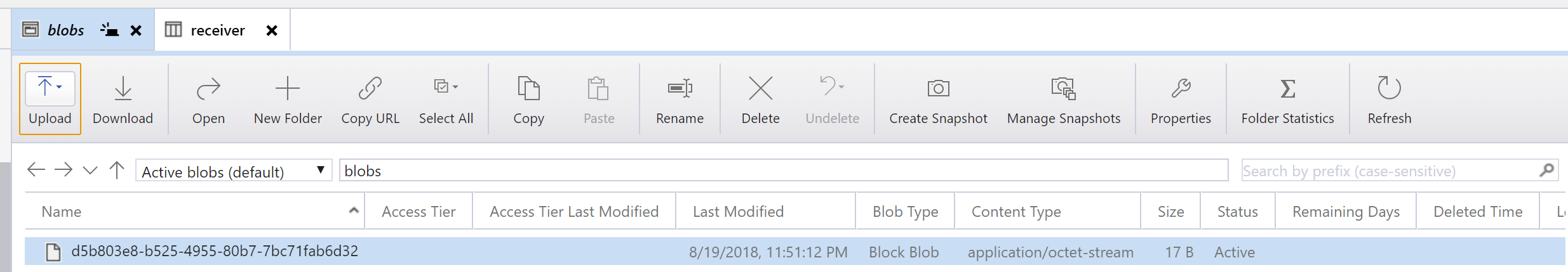

A quick examination of the queue shows that the message was sent with the content “d5b803e8-b525-4955-80b7-7bc71fab6d32”. This is the GUID used as the name of the blob that persists the actual contents intended for the message.

Receiving messages

// queue code from the previous receiver snippet, omitted

// blob client to access Blob storage

var blobClient = cloudStorageAccount.CreateCloudBlobClient();

// using container name agreed upon by sender and receiver parties

var container = blobClient.GetContainerReference("blobs");

// in case container doesn’t exist, create it

await container.CreateIfNotExistsAsync();

// get a message

var message = await queue.GetMessageAsync();

// get the name of the blob holding the message body

var blobName = message.AsString;

// get reference to the blob to read the contents

var blobReference = container.GetBlockBlobReference(blobName);

// get the content and print it

var content = await blobReference.DownloadTextAsync();

Console.WriteLine(content);

// last step – delete the message when the blob is successfully read

await queue.DeleteMessageAsync(message);

Et voilà! We’ve got a basic claim check pattern implemented for Storage queues to overcome the limitation of 64 KB in a few lines of code.

There’s one thing that the updated receiver is not doing though, and you might have already noticed it. It doesn’t delete the blob. Spotted that? Good catch. Let’s talk about some edge cases and things to consider.

Edge cases and considerations

Blobs cleanup

In my naïve implementation, I have not bothered to clean up the blob once the message is consumed (read and deleted). Deleting the blob right after processing could work for commands, which are messages with a single destination. It does not work for events. With event messages, there are potentially multiple consumers, and it is impossible to know deterministically when all of them are finished and the blob can be removed. This poses an interesting challenge: how to identify when those blobs can be cleaned up. One option is to keep them in storage for good. After all, storage is cheap.

Another option is to identify the longest possible time it would take all the consumers to process a given event message type and set up a cleanup process to remove blobs after that period. In a more complex system, consumers could report the event types they handle to a centralized repository. The same repository would store details of the specific events processed by consumers and be in charge of issuing a cleanup once all consumers are done with a specific message of a certain event type.
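The time-based option boils down to picking the blobs that are older than the agreed retention period. Below is a minimal sketch of that selection step only. The `SelectExpired` name, the dictionary of last-modified timestamps, and the 14-day retention are all assumptions for illustration.

```csharp
using System;
using System.Collections.Generic;
using System.Linq;

public static class BlobCleanupSketch
{
    // Returns the names of blobs last modified longer ago than the retention period.
    public static List<string> SelectExpired(
        IReadOnlyDictionary<string, DateTimeOffset> lastModifiedByBlobName,
        DateTimeOffset now,
        TimeSpan retention)
    {
        return lastModifiedByBlobName
            .Where(entry => now - entry.Value > retention)
            .Select(entry => entry.Key)
            .OrderBy(name => name, StringComparer.Ordinal)
            .ToList();
    }

    public static void Main()
    {
        var now = new DateTimeOffset(2024, 1, 31, 0, 0, 0, TimeSpan.Zero);
        var blobs = new Dictionary<string, DateTimeOffset>
        {
            ["old-blob"] = now.AddDays(-20),
            ["recent-blob"] = now.AddDays(-2),
        };

        foreach (var name in SelectExpired(blobs, now, TimeSpan.FromDays(14)))
        {
            Console.WriteLine(name); // prints "old-blob"
        }
    }
}
```

In a real cleanup job, the timestamps would come from listing the container, and each selected name would be deleted through its blob reference, for example with `DeleteIfExistsAsync`.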

Conditional claim check

In the sample above, the message body is nowhere near the maximum limit. With the claim check pattern in place, every message, regardless of its size, has its content stored as a blob first and is then sent with a reference to that blob. For small messages, this can be very inefficient. Storing a blob with a few bytes, sending a message with a reference to that blob, and retrieving the blob once the message is delivered all add latency, which translates into lower throughput.

A more sophisticated approach is to adjust the implementation and apply the claim check pattern only when the message body exceeds the maximum size or a chosen threshold. With a conditional claim check, small messages skip the additional steps involving blobs and can be processed much faster.
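One way to sketch a conditional claim check is shown below. Because Storage Queues has no headers, the receiver needs some way to tell an inline body from a blob reference. The `inline:` and `blob:` prefixes, the `Wrap` and `Unwrap` names, and the in-memory dictionary standing in for Blob storage are all assumptions for this illustration.

```csharp
using System;
using System.Collections.Generic;
using System.Text;

public static class ConditionalClaimCheck
{
    // Hypothetical markers: the sketch tags the message text itself so the
    // receiver knows whether it holds the body or a blob name.
    private const string InlinePrefix = "inline:";
    private const string BlobPrefix = "blob:";

    // Builds the queue message text; bodies over the threshold go to the store.
    public static string Wrap(string body, int thresholdBytes, IDictionary<string, string> store)
    {
        if (Encoding.UTF8.GetByteCount(body) <= thresholdBytes)
        {
            return InlinePrefix + body;
        }

        var blobName = Guid.NewGuid().ToString();
        store[blobName] = body;
        return BlobPrefix + blobName;
    }

    // Recovers the original body from the queue message text.
    public static string Unwrap(string messageText, IDictionary<string, string> store)
    {
        if (messageText.StartsWith(InlinePrefix, StringComparison.Ordinal))
        {
            return messageText.Substring(InlinePrefix.Length);
        }
        if (messageText.StartsWith(BlobPrefix, StringComparison.Ordinal))
        {
            return store[messageText.Substring(BlobPrefix.Length)];
        }
        throw new FormatException("Unknown message format.");
    }

    public static void Main()
    {
        var store = new Dictionary<string, string>();

        var small = Wrap("A message to send", 1024, store);
        var large = Wrap(new string('x', 5000), 1024, store);

        Console.WriteLine(small);                       // prints "inline:A message to send"
        Console.WriteLine(store.Count);                 // prints 1
        Console.WriteLine(Unwrap(large, store).Length); // prints 5000
    }
}
```

The threshold should sit safely below the service limit, since the prefix and any encoding applied by the client library add to the final message size.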

Claim check pattern with Azure Service Bus

What is wonderful about the claim check pattern is that it can be used with any messaging service out there. Azure Service Bus, another major Azure messaging service, has a better maximum message size story but is still somewhat limiting. Unlike Azure Storage Queues, Service Bus offers a pluggable pipeline model that makes it easier to apply pre-sending and post-receiving processors, also known as plugins. One of those plugins is ServiceBus.AttachmentPlugin, which brings a claim check pattern implementation to Azure Service Bus or Logic Apps using Service Bus.

What’s next?

Now that you’ve learned about the claim check pattern, message size limitation is no longer a showstopper. It is important to mention that while it’s possible to send messages exceeding the maximum size, you should always assess the scenarios you’re working on and think through whether it’s a good idea to apply the claim check pattern to the systems you develop. Just because messages can be made terabytes in size doesn’t mean they should be.
