This blog is a transcript of the session “What’s new with Event Hub” presented by Shubha Vijayasarathy, Senior Program Manager for Messaging Services, at Integrate Remote 2020.

Introduction

Azure Event Hubs is a big data streaming platform and event ingestion service. It can receive and process millions of events per second. Data sent to an event hub can be transformed and stored by using any real-time analytics provider or batching/storage adapters.

Agenda

The session covered the following agenda:

- Exploring streaming

- Introduction to Kafka

- Why Event Hubs for Kafka

- Event Hubs roadmap

Interesting Statistics

The session started with some interesting statistics on the strategies enterprises use today to grow both their customer base and their revenue:

- 44% – Integrated customer analytics

- 60% – Real-time, improved customer experience

- 58% – Customer retention

- 74% – Personalization at scale

- 70% – More real-time customer analysis solutions

Take Uber as an example. It provides the following features:

- Instant notifications

- Cab locations

- Real-time feed of the ride

- Faster, optimized trips

- Ride options

- Real-time traffic updates

- Instant route-change feeds

With all of these features, the app makes each rider's trip more personal. This is where the transition happens: data, as the key ingredient, moves us from being data-driven to being customer-centric and event-centric. Previously, the concern was data at rest and the challenges of storing it, but that has changed considerably. Today the expectations are:

- Data is in motion

- Respond to and personalize data for customer experiences

- Get actionable insights

- Deliver real-time insights to apps

- Get data where it needs to be before it is needed

- Logs and events are the source of truth

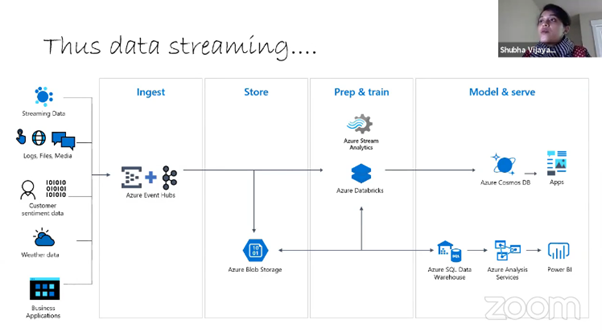

To meet these expectations, we turn to data streaming, which can be done with either Event Hubs or Apache Kafka.

Streaming Platform

A streaming platform such as Event Hubs is expected to provide:

- High availability

- Low latency

- Scalability

- Replay

- Persistence/durability

- Processing of data as it occurs

- Immutable events

Both Apache Kafka and Azure Event Hubs are platforms of this kind.

What is Apache Kafka?

Kafka is open source and has a rich ecosystem, but running it on-premises comes with its own set of challenges. Many add-ons are available around Kafka, such as:

- Kafka MirrorMaker

- Kafka Connect

- Kafka Streams

- Spark

- Storm

To deploy Kafka yourself, you must procure the hardware, install the physical network, ensure reliable power, provide physical security, configure IP networks, install the OS, and install and configure Kafka. It doesn’t stop with deployment; you also have to manage the cluster: load balancing, procuring more hardware for growth, patching the OS and Kafka, managing SSL, and so on.

To reduce this pain, you can use Event Hubs with your Kafka applications.

How Does Event Hubs Support Kafka?

Here are the key points of how Event Hubs supports Kafka:

- Event Hubs does not run or host Kafka brokers

- It supports Kafka by implementing the Kafka protocol over TCP

- Support is at the binary protocol level

- This gives an open API approach

- It is not dependent on the underlying technology

- It makes solutions cloud agnostic

- It provides versioning and compatibility

- Underneath, the same broker engine supports both AMQP and Kafka

- It lets you focus on creating value rather than on operations
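To make "implementing the Kafka protocol" a little more concrete, here is a minimal Python sketch of how a Kafka client frames a request on the TCP connection, following the public Apache Kafka protocol specification: a 4-byte big-endian size prefix, then a request header (API key, API version, correlation ID, client ID), then the request body. It builds an ApiVersions (API key 18) v0 request, whose body is empty. Because Event Hubs accepts this same wire format, an unmodified Kafka client can talk to it. This illustrates the client side of the protocol only; it is not Event Hubs' implementation, and the client ID `demo-client` is a hypothetical value.

```python
import struct

API_VERSIONS_KEY = 18  # ApiVersions request, per the Apache Kafka protocol spec


def encode_nullable_string(value):
    """Kafka NULLABLE_STRING: int16 length (-1 for null) followed by UTF-8 bytes."""
    if value is None:
        return struct.pack(">h", -1)
    data = value.encode("utf-8")
    return struct.pack(">h", len(data)) + data


def encode_request(api_key, api_version, correlation_id, client_id, body=b""):
    """Frame a request: int32 size prefix + request header v1 + body."""
    header = struct.pack(">hhi", api_key, api_version, correlation_id)
    header += encode_nullable_string(client_id)
    payload = header + body
    return struct.pack(">i", len(payload)) + payload


def decode_request_header(frame):
    """Read the size prefix and header fields back out of a framed request."""
    (size,) = struct.unpack_from(">i", frame, 0)
    api_key, api_version, correlation_id = struct.unpack_from(">hhi", frame, 4)
    (id_len,) = struct.unpack_from(">h", frame, 12)
    client_id = None if id_len < 0 else frame[14:14 + id_len].decode("utf-8")
    return size, api_key, api_version, correlation_id, client_id


if __name__ == "__main__":
    # Hypothetical client ID; correlation ID 1 lets the client match the response.
    frame = encode_request(API_VERSIONS_KEY, 0, 1, "demo-client")
    print(frame.hex())
    print(decode_request_header(frame))
```

The size prefix lets the broker read exactly one request from the byte stream, and the correlation ID lets the client match each response to its request.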

What is Event Hubs for Kafka?

Event Hubs provides an endpoint compatible with the Apache Kafka® producer and consumer APIs that can be used by most existing Apache Kafka client applications as an alternative to running your own Apache Kafka cluster. Event Hubs supports Apache Kafka producer and consumer API clients at version 1.0 and above.

Why Choose Event Hubs for Kafka?

There are four main reasons to choose Event Hubs for Kafka.

Simplify Kafka

The goal is to simplify Kafka with a zero-code setup using Event Hubs, and to make it fully managed and easy to scale. Scaling matters most when the load on Kafka grows, and the planned range is from 1 MBps to more than 5 GBps.
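As a rough illustration of what scaling means on the Standard tier, capacity there is purchased in throughput units (TUs). The sketch below assumes Azure's published per-TU quotas: up to 1 MB/s or 1,000 events/s of ingress, and up to 2 MB/s or 4,096 events/s of egress. It estimates the smallest TU count for a workload. Treat these limits as assumptions to check against current Azure documentation; the Dedicated tier is sized differently, in capacity units. The function name and the sample workload are hypothetical.

```python
import math

# Assumed per-TU limits for the Standard tier (verify against current Azure docs).
INGRESS_MB_PER_TU = 1.0
INGRESS_EVENTS_PER_TU = 1000
EGRESS_MB_PER_TU = 2.0
EGRESS_EVENTS_PER_TU = 4096


def required_throughput_units(ingress_mb_s, ingress_events_s, egress_mb_s, egress_events_s):
    """Return the smallest TU count that satisfies every limit (at least 1)."""
    needs = [
        ingress_mb_s / INGRESS_MB_PER_TU,
        ingress_events_s / INGRESS_EVENTS_PER_TU,
        egress_mb_s / EGRESS_MB_PER_TU,
        egress_events_s / EGRESS_EVENTS_PER_TU,
    ]
    # Whichever dimension is most constrained decides the TU count.
    return max(1, math.ceil(max(needs)))


if __name__ == "__main__":
    # Hypothetical workload: 5 MB/s and 3,500 events/s in; 8 MB/s and 10,000 events/s out.
    print(required_throughput_units(5, 3500, 8, 10000))
```

For the sample workload, ingress bandwidth (5 MB/s) is the binding constraint, so the estimate is 5 TUs.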

Ingest Cloud Data

With Event Hubs, you can easily move data to the cloud and integrate it with other native Azure services such as Service Bus and Azure Functions. It also lets you see your data in real time.

Gateway to Cloud

Integrating with Event Hubs gives you the full power of both the Kafka ecosystem and the Azure ecosystem. Because it is a cloud service, it can scale automatically, is easy to manage, and provides stronger security.

Azure Essentials by default

By integrating with Event Hubs, you get Azure essentials such as security, compliance, and availability; Azure Active Directory/OAuth authentication; VNet and BYOK (bring your own key); and geo/zone redundancy.

These are the main reasons to choose Event Hubs for Kafka.

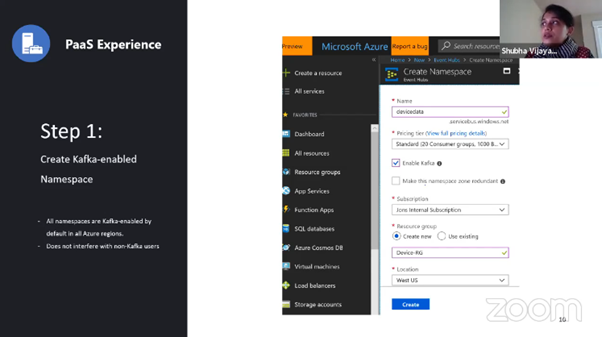

How Can You Create a Kafka-Enabled Event Hubs Namespace?

Go to the Azure portal and create an Event Hubs namespace with Kafka enabled.

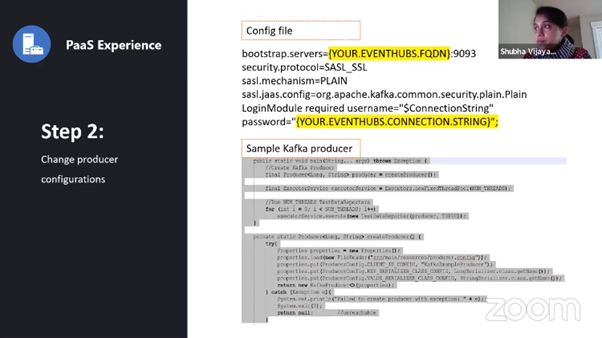

Then, in your Kafka client configuration, specify the namespace host name with the Kafka port (9093) and the connection string of the Event Hubs namespace.
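The configuration step above can be sketched in Python, assuming the commonly documented setup for Kafka clients connecting to Event Hubs: the bootstrap server is the namespace host on port 9093, the security protocol is `SASL_SSL` with the `PLAIN` mechanism, the SASL username is the literal string `$ConnectionString`, and the password is the namespace connection string. The helper names and the sample connection string are hypothetical. The returned keys follow librdkafka (confluent-kafka) property names; other clients may differ (the Java client, for example, uses `sasl.jaas.config` instead of separate username and password properties).

```python
from urllib.parse import urlparse

KAFKA_PORT = 9093  # Event Hubs exposes its Kafka endpoint on port 9093


def parse_connection_string(conn_str):
    """Split 'Key=Value;Key=Value' into a dict (values may themselves contain '=')."""
    parts = {}
    for segment in conn_str.split(";"):
        if segment:
            key, _, value = segment.partition("=")
            parts[key] = value
    return parts


def kafka_config_from_connection_string(conn_str):
    """Build librdkafka-style client settings for an Event Hubs namespace."""
    endpoint = parse_connection_string(conn_str)["Endpoint"]
    host = urlparse(endpoint).hostname  # e.g. mynamespace.servicebus.windows.net
    return {
        "bootstrap.servers": f"{host}:{KAFKA_PORT}",
        "security.protocol": "SASL_SSL",
        "sasl.mechanism": "PLAIN",
        "sasl.username": "$ConnectionString",  # literal string, not a variable
        "sasl.password": conn_str,
    }


if __name__ == "__main__":
    # Hypothetical connection string; copy the real one from the Azure portal.
    sample = (
        "Endpoint=sb://mynamespace.servicebus.windows.net/;"
        "SharedAccessKeyName=RootManageSharedAccessKey;"
        "SharedAccessKey=abc123="
    )
    config = kafka_config_from_connection_string(sample)
    print(config["bootstrap.servers"])
```

The resulting dictionary could be passed to a Kafka client such as confluent-kafka's `Producer`, with the event hub's name used as the topic name.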

The session included a clear demo of using Kafka MirrorMaker with Event Hubs. It was very simple, and you can easily get started with step 1.

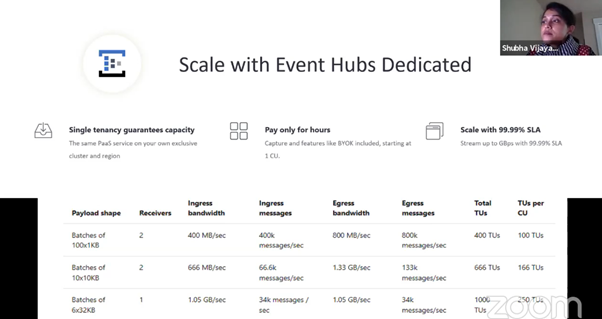

Event Hubs Standard vs. Dedicated Limits

The following compares the limits of Event Hubs Standard and Dedicated.

Below are some of the benchmarks for Event Hubs Dedicated:

Is Azure Event Hubs Kafka?

No. Azure Event Hubs' support for the Kafka protocol lets Apache Kafka clients connect to an event hub as if it were a “normal” Apache Kafka topic, for sending and receiving messages.

Azure Event Hubs Roadmap

Earlier this year, the team brought some cool features, such as:

- GA – BYOK for Dedicated clusters – Done

- GA – AAD token through OAuth bearer for Kafka – Done

- GA – Partition scale out – Done

- GA – VNet private link support – Done

- GA – Extended Retention (June 2020)

- Public Preview – Event Hubs for Azure Stack – Done

- Public Preview – Idempotent Producer (June 2020)

- Private Preview – Schema Registry (June 2020)

We can expect the following features by the third quarter of 2021:

- GA – Log Compaction

- GA – Kafka Connect Support

- GA – Self-serve cluster management and provisioning

- Public Preview – Schema Registry

- Public Preview – Premium Event Hubs SKU

Alongside these awesome features, some tentative features are also planned, such as compression, Kafka Streams, transactions, and data replication for Geo-DR.

Conclusion

In this blog, we explored some of the new features coming to Event Hubs, which is getting more powerful day by day. If you want to get started with Event Hubs, use the links below:

These links are a great way to learn more about Event Hubs. So, what are you waiting for? Check them out and solve your real-time problems with Event Hubs.