Table of contents

- INTEGRATE 2023 – Welcome Speech

- Keynote – State of Microsoft Integration

- Getting started with Azure Integration Services

- Event driven application development in the cloud

- What is new in the world of Azure API Management?

- API management for microservices in a hybrid and multi-cloud world

- API Security Deep Dive

- Crush SAP application integration lead time with Azure

- Experiences releasing APIs to API Management

- Logic Apps Standard – the Developer Experience

Day 1 of INTEGRATE 2023 set the stage for an exceptional hybrid event in London, uniting top-notch professionals in the Microsoft integration space. Here are the key highlights from the inaugural day.

#1: INTEGRATE 2023 – Welcome Speech

The event commenced with an invigorating introduction by our esteemed CEO, Saravana Kumar, a Microsoft Azure MVP. He took the stage to share insights about Kovai.co and its products.

As the session unfolded, Saravana Kumar traced the roots of INTEGRATE, emphasizing how it evolved from a modest event to the grand gathering it is today, celebrating its remarkable 11th year. The growth and success of INTEGRATE were apparent as he welcomed over 650 participants from more than 30 countries, representing different companies across the globe.

During his address, Saravana Kumar also shed light on the community initiatives by Kovai.co. These initiatives included the highly acclaimed Serverless Notes, which provides valuable resources and insights from top Azure experts.

Additionally, he highlighted the popular Azure on Air podcast, where industry experts share their experiences and knowledge. He also pointed to a valuable resource on migrating to BizTalk Server 2020.

With the participation of top experts in the Microsoft integration space, INTEGRATE 2023 was poised to deliver an exceptional experience to all attendees.

#2: Keynote – State of Microsoft Integration

The conference began with an electrifying keynote by Slava Koltovich, Principal Group Product Manager for Azure Integration Services at Microsoft. Slava highlighted the significance of digital-first strategies, with 72% of organizations starting digital transformations after COVID-19. Digital leaders achieved 1.8 times higher earnings growth, emphasizing the value of a digital-first advantage.

Slava revealed a stunning statistic: over 750 million applications will be created by 2025, surpassing the cumulative count of apps developed in the last four decades. This signals a transformative shift in software development and integration. The future promises a new era of application creation, management, and release.

Key Highlights from Slava’s Keynote

During his captivating address, Slava emphasized several key points that resonated with the audience:

- Companies are moving towards composable enterprise strategies to innovate faster

- The integration platform is the main enabler of the composable enterprise

- Businesses are looking to build modern end-to-end experiences

- The integration platform is the foundation that connects apps and data and provides easy access to services

- Organizations are looking to leverage a full bench of developers to build integration, automation and end-to-end solutions

- The role of the integration platform and the integration team is to enable developers by providing a ready-to-use, self-service platform

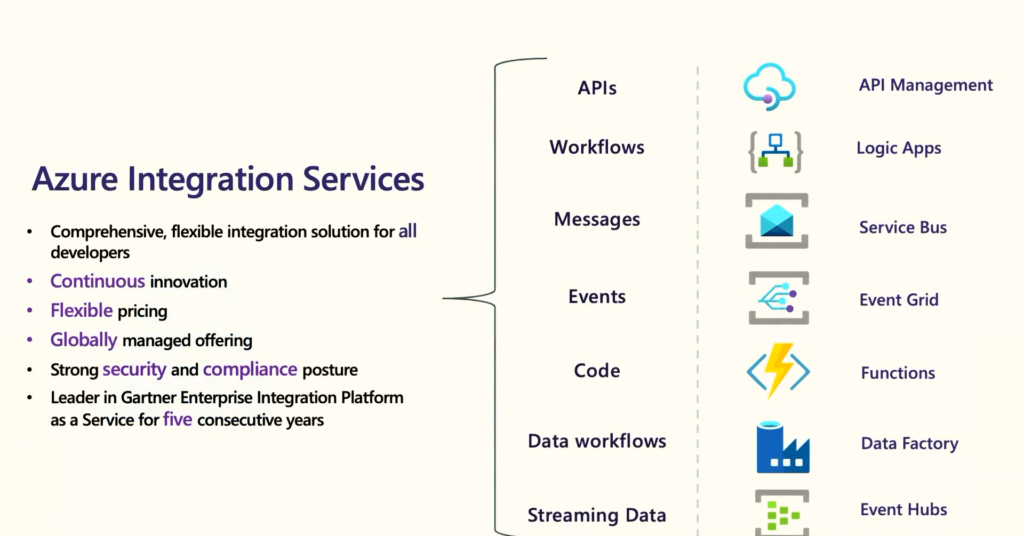

Azure Integration Services

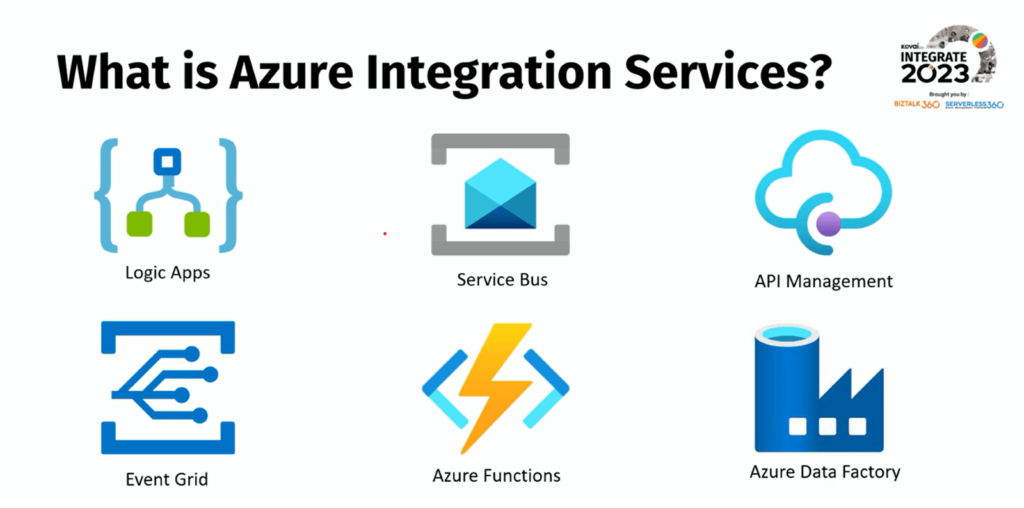

Slava addressed the broad capabilities of Azure Integration Services, encompassing everything from API integration to workflows to streaming data management. He delved into how Microsoft invests in the Azure platform to address the evolving needs of modern enterprises.

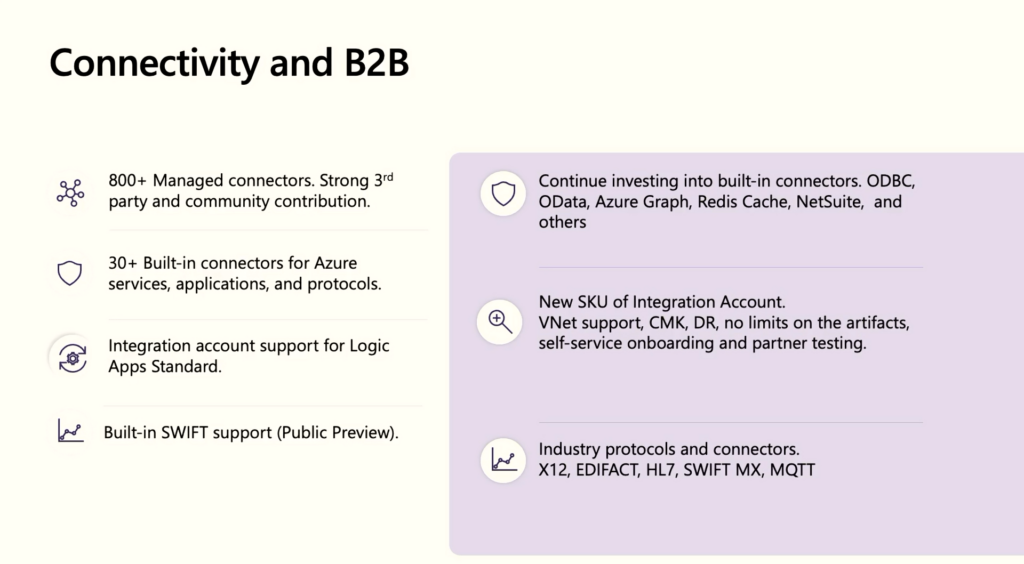

One of the key strengths of Azure Integration Services is its extensive collection of more than 800 connectors, which give users simplified connectivity to a vast array of Azure services and facilitate integration with essential business applications.

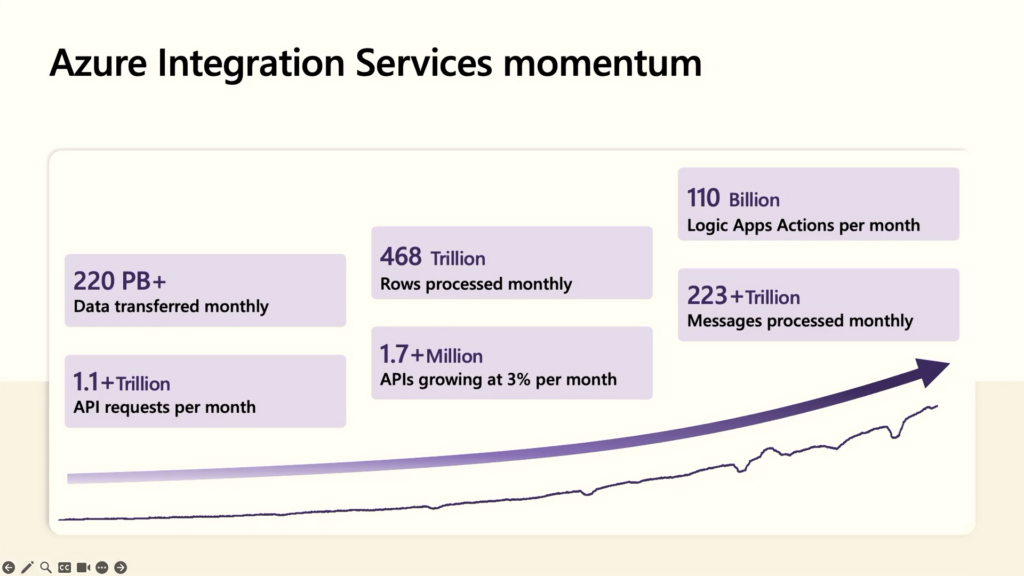

The Azure Integration Services momentum

Slava shared a graph depicting the extraordinary growth of Azure Integration Services (AIS).

Slava introduced David Cleaver from Royal Mail, highlighting their strong connection with Azure Integration Services (AIS) to stay ahead of competitors. This partnership has enabled Royal Mail to leverage AIS’s capabilities, gaining a competitive edge and delivering superior services to their customers.

Exploring Microsoft’s Latest Updates and Investments: A Glimpse into the Future

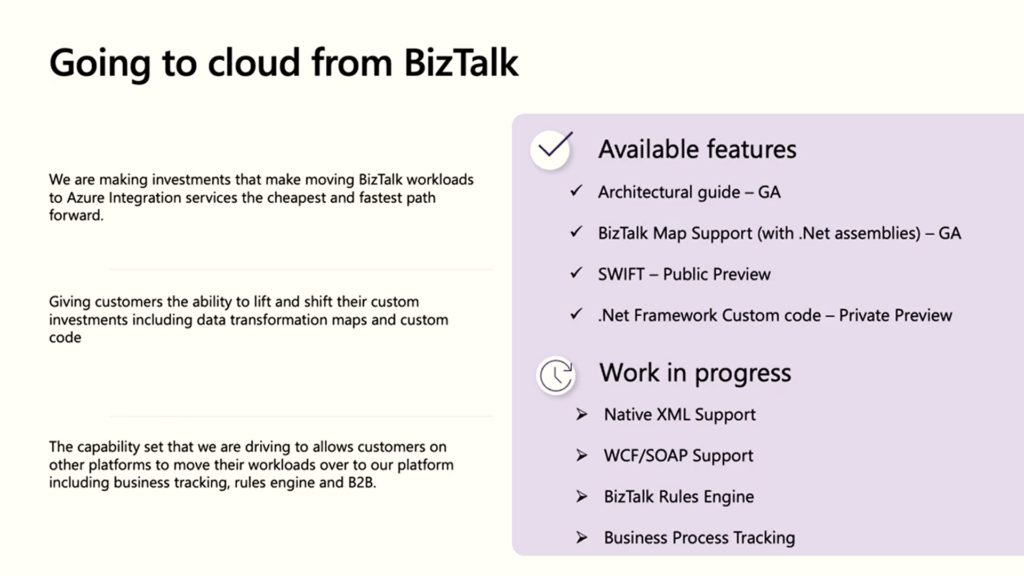

Slava’s keynote shed light on Microsoft’s active investments across all integration services, highlighting key areas of focus and strategic initiatives.

- To optimize migration in terms of cost and speed, Microsoft is working on several upcoming features. Additionally, .NET Framework custom code will be made available for preview during the summer, aiming to ease the migration process.

- Microsoft is making significant investments in connectivity and B2B integration, alongside strong partner contributions.

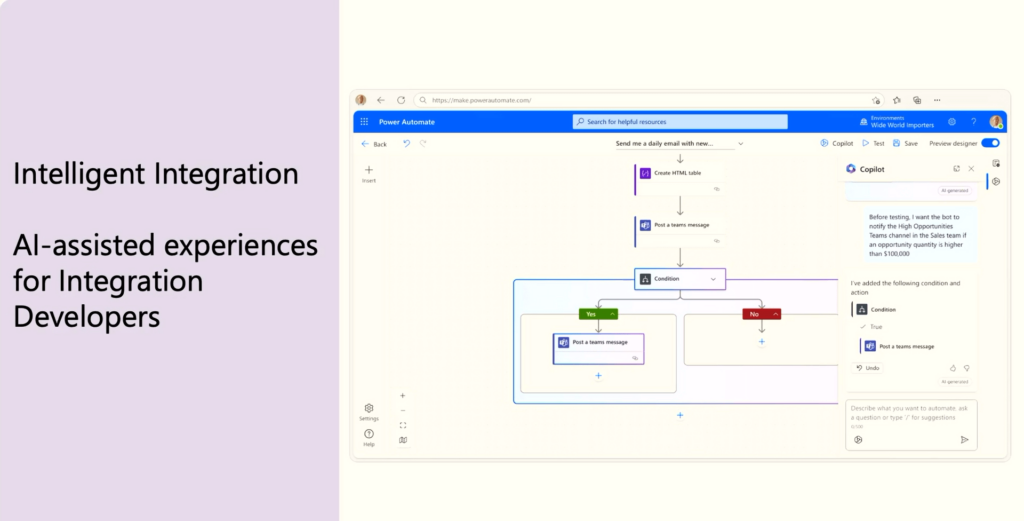

- Power Automate introduces Copilot, an AI-powered tool revolutionizing automation, enhancing productivity, and streamlining workflows.

In addition to its ongoing efforts, Microsoft is actively working on Integration “Space”, end-to-end integration application monitoring, and business process tracking. This development showcases Microsoft’s commitment to giving businesses comprehensive tools to monitor, analyze and optimize their integration processes.

#3: Getting started with Azure Integration Services

Stephen W Thomas kickstarted the session with a lighthearted introduction and a recollection of his previous INTEGRATE experience. He drove the session from an expert perspective, although he fondly calls himself a drag-and-drop developer!

He explained the importance of Azure Integration Services and why now is the right time to get started with it. Every business operates across virtual landscapes and needs a seamless integration system for smooth operations.

Azure Integration Services comprises several key components, including Logic Apps, Service Bus, Event Grid, Data Factory, and API Management.

The speaker discussed the following market trends:

- The overall BizTalk requests are down but at the same time, certain rates are up.

- The demand for Azure Integration Architects is high, which indicates that users are interested in learning more about integration.

- A huge demand for Azure Logic Apps resources was observed.

The AI revolution and AI tools are the talk of the town, and the speaker described how ChatGPT has complemented his work and increased his productivity.

He also said that now is the right time to plan your BizTalk migration and define your future integration strategy.

#4: Event driven application development in the cloud

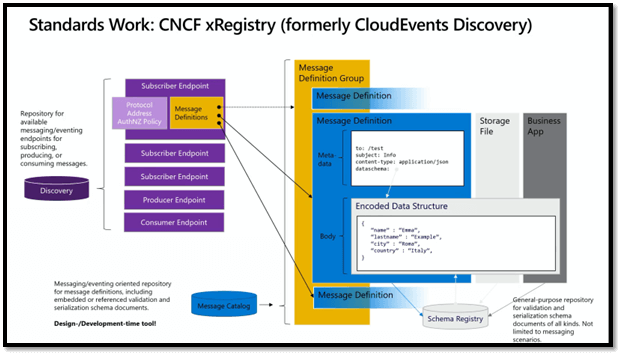

The world of technology is rapidly evolving, and the development of event-driven applications in the cloud is at the forefront of this transformation. In a recent session by Clemens Vasters, Principal Architect at Microsoft, the Azure data team shared their perspective on the present and future of event-driven application development, the challenges faced, and how they are evolving their platform to meet customer requirements.

This blog dives into the key highlights from the session and sheds light on the exciting developments in the world of real-time information pipelines.

Real-Time Applications in Action

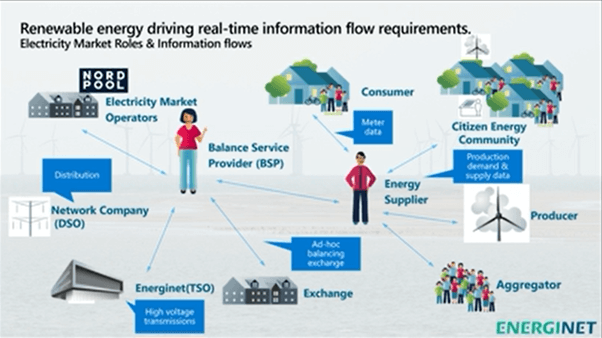

To illustrate the power and significance of event-driven applications, Clemens presented two compelling use cases: renewable energy and football. The renewable energy sector has undergone a massive transformation in recent decades. With the advent of decentralized power production from sources like solar and wind, transmission operators face the challenge of balancing energy grids in real-time. By harnessing the potential of data, accurate forecasting, and precise consumption patterns, the Azure data team empowers energy providers to optimize grid performance and integrate renewable sources seamlessly.

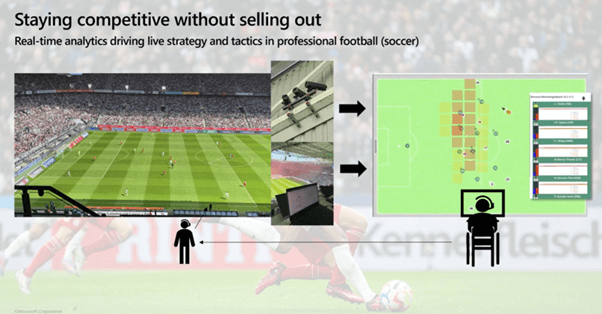

On the football front, the session highlighted the impact of real-time data analysis in the world of sports. With an extensive network of cameras tracking player movements, crucial data points such as distance covered, velocity, acceleration, and player fitness can be analysed in real time. Coaches can gain valuable insights into player performance and make strategic decisions based on the data. This exemplifies how real-time analysis through event-driven applications is transforming the way sports teams operate and make decisions.

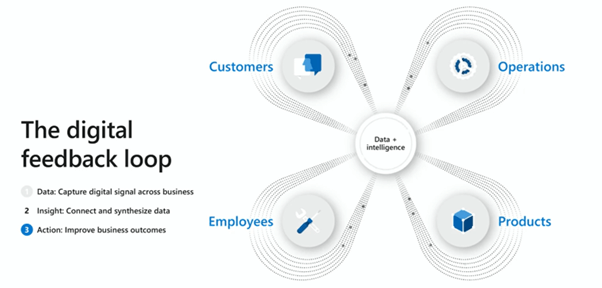

The Digital Feedback Loop

Clemens emphasized the concept of the “digital feedback loop” as a vital component of event-driven application development. This loop involves capturing real-time data, analysing it for insights and trends, and using those insights to enhance products or services. Real-time event flows form the backbone of this feedback loop, enabling the rapid acquisition and processing of data from various sources.

The Key Requirements for Event-Driven Applications

Throughout the session, Clemens outlined the core requirements for developing robust event-driven applications in the cloud. These requirements span connectivity and interoperability, encoding and validation, enrichment, aggregation, transformation and routing, storage and indexing, visualization and exploration, and app and device integration. Let’s explore some of these requirements in more detail.

Connectivity & Interoperability

To facilitate scalable and reliable communication paths, event-driven applications need lightweight protocols for constrained devices, multiplexing async protocols for high-throughput data links, and standards-based communication options for broad ecosystem integrations.

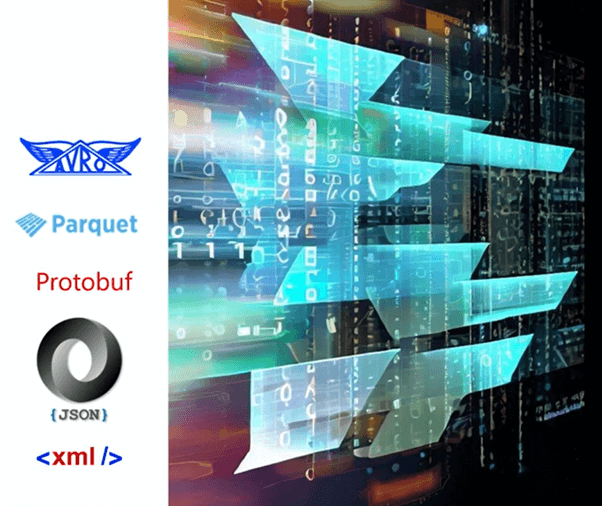

Encoding & Validation

Efficient data encodings, schemas, and flexible data transformations play a crucial role in ensuring fast data transfers. By employing schema registries and standardized encoding options, event-driven applications can seamlessly integrate with diverse systems and data sources.
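As a rough illustration of schema-based validation followed by compact encoding, here is a minimal Python sketch. The schema, field names, and helper functions are hypothetical; they stand in for what a schema registry and a standardized encoding would provide in a real pipeline.

```python
import json

# Hypothetical schema: field name -> required Python type.
TELEMETRY_SCHEMA = {"device_id": str, "temperature_c": float, "timestamp": str}


def validate(event: dict, schema: dict) -> list[str]:
    """Return a list of problems; an empty list means the event conforms."""
    problems = []
    for field, expected_type in schema.items():
        if field not in event:
            problems.append(f"missing field: {field}")
        elif not isinstance(event[field], expected_type):
            problems.append(f"wrong type for {field}")
    return problems


def encode(event: dict) -> bytes:
    """Encode an event as compact UTF-8 JSON with a stable key order."""
    return json.dumps(event, separators=(",", ":"), sort_keys=True).encode("utf-8")


good_event = {"device_id": "sensor-1", "temperature_c": 21.5, "timestamp": "2023-06-05T10:00:00Z"}
bad_event = {"device_id": "sensor-1", "temperature_c": "hot"}
```

Only events for which `validate` returns an empty list would be passed on to `encode`; rejecting malformed events at the edge keeps downstream consumers simple.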

Enrichment, Aggregation, Transformation & Routing

Real-time processing and transformation of streaming data are essential for extracting meaningful insights. Enriching streams with context and reference data, filtering and aggregating data, and performing in-stream computations enable efficient data handling and accelerate the delivery of insights.
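The enrich, filter, and aggregate steps described above can be sketched in a few lines of Python. This is only an illustration under assumed names: the reference table, the event fields, and both functions are hypothetical, and a production pipeline would run this logic in a stream processor rather than over an in-memory list.

```python
from collections import defaultdict

# Hypothetical reference data used to enrich raw readings.
DEVICE_SITES = {"turbine-1": "north", "turbine-2": "north", "panel-7": "south"}


def enrich(events, reference):
    """Attach the site name to each event; filter out events from unknown devices."""
    for event in events:
        site = reference.get(event["device_id"])
        if site is not None:
            yield {**event, "site": site}


def aggregate_by_site(events):
    """Sum the reported power output per site."""
    totals = defaultdict(float)
    for event in events:
        totals[event["site"]] += event["power_kw"]
    return dict(totals)


readings = [
    {"device_id": "turbine-1", "power_kw": 120.0},
    {"device_id": "turbine-2", "power_kw": 80.5},
    {"device_id": "panel-7", "power_kw": 15.0},
    {"device_id": "unknown-9", "power_kw": 999.0},
]
totals = aggregate_by_site(enrich(readings, DEVICE_SITES))
print(totals)  # {'north': 200.5, 'south': 15.0}
```

Because `enrich` is a generator, events flow through one at a time, which mirrors how in-stream computation avoids buffering the whole data set.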

Storage and Indexing

Efficient short-term and long-term storage of raw and aggregated event data is crucial for analysing historical trends and patterns. Storing events in indexed, relational or non-relational databases, time-series stores, or standardized flat file formats enables easy access and analysis.

Visualization & Exploration

The ability to explore stored event streams, correlate data with other sources, and detect trends or anomalies enhances decision-making. Ad-hoc querying, interactive visualization, and composite dashboards with real-time updates provide users with powerful tools to gain insights from event data.

App & Device Integration

Event-driven applications often involve capturing signals and telemetry data from apps and devices. Facilitating bi-directional communication, enabling low-latency ingestion of telemetry streams, and redistributing data to broad audiences are critical for seamless integration with diverse devices and systems.

The Future: Microsoft Event Grid and the Data Platform

Clemens introduced the newest addition to Microsoft’s event-driven ecosystem, Event Grid. Designed as a lightweight pub-sub broker, Event Grid enables the handling of discrete events at hyperscale. This complements other components like Azure Event Hubs and Azure Synapse Analytics, creating a robust data platform that seamlessly integrates real-time event streams.
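To make the pub-sub idea concrete, the following minimal in-memory sketch shows how a broker fans out discrete events to subscribers. It is not the Event Grid API; the class, its method names, and the event types are assumptions made purely for illustration.

```python
from collections import defaultdict


class InMemoryBroker:
    """Toy pub-sub broker: handlers subscribe to an event type and receive each published payload."""

    def __init__(self):
        self._subscribers = defaultdict(list)

    def subscribe(self, event_type, handler):
        """Register a callable to be invoked for every event of this type."""
        self._subscribers[event_type].append(handler)

    def publish(self, event_type, payload) -> int:
        """Deliver the payload to all subscribers and return how many were notified."""
        handlers = self._subscribers.get(event_type, [])
        for handler in handlers:
            handler(payload)
        return len(handlers)


received = []
broker = InMemoryBroker()
broker.subscribe("order.created", received.append)
delivered = broker.publish("order.created", {"id": 1})
ignored = broker.publish("order.cancelled", {"id": 1})
```

Publishers never reference subscribers directly, which is the decoupling that lets a managed broker scale the two sides independently.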

The session by Clemens Vasters shed light on the exciting developments in event-driven application development in the cloud. Microsoft’s Azure data team is actively addressing the challenges faced in real-time information pipelines and empowering customers to leverage the power of data. By focusing on connectivity, encoding, transformation, storage, visualization, and integration, Microsoft continues to evolve its platform to meet the ever-growing requirements of event-driven applications. The future looks promising as the world embraces the potential of real-time data to drive innovation and transformative experiences across various industries.

#5: What is new in the world of Azure API Management?

Fernando Mejia, Program Manager for Azure API Management, started the session by walking through the recent product updates to Azure API Management. The session was entirely demo-based: each update was demonstrated live, which made it very interactive.

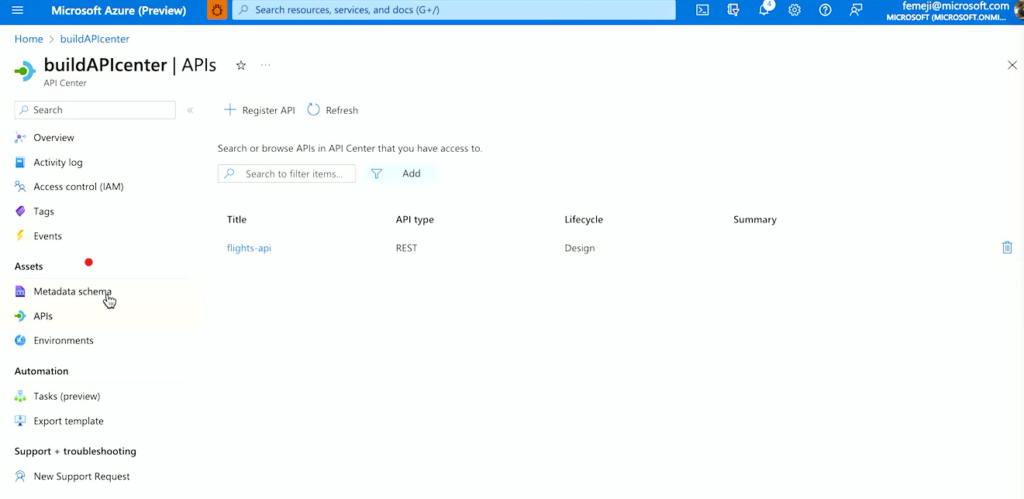

Azure API Center:

The Azure API Center is a recently introduced service within the Azure API Management platform. Its primary function is to provide a centralized location for tracking APIs, facilitating their discovery, reuse, and governance.

Some of the key capabilities of API Center include:

- API inventory management: Centralize all APIs within your organization, regardless of their type, lifecycle stage, or deployment location.

- Real-world API representation: Gather and present detailed data about APIs, encompassing versions, specifications, deployments & deployment environments.

- Metadata properties: Improve governance and discoverability by organizing and enhancing cataloged APIs, environments, and deployments through unified built-in and custom metadata.

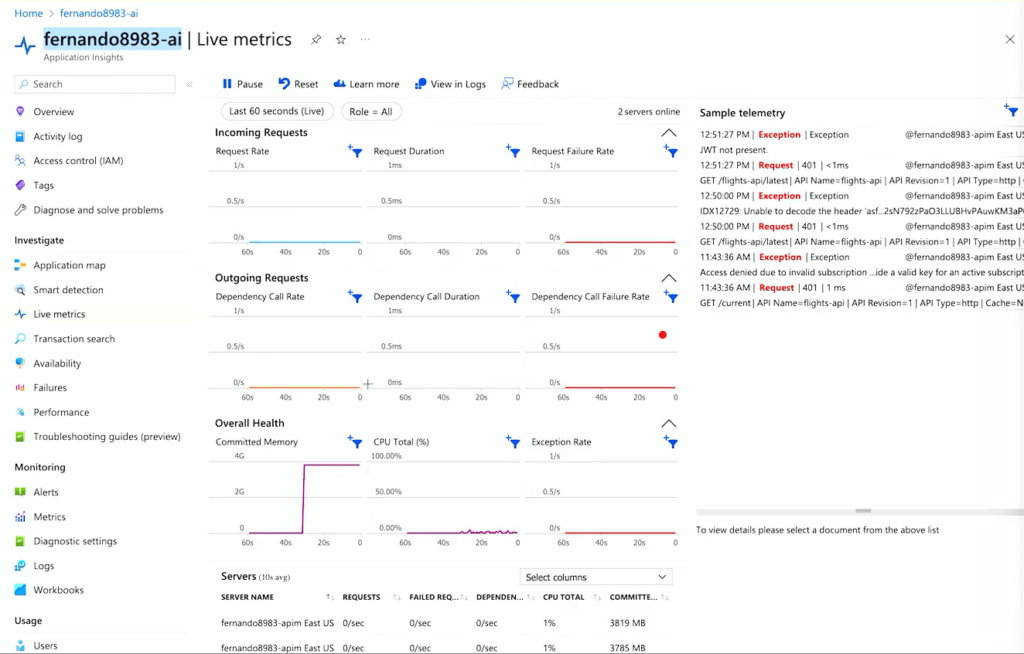

Integration with Application Insights:

Azure API Management can be seamlessly integrated with Application Insights to gain valuable insights into the performance and usage of your APIs. With this, you can monitor and analyze various aspects of your APIs, including request/response metrics, error rates, latency, and user behavior.

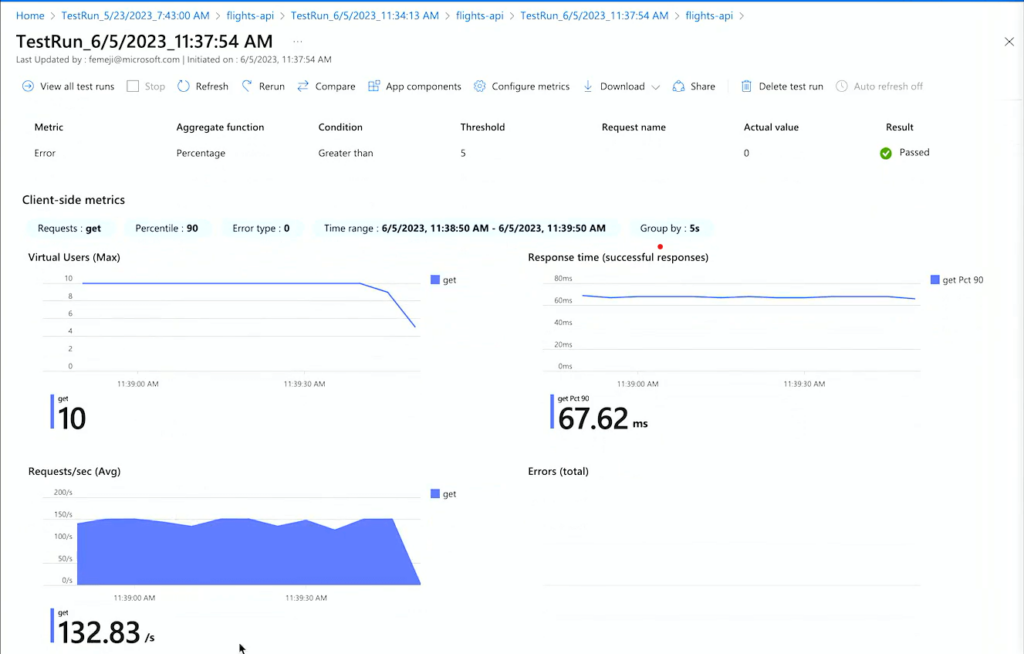

Azure Load Testing:

Azure API Management offers load testing capabilities to assess the performance and scalability of your APIs. With Azure Load Testing integration, you can simulate heavy traffic and analyze how your API Management instance handles the load. You can also see the amount of incoming traffic through Application Insights.

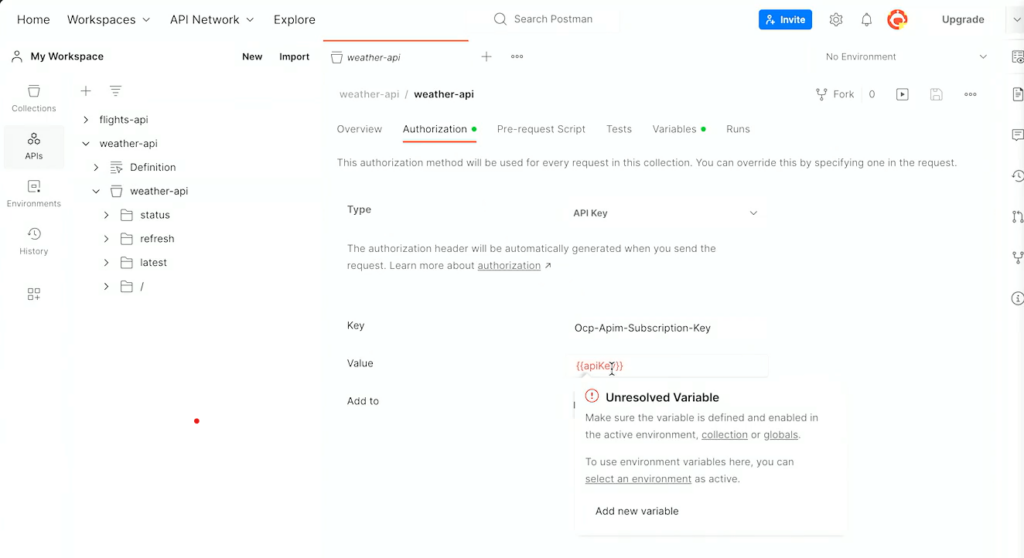

Postman Integration:

Azure API Management now offers integration with Postman, a popular API development and testing tool. By importing Azure API Management APIs into Postman, you can streamline the API development and testing process, making it more convenient to work with your APIs and to check that their functionality and performance meet your requirements. As an add-on, this Postman integration is also available in Visual Studio.

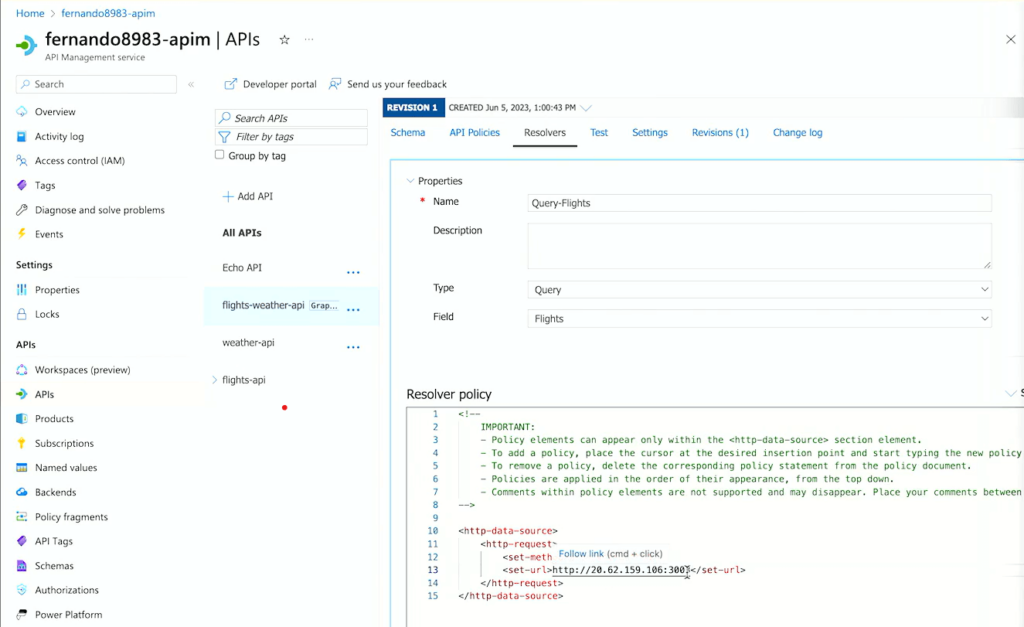

Synthetic GraphQL:

Azure API Management has recently introduced support for synthetic GraphQL APIs, offering developers the ability to effortlessly create and manage GraphQL APIs.

Microsoft Defender for APIs:

Microsoft Defender for APIs helps protect your APIs from common threats such as malicious attacks, abusive behavior, and unauthorized access. With Defender, you can enhance the security posture of your APIs and ensure that only authorized and legitimate requests are processed, reducing the risk of data breaches.

Some of the key features of Defender for APIs include threat detection, bot detection, and rate limiting.

#6: API management for microservices in a hybrid and multi-cloud world

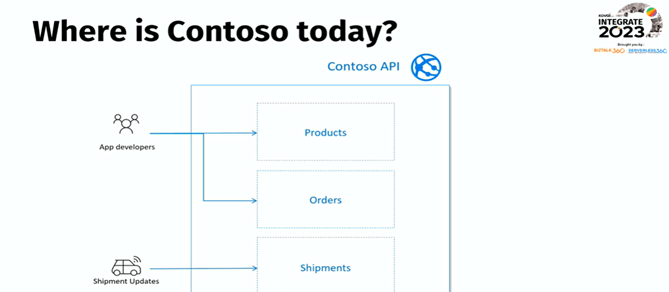

Tom Kerkhove started the session by referencing the journey of Contoso, a fictional company, and how it gradually migrated from a monolith to microservices with Azure API Management as an integral part of its business.

Case Study of Contoso

Contoso built its own monolith API with three main modules for its business but faced difficulties in scaling it.

They also faced a few other challenges along the way:

- Poor Security without granular control

- Poor App Developer Experience

- No governance and API Analytics

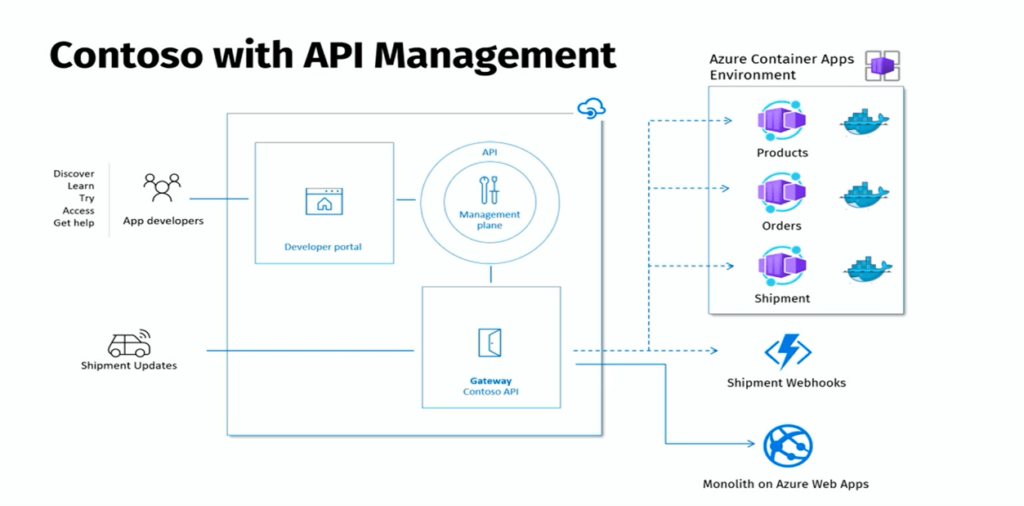

That’s exactly when Contoso made a shift towards Azure API Management. The new approach provided better insights to overcome the existing problems.

Benefits of integrating Azure API Management with the Contoso monolith API

- Out-of-the-box API Analytics

- Built-in Developer Portal

- Consumer Management

- Centralized API Hub

How Microservice Architecture helped Contoso Scale up its business

As the company started attracting a lot of new customers, the existing monolith API became hard to manage.

They had no granular access control, only one technology stack could be used, and release cycles were slow. At this point, they made another big shift, this time towards microservices. They split their monolith into small services and dedicated smaller teams to them, so the services could be managed and scaled more easily.

In the migration from a monolithic to a microservice architecture, they first started with logical separation. The initial setup was done with one product and one logical API; in the new approach, they configured multiple logical APIs.

Other approaches followed to improve further are:

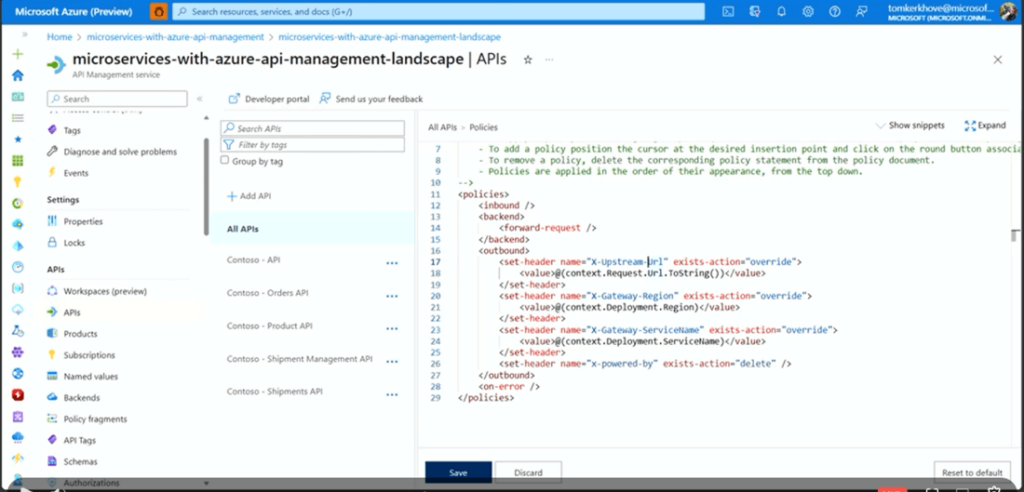

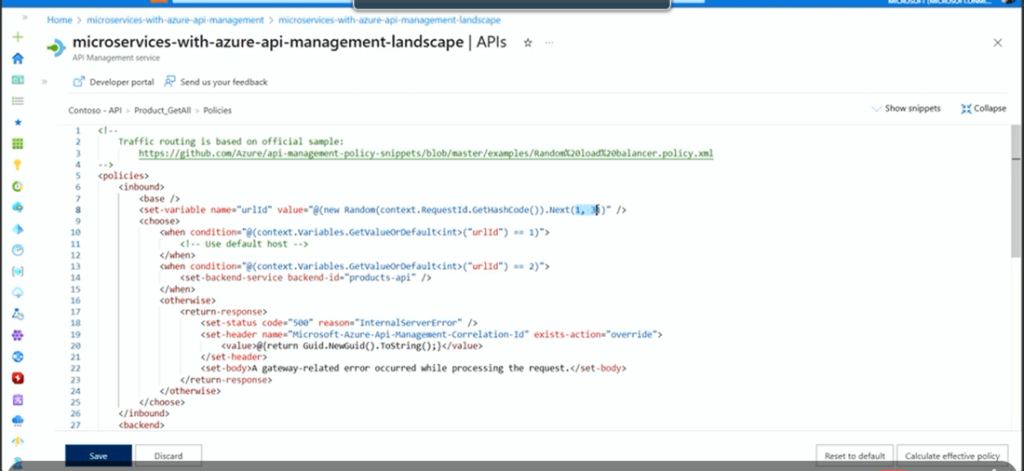

API Management to route traffic between the monolith and microservices API gateway

The API gateway can be configured in a way that routes traffic between the monolith and the Azure Container Apps environment.

In the API Management instance, a policy can be added with a condition that maps to two possible values. Depending on whether the condition is satisfied, the gateway routes the traffic either to the monolith or to the microservices.
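The decision logic behind such conditional routing can be sketched as follows. In API Management itself this branching would be written as a policy (for example with the `choose` and `set-backend-service` policies); the Python below only illustrates the condition, and the URLs, path prefix, and function name are hypothetical.

```python
# Hypothetical backend addresses; real values would come from the API Management configuration.
MONOLITH_URL = "https://monolith.example.internal"
MICROSERVICE_URL = "https://orders.example.internal"

# Assumption: only paths under these prefixes have been migrated to microservices.
MIGRATED_PREFIXES = ("/orders",)


def choose_backend(path: str) -> str:
    """Route migrated paths to the microservice backend and everything else to the monolith."""
    if path.startswith(MIGRATED_PREFIXES):
        return MICROSERVICE_URL
    return MONOLITH_URL


print(choose_backend("/orders/42"))    # https://orders.example.internal
print(choose_backend("/customers/7"))  # https://monolith.example.internal
```

Keeping the routing rule in the gateway means consumers keep calling the same API while functionality moves from the monolith to microservices piece by piece.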

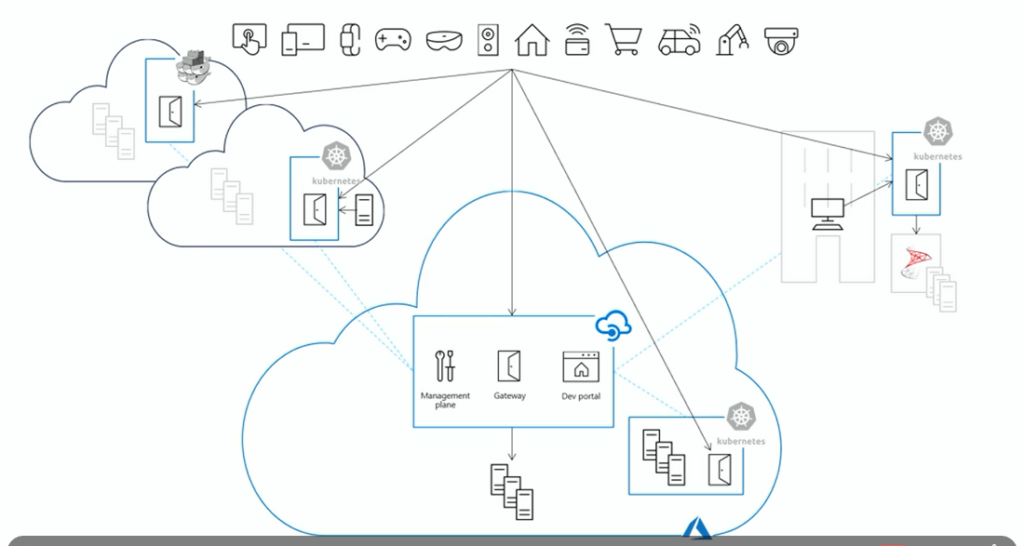

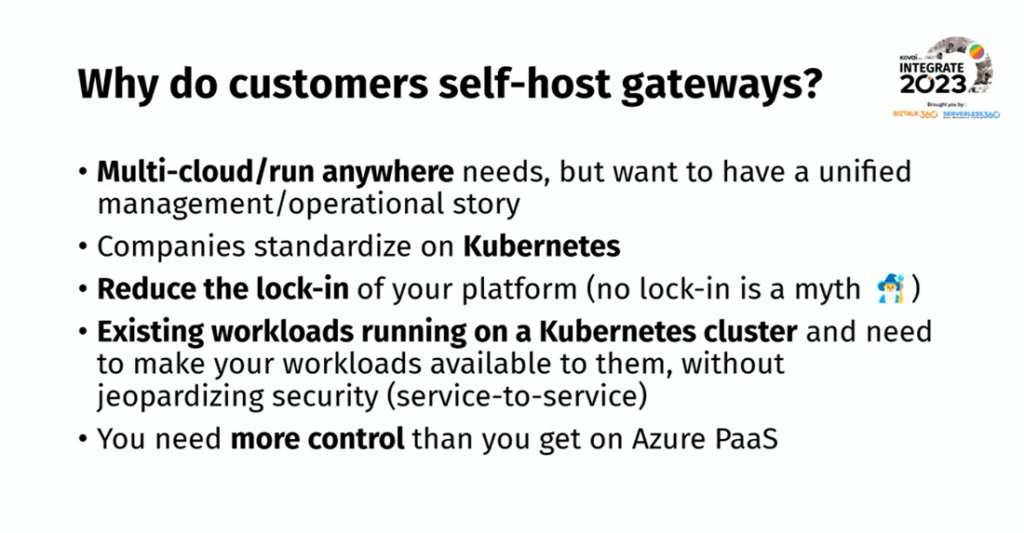

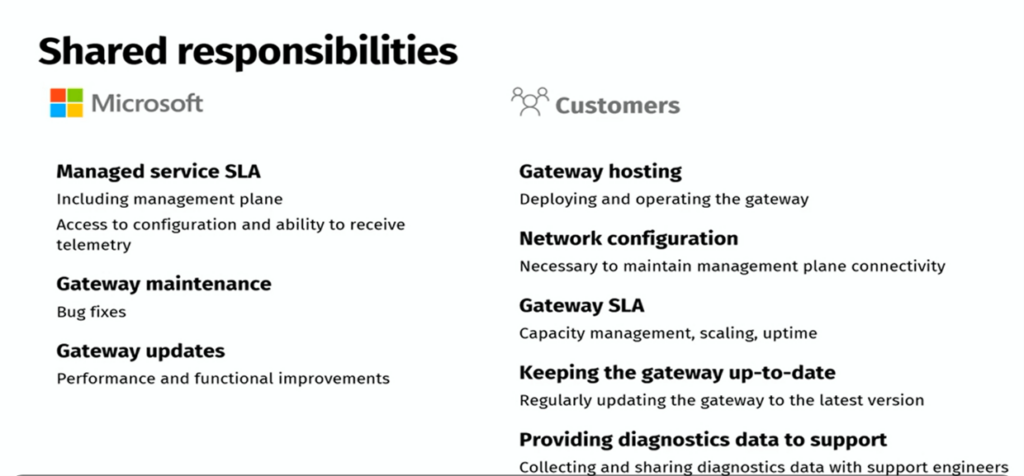

Self-hosted gateway for API Management

The self-hosted gateway can be a fantastic tool for your multi-cloud or hybrid configuration.

It allows the cloud application to have its own API management, so communication can happen directly with the other cloud or hybrid applications.

The gateway can be deployed using Docker, Kubernetes, or Helm. This was demonstrated briefly.

Shared Responsibilities

#7: API Security Deep Dive

Nino Crudele, freelance Azure consultant and one of the dedicated speakers of INTEGRATE 2023, presented the session on “API Security Deep Dive”, opening with an exciting introduction about himself.

Nino covered the session with the following agenda:

- Threat and Attack

- Protection and Best Practices

- Conclusion and Takeaways

- Q&A

API – Quick Introduction

He mentioned that 83% of web traffic is API-related and that APIs are a fundamental part of the technology stack in the integration space. Nino presented API security vulnerabilities such as excessive data exposure, broken function level authorization, improper assets management, and more.

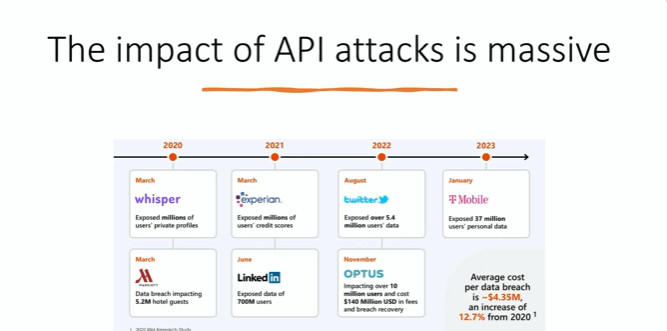

Nino shared some facts about APIs around the world, which made a strong impression on the audience. He highlighted the points below:

- 83% of All Internet Traffic belongs to API-Based Services

- There are over 2 million API Repositories on GitHub

- 56% of developers find APIs help build better digital products

- Cloud Automation will become a $623.3 Billion Industry by 2023

- Healthcare APIs are growing by 6.3% CAGR

- Open Banking to have 130 million users by 2024

He shared the impact of API attacks that happened in top industries from 2020 to 2023.

Top 10 API Security vulnerabilities

During the session, Nino presented the Top 10 API Security vulnerabilities.

- API1 – Broken Object Level Authorization

- API2 – Broken User Authentication

- API3 – Excessive Data Exposure

- API4 – Lack of Resource & Rate Limiting

- API5 – Broken Function Level Authorization

- API6 – Mass Assignment

- API7 – Security Misconfiguration

- API8 – Injection

- API9 – Improper Assets Management

- API10 – Insufficient Logging and Monitoring
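To illustrate the first item on the list, Broken Object Level Authorization, here is a minimal sketch of the ownership check whose absence causes the vulnerability. The in-memory data store, user names, and function are hypothetical and are not taken from the session.

```python
class Forbidden(Exception):
    """Raised when the caller may not access the requested object."""


# Hypothetical in-memory data store keyed by order ID.
ORDERS = {
    101: {"owner": "alice", "total": 40.0},
    102: {"owner": "bob", "total": 15.5},
}


def get_order(current_user: str, order_id: int) -> dict:
    """Return the order only if it exists and belongs to the authenticated caller."""
    order = ORDERS.get(order_id)
    # Same error for "not found" and "not yours", so valid IDs are not leaked.
    if order is None or order["owner"] != current_user:
        raise Forbidden(f"user {current_user!r} cannot access order {order_id}")
    return order
```

The point of the check is that being authenticated is not enough: every request that names an object ID must also verify that the caller is allowed to see that specific object.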

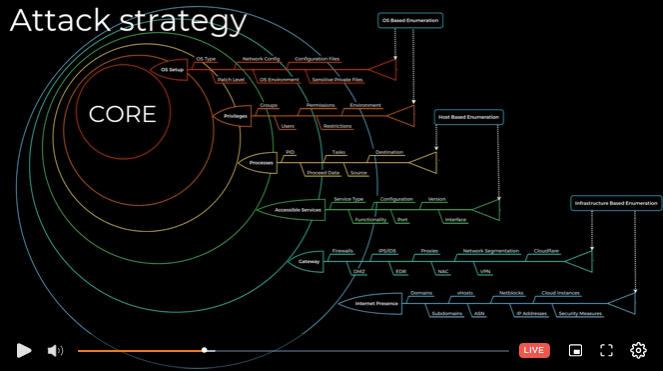

He explained the steps of the strategy using a screenshot and demonstrated API security with live examples.

An in-depth look into API

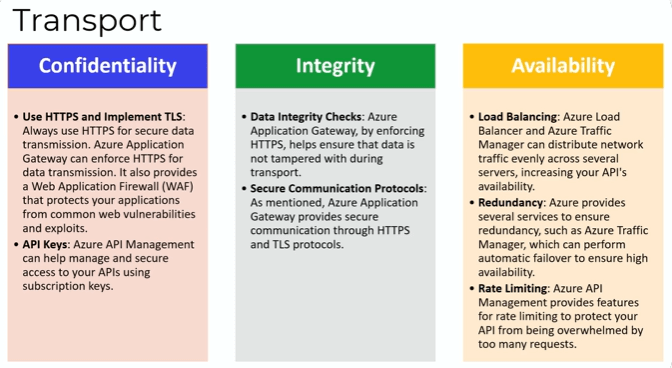

He shared the three core principles of API security:

- Confidentiality – Preventing the disclosure of information to unauthorized individuals or systems

- Integrity – Preventing unauthorized users from making modifications, and protecting data from unauthorized alteration during transmission

- Availability – Ensuring that information is available when needed.
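As one small example of the integrity principle, the sketch below signs a request body with an HMAC so that any alteration in transit can be detected. It uses Python's standard `hmac` and `hashlib` modules; the shared secret and the function names are assumptions for illustration, not something shown in the session.

```python
import hashlib
import hmac

# Hypothetical shared key; in practice it would be loaded from a secret store.
SHARED_SECRET = b"demo-secret"


def sign(body: bytes, key: bytes = SHARED_SECRET) -> str:
    """Compute a hex-encoded HMAC-SHA256 signature of the message body."""
    return hmac.new(key, body, hashlib.sha256).hexdigest()


def is_untampered(body: bytes, signature: str, key: bytes = SHARED_SECRET) -> bool:
    """Recompute the signature and compare it in constant time."""
    return hmac.compare_digest(sign(body, key), signature)


signature = sign(b'{"amount": 10}')
```

A receiver that recomputes the signature over the bytes it actually received will reject a body that was modified after signing.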

Nino then demonstrated how these three principles, the C.I.A. triad, map to API design. He also shared a handbook he wrote, which covers a practical, hands-on decision strategy using the CIA triad for security.

Nino concluded the session with exciting questions and answers.

#8: Crush SAP application integration lead time with Azure

Martin Pankraz, Senior Product Manager, and Pascal Van der Heiden, Cloud Solution Architect, both from Microsoft, presented the session “Crush SAP application integration lead time with Azure” on Day 1 of INTEGRATE 2023. The session demonstrated enterprise-ready app deployment for SAP OData APIs with Azure API Management, equipped with everything a developer needs to hit the ground running. It also featured exciting live demos of end-to-end scenarios around SAP, enabled by GitHub and Azure services.

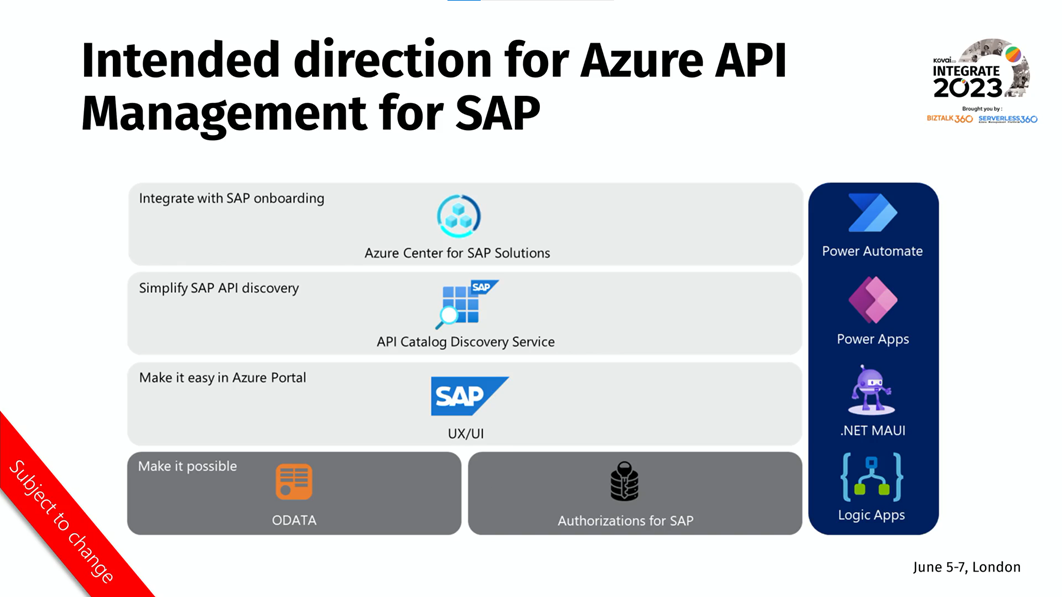

Martin started the session with a quick highlight of the upcoming release of native OData support for Azure APIM. He stated that more than $500k is lost per year due to integration issues, and that 80% of organizations consider digital transformation a block. Following this, he outlined the intended product direction for Azure API Management for SAP, from which the speakers chose to discuss and demonstrate OData and SAP authorisation in depth.

The first live demo was a generative AI-led OData integration demo presented by Pascal. The highlights of the demo were:

- Generating a UI for the SAP Business Partner OData API with GitHub Copilot or ChatGPT.

- Securely linking API Management with Azure Static Web App to link the SAP OData API with / API.

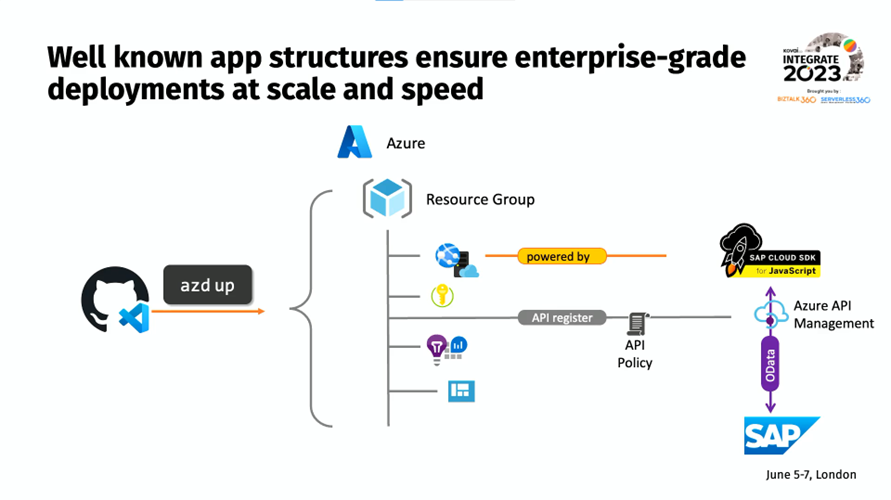

Following that, Martin gave a quick demonstration of how well-known app structures ensure enterprise-grade deployments at scale and speed.

This demo showed how all the Microsoft and GitHub goodies, such as Copilot and the Azure Developer CLI, can be applied to an SAP app integration project, and how the SAP Cloud SDK is injected with APIM.

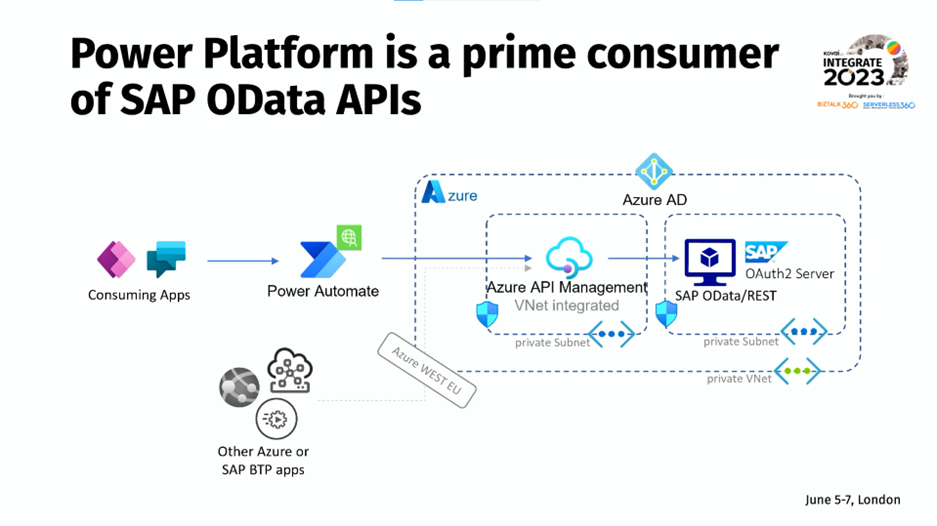

Pascal presented the final walkthrough on why Power Platform is a prime consumer of SAP OData APIs.

The presentation focused on:

- Mapping Power Platform users to SAP backend users with SAP Principal Propagation

- Lifting the burden of SAP token caching and CSRF token handling from the low coder

- How SAP can be protected through API throttling
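Token caching, one of the burdens mentioned in the list above, boils down to reusing a token until it expires instead of fetching a new one per request. The sketch below shows that idea in isolation; the class, the injected fetch function, and the lifetime value are hypothetical, and it does not reproduce the policies shown in the session.

```python
import time
from typing import Callable


class TokenCache:
    """Reuse a fetched token until its lifetime has elapsed, then fetch a new one."""

    def __init__(self, fetch_token: Callable[[], tuple[str, float]], clock=time.monotonic):
        # fetch_token is a hypothetical callable returning (token, lifetime_in_seconds).
        self._fetch_token = fetch_token
        self._clock = clock
        self._token = None
        self._expires_at = 0.0

    def get(self) -> str:
        """Return the cached token, refreshing it first if it is missing or expired."""
        if self._token is None or self._clock() >= self._expires_at:
            token, lifetime_seconds = self._fetch_token()
            self._token = token
            self._expires_at = self._clock() + lifetime_seconds
        return self._token
```

Handling this once in a shared layer means each low-code app only sees a plain API call, while the backend is spared a token request per call.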

Martin and Pascal concluded the session by providing their GitHub public repository and resources to help the audience understand the concepts better.

#9: Experiences releasing APIs to API Management

Samuel Kastberg, Principal Consultant at Solidify with three decades of experience, shared his knowledge about releasing APIs to API Management in an exciting, lively session.

Samuel was asked to help a customer ease its API release process. The customer set the following objectives for the process:

- Automated release of APIs

- Simple deployment and management for teams

- Improved security

- Lowered Cost

- Infrastructure as Code (IaC) with Terraform

- Standard format for validation and documentation

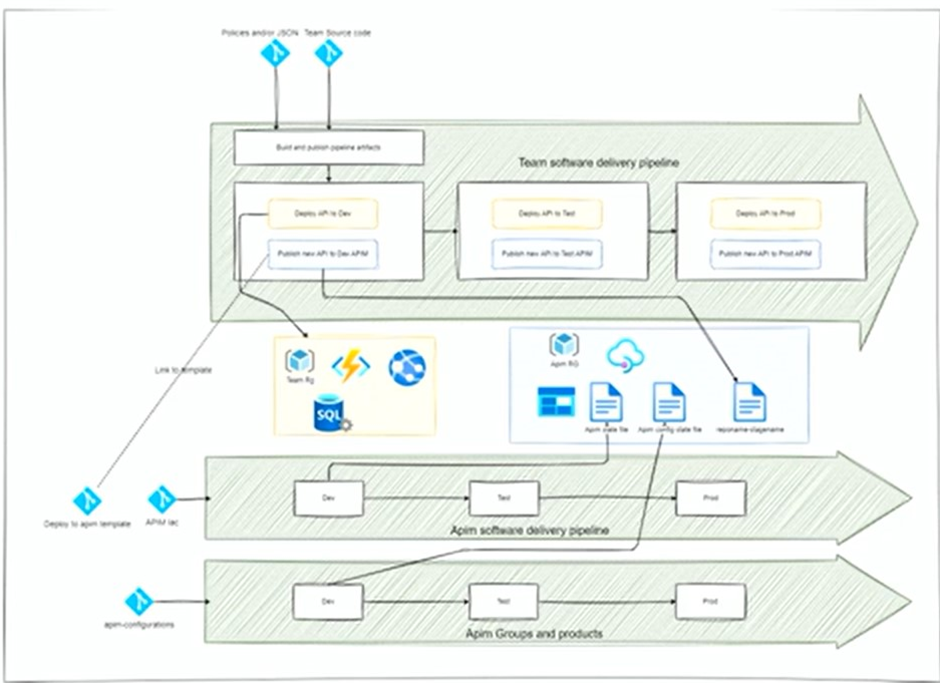

To achieve these objectives, Sam’s team came up with the following solutions:

- Assist the teams in understanding the pipelines and APIs to work easily

- Export the scripts needed to release the APIs

- Create Pipeline templates based on the teams and their Repos structure

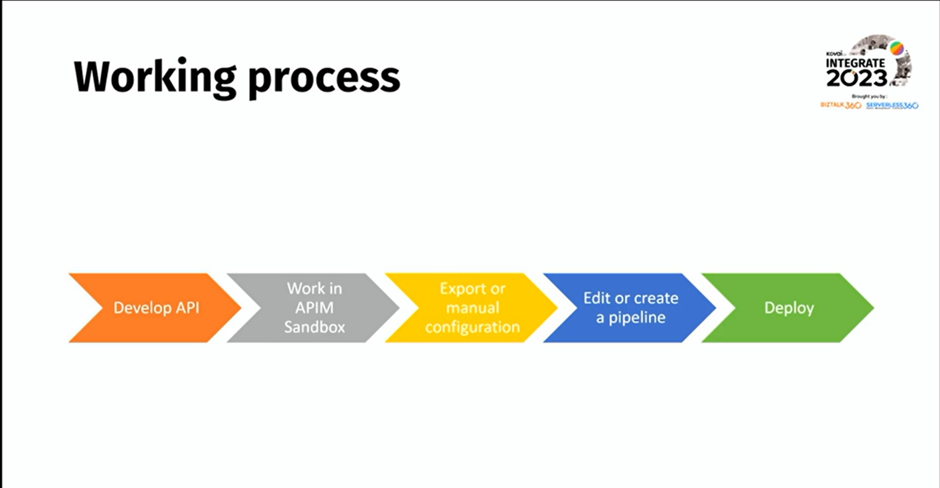

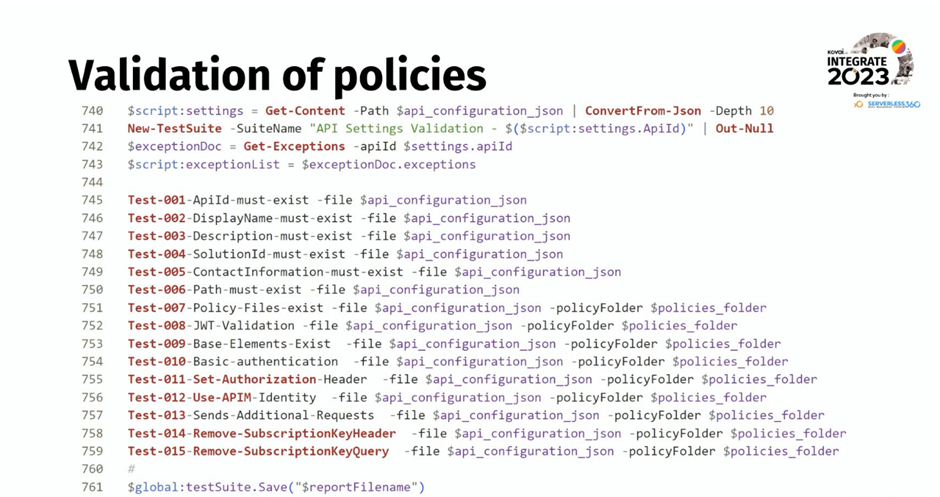

- Validation using policies as a test

This represents the process that will be followed for releasing the APIs. The teams can develop their own API or import them using the APIM Sandbox. They can also create scripts to write their own JSON configurations or export and use the scripts provided by Sam’s team. In the same way, the team can either create a pipeline or edit the existing one provided. Finally, the pipeline can be deployed.

A few basic details, mentioned below, would be required to release the APIs.

- Specification about the type of API that needs to be released

- Policies for validation of operation

- Settings file describing all the named values, backends, contact information, etc.

- Parameter files with definitions for environment-specific settings

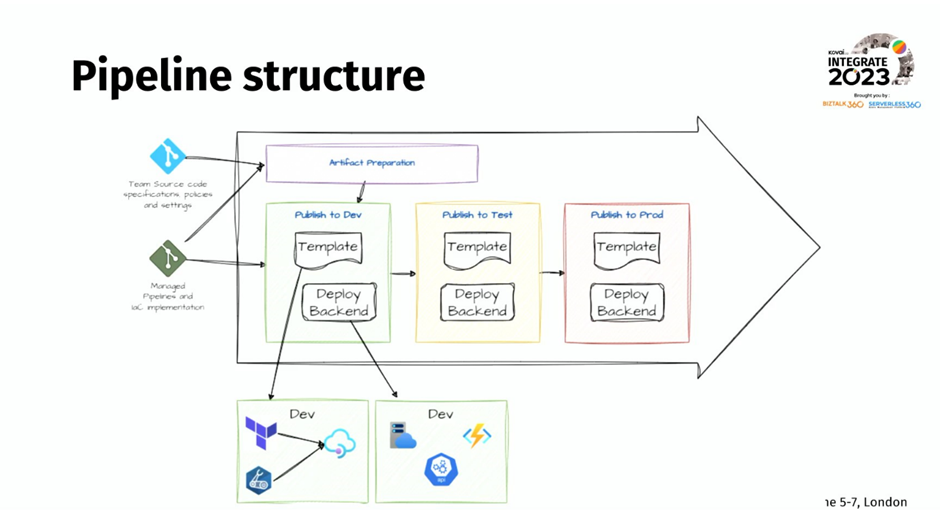

- Pipeline to release or Managed template

The team will have repos based on which they will create the artifact files required to create the templates. The managed pipelines and the IaC implementation will be used along with the artifacts to release the APIs using Terraform or any other methods they want.

The APIs can then be released into different environments, such as Dev and Production. The validations can be done in these environments using the policies that the teams have created. The validation only works if the policies are compliant. These can be used to test the different environments after releasing the APIs to each of them. Once the pipeline is run, the validation will take place, through which we can know if the API has been released without any faults.
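The split between a shared settings file and environment-specific parameter files can be illustrated with a small sketch. The keys, URLs, and function below are hypothetical; the session's actual file formats and Terraform setup are not reproduced here.

```python
# Hypothetical shared settings, as they might appear in a settings file.
BASE_SETTINGS = {
    "api_name": "orders",
    "contact": "team@example.com",
    "backend_url": None,  # must be supplied per environment
}

# Hypothetical environment-specific parameters, one entry per parameter file.
PARAMETERS = {
    "dev": {"backend_url": "https://orders-dev.example.com"},
    "prod": {"backend_url": "https://orders.example.com"},
}


def resolve_settings(base: dict, parameters: dict, environment: str) -> dict:
    """Overlay the environment's parameters on the shared settings and check nothing is unset."""
    if environment not in parameters:
        raise KeyError(f"no parameters defined for environment {environment!r}")
    resolved = {**base, **parameters[environment]}
    missing = [key for key, value in resolved.items() if value is None]
    if missing:
        raise ValueError(f"unset settings for {environment}: {missing}")
    return resolved


dev_settings = resolve_settings(BASE_SETTINGS, PARAMETERS, "dev")
```

Keeping environment differences in small parameter files lets the same pipeline release one API definition to Dev and Production without editing the shared settings.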

Documentation of all the processes mentioned above is significant. Only with proper documentation can the teams adapt and work by themselves, minimize their dependency on others, and identify the frontend and backend for maintenance.

Sam concluded the session by explaining how they overcame a few of the challenges put forth by the customer, such as different policies and migration script time, and successfully released the APIs for the customer.

#10: Logic Apps Standard – the Developer Experience

It’s always good to see Logic Apps Standard from a developer’s perspective. Wagner Silveira, Senior Program Manager at Microsoft, provided exactly that in this Day 1 session, which covered:

- Logic App Standard Recap

- What’s new for developers?

- What’s Next?

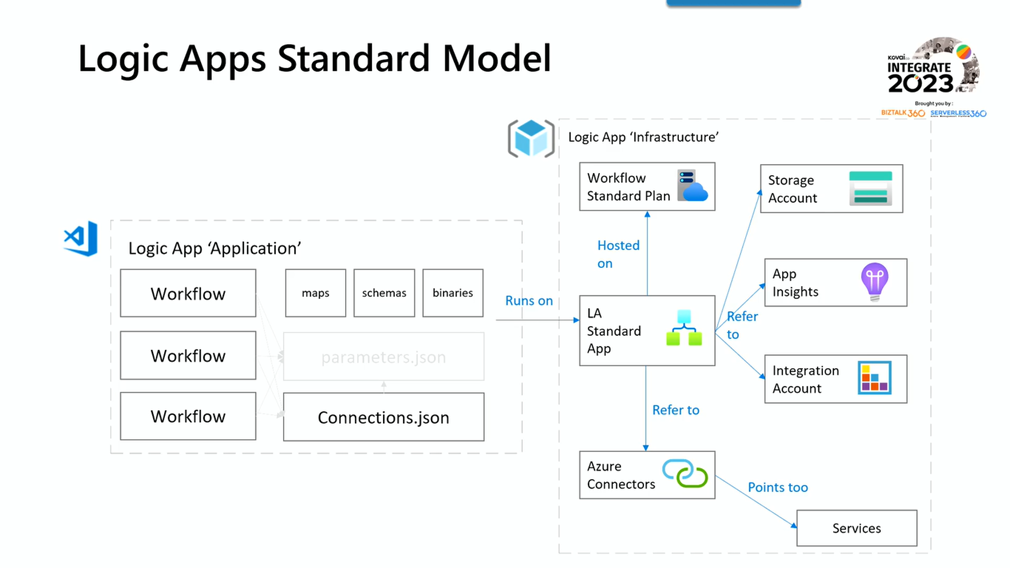

Logic App Standard Recap:

The above picture shows the Logic App Standard model that exists in Azure. A Logic App can contain many workflows, along with the connections they use. Maps, schemas, and binaries are also components inside the Logic App.

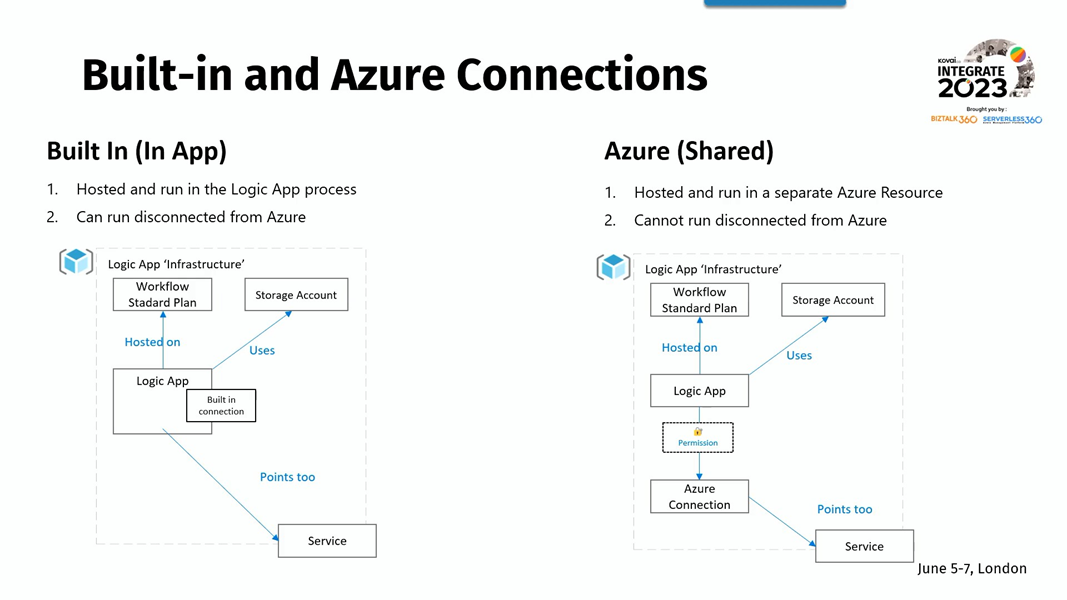

Built-in and Azure Connections:

The difference between built-in and Azure connections was discussed in the session. Built-in connections are used where isolation through private endpoints is needed, and they also provide better performance because they are hosted and run in the same Logic App process. Azure connections, in contrast, are hosted and run in a separate Azure resource and need connectivity to Azure to run properly.

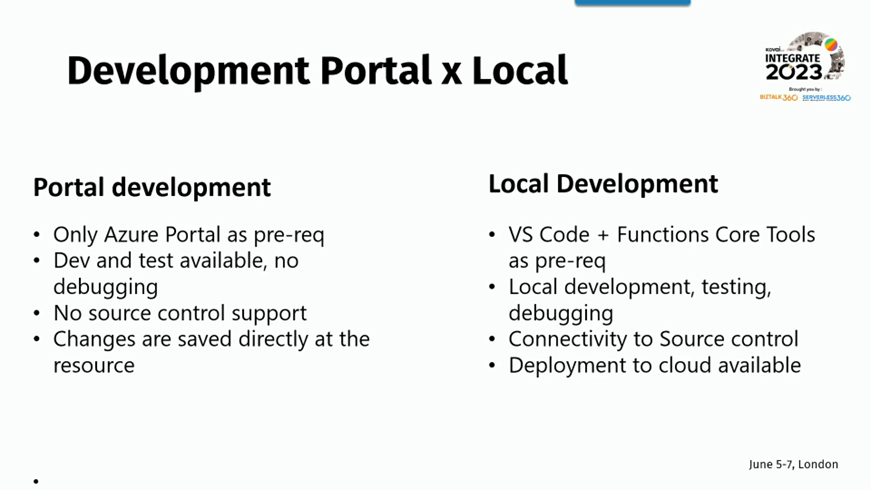

The difference between developing Logic App workflows in the portal and developing them locally was explained as well.

What’s new for developers?

Logic Apps has several new and improved tools for developers to explore. They are as follows:

- Designer 2.0

- Data Mapper

- Custom Code Support

- Export Tool

Designer 2.0:

The new designer provides better performance and includes zoom and minimap functionality on the canvas.

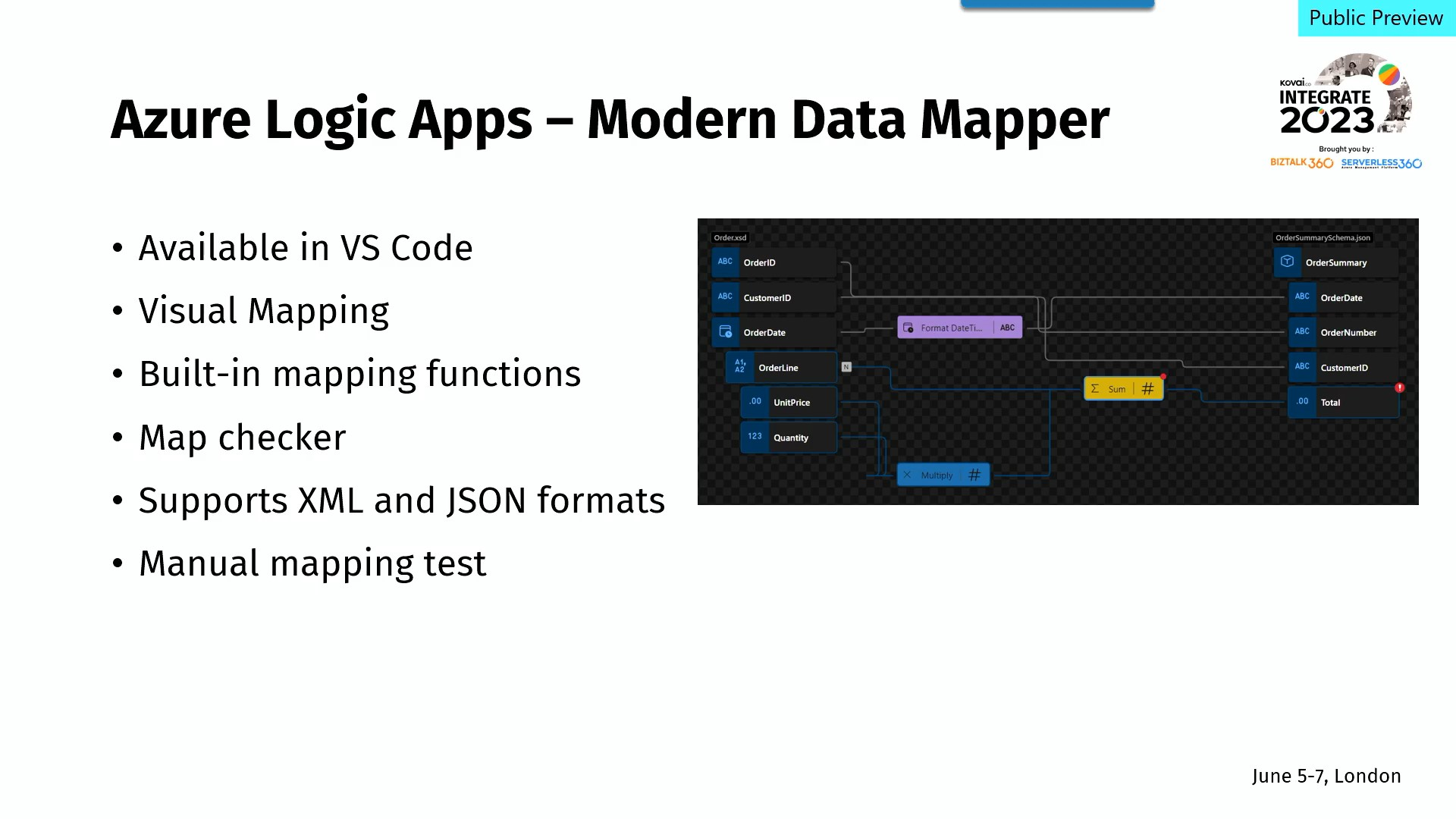

Data Mapper:

The Data Mapper offers a visual way to map components, much like the visual mapping available in BizTalk Server. Wagner gave a demo of how the Data Mapper in Logic Apps can be used to map an order-processing message, for example producing the order date in the desired timestamp format and calculating the total value of the order by multiplying the unit price by the quantity.
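The two transformations from the demo, reformatting the order date and computing the total, amount to the following logic. This Python sketch is only an analogy for what the visual map expresses; the field names, the UTC target format, and the function are assumptions.

```python
from datetime import datetime, timezone
from decimal import Decimal


def map_order(source: dict) -> dict:
    """Map a source order to a target shape with a UTC timestamp and a computed total."""
    # Parse the ISO 8601 timestamp (with offset) and normalise it to UTC.
    order_date = datetime.fromisoformat(source["orderDate"]).astimezone(timezone.utc)
    # Decimal avoids binary floating-point rounding on currency values.
    total = Decimal(source["unitPrice"]) * source["quantity"]
    return {
        "OrderDate": order_date.strftime("%Y-%m-%dT%H:%M:%SZ"),
        "Total": str(total),
    }


mapped = map_order({"orderDate": "2023-06-05T10:30:00+01:00", "unitPrice": "19.99", "quantity": 3})
print(mapped)  # {'OrderDate': '2023-06-05T09:30:00Z', 'Total': '59.97'}
```

In the Data Mapper the same result is reached by wiring source fields through date and multiplication functions on the canvas instead of writing code.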

Custom Code Support:

Logic Apps now supports custom .NET code, so developers can include their own code when building workflows. This code can be called from a built-in action in Azure Logic Apps Standard.

- It also helps in BizTalk migration scenarios.

- Local debugging for both workflows and code can be achieved with this functionality.

- It also provides full support for dynamic schema inputs/outputs including complex types.

Export Tool:

The Export tool makes it possible to export a group of Logic Apps as a project. It pre-validates the selected Logic Apps, supports ISE or Consumption export, and is available from the Logic Apps Standard extension in VS Code.

What’s coming in the near future:

Wagner concluded the session with the information that the focus now is on the developer experience in the following three areas:

- Onboarding developers, with a simplified VS Code installation and in-tool guidance for dependency checks.

- Local development, such as better management of metadata files and parameterization of connections from the start.

- DevOps support, which includes infrastructure template generation and CI/CD pipeline generation.