Table of contents

- Why is integration so hard?

- Service Bus Deep Dive

- Using Azure App Configuration with Azure Integration Services

- Modernising the Supply Chain with Logic Apps and EDI

- Real-world examples of Business Activity Monitoring with Turbo360

- Event Hubs – Kafka and event streaming at high-speed

- BizTalk Server to Azure Integration Services migration

- Azure Logic Apps Deep Dive

- Serverless Workflows using Azure Durable Functions & Logic Apps: When Powers Of Code and LowCode Are Combined

- Real-time event stream processing and analytics

After successfully concluding Day 1, we had an exciting Day 2 of INTEGRATE 2023 with some awesome sessions, powerful demos, and of course many updates from the Microsoft Product Group.

#1: Why is integration so hard?

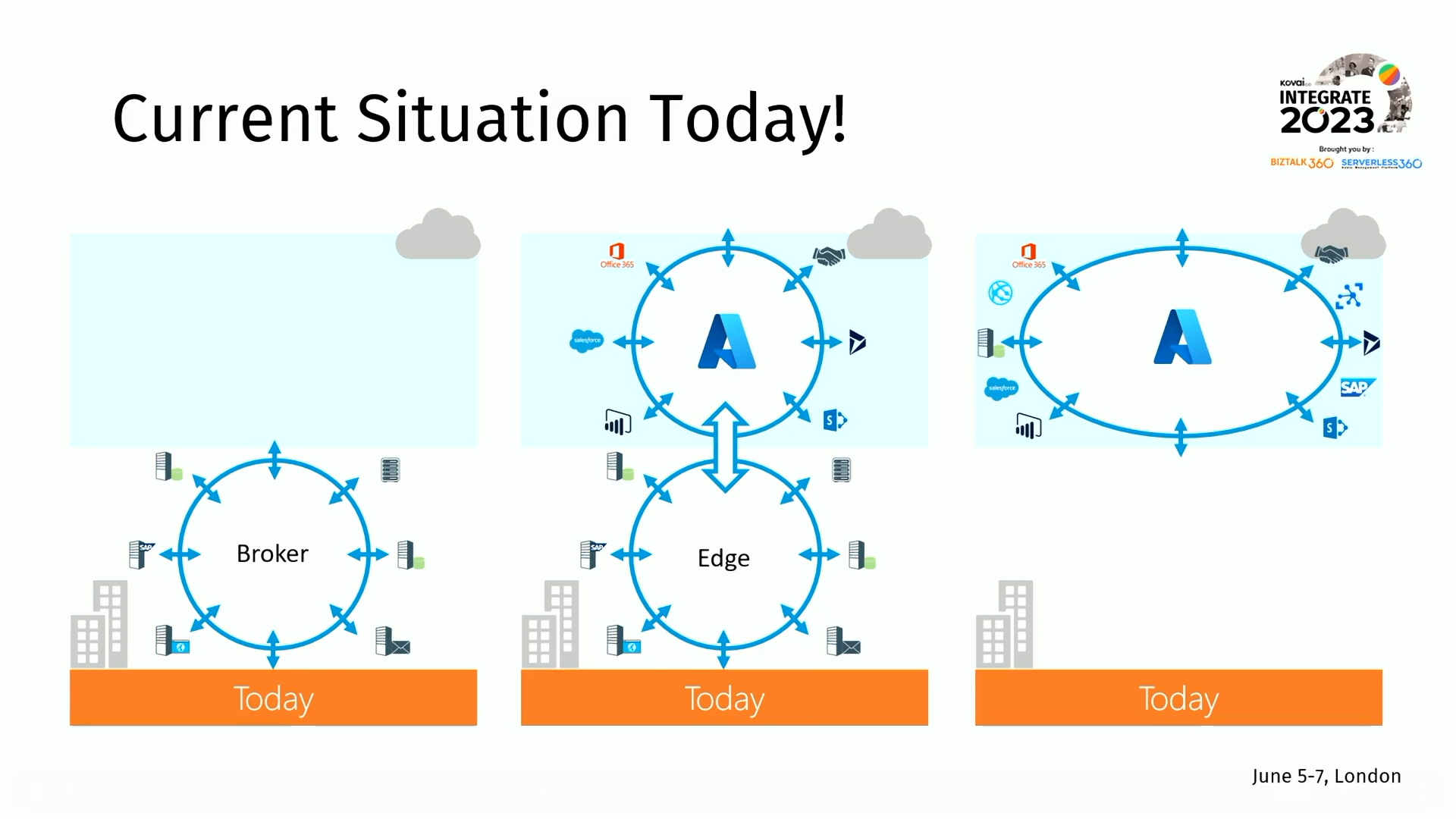

In this session, Steef Jan Wiggers shed light on the challenges of integration. He emphasized that integration becomes complex when we merge diverse systems and applications that were developed independently, often with distinct technologies and data formats. This leads to problems such as data inconsistencies, system incompatibilities, and long-term maintenance difficulties.

He also illustrated how the variety of file formats and protocols in use makes integration processes more intricate.

Navigating the Past, Present, and Future of Application and Integration Landscape

In terms of applications, he described how SAP and mainframes dominated in the past and how these workloads will slowly migrate to the cloud in the future.

Furthermore, he discussed his experience with hybrid integration scenarios using Salesforce and BizTalk, and pointed to a potential future shift towards a fully cloud-based environment.

Challenges faced while building in the cloud:

- Consumption hurdles

- VNET integration

- Public/Firewall (mix)

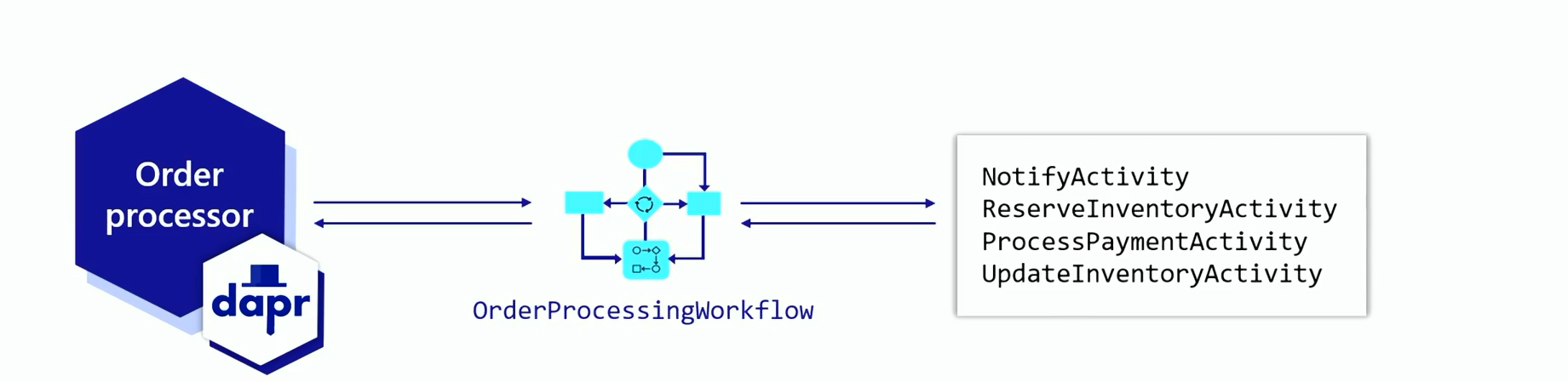

Dapr

Steef highlighted that Dapr now extends its support to workflows, which sit alongside the Power Platform, Durable Functions, and Logic Apps as workflow options in the Microsoft cloud.

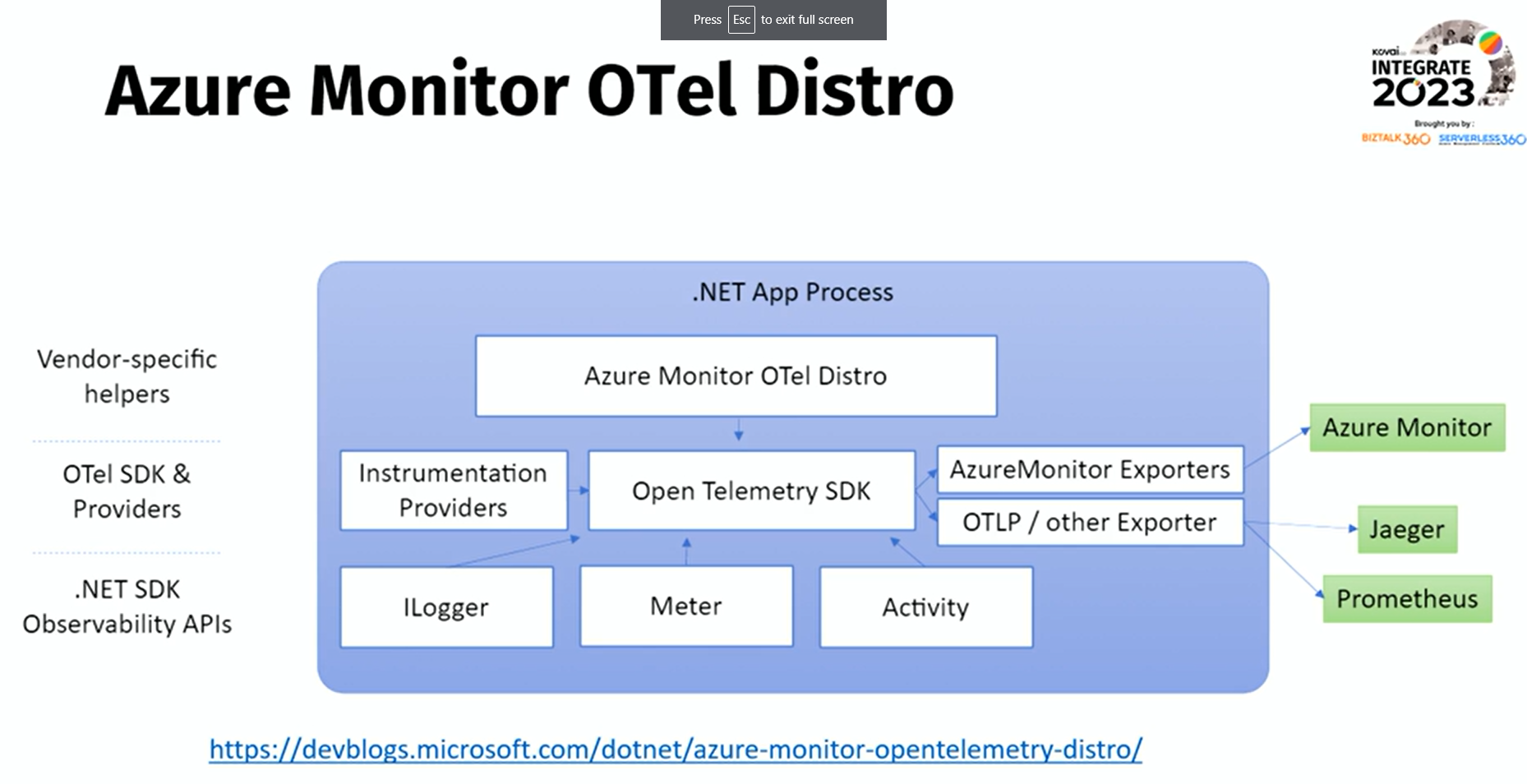

OpenTelemetry

Steef further emphasized OTel – an open-source observability framework for collecting and analyzing telemetry data (metrics, logs, and traces) from software and infrastructure. He illustrated its capabilities with an example using the newly launched Azure Monitor OTel Distro.

Cloud Adoption Framework

As he guides us through the Cloud Adoption Framework, he stresses the crucial role of governance and management within it. He also highlights the five interconnected pillars of the Microsoft Azure Well-Architected Framework – cost optimization, operational excellence, performance efficiency, reliability, and security.

Why Architecture Decision Records?

Architecture Decision Records (ADRs) serve as a valuable resource for understanding the rationale behind technology choices, which he supported with an illustrative example. ADRs capture the why behind every decision, applying a scientific-method approach to architecture decisions.

Steef concluded the session by emphasizing the importance of a clear way of working, including ownership, clear roles, and culture.

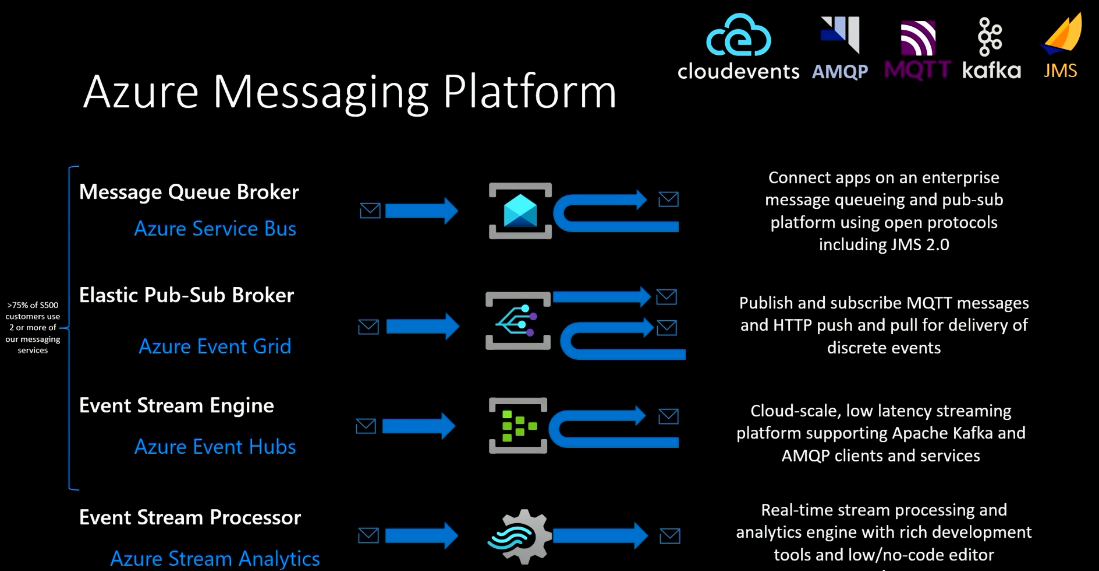

#2: Service Bus Deep Dive

Eldert Grootenboer from Microsoft delivered this exclusive session on Service Bus deep dive. The speaker gave a quick introduction to Azure Service Bus and the various Azure messaging platforms.

Upcoming previews in Azure Service Bus

He then enthusiastically spoke about the various upcoming previews to be released in the coming months which are as follows.

Durable terminus

This feature aims to resolve the following:

- Solves connection issues with containers and distributed systems.

- Allows the recovery of broken links.

- Keeps end-to-end tracking of the full message state, ensuring reliability.

Geo data replication

The current Geo disaster recovery is only for the recovery of metadata and the new release aims to solve the following:

- Full data replication across multiple regions.

- Introducing two types of replications – synchronous and asynchronous.

- Manual failover with telemetry. This feature gives a variety of telemetry data and allows one to initiate a manual failover as per the user’s requirements.

- The Geo data replication feature is being released for both Service Bus and Event Hubs.

Recently released

He then discussed a few of the recently released features, which are as follows.

Passwordless authentication for JMS

- AAD-backed authentication

- Support for managed identities and service principals

Performance features

Attain consistent low latency on your entities

- Multi-tiered storage.

- Vastly improves reliability.

Partitioned namespaces for premium

- Higher scalability by allowing multiple partitions in premium namespaces.

- Useful in high-throughput scenarios, where absolute ordering and message distribution are less important.

- Ordering can be enforced using partition keys or sessions.
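To see why a partition key preserves ordering for related messages, here is a minimal sketch in plain Python. It does not use the Service Bus SDK, and the hashing scheme, the function names, and the sample order events are all assumptions made for illustration; the service's real partition assignment is internal. The point is only that messages sharing a key always land in the same partition, so their relative order is kept even though there is no global order across partitions.

```python
import hashlib
from collections import defaultdict

PARTITION_COUNT = 4  # hypothetical number of partitions


def pick_partition(partition_key: str, partition_count: int = PARTITION_COUNT) -> int:
    """Map a key to a partition with a stable hash (illustrative scheme only)."""
    digest = hashlib.sha256(partition_key.encode("utf-8")).digest()
    return int.from_bytes(digest[:4], "big") % partition_count


def distribute(messages):
    """Append each (key, body) pair to the partition chosen for its key."""
    partitions = defaultdict(list)
    for key, body in messages:
        partitions[pick_partition(key)].append((key, body))
    return partitions


def events_for(partitions, key):
    """Read back the bodies for one key, in the order its partition stored them."""
    return [body for k, body in partitions[pick_partition(key)] if k == key]


# Hypothetical interleaved traffic for two orders.
messages = [
    ("order-1", "created"),
    ("order-2", "created"),
    ("order-1", "paid"),
    ("order-2", "cancelled"),
    ("order-1", "shipped"),
]
partitions = distribute(messages)
order_1_events = events_for(partitions, "order-1")
order_2_events = events_for(partitions, "order-2")
```

Each order's events come back in the sequence they were sent, regardless of which partition the hash selects.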

Optimizing performance in Azure Service Bus

Eldert shared several ideas for optimizing Azure Service Bus performance, starting with:

- Identifying the right SKU: The Standard SKU runs on shared resources and is largely used for development and test scenarios, whereas the Premium SKU offers dedicated resources with predictable latency and throughput for production scenarios.

- Namespace sharding: It’s now possible to shard workloads across multiple namespaces and multiple regions.

He then threw light on techniques such as reusing factories and clients, concurrency, and prefetching, and on features including sessions, scheduled messages, deferred messages, transactions, and de-duplication that help optimize performance further.

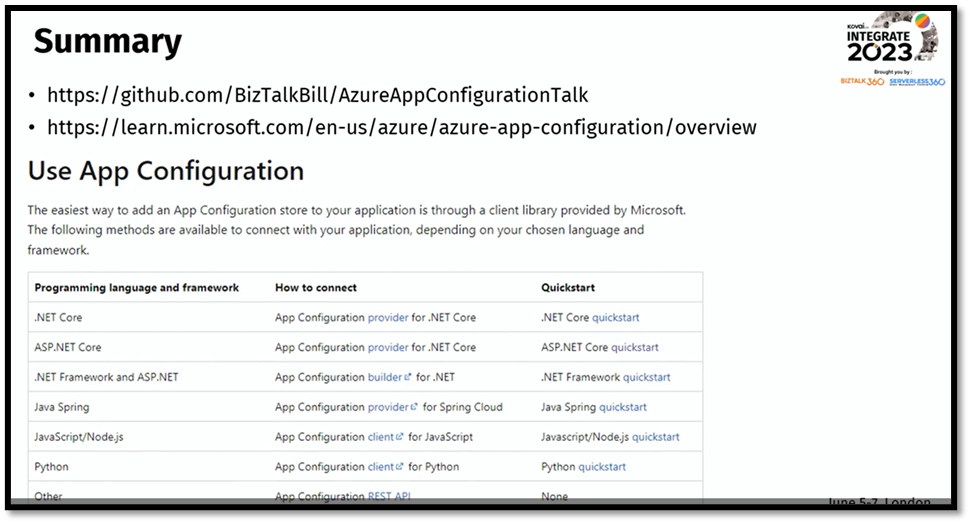

#3: Using Azure App Configuration with Azure Integration Services

At INTEGRATE 2023, the audience was treated to an enlightening session by Bill Chesnut, Cloud Platform & API Evangelist at SixPivot. With years of experience working on complex projects for clients, Bill shared his insights on leveraging Azure App Configuration to solve common challenges in application development and deployment.

To kick off the session, Bill took the audience on a journey through his day-to-day activities, demonstrating how he navigates client portals, hunts for files and configurations, and ultimately utilizes app config to simplify his work. With everyone on the edge of their seats, he dove into the core topics of the session, exploring the benefits and best practices of Azure App Configuration.

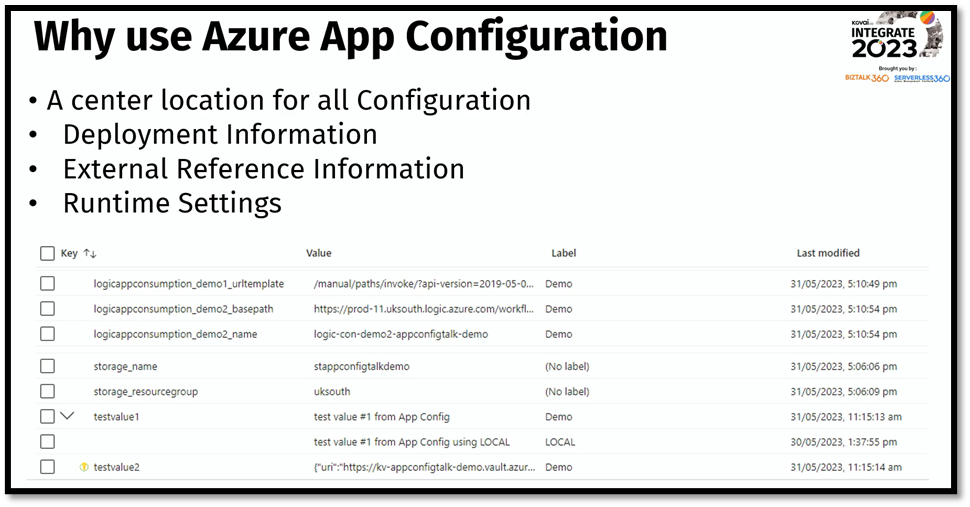

Bill started by answering the fundamental question: What is app config? He explained how it provides a centralized and dynamic approach to managing application settings, eliminating the need to directly access Azure Key Vault. This approach ensures that necessary values can be obtained easily, regardless of the environment or subscription.

One of the highlights of the session was when Bill showcased a sample application stored in a GitHub repository. He emphasized the importance of structuring the app configuration settings, including keys, values, and labels, to accommodate different environments such as development, testing, and production. By leveraging these configurations, developers can seamlessly adapt their application to various scenarios, without worrying about accessing Key Vault.
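The key, value, and label structure can be pictured with a small sketch in plain Python. It does not call the Azure App Configuration service or SDK; the setting names, URLs, and the `get_setting` helper are hypothetical. Falling back to the unlabeled value when no environment-specific entry exists is a design choice made in this sketch, not a statement about how the service itself resolves labels.

```python
from typing import Optional

# Hypothetical settings store: (key, label) -> value.
# A label of None stands for the default, unlabeled entry.
SETTINGS = {
    ("Orders:ApiBaseUrl", None): "https://localhost:5001",
    ("Orders:ApiBaseUrl", "test"): "https://orders-test.example.com",
    ("Orders:ApiBaseUrl", "production"): "https://orders.example.com",
    ("Orders:RetryCount", None): "3",
}


def get_setting(key: str, label: Optional[str] = None) -> str:
    """Return the value stored for the label, else the unlabeled default."""
    if (key, label) in SETTINGS:
        return SETTINGS[(key, label)]
    return SETTINGS[(key, None)]


production_url = get_setting("Orders:ApiBaseUrl", "production")
production_retries = get_setting("Orders:RetryCount", "production")
default_url = get_setting("Orders:ApiBaseUrl")
```

The same key resolves to a different value per environment, while settings that do not vary are stored once.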

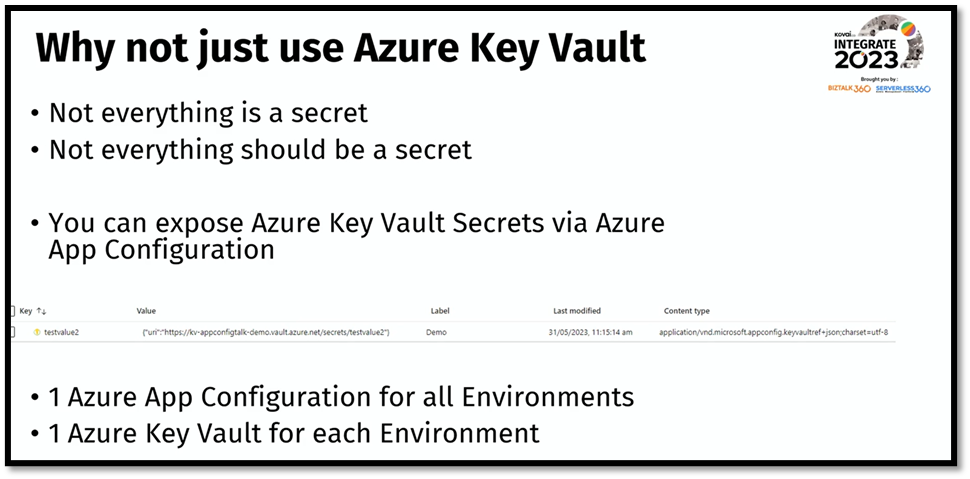

Bill went on to discuss why Azure Key Vault should be avoided in certain cases, showcasing how templates can be utilized to fetch values from Key Vault indirectly. This approach not only simplifies the process but also enhances security and flexibility in application development.

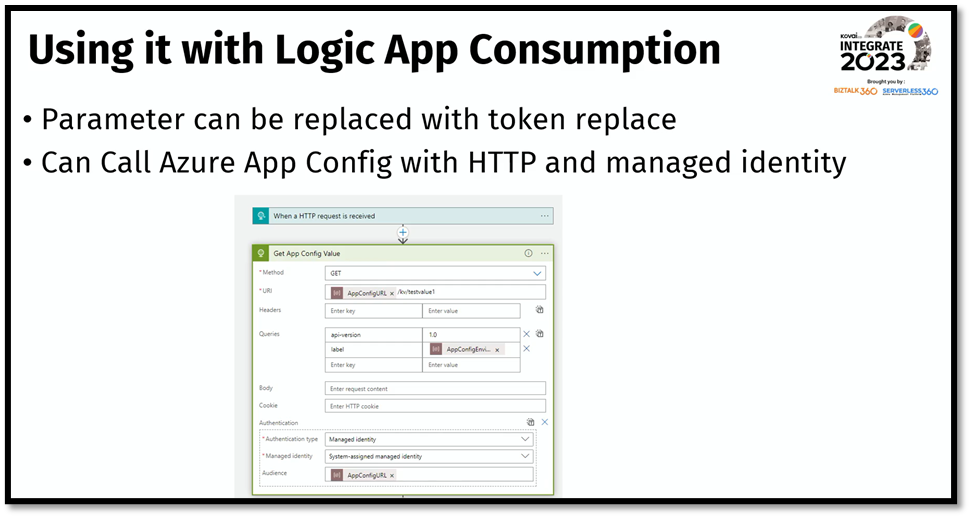

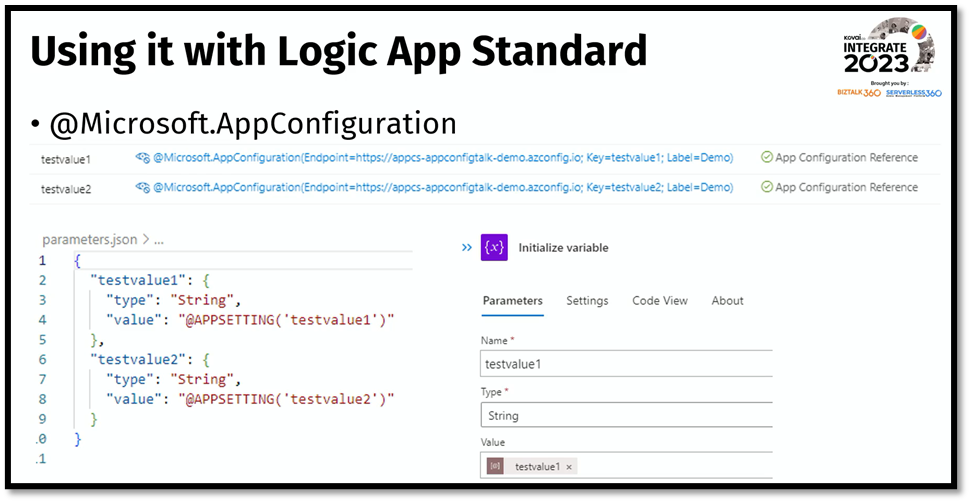

The session also delved into practical implementations, with Bill highlighting how Azure App Configuration can be integrated with Logic Apps. He explained that while the Key Vault connector lacks managed identity support, using App Configuration with Logic App Consumption resolves this limitation. He showcased snippets from a live demo, demonstrating the seamless integration between Logic Apps and App Configuration, which left the audience captivated.

As the session came to an end, Bill provided references to GitHub repositories and additional materials, empowering attendees to implement the discussed concepts in their own businesses. The engaging Q&A session demonstrated the enthusiasm and interest of the audience, affirming the value of Azure App Configuration in simplifying application development and deployment workflows.

#4: Modernising the Supply Chain with Logic Apps and EDI

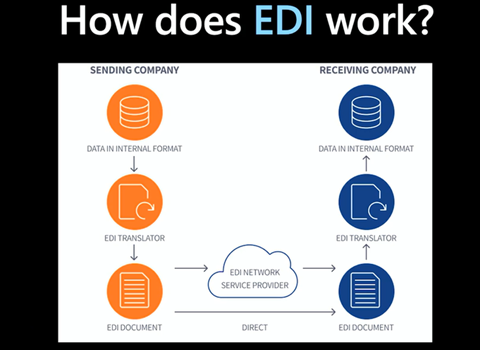

Martin Abbot, Integration Specialist at Microsoft, kickstarted the session with a brief introduction to EDI and how deeply it is involved in the supply chain.

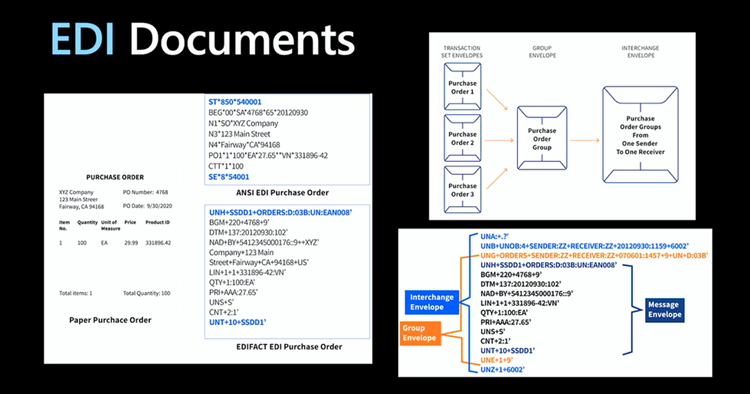

He first gave an overview of EDI (Electronic Data Interchange) and explained how it works:

The sender identifies the data or document, which is then processed by an EDI Translator to convert it into an EDI Document. This document is then sent to the EDI Network service provider for transmission to the receiver company.
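The translation step can be sketched in a few lines of Python. This is a heavily simplified illustration, not a conformant translator: it uses the default EDIFACT separators (`+` between data elements, `:` between components, `'` as segment terminator, `?` as release character) and emits only a handful of segments, omitting the envelope segments a real interchange needs. The invoice fields and helper names are hypothetical.

```python
SERVICE_CHARS = "?+:'"  # default EDIFACT release, element, component, terminator


def escape(value: str) -> str:
    """Prefix any service character in the data with the release character '?'."""
    return "".join("?" + ch if ch in SERVICE_CHARS else ch for ch in value)


def segment(tag: str, *elements) -> str:
    """Build one segment; a tuple or list element becomes a composite."""
    parts = [tag]
    for element in elements:
        if isinstance(element, (list, tuple)):
            parts.append(":".join(escape(str(c)) for c in element))
        else:
            parts.append(escape(str(element)))
    return "+".join(parts) + "'"


def to_edi(invoice: dict) -> str:
    """Translate a small invoice record into a few EDIFACT-style segments."""
    return "".join([
        segment("BGM", "380", invoice["number"]),   # 380 = commercial invoice
        segment("NAD", "BY", invoice["buyer"]),     # BY = buyer
        segment("NAD", "SE", invoice["seller"]),    # SE = seller
        segment("MOA", ("77", invoice["total"])),   # 77 = invoice amount
    ])


# Hypothetical sample data.
invoice = {"number": "INV-1001", "buyer": "Acme", "seller": "Tailwind", "total": "250.00"}
edi_document = to_edi(invoice)
```

In practice the translator also validates the document against the agreed message schema before it is handed to the network provider.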

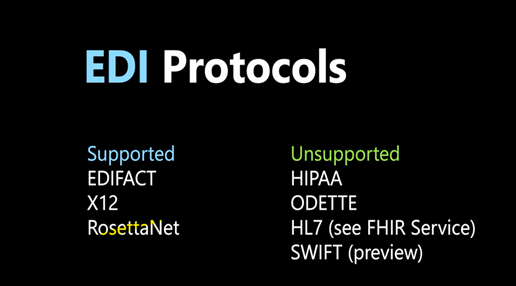

Then, he dove deep into the types of EDI communication:

Point to Point: In this approach, a direct connection is established between business partners to securely transfer their transactional documents.

Value Added Network: A service provider routes the business information to the appropriate receivers in a secure manner.

AS2: It is a communication protocol designed to transport data, especially EDI data, in a secure and reliable way. A point-to-point or VAN connection is established for business data exchange. It takes care of the encryption and decryption of the document.

Relevancy of EDI in Modern World

He highlighted that for more than 60 years, EDI has been the standard format for transmitting electronic data. With the integration of Logic Apps and modernizing supply chains, it will be able to scale even further in the future.

Demonstration of the supply chain through EDI and Logic Apps

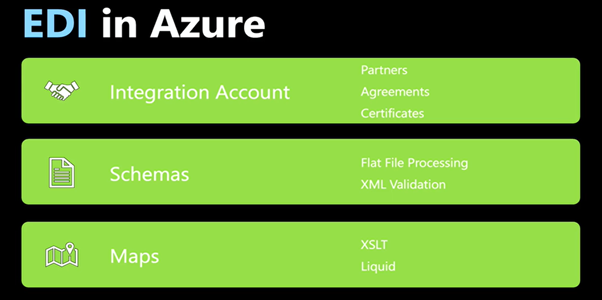

EDI in Azure

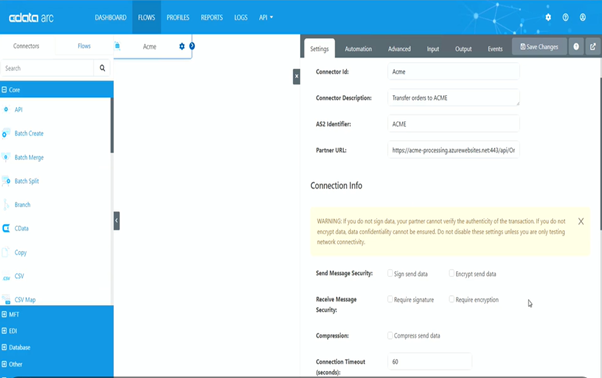

Processing of Transactional Documents in CData Arc

Martin demonstrated a way to transfer the invoice details from partner 1 to partner 2 through AS2 in the CData Arc Platform. The sender or AS2 identifier was set to Tailwind.

In the flow tab, the trading partner was set to Acme and continued with the default configuration. Additionally, MDN Receipt was enabled to get acknowledgment from the receiver. The invoice file was also uploaded for the processing.

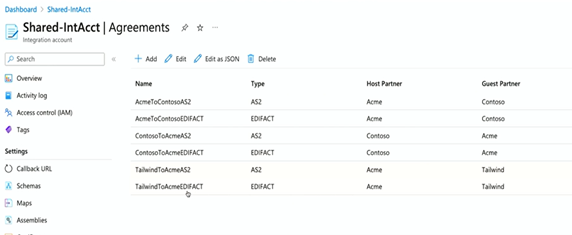

He then switched to Azure Integration Accounts to inspect the agreements of Tailwind and Acme made through AS2 and EDIFACT.

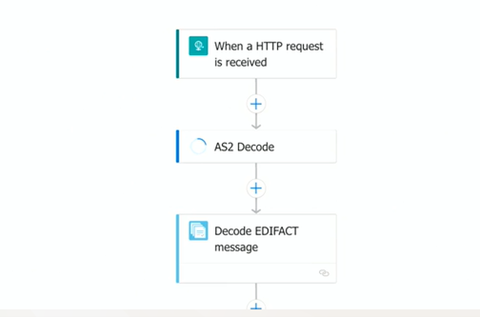

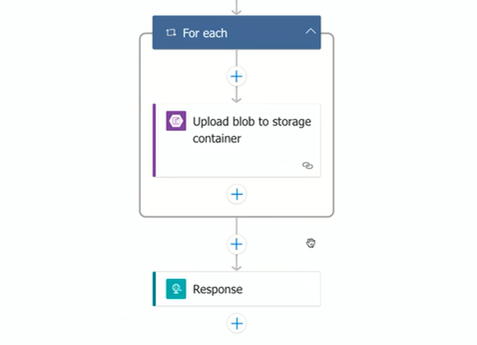

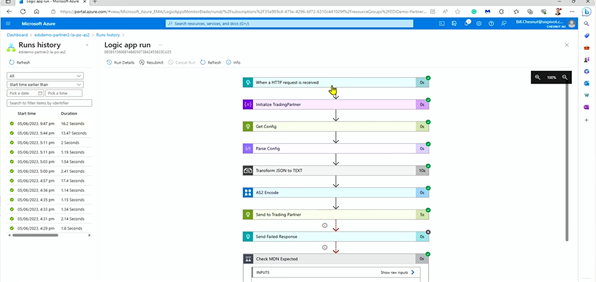

Processing of Transactional Documents through Logic Apps

Martin demonstrated the processing of Order-In and Invoice-Out documents between trading partners through Logic Apps.

Bill Chesnut then took over the session and discussed a way to test integration accounts for business transfers without an actual trading partner. He walked through the process of certificate creation for AS2.

Moving on, he briefly showcased the EDI document transfer between trading partners with AS2 in Logic Apps.

The session ended with Martin emphasizing the importance of EDI in the modern supply chain and how well Logic Apps supports it.

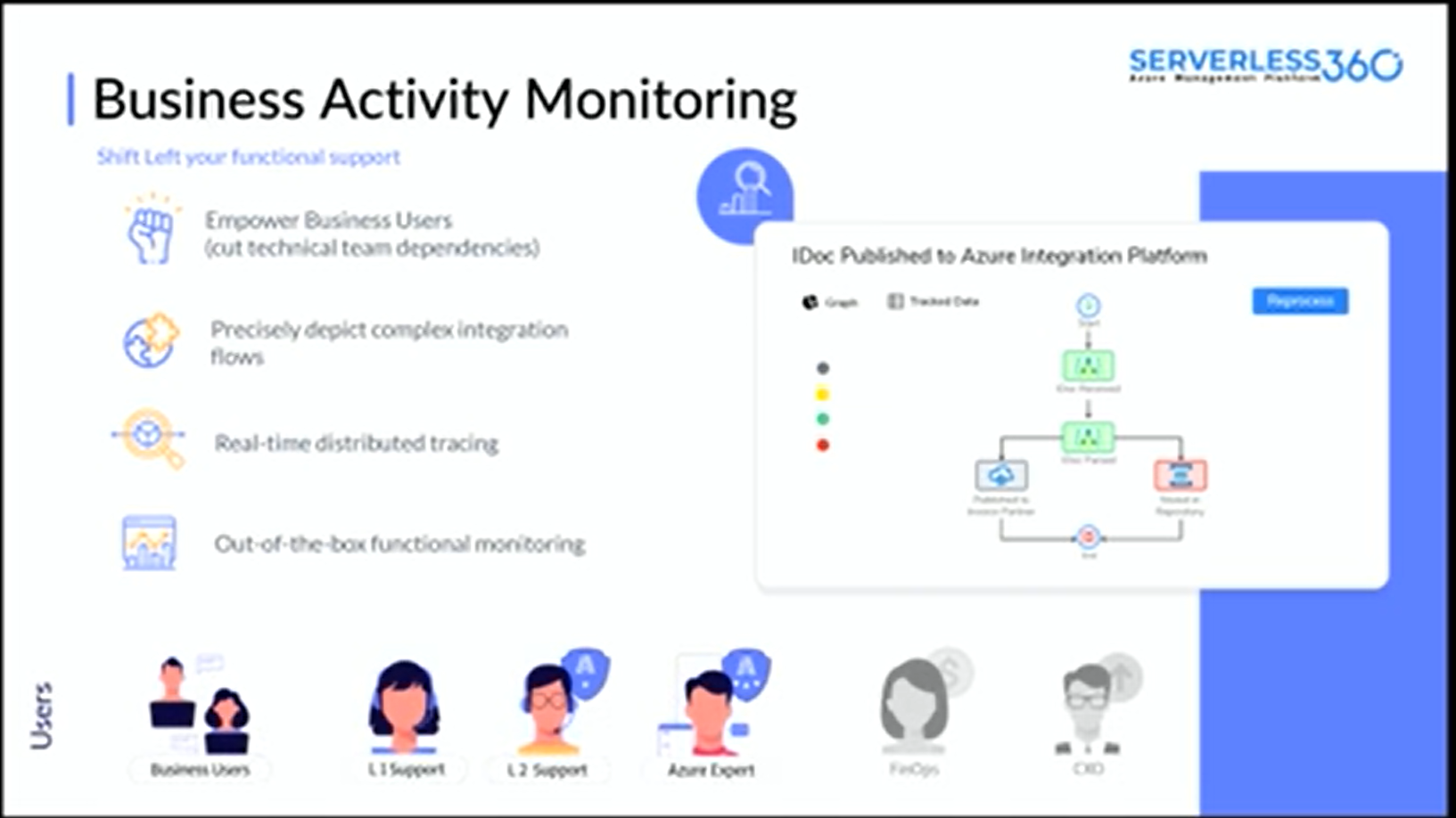

#5: Real-world examples of Business Activity Monitoring with Turbo360

We got an exciting session about Business Activity Monitoring along with a real-time demo straight from Michael Stephenson, Microsoft Azure MVP.

What is BAM and why BAM?

In every integration solution, it is crucial for organizations to establish a comprehensive traceability and monitoring system that empowers business users. Business Activity Monitoring (BAM) in Turbo360 serves as an invaluable solution for tracing and monitoring hybrid integrated solutions. Without tools like BAM, identifying bottlenecks or failures within the business flow becomes a challenging task.

BAM – a self-service portal

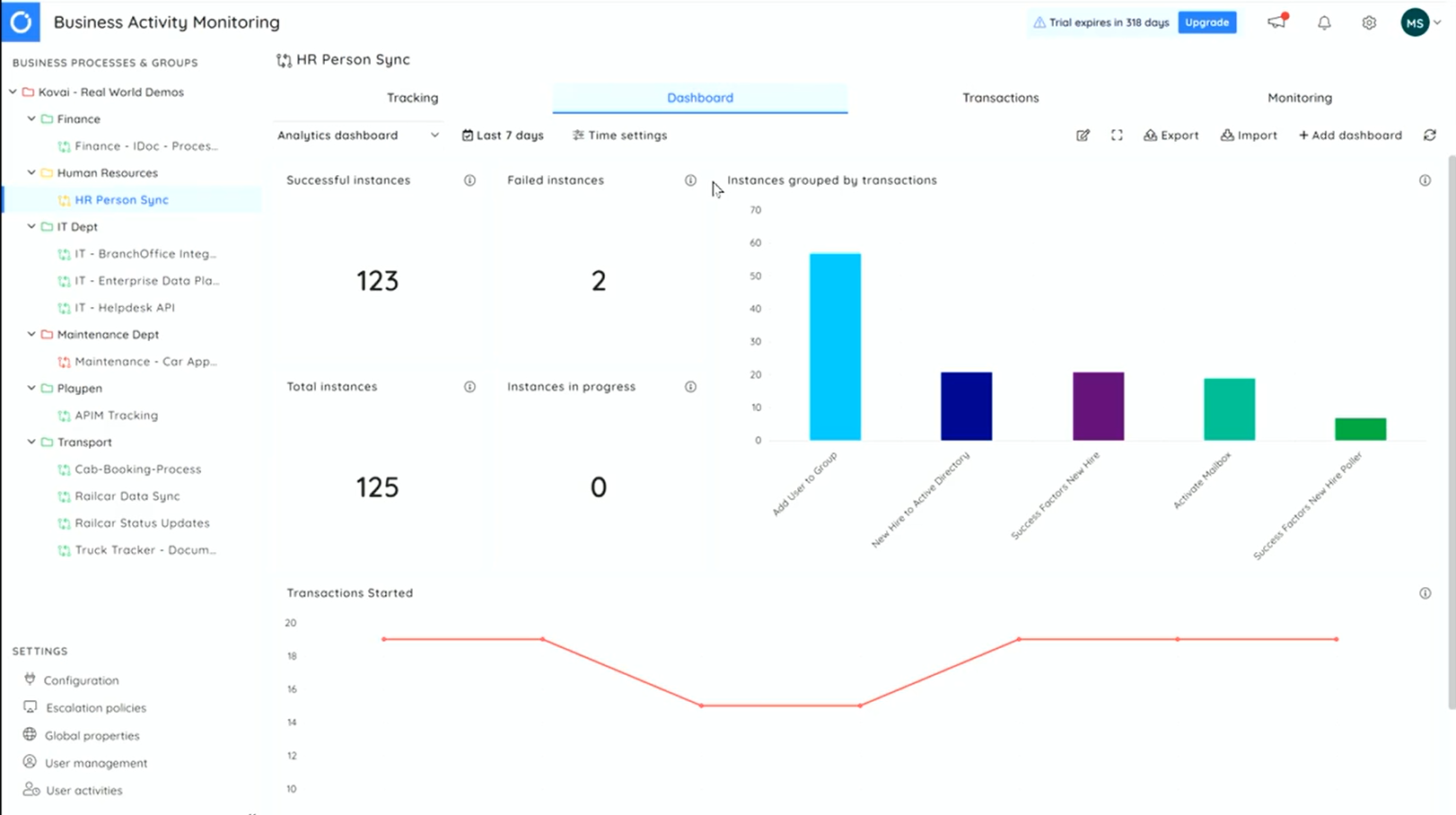

Michael then talked about what can be accomplished with Business Activity Monitoring (BAM) and shared real-world examples built around the concept of employee self-service. In this scenario, when new employees join the company, their information needs to be synchronized across various systems.

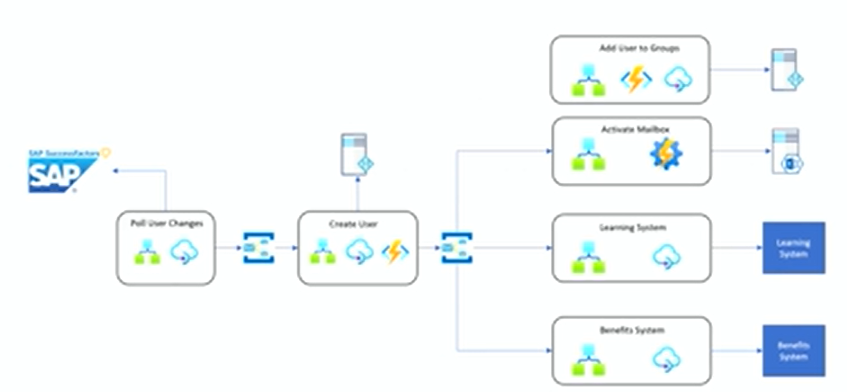

He started by integrating with SAP SuccessFactors using Logic Apps and API Management. These components worked together to pull data from SuccessFactors and publish it to the Service Bus using a publish-subscribe messaging pattern.

When a new user joins the organization, a user creation use case is triggered. This use case involves Functions, Logic Apps, and API Management, and it integrates with Active Directory. Subsequently, the user is provisioned, and this action triggers multiple integrations via the Service Bus.

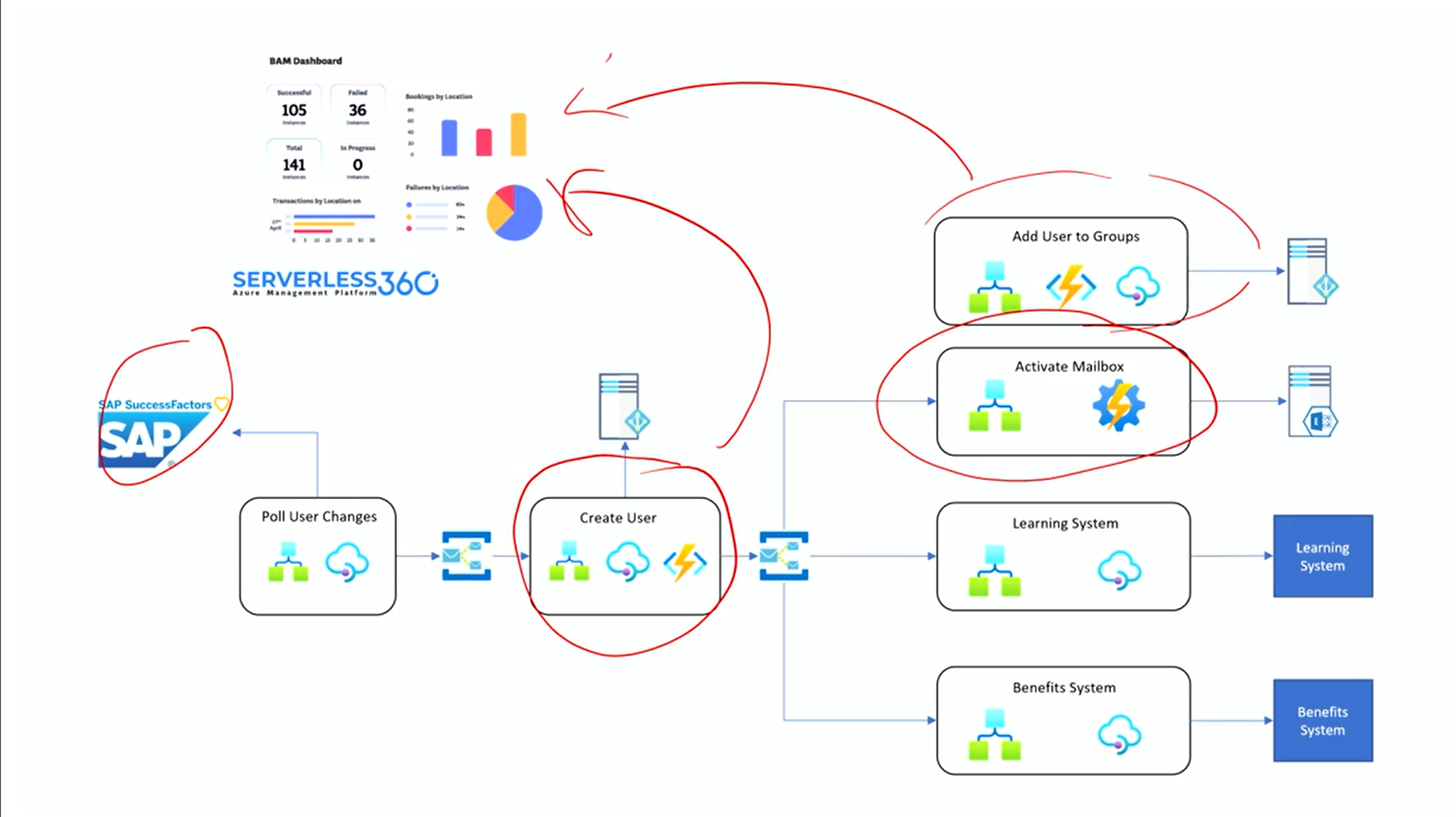

Here, the challenge lies in having a holistic view of the entire integration landscape. This is where BAM comes into play. With this, we can establish integrations across multiple components that send BAM events. These events are not merely low-level diagnostics; they provide meaningful milestones for business operations.

For example, an event can indicate the receipt of a message to create a user, an error encountered during processing, or the successful creation of a user. These events hold business value and help us track the progress of critical operations. By leveraging Turbo360, we can push these events into the platform, allowing non-developers to manage and monitor these transactions effectively.
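The idea of business milestones, as opposed to low-level diagnostics, can be sketched as follows. This is plain Python with hypothetical class, stage, and status names; it is not the Turbo360 API, only an illustration of recording milestones per business transaction and flagging the ones that need attention.

```python
from dataclasses import dataclass, field


@dataclass
class BusinessTransaction:
    """One business flow, tracked as a sequence of (stage, status) milestones."""
    transaction_id: str
    milestones: list = field(default_factory=list)

    def record(self, stage: str, status: str = "Success") -> None:
        """Append a milestone reached by one of the integration components."""
        self.milestones.append((stage, status))

    @property
    def needs_action(self) -> bool:
        """True when any milestone failed, so a business user should look at it."""
        return any(status == "Failed" for _, status in self.milestones)


# Hypothetical user-creation flow.
ok_flow = BusinessTransaction("employee-1001")
ok_flow.record("Create-user message received")
ok_flow.record("User created")

failed_flow = BusinessTransaction("employee-1002")
failed_flow.record("Create-user message received")
failed_flow.record("User creation", status="Failed")

action_required = [t.transaction_id for t in (ok_flow, failed_flow) if t.needs_action]
```

A list like `action_required` is the kind of view a non-developer can act on without reading component-level logs.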

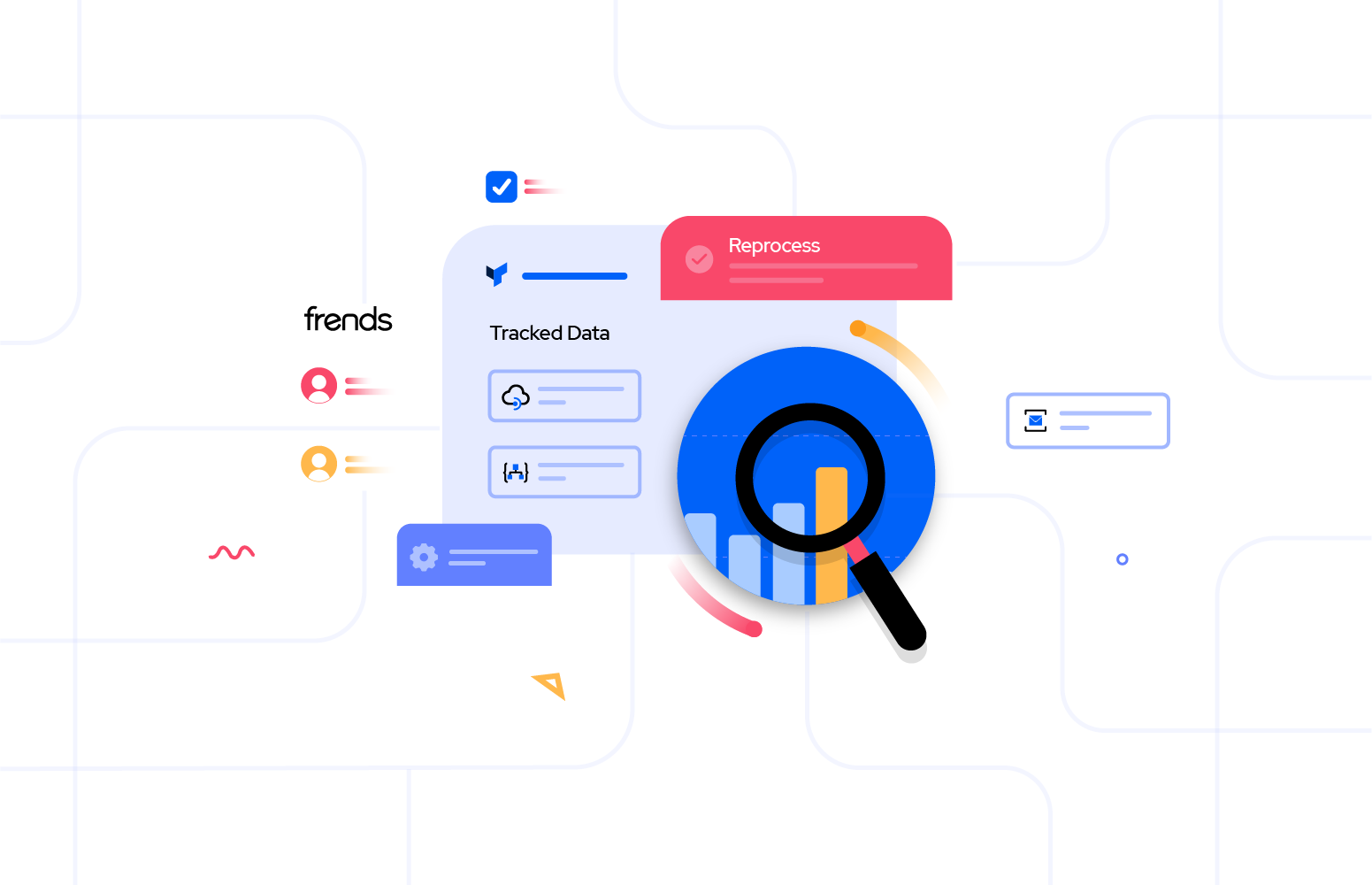

The Action Required tab of BAM provides convenient access to all the error transactions. Moreover, users can save a query to retrieve error data and monitor its progress using the query monitor. The BAM dashboard also offers a query-based widget that lets users save and view data in a clear and organized manner.

It offers several advantages in terms of functionality and ease of use. Here are some key advantages of Turbo360 BAM:

- Track, Trace, Action Required, Repair/Resubmit.

- Advanced Querying and Filtering

- End-to-End Visibility

- Seamless Integration

- Extensive Monitoring Capabilities

- User-Friendly Interface

- Failure Monitoring, Query Monitoring, Duration Monitoring.

- Custom Dashboards and Widgets

- Enhanced Security and Compliance

Overall, Turbo360 BAM provides a comprehensive and user-friendly tracking solution for complex Azure/hybrid integrations, offering advanced features, extensive integration support, and enhanced visibility compared to native Azure monitoring tools.

#6: Event Hubs – Kafka and event streaming at high-speed

Kasun Indrasiri, Senior Product Manager from Microsoft took us on an exhilarating journey through the latest capabilities of Event Hubs, giving us a sneak peek into the exciting features. He was accompanied by Steve Gennard, Development Director of FX at the London Stock Exchange.

Kasun started his discussion by talking about real-time event streaming being the lifeblood of modern enterprises.

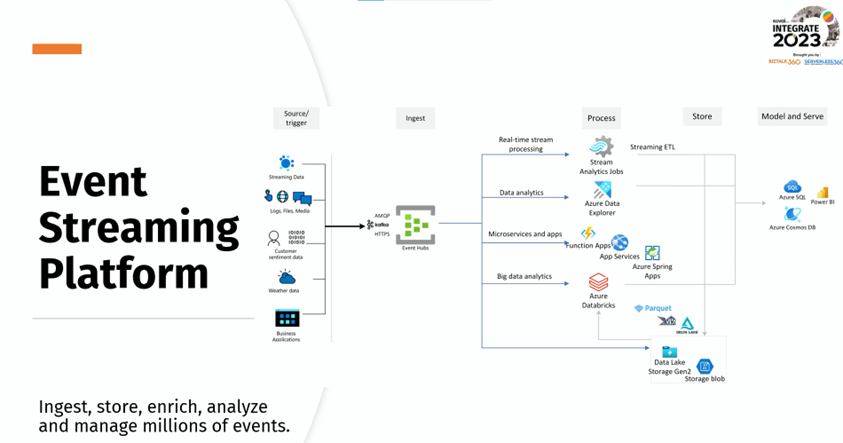

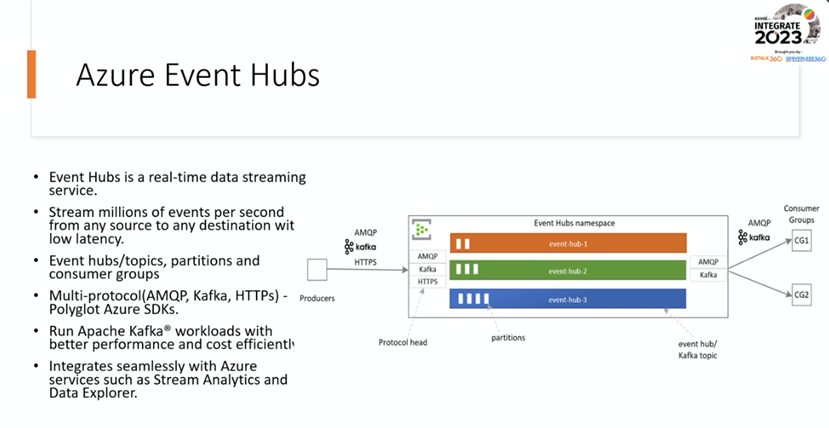

Event streaming platform

An event streaming platform allows you to ingest, store, enrich, and generate business insights from all the events that are generated within the enterprise. Azure Event Hubs plays the role of the main ingestion service.

He then gave an introduction to Azure Event Hubs and explained how it works.

Event Hubs for Kafka

For the following reasons, one may choose Event Hubs for Kafka.

- Simplify Kafka’s event-streaming experience by bringing existing Kafka applications to Event Hubs without writing a single line of code.

- Event Hubs provides scalability along with better performance and reliability, which is another significant benefit.

- Azure Event Hubs is one of the most cost-efficient cloud services with no feature-specific cost.

- By using Event Hubs, you get all the core services like Azure Active Directory and Azure Monitor that are natively integrated with Event Hubs.
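Moving an existing Kafka application to Event Hubs is typically a configuration change rather than a code change. The sketch below builds the client settings commonly used for this, in the property style of librdkafka-based clients: the Kafka endpoint of the namespace on port 9093, SASL_SSL with the PLAIN mechanism, the literal user name `$ConnectionString`, and the namespace connection string as the password. The helper name, the namespace, and the placeholder connection string are assumptions for illustration.

```python
def event_hubs_kafka_config(namespace: str, connection_string: str) -> dict:
    """Return Kafka client settings that point at an Event Hubs namespace."""
    return {
        # Event Hubs exposes its Kafka endpoint on port 9093.
        "bootstrap.servers": f"{namespace}.servicebus.windows.net:9093",
        "security.protocol": "SASL_SSL",
        "sasl.mechanism": "PLAIN",
        # The user name is this literal string, not an account name.
        "sasl.username": "$ConnectionString",
        "sasl.password": connection_string,
    }


# Hypothetical namespace; the connection string is deliberately a placeholder.
config = event_hubs_kafka_config("contoso-ns", "<event-hubs-connection-string>")
```

The resulting dictionary can be passed to a Kafka producer or consumer in place of the settings for the original cluster; topic names then map to event hub names.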

What’s new in Azure Event Hubs?

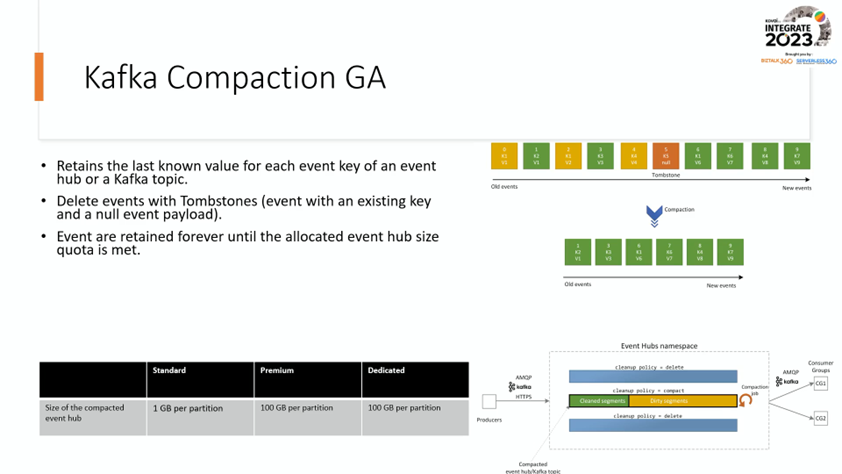

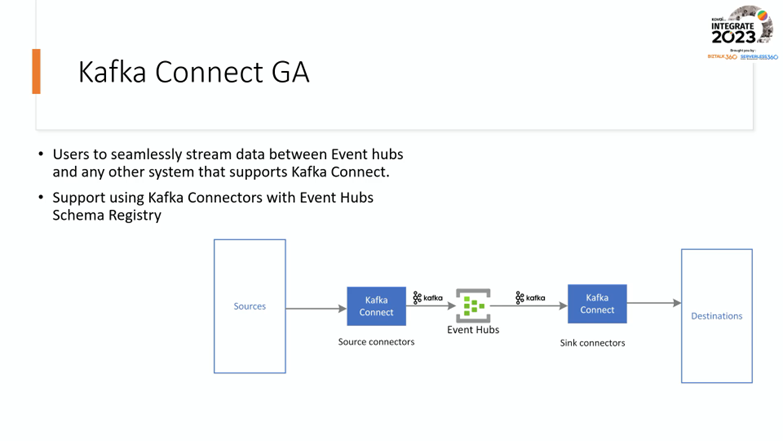

- Kafka compaction and Kafka Connect support recently became generally available.

- The MirrorMaker tool can be used to migrate existing data from an on-premises Kafka cluster to Event Hubs.

- The Data Generator public preview was also launched, with which one can ingest data into Event Hubs and build an end-to-end event streaming pipeline. It is helpful with Azure Stream Analytics, Azure Data Explorer, and multiple other consumer services.

Then, Kasun spoke about the enhancements made to improve the performance of the Azure Event Hubs which include the key features as below.

Event Hubs – Premium

It is a multi-tenant offering that provides dedicated computing and memory capacity.

Next-gen Event Hub dedicated clusters

It’s built on top of the latest infrastructure with self-serve scalability. It was also described as providing consistently low latency compared with other cloud vendors.

Finally, Steve Gennard discussed a few challenging use cases of Event Hubs with the stock exchange.

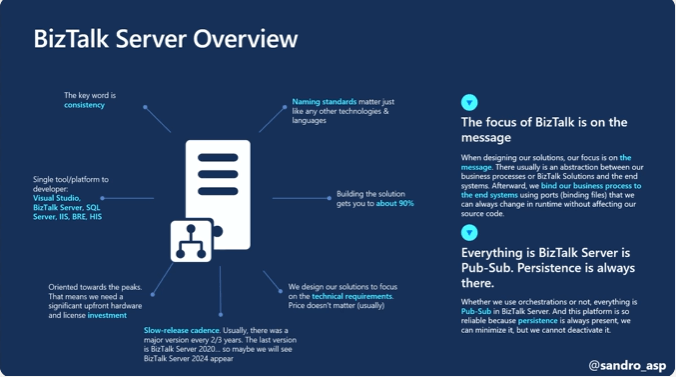

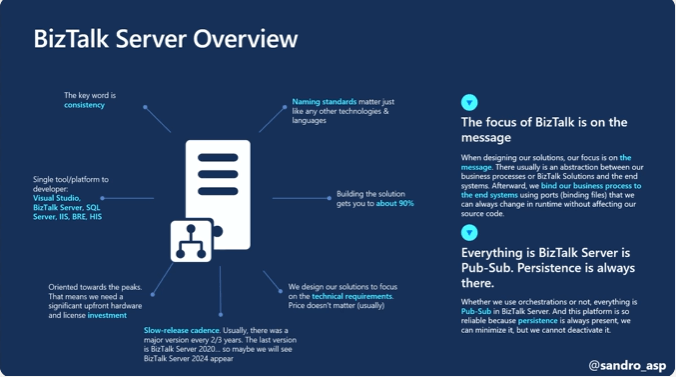

#7: BizTalk Server to Azure Integration Services migration

Sandro Pereira, Head of Integration at DevScope and one of the event’s renowned speakers, was a highlight of Day 2 with the most anticipated topic in the integration space: “BizTalk Server to Azure Integration Services migration”. Sandro kick-started the session by sharing a link to a whitepaper that can help audiences in the migration phase.

He set the tone at the very beginning, saying that “this migration doesn’t mean that BizTalk Server is dead”. Microsoft has announced a Mainstream support end date of April 11, 2028, and an Extended support end date of April 9, 2030.

Sandro advised that before enterprises begin the migration, they need an overview of both BizTalk Server and Azure, and he provided one.

With that context in place, he briefly explained how long a complete migration from BizTalk Server to Azure can take. The journey differs from organization to organization, depending on the number of servers they own; in short, it depends on their BizTalk Server footprint. Hence, this journey will not be an easy or short ride for all.

Migration basics

Sandro highlighted the following key points to be remembered before initiating the migration process:

- What is the technology stack we are going to use?

- What is our subscription policy?

- What is our resource policy?

- What is going to be our security policy?

- What is our tag policy?

- What is our Azure naming convention?

- End-to-end tracking capabilities strategy

- Monitoring capabilities strategy

- Error handling and Retry capabilities

- Persistence or Reentry capabilities

- Deployment policy

- Development Strategies/Policies

Migration phases

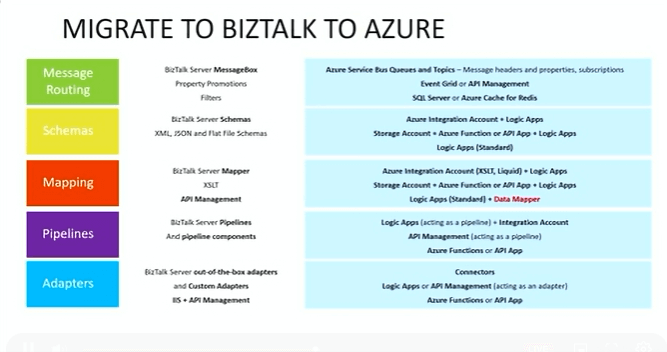

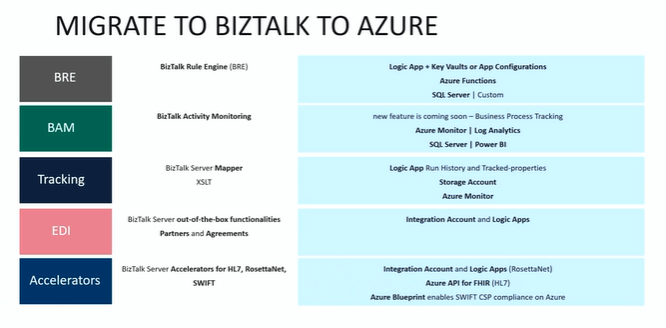

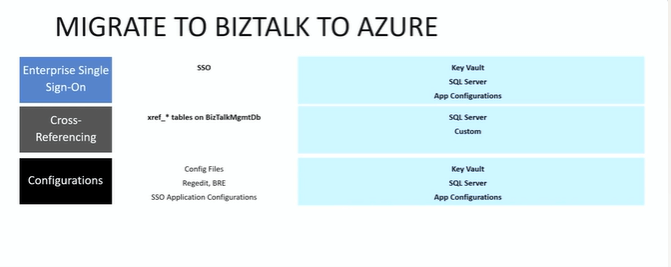

Once the above-mentioned key points are in place, the next step is the migration itself. He explained how each BizTalk component maps to Azure.

Sandro then listed the phases involved in migrating from BizTalk Server to Azure Integration Services:

- Assessment: Assessment of existing BizTalk Server environment and applications.

- Design: Design of new Azure Integration Services environment and integration solutions.

- Development: Development of integration solutions using Azure Integration services.

- Testing: Testing of Integration solutions for functionality and performance.

- Deployment: Deployment of integration solutions to production environments.

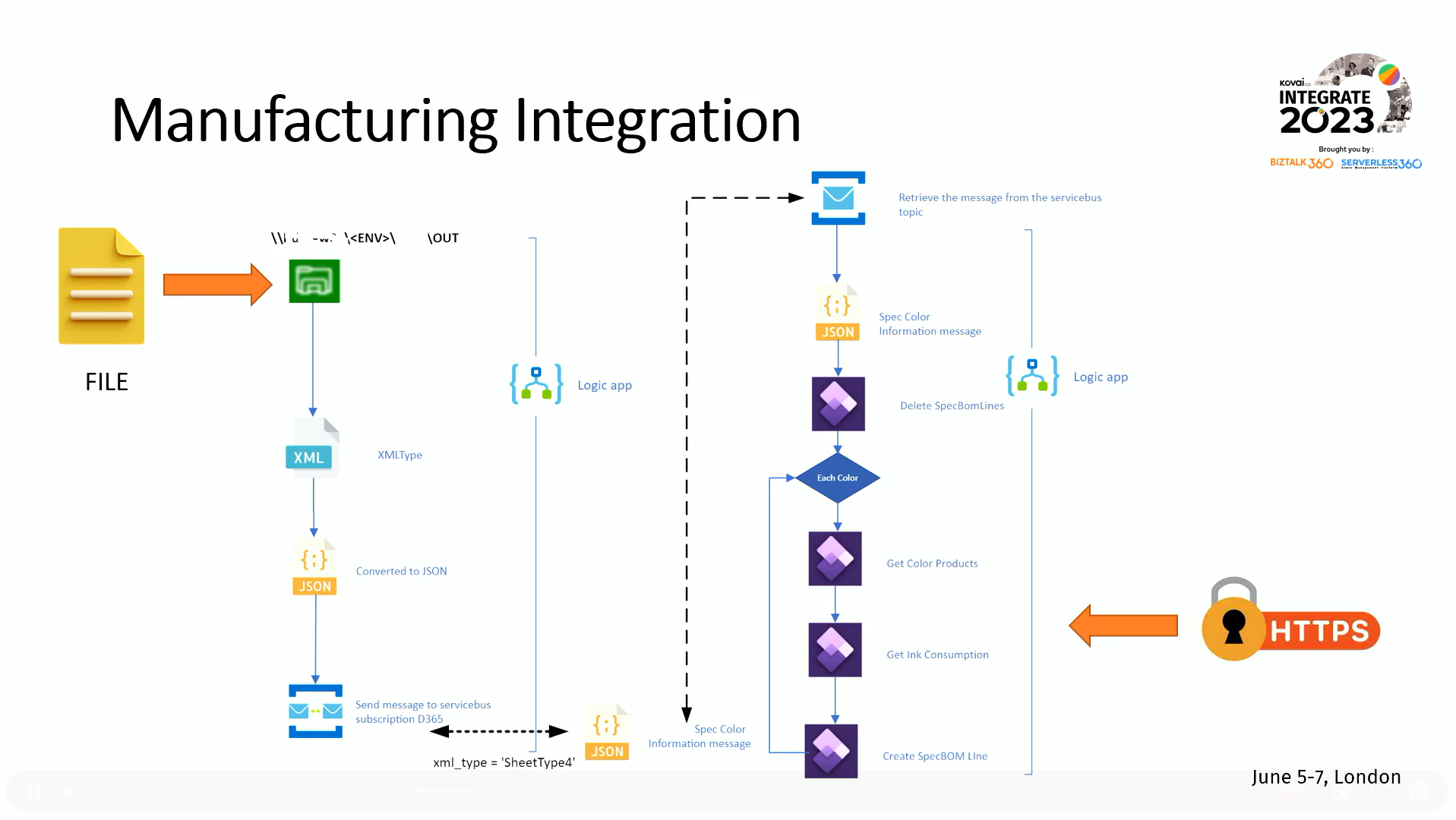

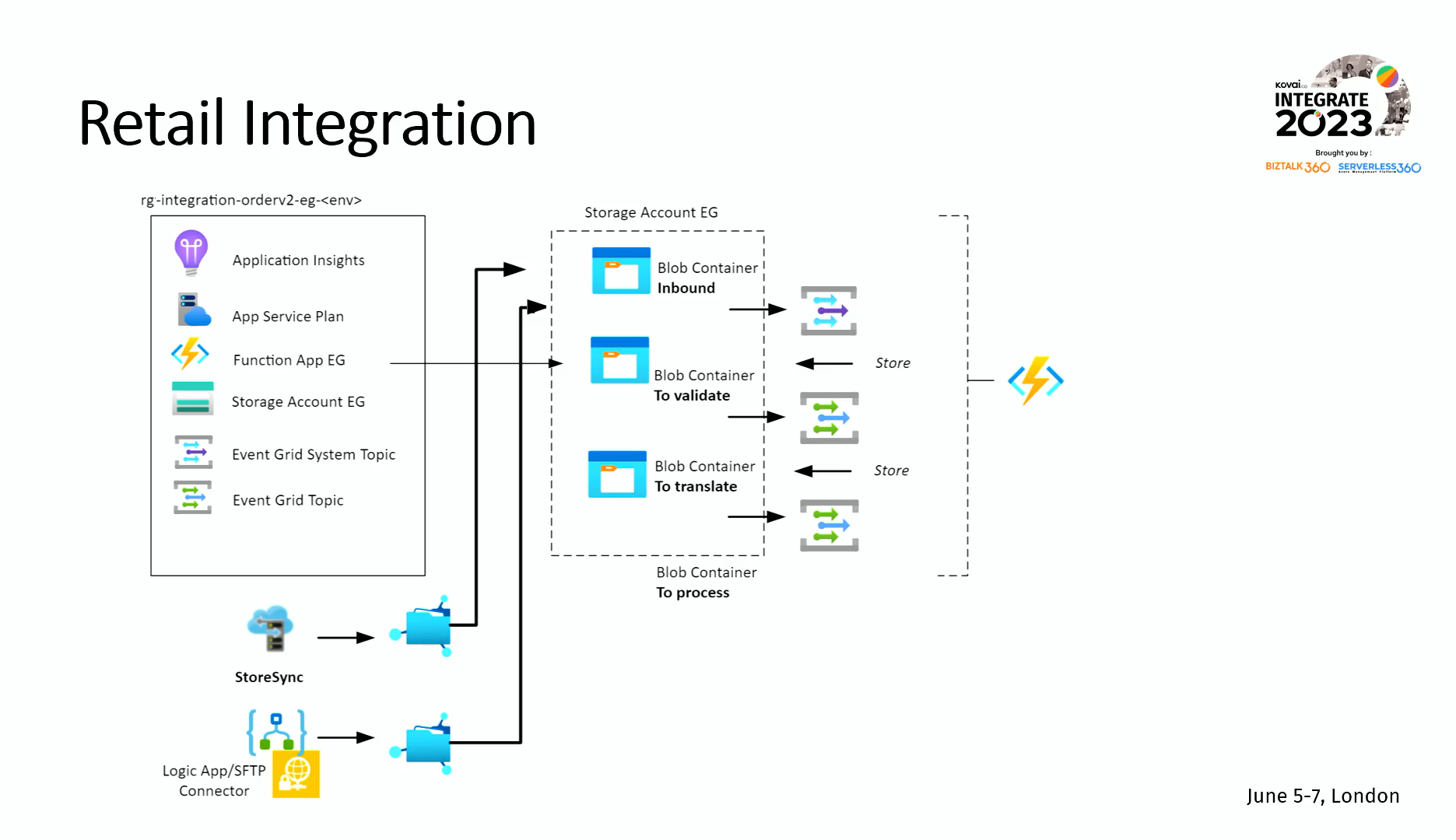

In the next part of the presentation, Sandro showed a few migration scenarios in a crisp, clear manner, which awed the audience.

#8: Azure Logic Apps Deep Dive

The deep dive session on Logic Apps featured Slava Koltovich and Wagner Silveira from Microsoft, primarily discussing the future roadmap and updates on Logic Apps.

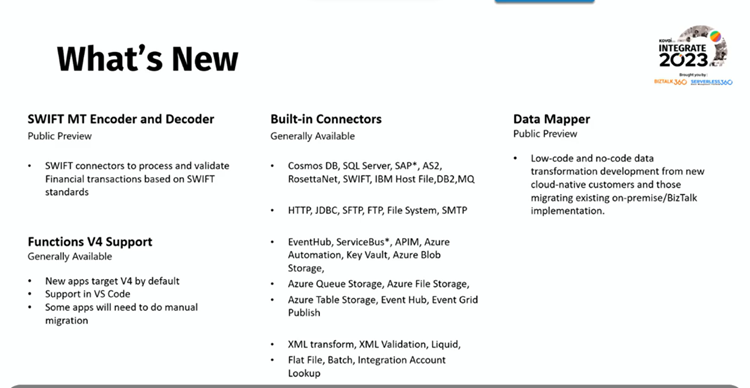

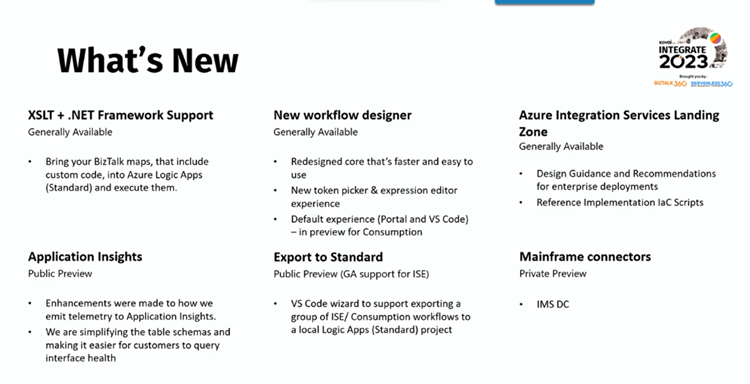

Below are some of the feature updates:

- Logic App Standard now supports Functions v4 and it’s been generally rolled out to users.

- More than 30 new built-in connectors have been released for Logic Apps Standard.

- Users can now utilize Logic Apps Standard to process SWIFT-based payment transactions and construct cloud-native applications with comprehensive security measures, isolation, and VNET integration.

- Developers can now add .NET Framework methods into XSLT transformations in Logic Apps Standard, which simplifies migrating integration workloads from BizTalk Server and Integration Service Environments (ISE) to Logic Apps.

- The revamped Logic Apps designer offers users an enhanced interface and upgraded functionality for designing and managing their Logic Apps workflows.

- Logic Apps Standard VS Code Extension now allows exporting Logic Apps workflows deployed either in Consumption or Integration Service Environment (ISE).

- The Azure Integration Services landing zone offers comprehensive design guidance for Azure Integration Services at an enterprise level, with recommendations in key areas such as architecture and design considerations.

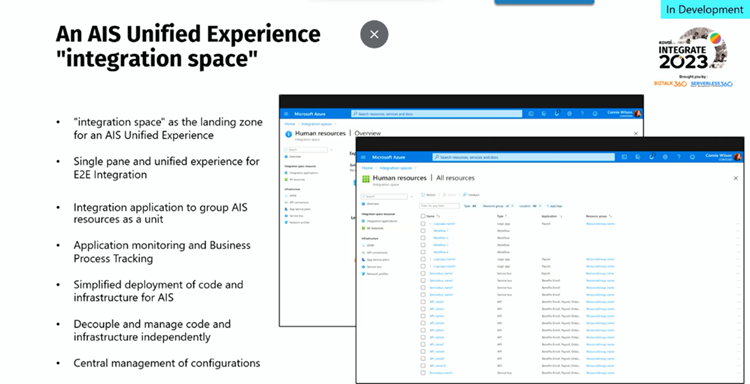

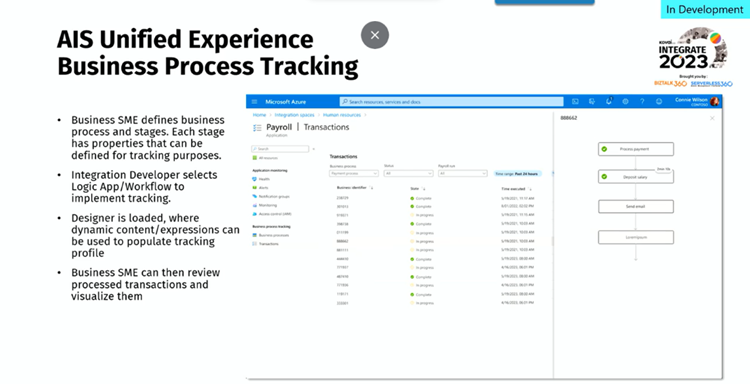

Azure Integration Services application monitoring

Following that, Slava mentioned that the new Azure Integration Services monitoring feature provides a unified experience, offering comprehensive visibility and control over the integration workflows.

With its built-in monitoring capabilities, one can easily track the performance and health of their integrations, monitor message flows, and analyze logs and metrics to gain valuable insights and ensure the seamless operation of applications.

This monitoring feature allows for unified monitoring and tracking of the execution of your business processes within integration workflows. With functionality like Business Activity Monitoring (BAM), users can obtain visibility into the progress and status of business processes, track key milestones, identify bottlenecks, and analyze performance metrics to enhance efficiency.

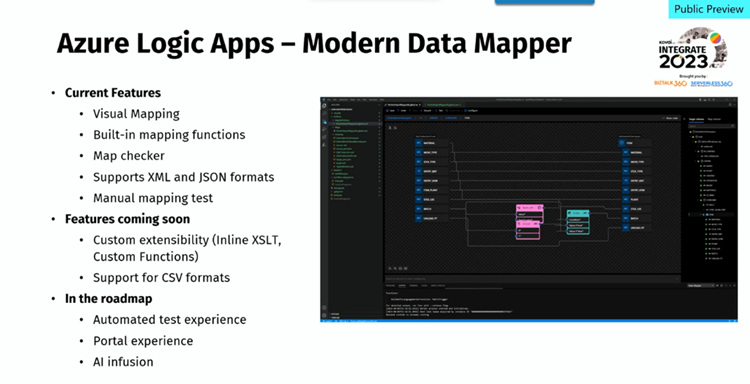

Data Mapper

Accompanying that, Wagner shared updates on the new Data Mapper, a visual data transformation tool available for the Logic Apps Standard and Consumption models. The feature offers an enhanced XSLT authoring and transformation experience, featuring intuitive drag-and-drop gestures, a prebuilt functions library, and manual testing capabilities. It makes it easy to create mappings for XML to XML, JSON to JSON, XML to JSON, and JSON to XML transformations.
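To make the XML to JSON case concrete, here is a small hand-written mapping in Python using only the standard library. It is not the Data Mapper and does not produce XSLT; the order document, its element names, and the target JSON shape are hypothetical. It only shows the kind of field-by-field mapping that the visual tool lets you draw instead of code.

```python
import json
import xml.etree.ElementTree as ET


def order_xml_to_json(xml_text: str) -> str:
    """Map a hypothetical <Order> document to a JSON string."""
    root = ET.fromstring(xml_text)
    mapped = {
        "orderId": root.findtext("Id"),
        "customer": root.findtext("Customer/Name"),
        "lines": [
            # Attribute to property, child element to a typed property.
            {"sku": line.get("sku"), "quantity": int(line.findtext("Qty"))}
            for line in root.findall("Lines/Line")
        ],
    }
    return json.dumps(mapped)


SAMPLE_ORDER = """<Order>
  <Id>PO-7</Id>
  <Customer><Name>Acme</Name></Customer>
  <Lines>
    <Line sku="A1"><Qty>2</Qty></Line>
    <Line sku="B2"><Qty>5</Qty></Line>
  </Lines>
</Order>"""

mapped_order = json.loads(order_xml_to_json(SAMPLE_ORDER))
```

Renaming, restructuring, and type conversion (the quantity becomes a number) are the three operations most maps combine.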

Finally, Slava and Wagner conducted multiple polls in between each update to receive feedback from the audience.

#9: Serverless Workflows using Azure Durable Functions & Logic Apps: When Powers Of Code and LowCode Are Combined

Jonah Andersson, DevOps Engineer, gave her debut session on day 2. Azure Durable Functions and Azure Logic Apps are two serverless computing services in Microsoft Azure that allow developers and users to write stateful workflows and integrate them into other services and applications – by code and user interface logic.

In this session, Jonah shared how these two are relevant in creating an inclusive workspace environment for serverless and cloud development for everyone.

Agenda

- LowCode/NoCode and Serverless Development

- Serverless Integrations Use Cases

- Developing event-driven stateful workflows with Azure Durable Functions and Azure Logic Apps

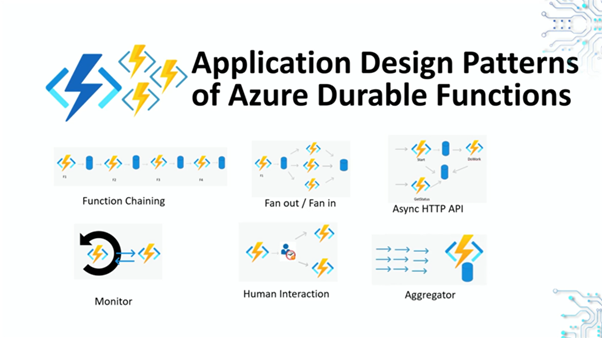

Jonah commenced the session by explaining the benefits of low-code/no-code solutions and serverless development. She also briefly described how Azure Logic Apps and Durable Functions work and gave a quick explanation of orchestrators. Moving forward, Jonah highlighted the different application design patterns of Azure Durable Functions and how they can be implemented.

A glimpse of the patterns discussed,

- Function Chaining – Execute functions in a particular order or sequence

- Fan-Out/Fan-In – Execute multiple functions in parallel, then wait for all functions to finish and aggregate the results

- Async HTTP APIs – Solves the problem of coordinating the state of long-running operations with external clients

- Monitor Pattern – Keeps a stateful monitoring process running until specific conditions are met

- Human Interaction Pattern – Automate business processes that require human interaction

- Aggregator Pattern – Especially used for stateful entities where you can aggregate event data over some time
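The first two patterns can be illustrated with plain `asyncio`. This sketch is an analogy only: it does not use the Durable Functions SDK, and it has none of the checkpointing and replay that make a real orchestration durable. The `activity` coroutine and the step names are hypothetical stand-ins for activity functions.

```python
import asyncio


async def activity(name: str, value: int) -> int:
    """Stand-in for an activity function: yields control, then returns value + 1."""
    await asyncio.sleep(0)
    return value + 1


async def chain(start: int) -> int:
    """Function chaining: each step receives the previous step's output."""
    result = start
    for step in ("validate", "enrich", "store"):
        result = await activity(step, result)
    return result


async def fan_out_fan_in(items) -> int:
    """Fan-out: start all activities concurrently. Fan-in: await all, then aggregate."""
    results = await asyncio.gather(*(activity("process", item) for item in items))
    return sum(results)


chained = asyncio.run(chain(0))                  # three steps, each adds one
total = asyncio.run(fan_out_fan_in([1, 2, 3]))   # sum of 2, 3 and 4
```

In a real orchestrator the same shapes appear, with the framework persisting progress between steps so the workflow survives restarts.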

She then moved on to the concept of sub-orchestrators and how they can be implemented. She also explained best practices and error handling for Azure Durable Functions and how they help during development.

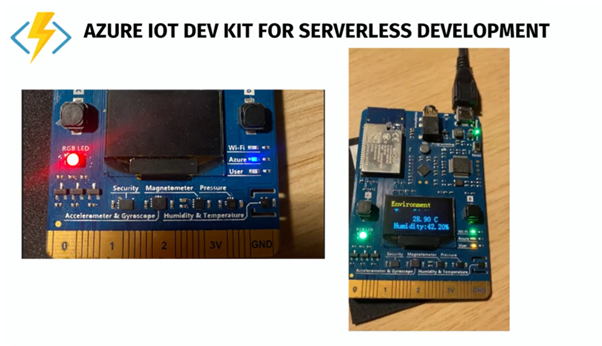

Jonah highlighted an Azure solution she worked on, which solved various problems. The solution is an Azure IoT Dev Kit for Serverless Development, which facilitates integration with Azure serverless and event-driven compute PaaS/SaaS services.

Jonah concluded the session with a key takeaway comparing the properties of Azure Durable Functions and Logic Apps, clearly explaining when to choose which.

#10: Real-time event stream processing and analytics

Kevin Lam, Principal Group Program Manager at Microsoft, and Ajeta Singhal, Senior Product Manager at Microsoft, delivered a session on Real-time event processing and Azure Stream Analytics respectively.

Real-time Event Streaming:

Kevin started with a simple explanation of how information, and access to it, has evolved over the years, with search engines replacing libraries, OTT platforms replacing theatres, online music streaming replacing CDs and DVDs, and so on.

He emphasized the importance of accessing real-time data in day-to-day life to use the business opportunities in front of us and stated that enterprises are still behind in consuming data and creating value in real time.

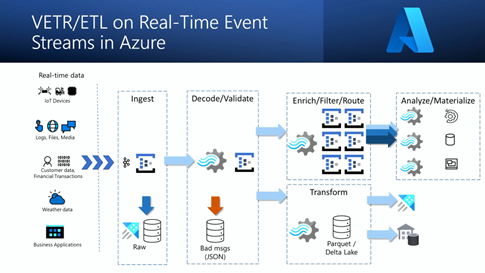

Kevin shared the below picture of Real-time Event Streams in Azure and explained how the data process happens inside the event streams in Azure in detail.

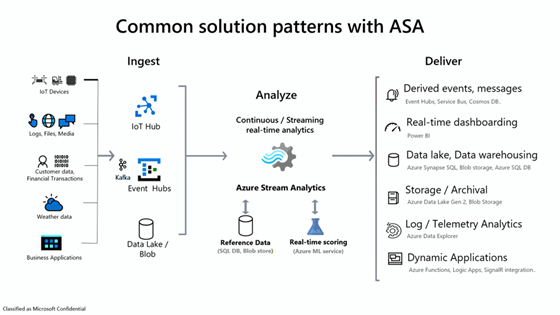

When real-time data is obtained from sources such as IoT devices, media, financial transactions, weather data, and business applications, it is ingested into the event streams in Azure. From there, the data is decoded and validated with the use of schemas. It is then enhanced, transformed, and routed to a destination based on the requirement.
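The stages described here (ingest, decode and validate against a schema, enhance, route) can be sketched as a small pipeline in plain Python. Everything specific in it is an assumption for illustration: the schema, the field names, the temperature threshold, and the destination names. It does not use any Azure SDK.

```python
import json

# Hypothetical schema: required field name -> expected Python type.
SCHEMA = {"device_id": str, "temperature_c": float}


def decode_and_validate(raw: bytes):
    """Decode JSON and check it against SCHEMA; return None if it does not conform."""
    try:
        event = json.loads(raw)
    except json.JSONDecodeError:
        return None
    if not isinstance(event, dict):
        return None
    for name, expected_type in SCHEMA.items():
        if not isinstance(event.get(name), expected_type):
            return None
    return event


def enhance(event: dict) -> dict:
    """Add a derived field without mutating the original event."""
    return {**event, "temperature_f": event["temperature_c"] * 9 / 5 + 32}


def pick_destination(event: dict) -> str:
    """Route hot readings to alerts and everything else to the archive."""
    return "alerts" if event["temperature_c"] > 30.0 else "archive"


def process(raw_events):
    """Run every raw event through validate, enhance, and route."""
    destinations = {"alerts": [], "archive": [], "rejected": []}
    for raw in raw_events:
        event = decode_and_validate(raw)
        if event is None:
            destinations["rejected"].append(raw)
            continue
        enhanced = enhance(event)
        destinations[pick_destination(enhanced)].append(enhanced)
    return destinations


raw_events = [
    b'{"device_id": "d1", "temperature_c": 40.0}',
    b'{"device_id": "d2", "temperature_c": 20.0}',
    b'{"device_id": "d3", "temperature_c": "hot"}',
]
routed = process(raw_events)
```

Keeping rejected events in their own destination, rather than dropping them, makes schema problems visible downstream.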

Azure Stream Analytics:

Ajeta Singhal took over the session from here to explain the Azure Stream Analytics and Microsoft Fabric Event Streams.

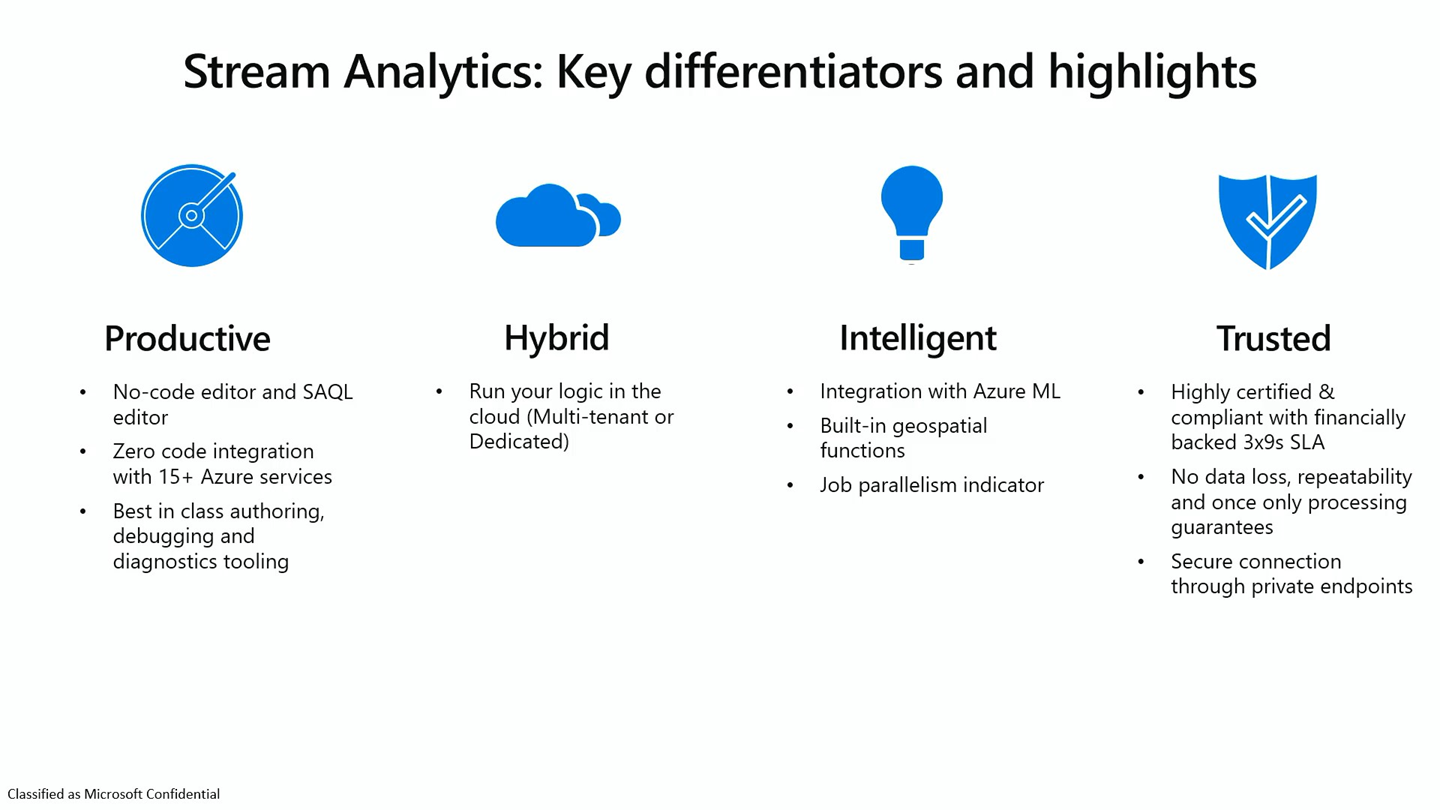

She explained that Azure Stream Analytics provides higher productivity as it has a no-code editor, zero code integration with 15+ Azure services, integration with Azure ML, and a secure connection through private endpoints.

She went on to share how an Azure Stream Analytics workflow is set up and elaborated on how data can be streamed in real time with it.

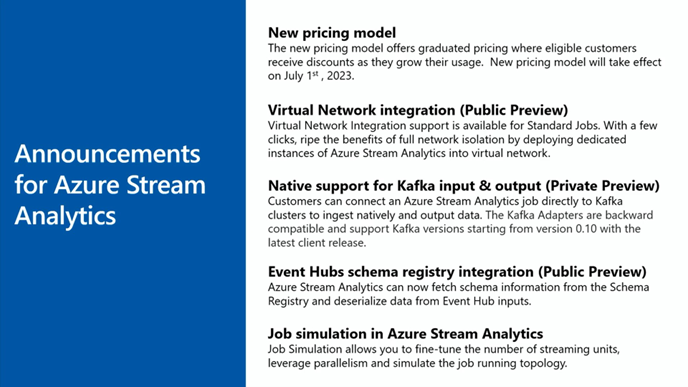

Later, she discussed the announcements for Azure Stream Analytics as shown below:

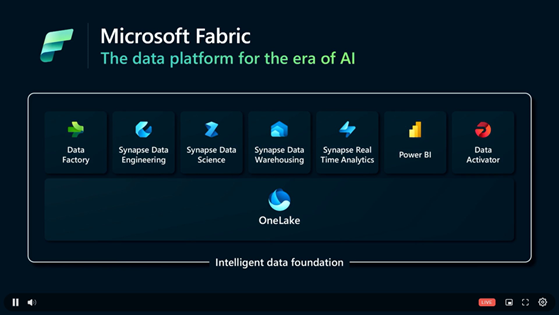

Microsoft Fabric event streams

In the final stage of the session, Ajeta explained Microsoft Fabric, a SaaS-based one-stop tool to capture, transform, and route real-time event stream data to destinations with a no-code experience. Just as Microsoft Office 365 covers the needs of a regular workday in the office, Microsoft Fabric can be considered an Office 365 for data, as it comes with Data Factory, Synapse Data Engineering, Data Science, and Real-time Analytics, and offers the ability to build reports in Power BI, along with Data Activator.

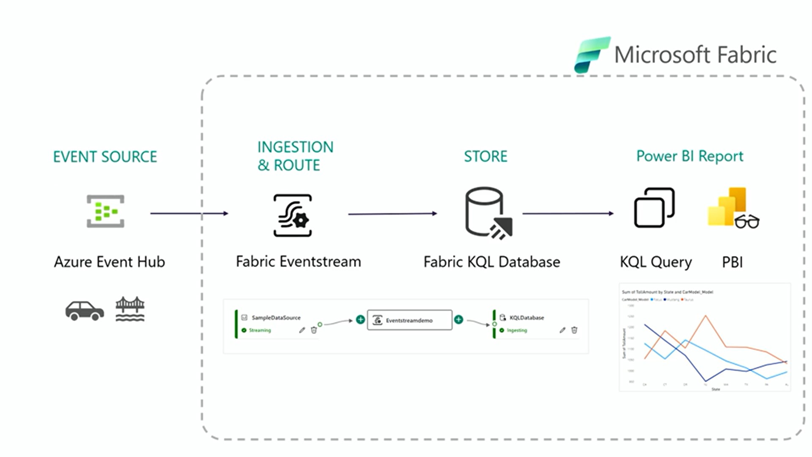

Finally, the session ended with a fascinating demo of Synapse Real-time Analytics, using data on cars passing through tolls in different states and the amount paid at each toll. As the flow chart in the session showed, the real-time events are collected from the event source and routed to the Eventstream in Fabric. The same data can be stored in a separate table in a KQL Database and queried as required.
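The kind of question such a query answers, for example how much was paid per state in each time window, can be sketched in plain Python. This is not KQL and not the demo's actual query; the event shape (timestamp in seconds, state code, amount), the 60-second window, and the sample values are all assumptions for illustration.

```python
from collections import defaultdict


def toll_totals(events, window_seconds: int = 60) -> dict:
    """Sum toll amounts per (window start, state) over fixed, non-overlapping windows."""
    totals = defaultdict(float)
    for timestamp, state, amount in events:
        # Align the timestamp to the start of its window.
        window_start = timestamp - (timestamp % window_seconds)
        totals[(window_start, state)] += amount
    return dict(totals)


# Hypothetical toll events: (seconds since start, state, amount paid).
toll_events = [
    (5, "WA", 4.0),
    (42, "WA", 6.0),
    (50, "OR", 3.5),
    (65, "WA", 4.0),
]
totals = toll_totals(toll_events)
```

The first two Washington events fall in the same window and are summed, while the event at second 65 opens a new window.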

That’s a wrap for Day 2!