#1: Keynote

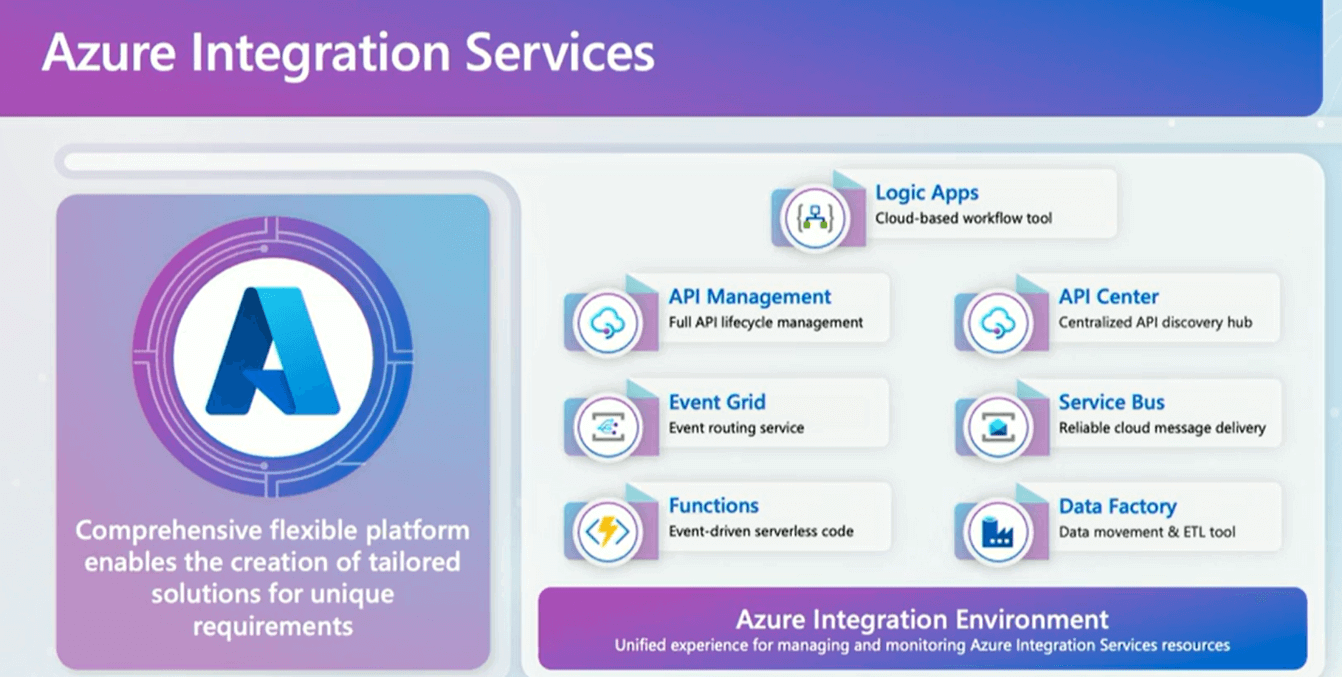

Slava Koltovich, Principal GPM at Microsoft, delivered the keynote at Integrate 2024. He began by discussing Azure Integration Services (AIS), a comprehensive platform enabling the creation of tailored solutions to meet unique requirements.

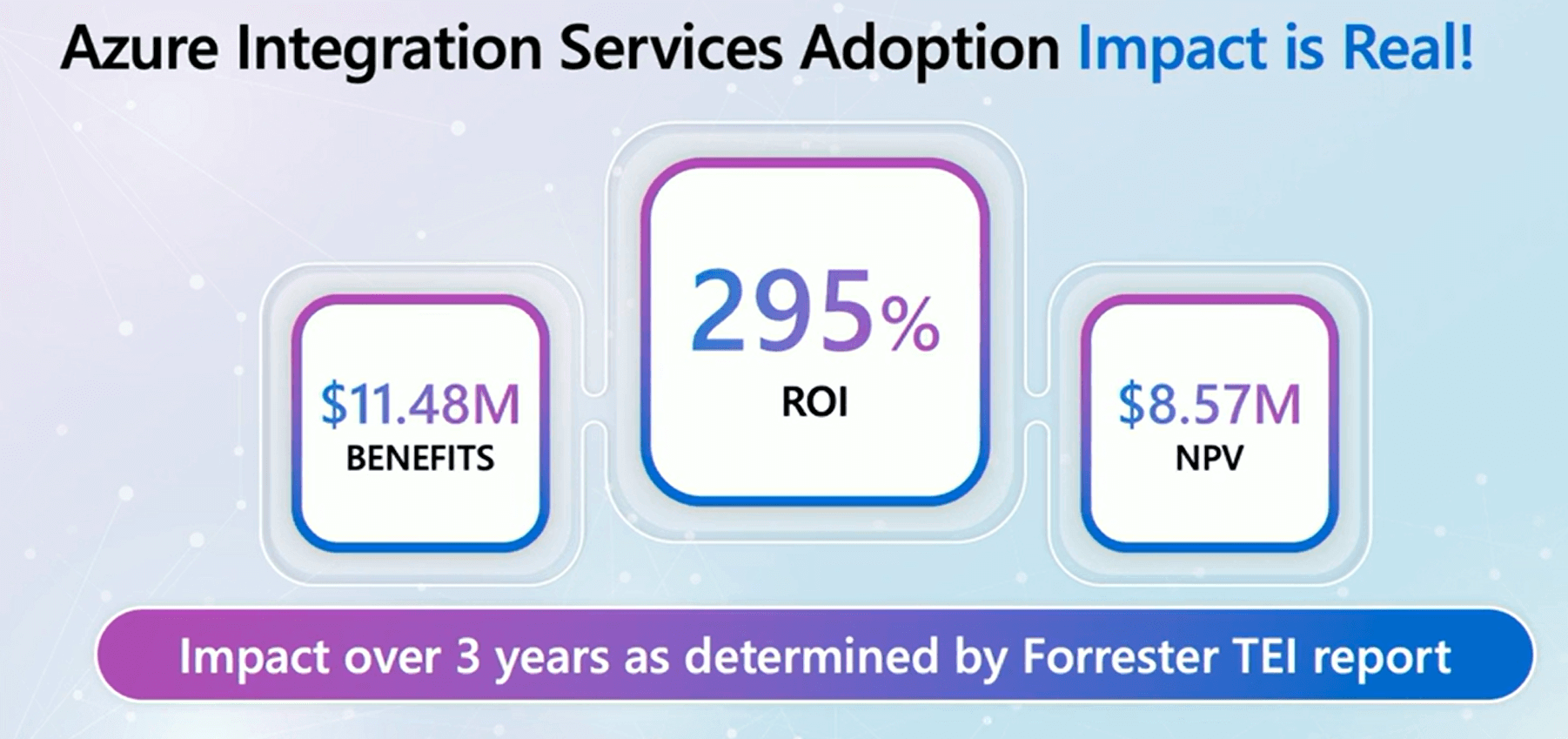

The primary advantage of AIS lies in its focus on security and compliance. With nearly 800 connectors, AIS facilitates connections to numerous data sources. Microsoft invests billions annually in security, with thousands of personnel dedicated to it. He noted that the return on investment (ROI) for AIS is approximately 300%.

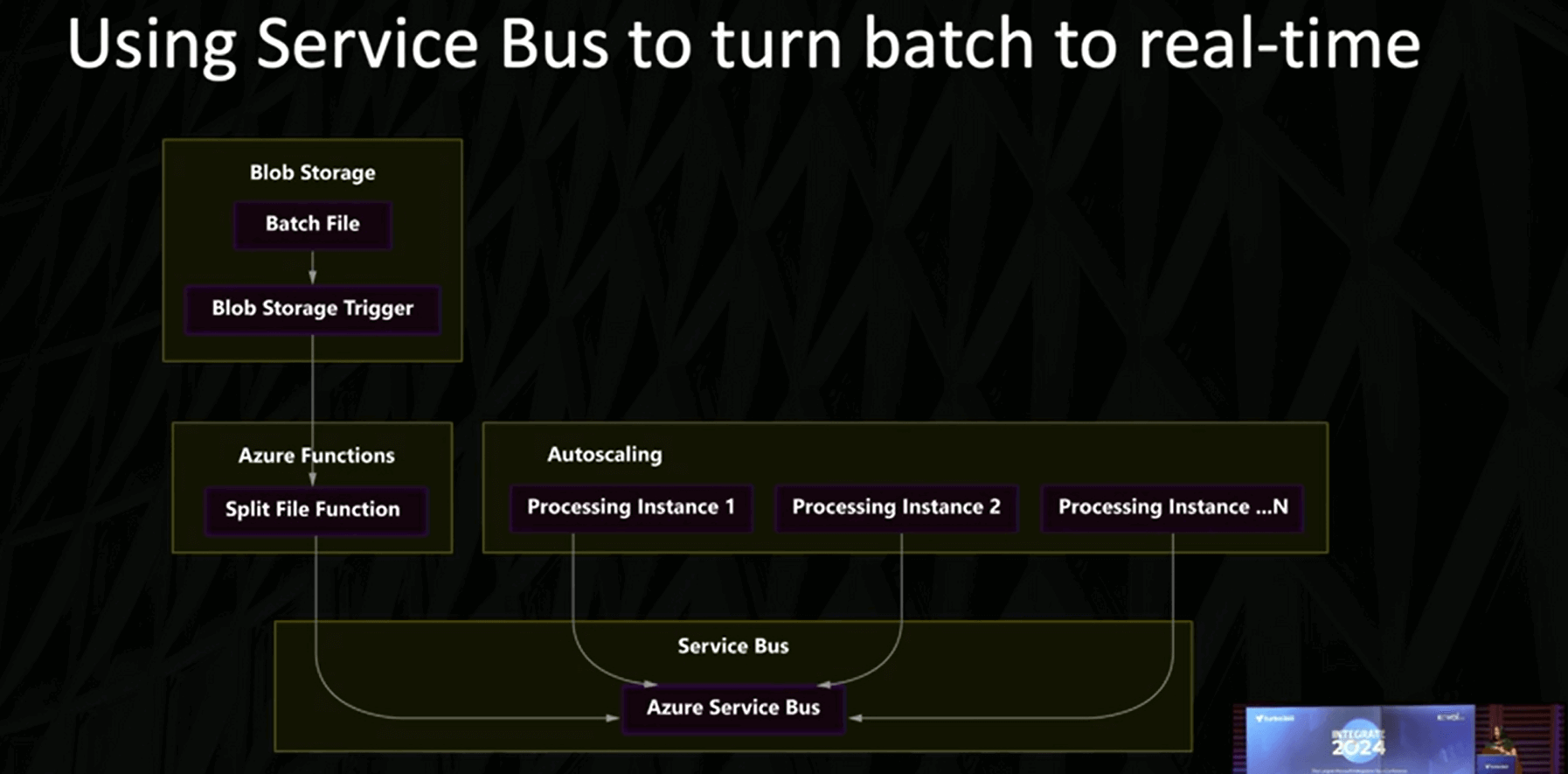

He then introduced David Whitney, Director of Architecture at New Day, a company specializing in credit cards. Whitney explained how the company uses Azure API Management (APIM) and Service Bus Premium in its applications to scale workloads dynamically, with APIM handling credential validation and traffic routing.

Slava then announced new features in Azure:

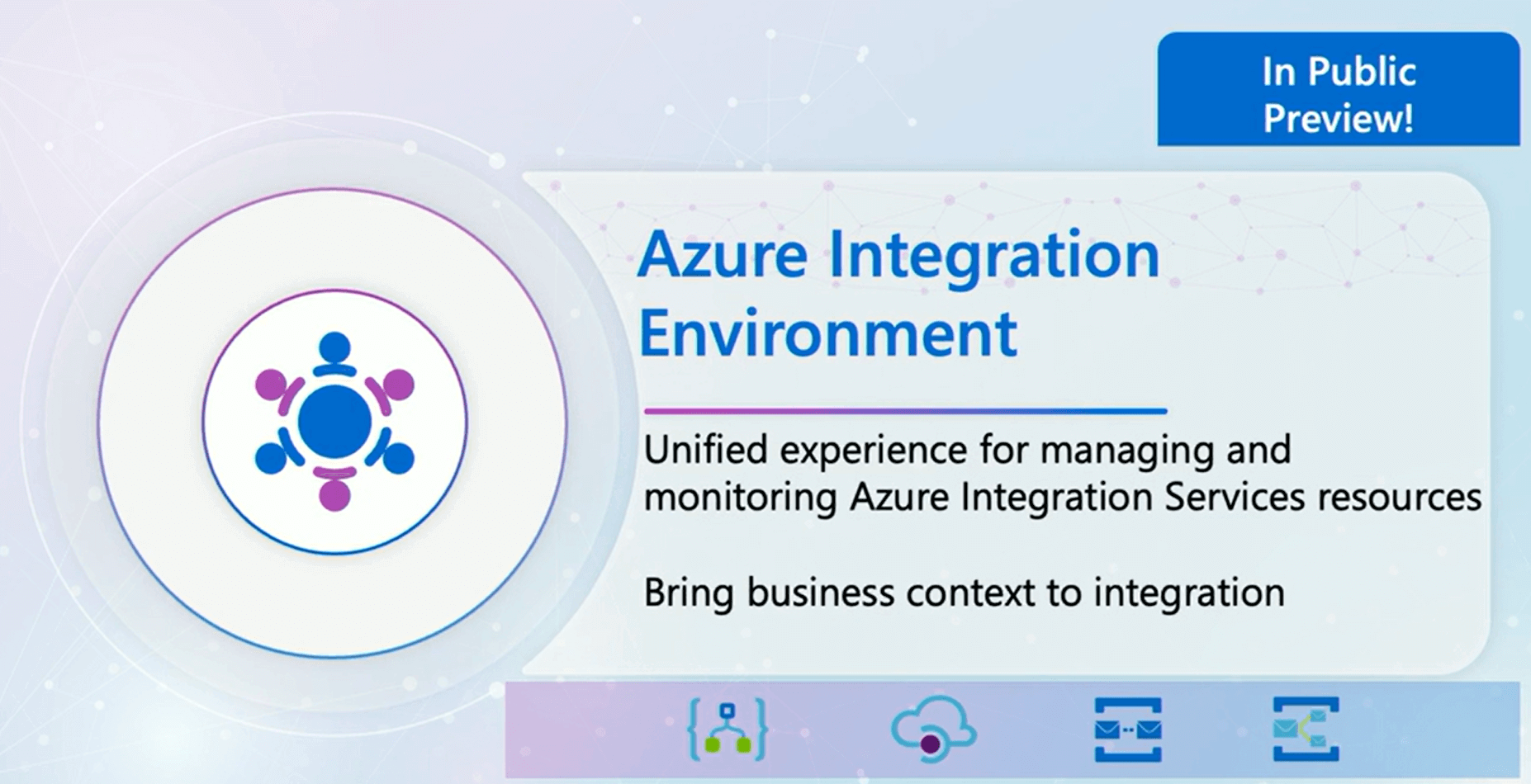

- Azure Integration Environment is now available in public preview.

- End-to-End monitoring for AIS is also in public preview.

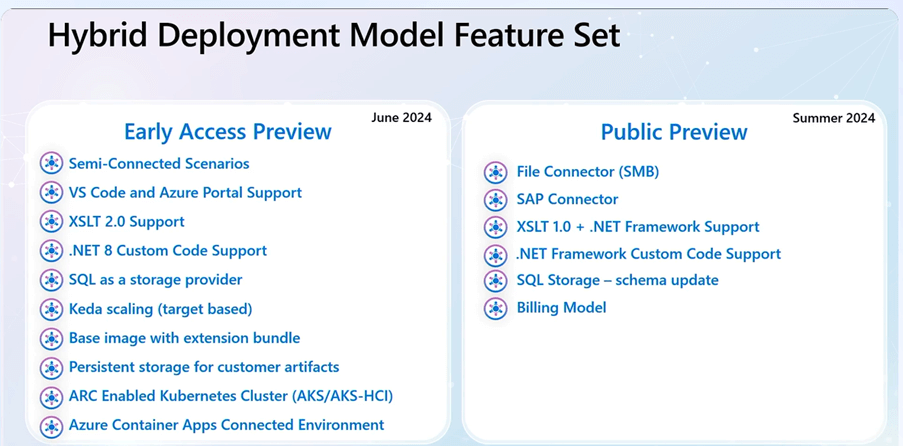

- Hybrid deployment of Azure Logic Apps Standard will be available in Early Access Preview from June 2024 and in public preview in summer 2024.

- Business process tracking stage status and enhanced token picker support.

- Streamlined integration development with AI.

- Logic Apps Standard now supports zero-downtime deployment using slots, improving developer productivity.

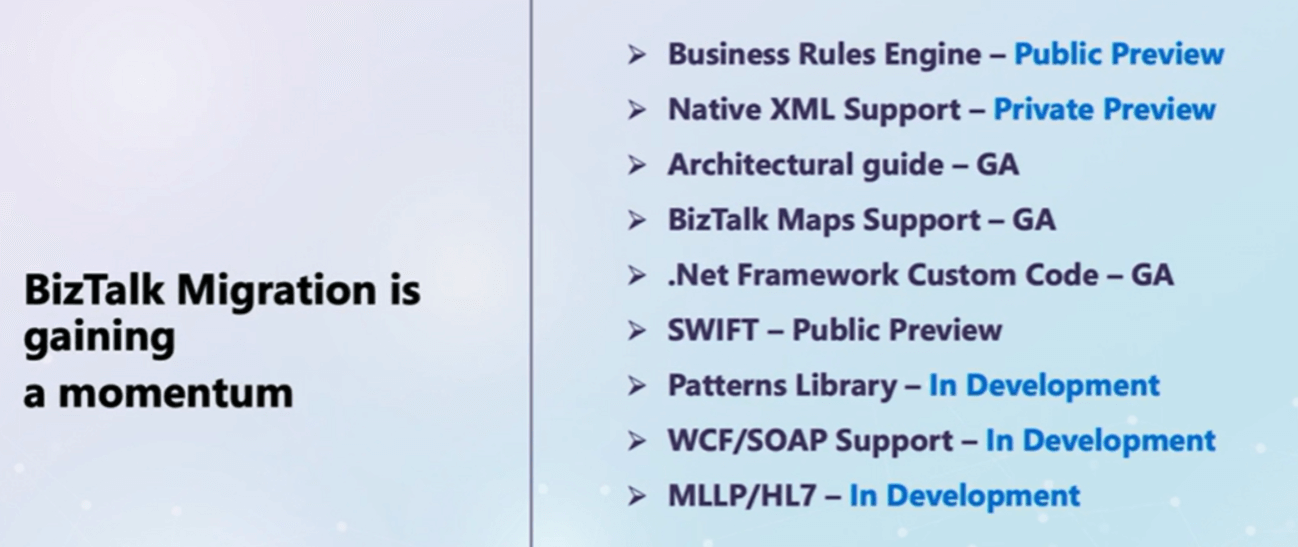

- BizTalk migration gains momentum with the release of new features.

He mentioned ongoing work on a testing framework for Logic Apps and invited Kevin Lam to introduce the Messaging services to be discussed in upcoming sessions. He then explained how Logic Apps can be used to build AI-powered applications with Azure AI Search and Azure OpenAI endpoints, illustrating how Logic Apps actions can replace lengthy Python code for data ingestion and retrieval using the RAG pattern.
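To make the RAG pattern mentioned above concrete, here is a minimal Python sketch of its two halves: ingestion (index the documents) and retrieval (find the most relevant document and place it in the prompt). It is not the code shown in the keynote. The bag-of-words similarity is a toy stand-in for the embeddings and vector search that Azure AI Search and Azure OpenAI would provide, and all function names and sample texts are assumptions made for illustration.

```python
import math
import re
from collections import Counter


def tokenize(text):
    """Lowercase the text and split it into word tokens."""
    return re.findall(r"[a-z0-9]+", text.lower())


def ingest(documents):
    """Ingestion: store each document with a bag-of-words vector (toy stand-in for embeddings)."""
    return [(doc, Counter(tokenize(doc))) for doc in documents]


def cosine(a, b):
    """Cosine similarity between two token-count vectors."""
    dot = sum(a[t] * b[t] for t in a if t in b)
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0


def retrieve(index, question, top_k=1):
    """Retrieval: rank the indexed documents by similarity to the question."""
    query = Counter(tokenize(question))
    ranked = sorted(index, key=lambda item: cosine(query, item[1]), reverse=True)
    return [doc for doc, _ in ranked[:top_k]]


def build_prompt(question, context):
    """Augmentation: ground the prompt in the retrieved context."""
    joined = "\n".join(context)
    return f"Answer using only this context:\n{joined}\n\nQuestion: {question}"


# Hypothetical sample documents, not taken from the session.
index = ingest([
    "Service Bus Premium supports dynamic scaling of messaging workloads.",
    "Logic Apps Standard supports zero downtime deployment using slots.",
])
question = "How do I get zero downtime deployment?"
context = retrieve(index, question)
prompt = build_prompt(question, context)
```

In a real solution the resulting prompt would be sent to a language model endpoint; that call is left out here to keep the sketch self-contained.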

He concluded by highlighting how customers use APIM as a proxy between their Azure OpenAI endpoints and applications.

#2: Accelerating developer productivity with Azure Logic Apps

Wagner Silveira kicked off the session by outlining the investments dedicated to enhancing the Azure Logic Apps Standard tooling, aimed at boosting developer productivity and streamlining DevOps processes in alignment with common developer activities. He highlighted improvements in the onboarding process, where automation now handles installation and updates of dependent extensions, ensuring they stay up to date. Additionally, optimizations have been made to reduce boot-up time, and workspace support has been implemented to improve file organization.

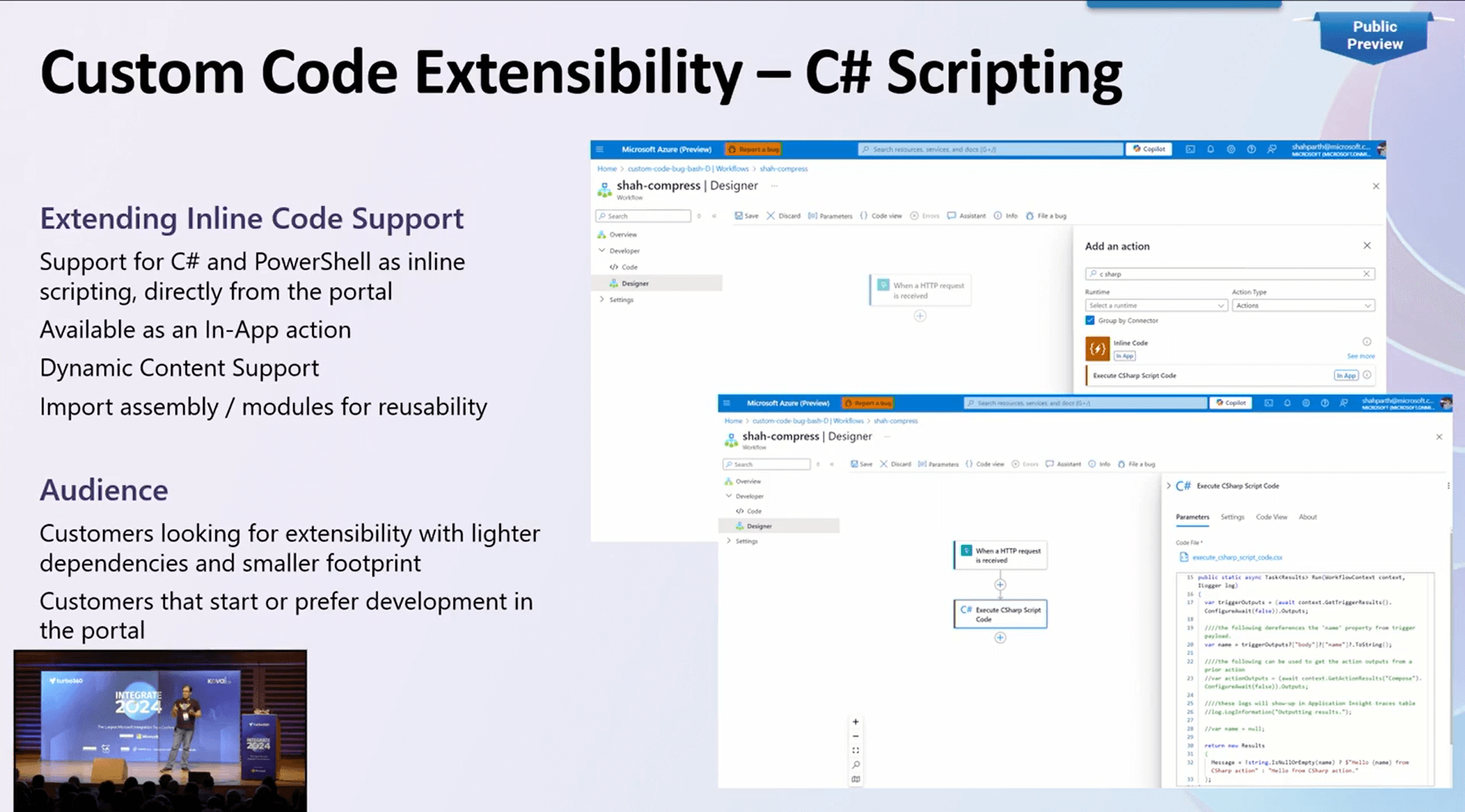

Transitioning to a discussion on custom code extensibility, Wagner elaborated on recent enhancements enabling customers to select their preferred target framework when initiating projects, accommodating both legacy and modern scenarios with .NET 8 support. Inline scripting capabilities have been expanded to include C# and PowerShell, catering to customers who prefer lighter dependencies. A live demonstration showcased a logic app workflow using C# inline scripting to compress files upon receiving an HTTP request.
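The demo used C# inline scripting, and its code is not reproduced in this recap. As a rough illustration of the same idea, the Python sketch below packs the files from a request payload into a single in-memory ZIP archive. The `compress_files` function and the sample payload are hypothetical.

```python
import io
import zipfile


def compress_files(files):
    """Pack a {filename: bytes} mapping into one in-memory ZIP archive."""
    buffer = io.BytesIO()
    with zipfile.ZipFile(buffer, mode="w", compression=zipfile.ZIP_DEFLATED) as archive:
        for name, content in files.items():
            archive.writestr(name, content)
    return buffer.getvalue()


# Hypothetical payload standing in for the files received over HTTP.
payload = {"order.txt": b"item=42\n" * 100, "notes.txt": b"rush delivery"}
zipped = compress_files(payload)

# Read the archive back to confirm the round trip.
with zipfile.ZipFile(io.BytesIO(zipped)) as archive:
    names = archive.namelist()
    restored = archive.read("order.txt")
```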

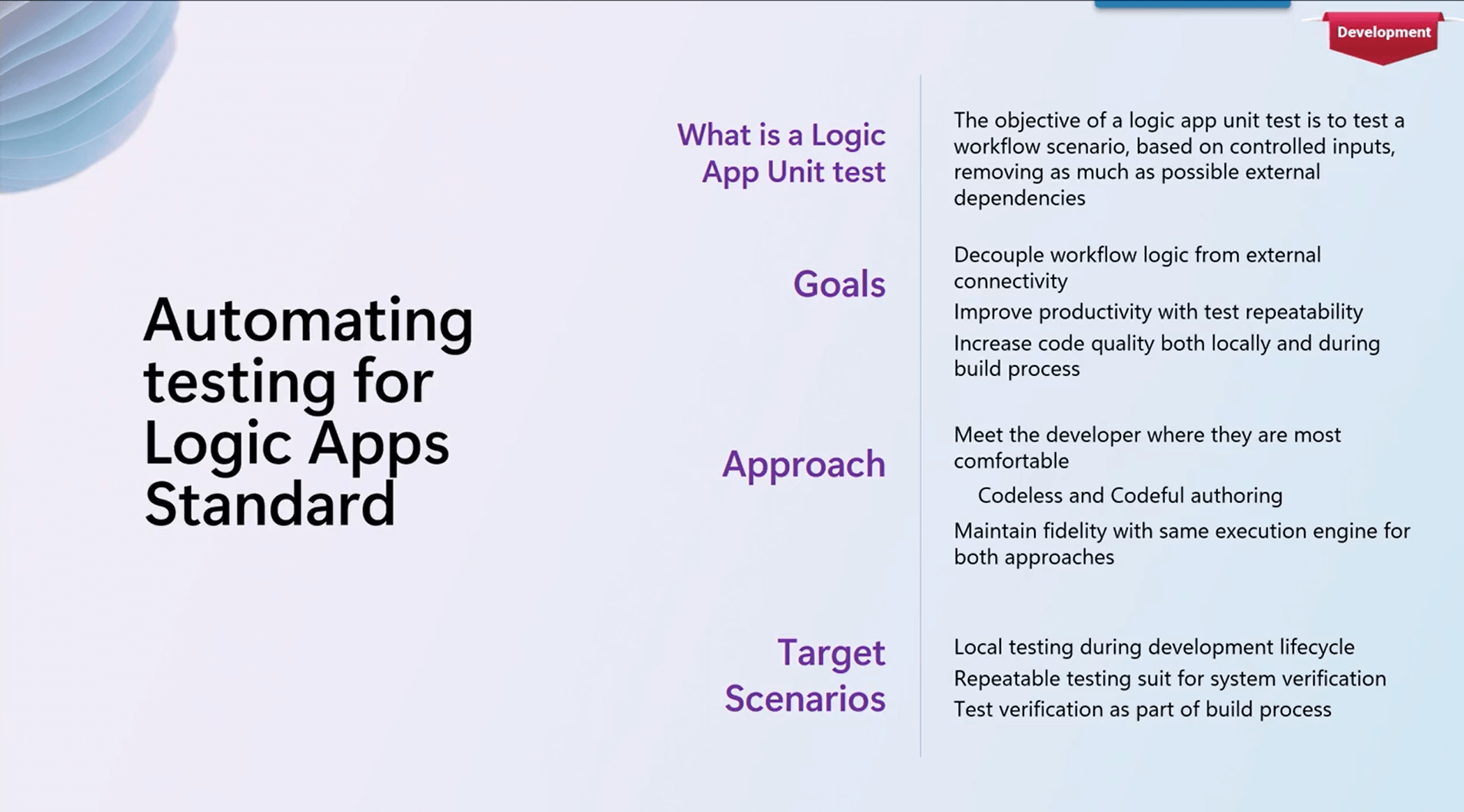

The session progressed with Wagner introducing automated testing for Logic Apps Standard, emphasizing the objective of enhancing code quality through a repeatable testing suite for systematic verification. Two approaches, Codeless and Codeful, were proposed for automated testing of Logic Apps Standard.

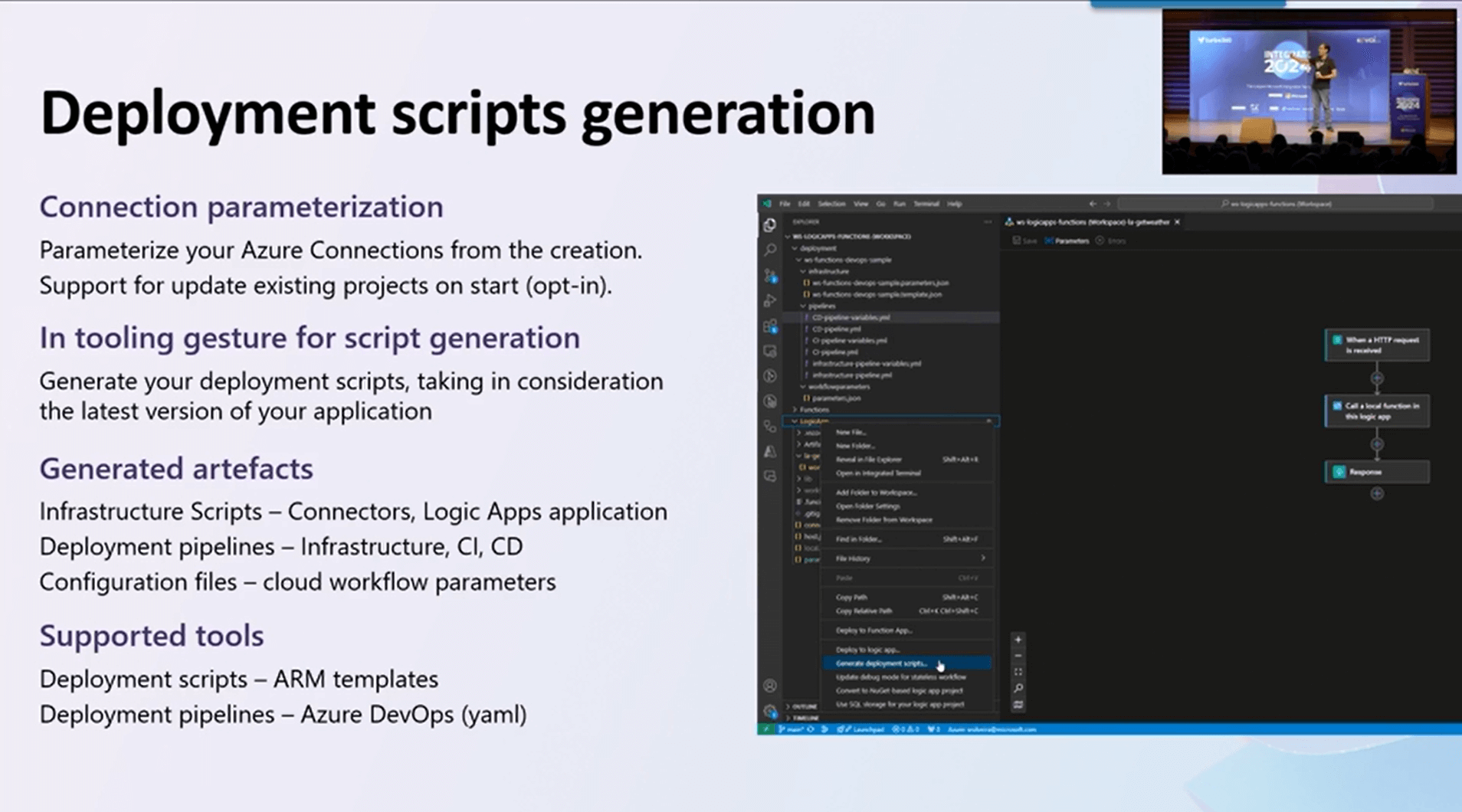

Regarding deployment flow, investments have been made in generating deployment scripts, from parameterizing Azure connections during workflow creation to producing artefacts through in-tooling gestures. These scripts can be assigned to slots without disrupting production workflows, facilitating zero-downtime deployments.

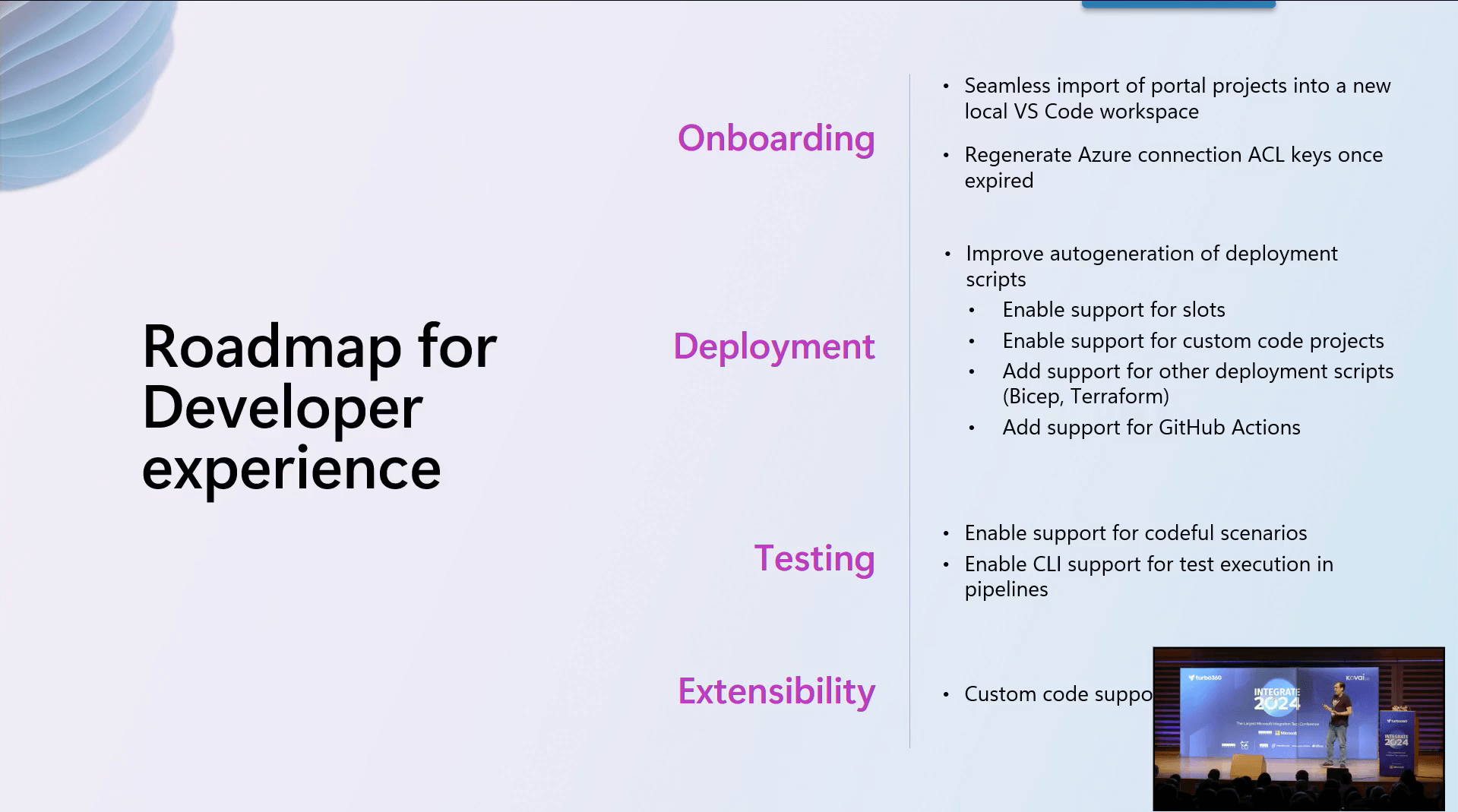

Wrapping up the session, Wagner outlined the roadmap for future releases, emphasizing ongoing endeavours aimed at continuously improving the developer experience across all development activities.

#3: Real-world with Logic App Workspace

Bill Chesnut opened the session by exploring how to incorporate custom code into Azure Logic Apps, focusing particularly on Azure Logic Apps Standard. The discussion underscored the significance of custom code in handling tasks beyond the native capabilities of Logic Apps, such as PGP encryption/decryption and tailored date formatting. Notably, functions are independently deployed and invoked from Logic Apps.
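As an illustration of why tailored date formatting calls for custom code, the Python sketch below normalizes the same input string from different regions into ISO 8601. The session used .NET code; the region-to-format table and the `to_iso_date` helper here are assumptions made for this example.

```python
from datetime import datetime

# Hypothetical mapping of regions to the date layout they typically use.
REGION_FORMATS = {
    "en-US": "%m/%d/%Y",  # month first
    "en-AU": "%d/%m/%Y",  # day first
    "de-DE": "%d.%m.%Y",  # day first, dot separated
}


def to_iso_date(value, region):
    """Parse a region-specific date string and return it as ISO 8601 (YYYY-MM-DD)."""
    parsed = datetime.strptime(value, REGION_FORMATS[region])
    return parsed.date().isoformat()


# The same text means two different days depending on the region.
us = to_iso_date("03/04/2024", "en-US")
au = to_iso_date("03/04/2024", "en-AU")
```

Here `us` is `2024-03-04` and `au` is `2024-04-03`, which is exactly the kind of disparity that has to be resolved before data moves between systems.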

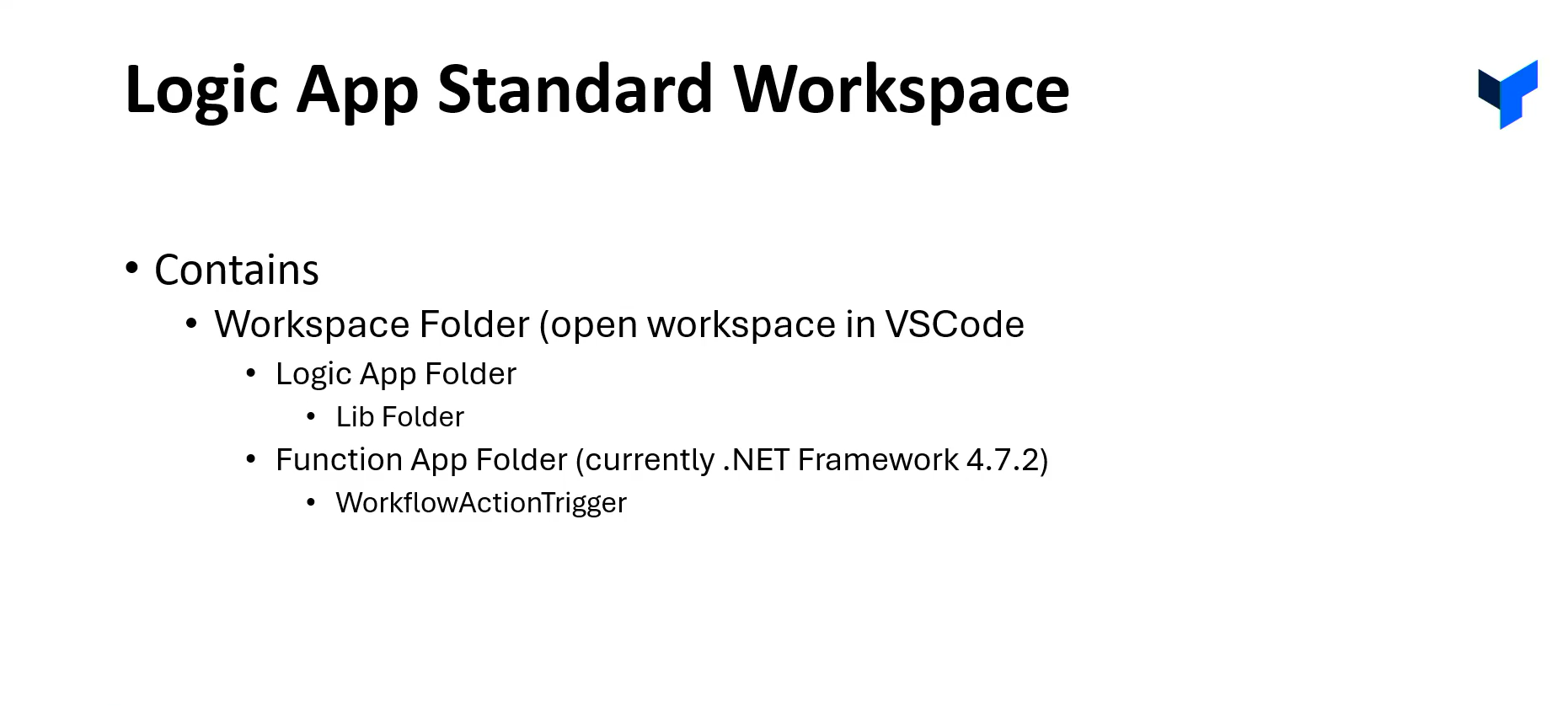

Azure Logic Apps Standard brings forth a distinctive folder structure, where the creation of a workspace aids in the management of functions and custom code. Function deployment mandates a separate process, ensuring proper binding and project construction before integration with Logic Apps.

Custom code emerges as a solution to address challenges like date formatting disparities across different regions. Moreover, the integration with third-party services often entails the management of API tokens and custom code for efficient token lifecycle management.
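Token lifecycle management usually comes down to caching a token and refreshing it shortly before it expires. The Python sketch below shows that idea under stated assumptions: `TokenCache`, the `fetch_token` callback, and the 60-second refresh margin are hypothetical and do not come from the session, which used .NET.

```python
import time


class TokenCache:
    """Cache an API token and fetch a new one shortly before it expires."""

    def __init__(self, fetch_token, clock=time.monotonic, refresh_margin=60):
        self._fetch_token = fetch_token      # returns (token, lifetime_seconds)
        self._clock = clock                  # injectable so the cache can be tested
        self._refresh_margin = refresh_margin
        self._token = None
        self._expires_at = 0.0

    def get(self):
        now = self._clock()
        # Refresh when no token is cached or the cached one is about to expire.
        if self._token is None or now >= self._expires_at - self._refresh_margin:
            token, lifetime_seconds = self._fetch_token()
            self._token = token
            self._expires_at = now + lifetime_seconds
        return self._token


# Fake token endpoint and fake clock, used only to exercise the cache.
calls = []


def fake_fetch():
    calls.append(1)
    return f"token-{len(calls)}", 3600


fake_now = [0.0]
cache = TokenCache(fake_fetch, clock=lambda: fake_now[0])

first = cache.get()       # fetched
fake_now[0] = 1000.0
second = cache.get()      # still valid, served from the cache
fake_now[0] = 3550.0
third = cache.get()       # inside the refresh margin, fetched again
```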

Chesnut walked through the complexities associated with local debugging and deployment, including issues with local settings files and the management of diverse environment configurations, particularly with managed identities.

Deploying Azure Logic Apps with Azure DevOps involves setting up a Logic Apps Standard workspace, configuring build and release pipelines to compile and deploy custom code and functions, and managing environment-specific settings using Bicep or ARM templates. This streamlined approach ensures efficient deployment and integration with third-party services.

The challenges with region-specific date formatting, the convoluted folder structure of Logic Apps Standard, and the difficulties with token management and deployment procedures were also discussed. Deployment problems are made worse by the complexity of function deployment and the difficulties in managing local environments.

Chesnut wrapped up the session with a concise summary of the essentials of tackling challenges within Logic Apps actions using .NET code.

#4: Latest developments in Azure Logic Apps

Fresh from Kent Weare’s (Principal Product Manager at Microsoft) insightful session at INTEGRATE 2024, let’s explore the exciting new features that are taking Azure Logic Apps to the next level! These advancements empower you to build even more robust, efficient, and business-centric integration solutions.

Integration Environments and Business Process Tracking: Orchestration with Clarity

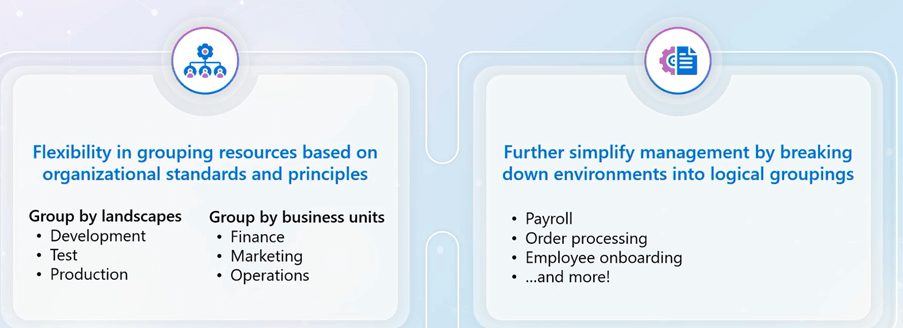

Gone are the days of managing a scattered landscape of Logic Apps resources. Integration Environments (Public Preview) introduce a game-changer: the ability to group your Logic Apps, connectors, and other assets into logical applications. This streamlined organization makes monitoring, troubleshooting, and lifecycle management a breeze.

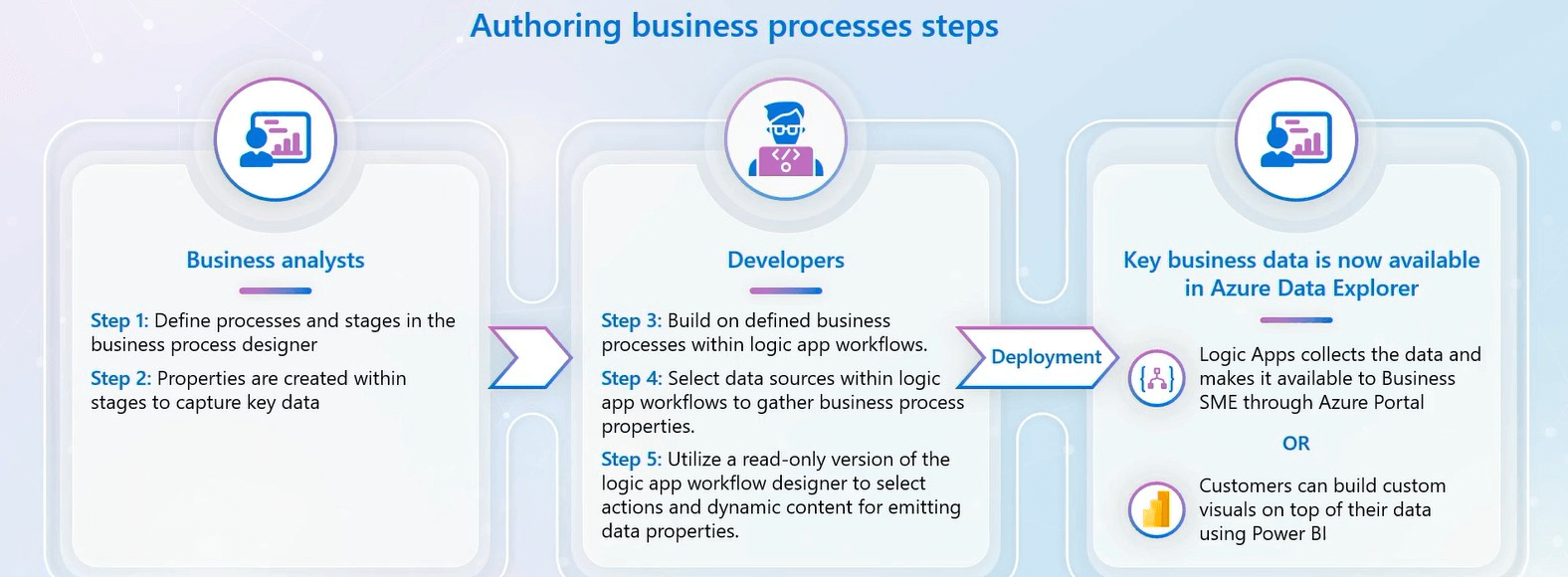

But the magic doesn’t stop there. Business Process Tracking bridges the gap between technical workflows and real-world business processes. This powerful feature allows you to define stages within your Logic Apps workflows and capture key data points along the way. Imagine this: a clear visualization of your data flow, from trigger to action. Business analysts and architects can now gain valuable insights, pinpoint potential bottlenecks, and ensure your integrations are tightly aligned with business objectives.

With the integration environment, you can further group resources together in the form of an application, generally relying on specific business processes or projects (e.g., payroll, order processing, onboarding).

Recent Introduction: End-to-End AIS Monitoring

This feature provides insights tailored to business needs along with monitoring capabilities. It supports Logic Apps, Service Bus, and API Management. Based on Application Insights, these insights are exposed through a workbook for visualization.

Business Process Tracking also brings business subject matter experts into the solution development process, providing them with more insights. Business analysts and architects can define various stages and data properties to collect key data at the right time. Once the business process is defined, developers can map the conceptual business process to an underlying technical solution, ensuring reliable data collection and visualization in Azure Data Explorer.

Key benefits include:

- A top-level Azure resource for business process tracking.

- Greater control over data.

- Establishment of logs.

- Adding metadata through tags.

Additionally, the business process stage status feature, which was a top-requested feedback item, lets users know the status of each stage (whether succeeded or failed). This gives full control over exceptions, whether technical or business-related.
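To picture what stage status adds, the Python sketch below models a business process run as a list of stages, each carrying a status and the data properties collected for it. This is only an illustrative data model: the class names, fields, and sample values are assumptions and do not reflect how Business Process Tracking stores its data.

```python
from dataclasses import dataclass, field


@dataclass
class Stage:
    name: str
    status: str = "pending"  # "pending", "succeeded" or "failed"
    properties: dict = field(default_factory=dict)


@dataclass
class BusinessProcessRun:
    transaction_id: str
    stages: list

    def record(self, stage_name, succeeded, **properties):
        """Set the status of a stage and attach the data captured for it."""
        for stage in self.stages:
            if stage.name == stage_name:
                stage.status = "succeeded" if succeeded else "failed"
                stage.properties.update(properties)
                return
        raise KeyError(stage_name)

    def failed_stages(self):
        """Names of the stages that need attention."""
        return [stage.name for stage in self.stages if stage.status == "failed"]


# Hypothetical order-processing run.
run = BusinessProcessRun(
    "order-1001",
    [Stage("Received"), Stage("Validated"), Stage("Shipped")],
)
run.record("Received", True, customer="Contoso")
run.record("Validated", False, reason="missing address")
failed = run.failed_stages()
```

A failed stage can carry either a technical or a business reason in its properties, which mirrors the point above about handling both kinds of exception.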

Improvements and Updates

Significant updates have been made in various areas:

- Token picker enhancements.

- Renaming “business id” to “transaction id.”

- Workflow expression support.

- Variable support.

- Emitting Boolean values at runtime.

These updates enable linking business processes to transactions, providing a holistic view with complete flexibility to manage resources.

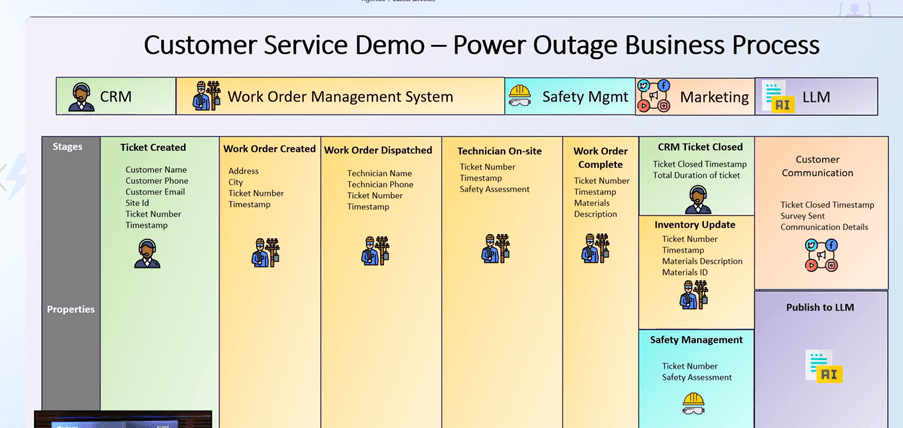

Customer Service Demo: AI and LLM Data

An interesting demo showcased how businesses can orchestrate different sets of data to various systems and visualize these data stages through the new business process monitoring capability.

Logic Apps Standard: Hybrid Deployment Model

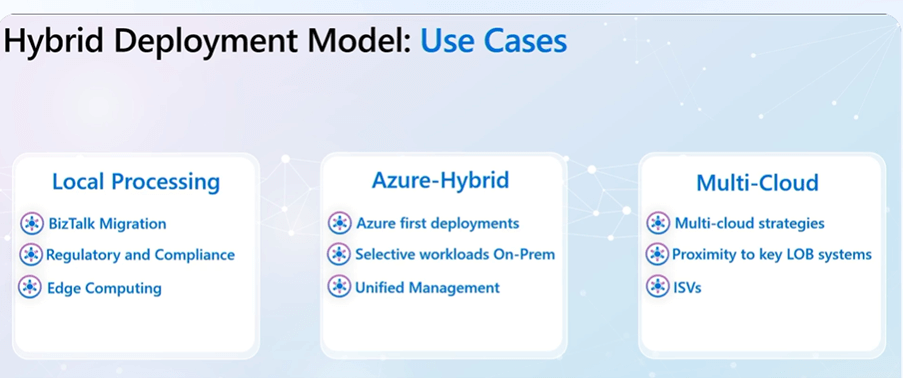

This model allows you to deploy Logic Apps Standard to customer-managed infrastructure (on-premises, private cloud, public cloud). It focuses on semi-connected scenarios, enabling local processing (workflows running on-premises), local data access (e.g., SQL), and local network access while managing everything from the Azure portal. Facilitated through Azure Arc, this feature lets you view insights such as run history and deployed apps locally.

The different use cases were also explained, as shown in the picture below.

The hybrid feature set was then covered, including what has been added and which features are available in the public preview.

Use the link below to get early access to the latest features.

https://aka.ms/HybridLAOnboarding

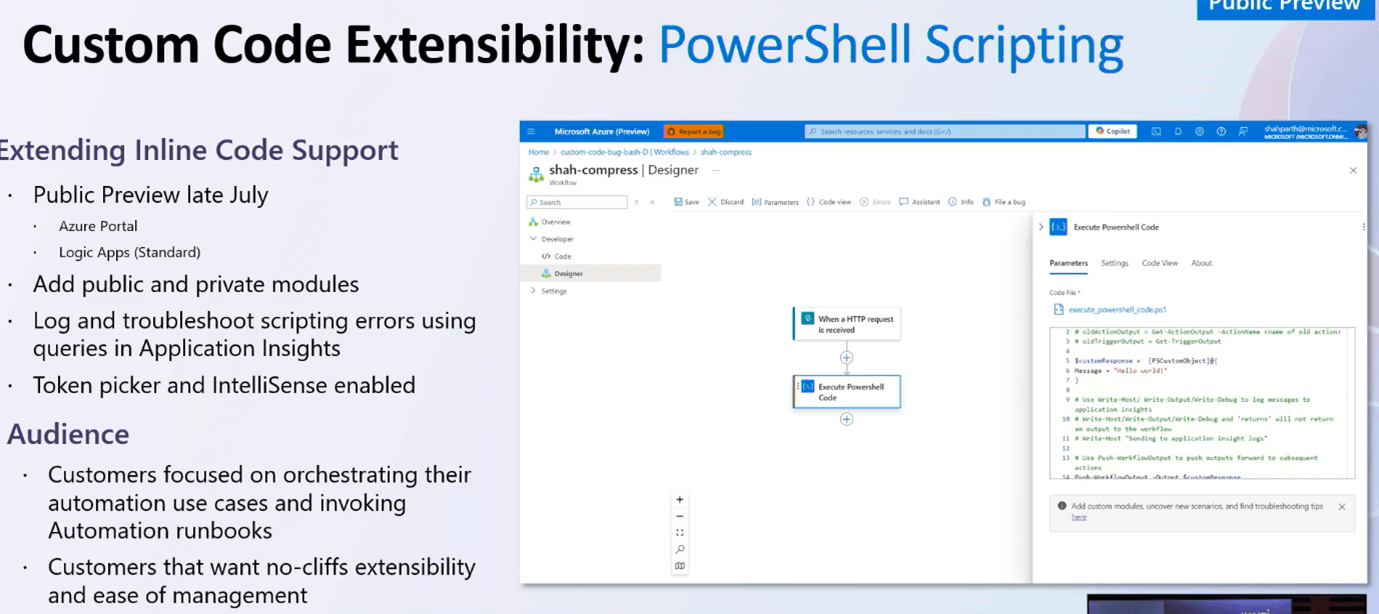

Custom Code Extensibility: PowerShell Scripting

Extending inline code support will be available in public preview by late July, enabling Azure Portal and Logic Apps (Standard) users to add public and private modules. Users can log and troubleshoot scripting errors using queries in Application Insights, with token picker and IntelliSense enabled.

Audience:

- Customers focused on orchestrating their automation use cases and invoking automation runbooks.

- Customers seeking no-cliffs extensibility and ease of management.

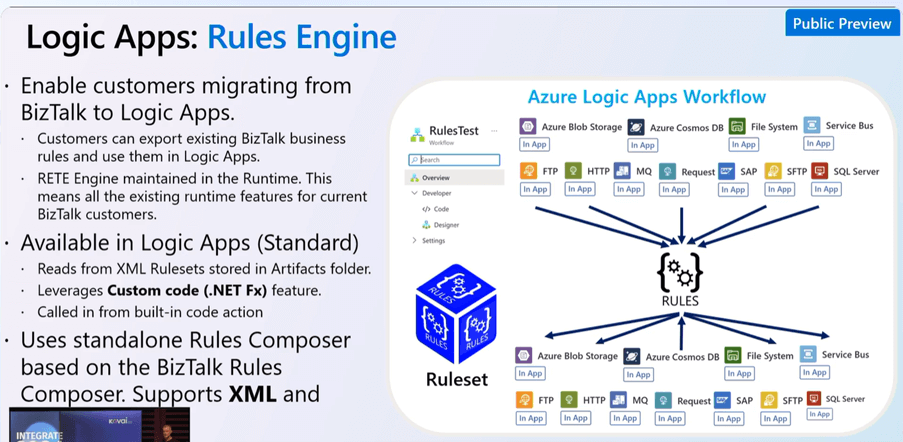

Logic Apps: Rules Engine

For customers migrating from BizTalk to Logic Apps, this feature enables exporting existing BizTalk business rules for use in Logic Apps. The RETE engine is maintained in the runtime, leveraging existing runtime features for current BizTalk customers. Available in Logic Apps (Standard), it reads from XML rulesets stored in the Artifacts folder and uses custom code (.NET Framework). The standalone Rules Composer supports XML and .NET facts.

Future Investments:

- DB Facts

- Rules scoped beyond Logic App level (Integration Account).

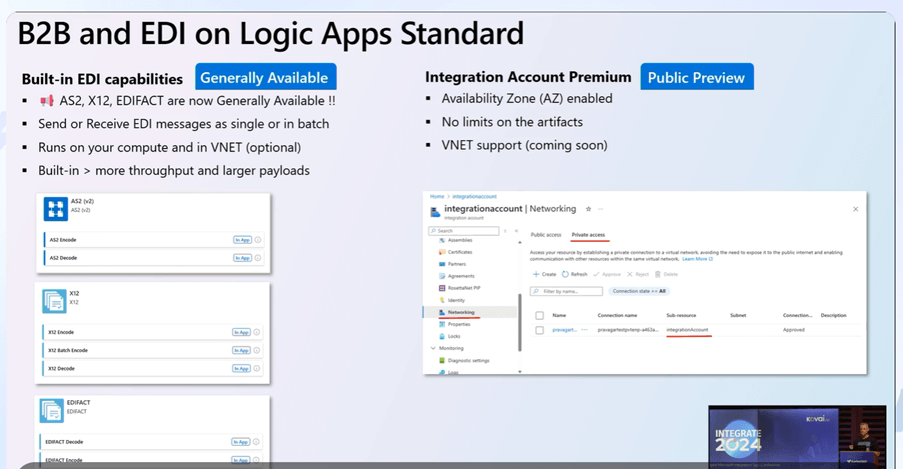

B2B and EDI on Logic Apps Standard

Built-in EDI capabilities are now available, along with an Integration Account Premium for enhanced functionality.

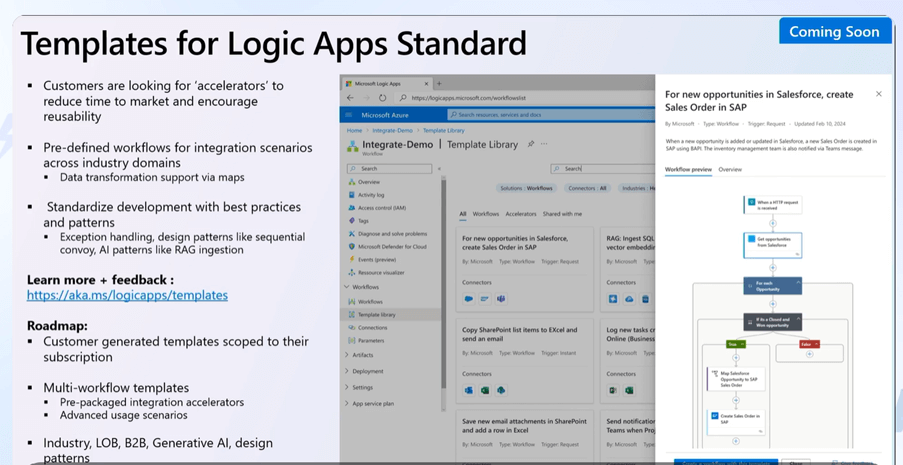

Templates for Logic Apps Standard

Templates have been introduced to streamline the development process.

With that, all the latest improvements had been covered.

For the latest updates and detailed information, see the screenshot below.

Conclusion

Kent Weare’s session at INTEGRATE 2024 highlighted the transformative advancements in Azure Logic Apps. The introduction of Integration Environments and Business Process Tracking simplifies complex workflows and bridges the gap between technical and business processes. With enhanced monitoring capabilities, hybrid deployment models, custom code extensibility, and a seamless migration path from BizTalk, Azure Logic Apps are positioned to offer unparalleled flexibility and control. These features empower businesses to create more efficient, insightful, and aligned integration solutions, paving the way for innovation and efficiency.

#5: The future of event streaming with Azure Messaging

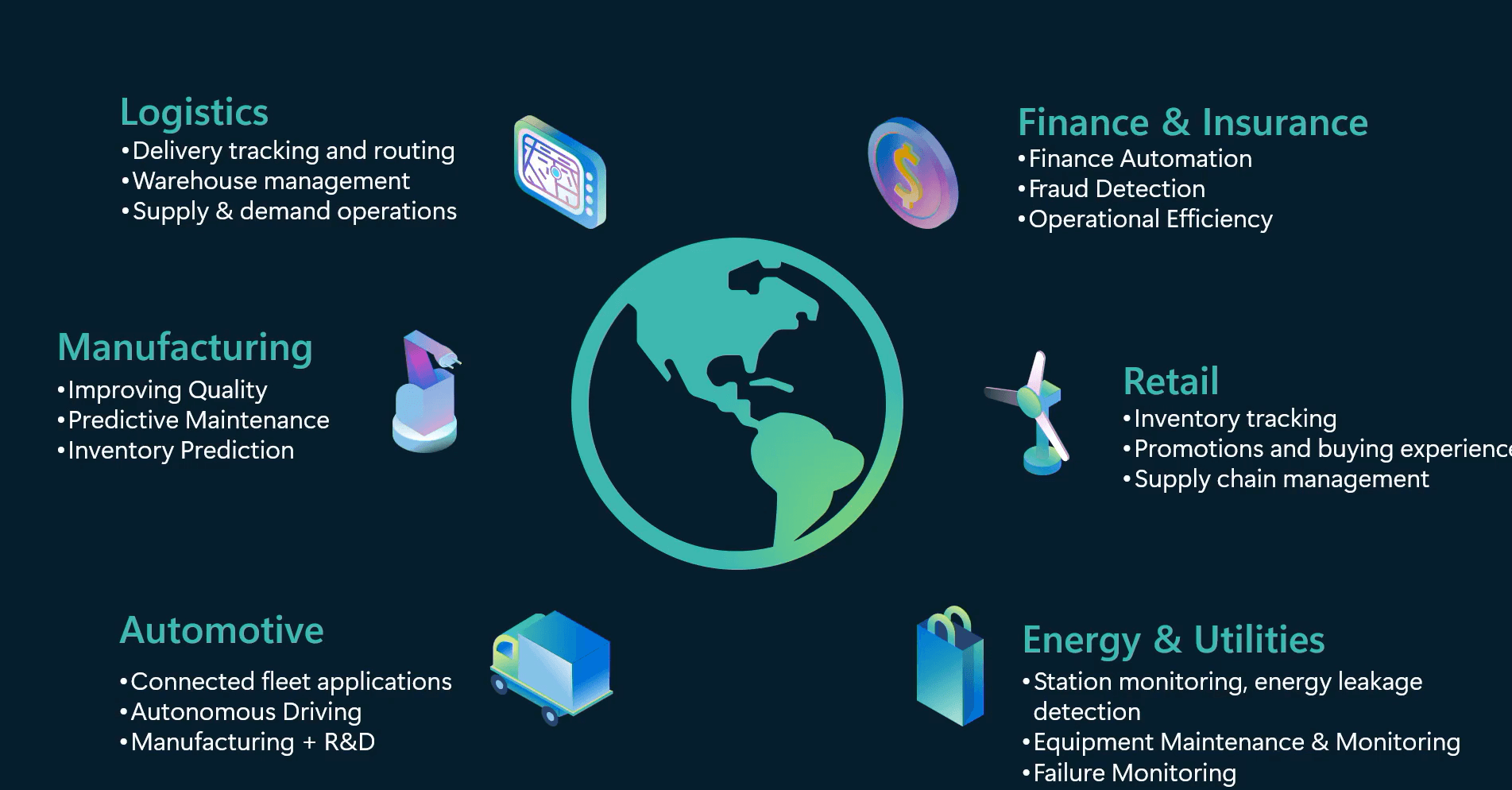

Kevin Lam (Principal PM Manager) from Microsoft kicked off the session by describing the business advantages of tapping into the torrent of events happening around us.

Real-time event data can be put to use in logistics, manufacturing, automotive, finance, retail, and energy and utilities.

Azure Event Hubs

It is the front door for capturing event data:

- High-volume, low-latency event streaming

- Multi-protocol support, including the Kafka protocol, to handle workloads seamlessly

- Native integration with Azure Stream Analytics and Azure Data Explorer

- Industry-leading performance, reliability, availability and cost-efficiency

He then covered the following aspects of Azure Event Hubs:

- Geo-replication: replication across multiple regions, available in public preview from June 17, 2024.

- Emulator: a container supported on Windows and Linux, intended for dev/test purposes only.

- Kafka: Event Hubs now offers 100% Kafka compatibility for transactions and streaming.

- Schema Registry: Event Hubs supports JSON Schema (GA) for validation, plus Protobuf (Public Preview) and Avro schemas, which are required for decoding.

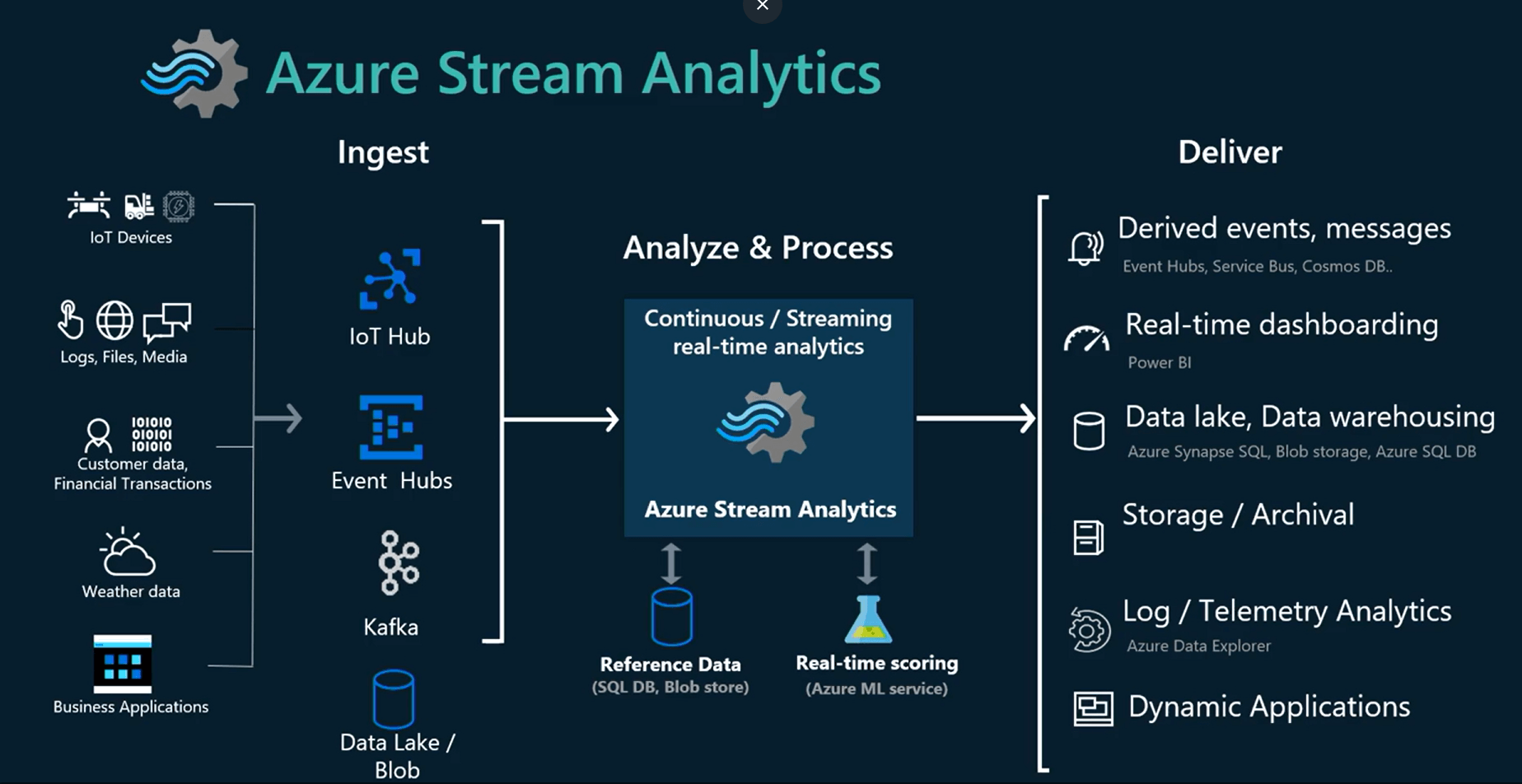

Stream Analytics

Anashesh Boisvert (Senior Product Manager) from Microsoft joined the session to explain Stream Analytics and its latest improvements:

- Data Visibility: Validate the large volume of streaming data, parse, route and pivot the streaming data.

- Reliable & Open: Observed > 99.99% request reliability with distributed checkpointing (Fault Domain).

- Stream Analytics Security: Streaming Platform automatically employs best-in-class encryption.

- Out-of-the-box AI: Geospatial functions are supported in the integrated SAQL.

- Troubleshooting tools: A rich set of troubleshooting tools for developers.

- Cost Efficient: It’s transparent, flexible and competitive.

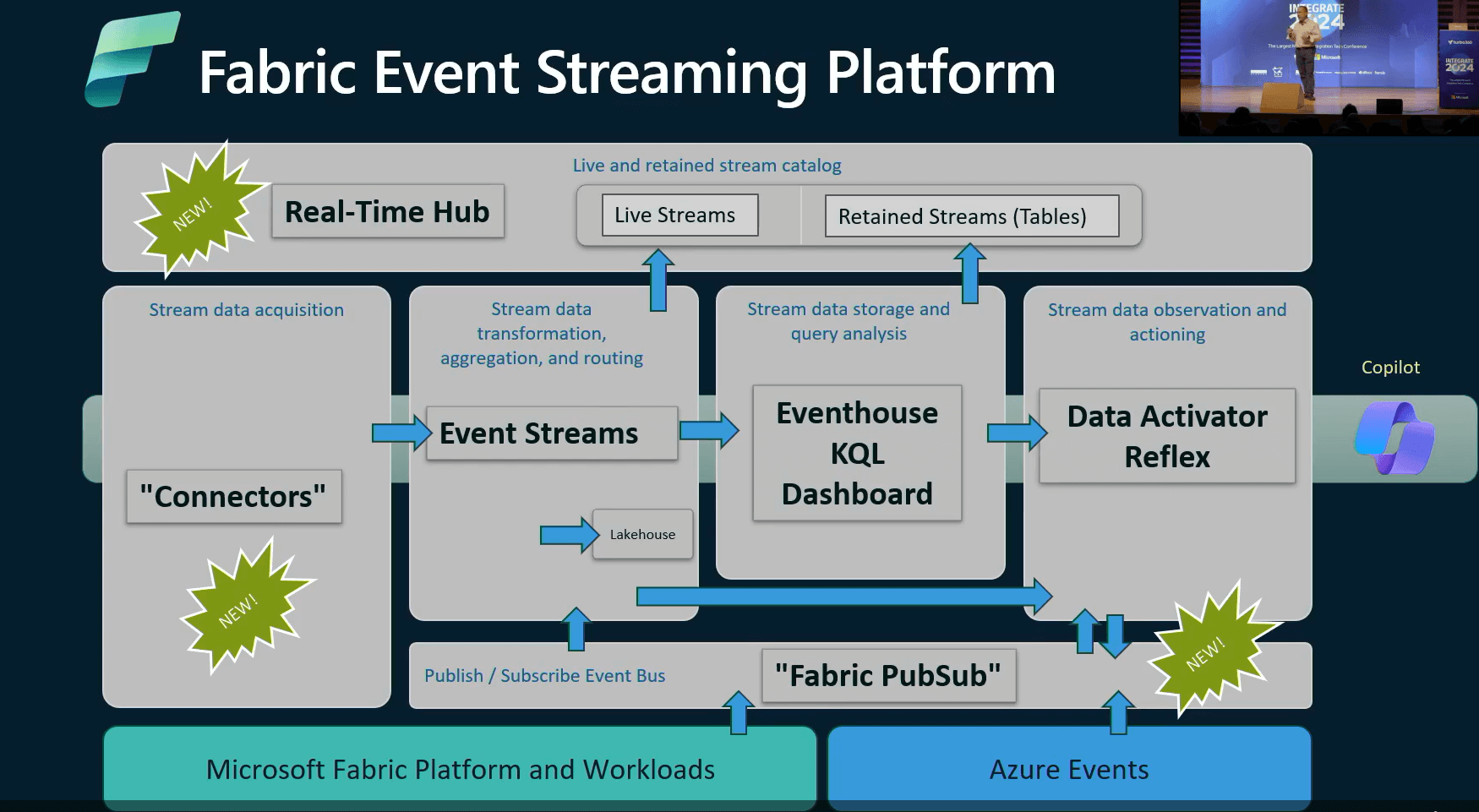

Fabric Event Streaming

Kevin Lam explained the Fabric Event Streaming platform, which handles workloads through a range of connectors. Azure Events provides streaming data insights using the Fabric pub-sub model.

Anashesh finished off the session with a demonstration of the Real-Time Hub (Preview) capabilities.

#6: BizTalk to Azure the migration journey: Azure Integration in action

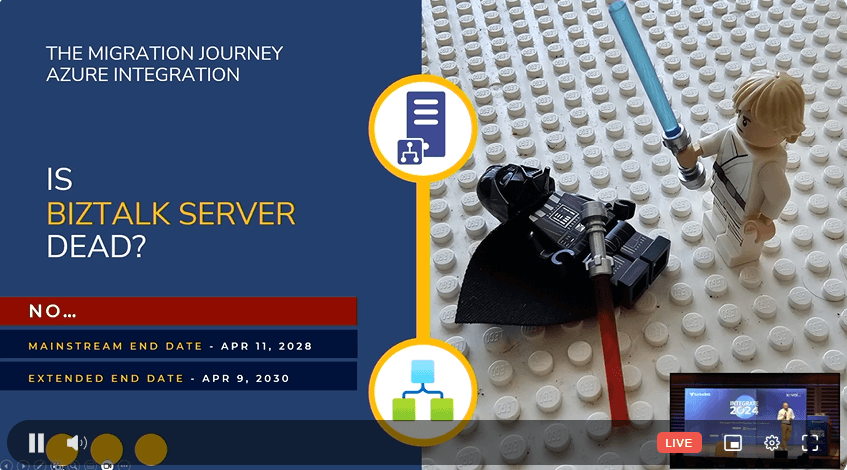

On day 1 of Integrate 2024, after the lunch break, Sandro Pereira, Head of Integration at DevScope, started the session on the much-awaited topic “BizTalk to Azure the migration journey: Azure integration in action”.

Mainstream support for BizTalk Server ends on April 11, 2028, with extended support running until April 9, 2030. The product has been on the market for 25+ years, and many companies still rely on BizTalk Server for their day-to-day activities.

Sandro mentioned that migrating from BizTalk to Azure:

- Requires upfront investment

- Is a time-consuming process

- Needs a proper cadence (scheduled process)

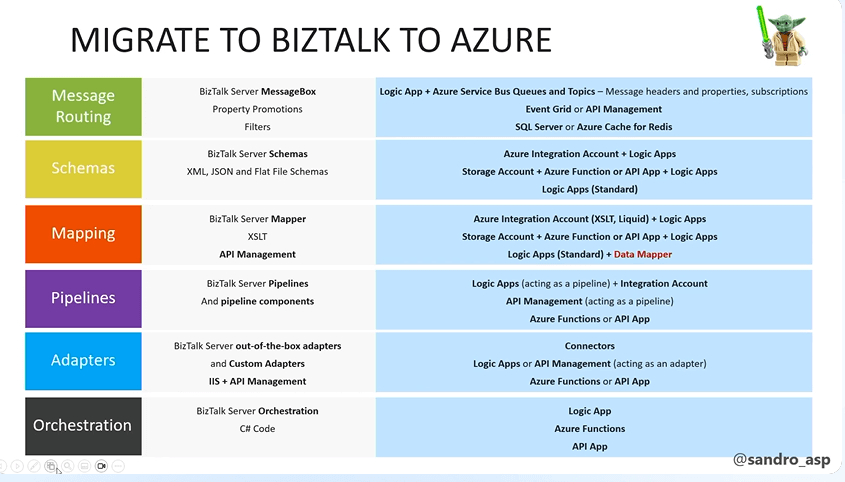

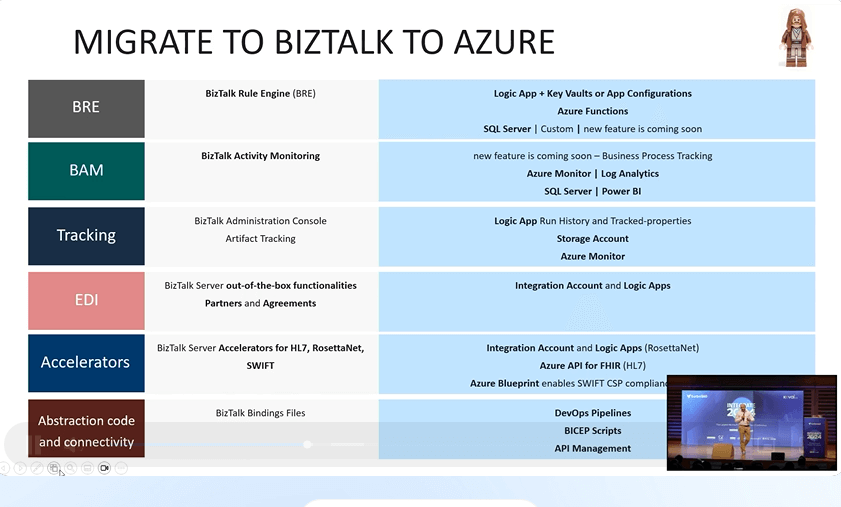

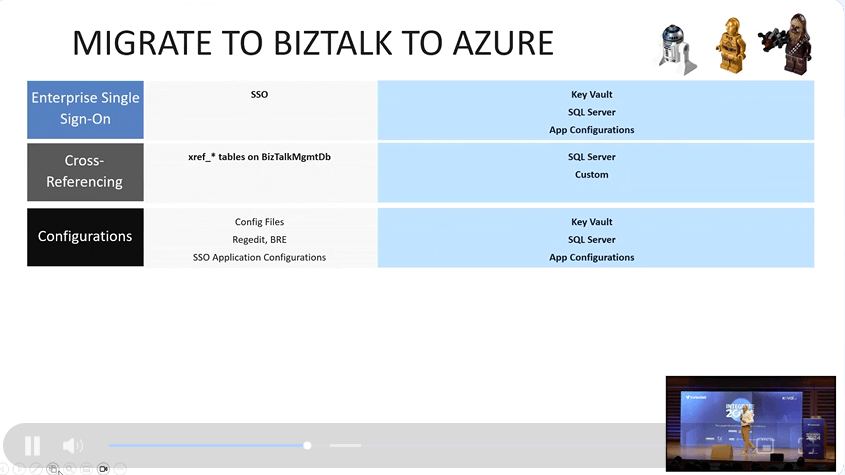

Sandro shed light on how each feature works during the migration from BizTalk to Azure.

Sandro ended the session with an interesting live demo about file shares, logic apps, and storage accounts in Azure and BizTalk Server, making the session livelier and more engaging for the audience.

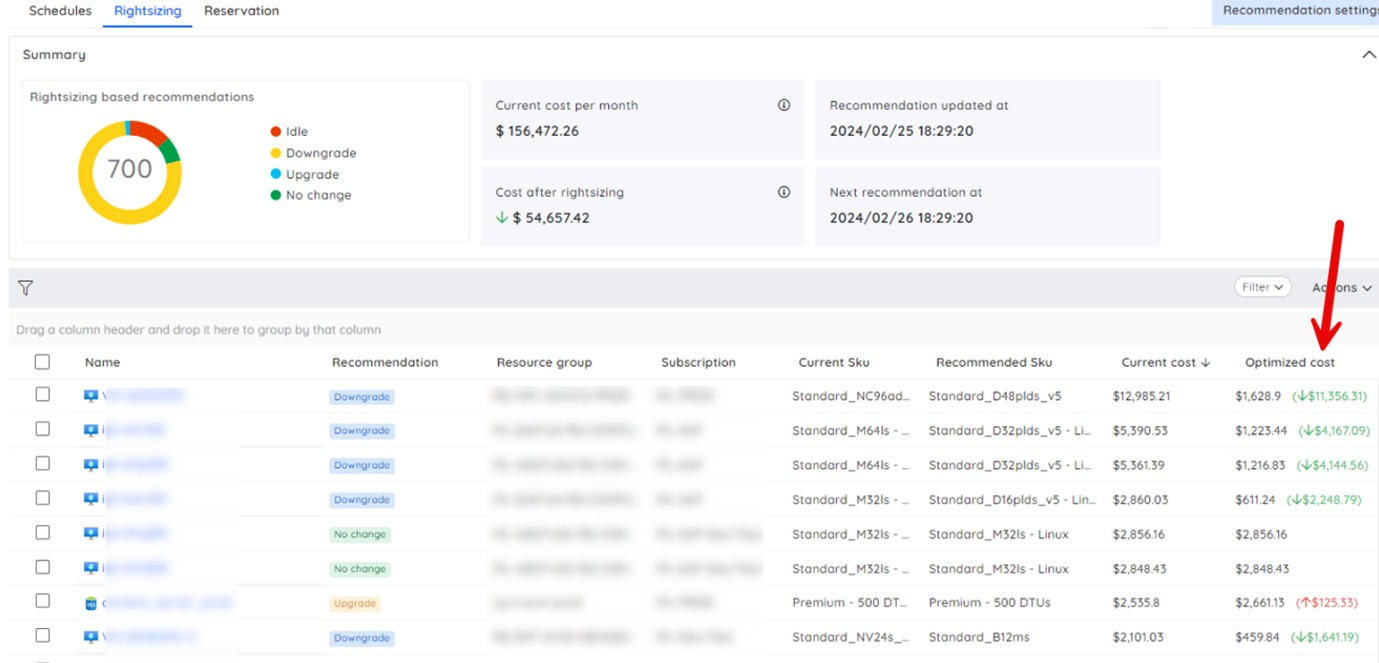

#7: How an integration team identified >$1m worth of Azure Cost savings

One of the challenges the customer faced was that Azure was not reporting many rightsizing recommendations in Azure Advisor. Nevertheless, Azure Advisor had identified 19 virtual machines with approximately $60k per year worth of rightsizing recommendations. The customer had not implemented these because they had not realized they were there; the recommendations are not easily visible in the scope of the application that would benefit from them.

The customer completed the migration and then recognised that they had missed out on Azure reservations, leaving opportunities to reduce the bill by $700k per year. Using Turbo360, they identified these savings and implemented appropriate reservations where they could reduce those costs.

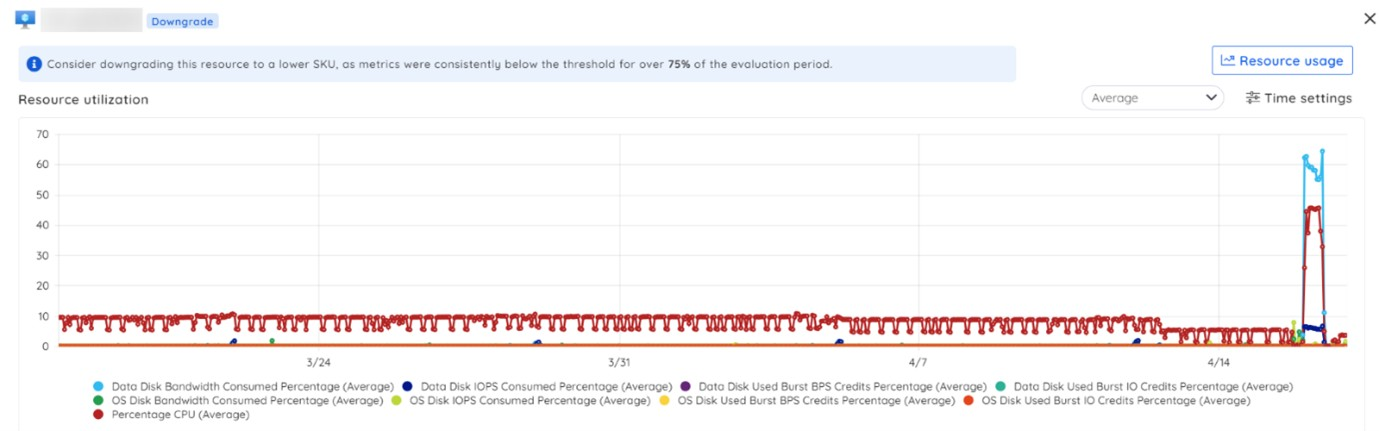

The customer's next step was to look at rightsizing opportunities. Turbo360 was flagging a lot of over-provisioned resources. When the migration was performed, an estimate of the required size for each resource was made, and it later turned out that these sizings were overestimates.

How Turbo360 identified $1M worth of savings with cost democratization

With Turbo360 the customer had democratized the management of cost to the operations teams who look after each application. This means instead of having 1 person with the right access and knowledge to look into cost, now every application team can participate in this.

Turbo360 had identified 336 downgrade recommendations which ranged in size from $10 to $12,500.

The application teams can now look to review their rightsizing recommendations within their scope and can easily review resource usage.
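The idea of scoping recommendations to the team that owns each application can be sketched in a few lines of Python. The records below are invented for illustration (only the $10 and $12,500 bounds echo the range quoted above), and the field names are assumptions rather than Turbo360's data model.

```python
from collections import defaultdict

# Made-up sample recommendations; not the customer's data.
recommendations = [
    {"application": "orders", "resource": "vm-orders-01", "annual_saving": 12500},
    {"application": "orders", "resource": "sql-orders", "annual_saving": 900},
    {"application": "payroll", "resource": "asp-payroll", "annual_saving": 10},
]


def group_by_application(recs):
    """Give each application team only the recommendations in its own scope."""
    grouped = defaultdict(list)
    for rec in recs:
        grouped[rec["application"]].append(rec)
    return dict(grouped)


def total_saving(recs):
    """Sum the potential annual saving of a set of recommendations."""
    return sum(rec["annual_saving"] for rec in recs)


by_app = group_by_application(recommendations)
totals = {app: total_saving(recs) for app, recs in by_app.items()}
```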

The customer's teams can now follow a pattern like the one shown below.

It will take the customer a while to work through the huge number of recommendations they have. Some will be very easy to implement and others will require an impact assessment but the customer will start seeing immediate cost reduction as soon as changes are implemented.

#8: Building an integration solution with GitHub Copilot chat

GitHub Copilot

In today’s session, Martin Abbott, Program Architect at Evolytica Ltd, showcased how to build a simple integration system using Azure services (APIM, Function App, Service Bus queue, Logic Apps, and Cosmos DB) with the help of GitHub Copilot. This blog captures the essence of his insightful presentation.

GitHub Copilot played a significant role in the demonstration. It’s an AI-powered code completion tool that aids in generating code suggestions and automating repetitive tasks. However, Martin emphasized that Copilot is not an autopilot. While it boosts productivity, developers should not rely on it entirely. Copilot is trained to understand the context from your Git repository, offering relevant code suggestions based on your project. With a cost of $100/year or $10/month, Copilot provides an excellent return on investment by saving time and enhancing efficiency. To get the best results, Martin advises writing specific, concise prompts that provide enough context without being overly verbose.

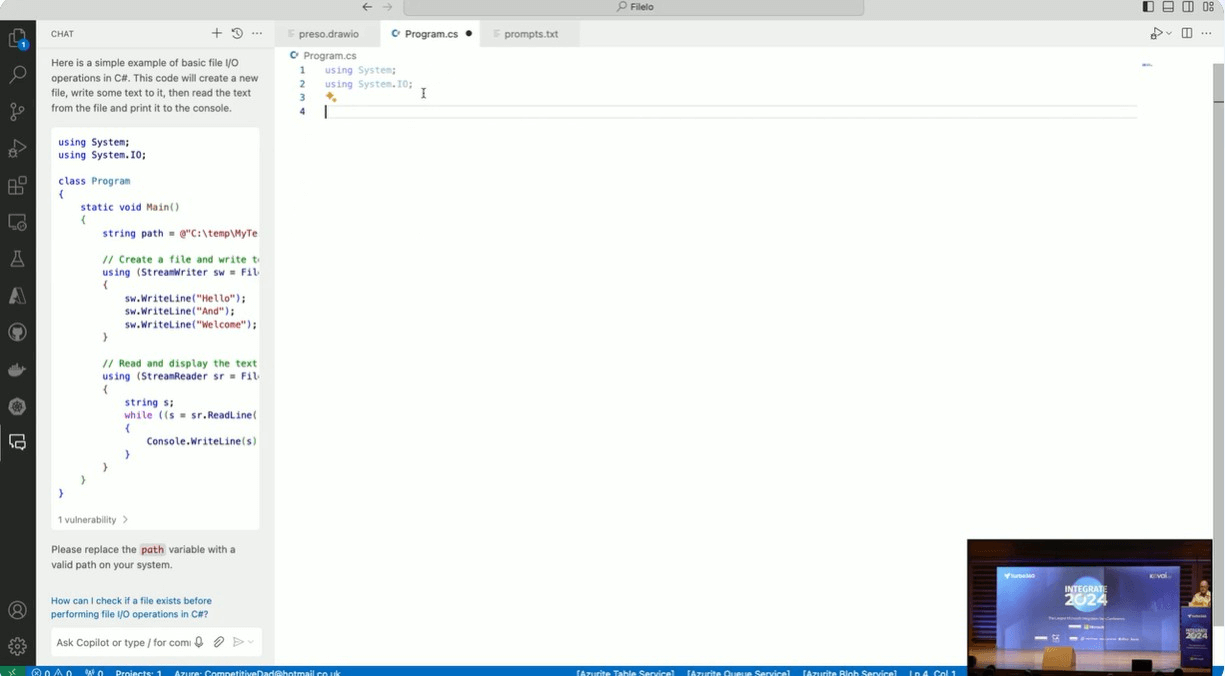

Basic Program

Martin began with a basic program to illustrate Copilot’s capabilities, such as generating and rewriting code, writing tests, and using help commands to fix bugs.

Prompt: Write some C# code to do basic file & rewrite to put operation in to separate methods

Integration Flow

Martin explained the integration flow planned for the demo, detailing how a message is sent from the API endpoints to the Cosmos DB database with GitHub Copilot's help.

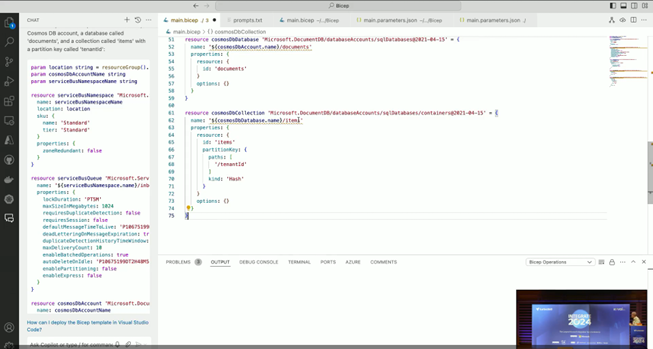

Bicep Template Deployment

Martin demonstrated deploying Azure resources such as a Service Bus queue, a Cosmos DB database, and a database collection using Bicep templates. Here’s how Copilot assisted:

Prompt: Write a Bicep template that creates a service bus namespace with a queue called inbound, a cosmos database called documents & collections called items.

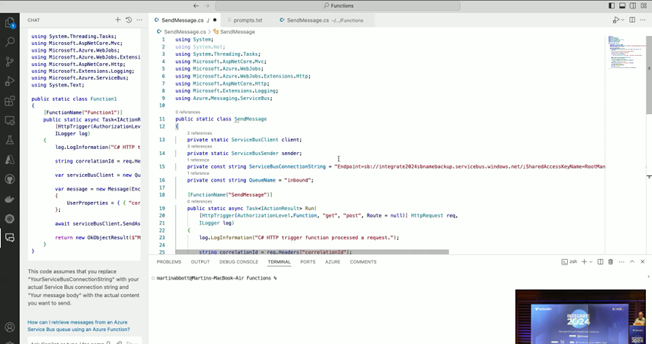

Deploying Azure Functions

Next, Martin showed how to deploy an Azure Function that uses an HTTP trigger to read a header called correlationId and send a message to the Service Bus queue called inbound.

Prompt: Write an Azure Function that uses a http trigger, reads a http header called correlationId & sends a message to service bus queue called inbound.
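The code Copilot generated in the demo is not reproduced in this recap. The Python sketch below only illustrates the logic the prompt describes: read the `correlationId` header and forward a message to the `inbound` queue. To stay self-contained it uses plain dictionaries and an injected `send_to_queue` callable instead of the Azure Functions and Service Bus SDKs; the function name, message shape, and status codes are assumptions.

```python
import json


def handle_request(headers, body, send_to_queue):
    """Read the correlationId header and forward the request body to the inbound queue."""
    # HTTP header names are case-insensitive, so normalize before the lookup.
    normalized = {name.lower(): value for name, value in headers.items()}
    correlation_id = normalized.get("correlationid")
    if not correlation_id:
        return 400, "Missing correlationId header"

    message = json.dumps({"correlationId": correlation_id, "body": body})
    send_to_queue("inbound", message)  # stand-in for a Service Bus sender
    return 202, "Accepted"


# In-memory list standing in for the Service Bus queue.
sent = []
status, text = handle_request(
    {"correlationId": "abc-123"},
    "hello",
    lambda queue, message: sent.append((queue, message)),
)
```

Injecting the sender keeps the handler testable without a live Service Bus namespace; in a real function the callable would wrap the SDK's send call.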

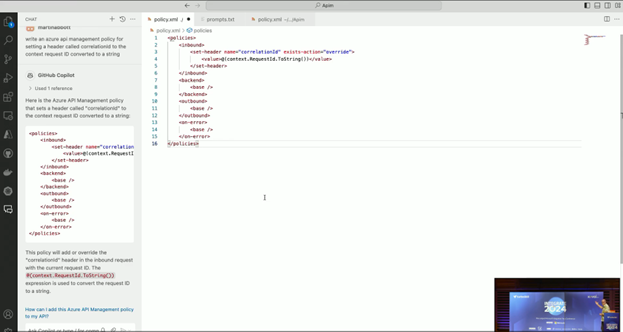

Deploying APIMs

For security, Martin configured APIM to set a header called correlationId to the context request ID converted to string, demonstrating how Copilot could assist with policy code generation.

Prompt: Write an Azure API management policy for setting a header called correlationId to the context request ID converted to string.

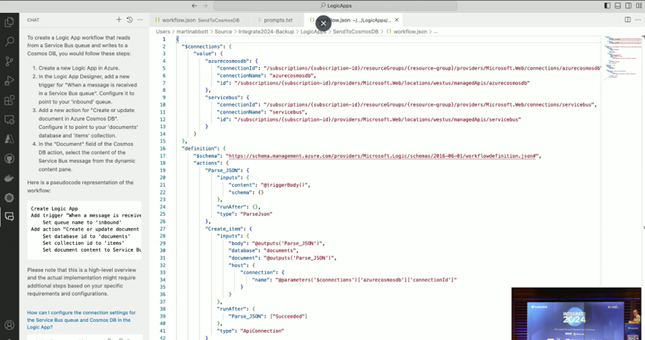

Logic Apps Deployment

Finally, Martin used Copilot to create a Logic App stateless workflow that reads from the inbound Service Bus queue and sends the contents to a Cosmos DB database called documents and a collection called items.

Prompt: Write a standard logic app stateless workflow that reads from a service bus queue called inbound, and sends the contents to a cosmos database called documents & collection called items.

Integration Flow Outcome

By the end of the session, Martin had successfully demonstrated triggering the API endpoint with a message containing correlationId as an HTTP header. The message travelled from Function Apps all the way to Cosmos DB, showcasing an integration flow generated with GitHub Copilot.

Conclusion

Martin Abbott’s session highlighted that while GitHub Copilot is not perfect, it is a powerful tool that can significantly enhance productivity and streamline development processes, making it invaluable for developers.

#9: Master PaaS networking for AIS

On day 1 of Integrate 2024, there were many awesome sessions. The session on Master PaaS networking for AIS, presented by Mattias Lögdberg, took place right after the last break.

Introduction:

Networking is not one of the best-loved topics around the globe, because it always seems to cause trouble in the end. But Mattias promised to make us comfortable with networks.

Topics covered:

- How do we work with VNets?

This topic may seem small, but within VNets there is an ocean of topics to prepare for and explore.

What do we need to build a secured landing zone (application or AIS)?

- Private Resolver – used for on-prem to Azure connections

- Route Table – Forces traffic from a tightly restricted environment to the cloud or another environment.

- DNS – The Domain Name System (DNS) turns domain names into IP addresses, which browsers use to load internet pages.

- NSG – Network Security Group

- Service Endpoints

- Private Endpoints

Whenever we want to access files in Azure from on-premises, the best way is to route the requests through a firewall.

On-prem -> Firewall -> Azure/Cloud

Mattias explained the concepts of building a secured AIS with real examples using Cosmos DB and Logic Apps. Consider the example scenario: a Cosmos DB account stores all the data, and a Logic Apps Standard app is used to access it. Both resources are publicly accessible, which is bad, so we lock them down using a VNet.

But remember that locking down the storage connected to the logic app is a bit tricky, as we need to create 4 service endpoints (Files, Queues, Tables, Blobs).

With both resources locked down from public access, deploying and maintaining the application becomes very difficult. This can be overcome by introducing a VM inside the same network.

Points to Note:

Deny All rule in NSG – restricts all communication across subnets within the same VNet, and also communication within the same subnet, unless we tell the NSG to allow specific communications.
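The effect of a Deny All rule is easier to see with a small model of how NSG rules are evaluated: rules are processed in priority order (lowest number first) and the first match decides. The Python sketch below is a simplified model, not Azure's implementation. It ignores protocol, direction, and the built-in default rules, and the subnet ranges and rule set are assumptions made for this example.

```python
import ipaddress
from dataclasses import dataclass
from typing import Optional


@dataclass
class Rule:
    priority: int          # lower number = evaluated first
    source: str            # CIDR range
    destination: str       # CIDR range
    port: Optional[int]    # None means any port
    action: str            # "Allow" or "Deny"


def matches(rule, src_ip, dst_ip, port):
    """Check whether a rule applies to the given traffic."""
    return (
        ipaddress.ip_address(src_ip) in ipaddress.ip_network(rule.source)
        and ipaddress.ip_address(dst_ip) in ipaddress.ip_network(rule.destination)
        and (rule.port is None or rule.port == port)
    )


def evaluate(rules, src_ip, dst_ip, port):
    """Return the action of the first matching rule in priority order."""
    for rule in sorted(rules, key=lambda r: r.priority):
        if matches(rule, src_ip, dst_ip, port):
            return rule.action
    return "Deny"


# Hypothetical layout: logic app subnet 10.0.1.0/24, Cosmos DB subnet 10.0.2.0/24.
rules = [
    Rule(100, "10.0.1.0/24", "10.0.2.0/24", 443, "Allow"),
    Rule(4096, "0.0.0.0/0", "0.0.0.0/0", None, "Deny"),  # Deny All
]

logic_to_cosmos = evaluate(rules, "10.0.1.4", "10.0.2.5", 443)
same_subnet = evaluate(rules, "10.0.1.4", "10.0.1.9", 443)
other_subnet = evaluate(rules, "10.0.3.7", "10.0.2.5", 443)
```

Only the explicitly allowed path gets through; traffic inside the same subnet and from other subnets falls through to the Deny All rule.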

#10: Knowledge Management for your Enterprise Integration Platform

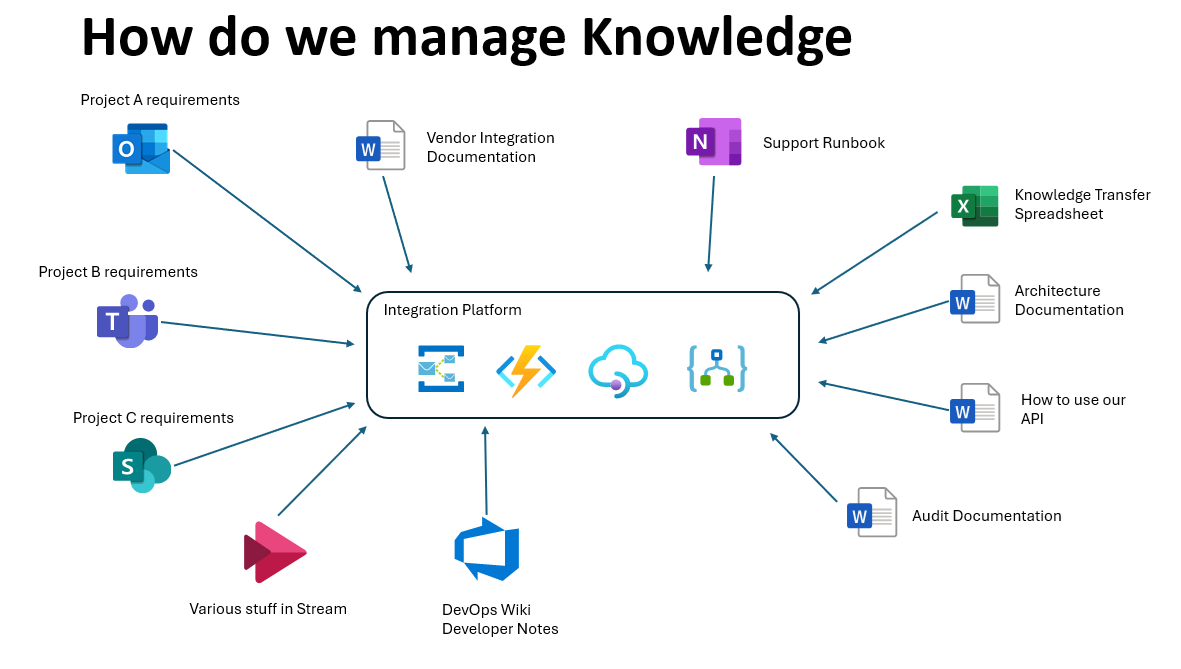

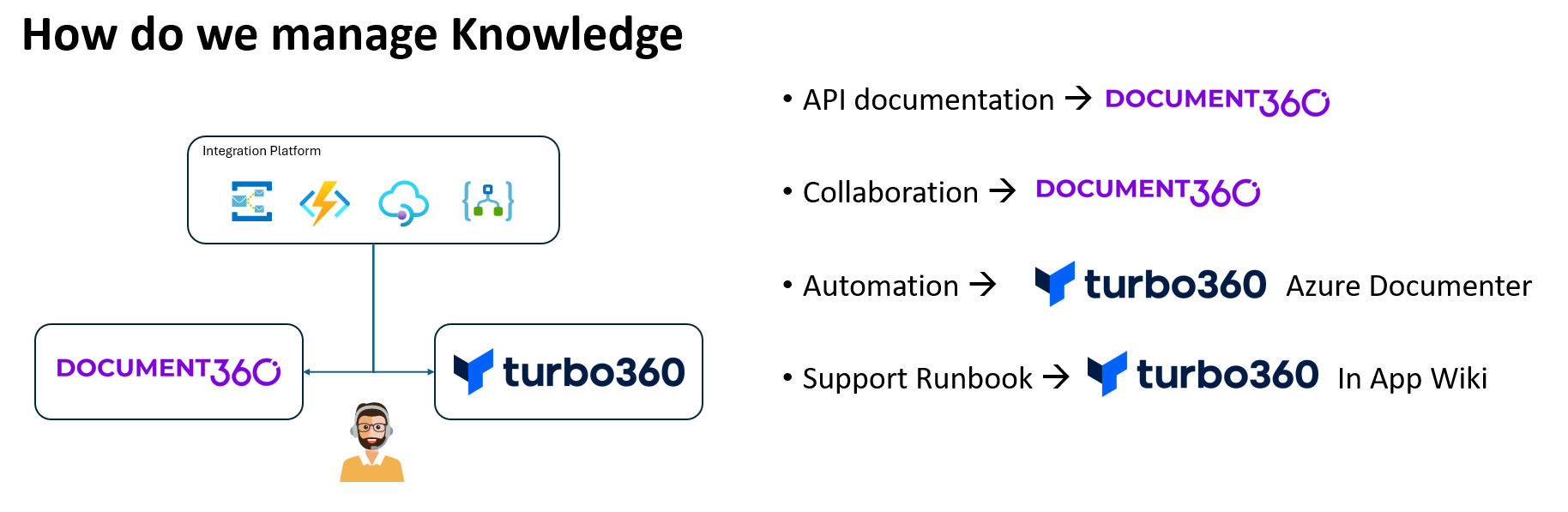

Michael Stephenson, Microsoft MVP, delivered his second session, on knowledge management for the enterprise integration platform. His session shed light on the challenges of knowledge management for enterprises, and discussed a few use cases demonstrating those challenges and how Document360 and Turbo360 address them.

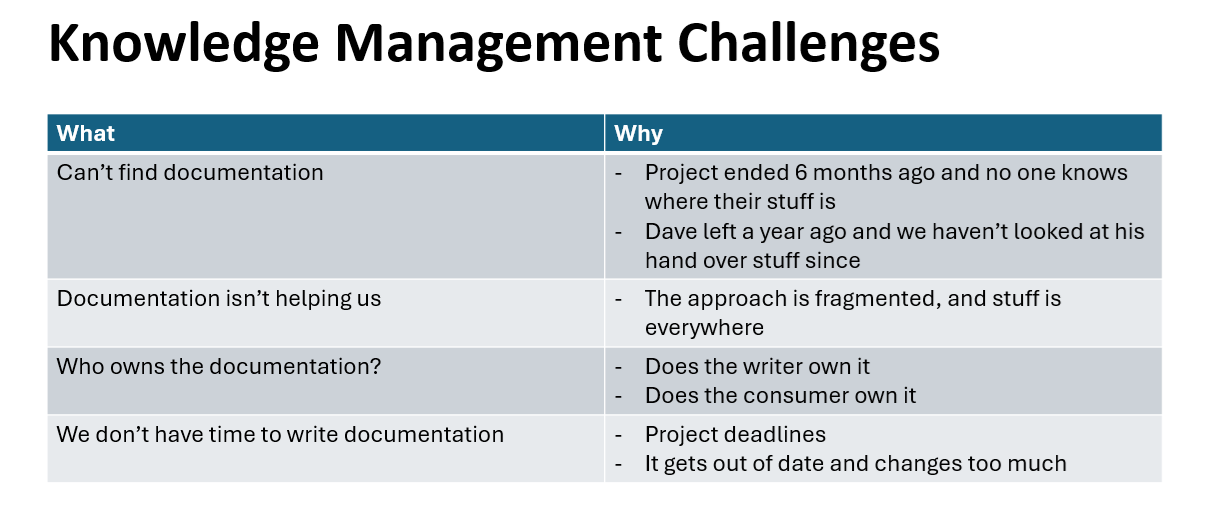

Mike started his session by discussing how the data related to an integration platform is spread out, lacking centralized knowledge management. He also highlighted the core challenges of knowledge management, which are shown below:

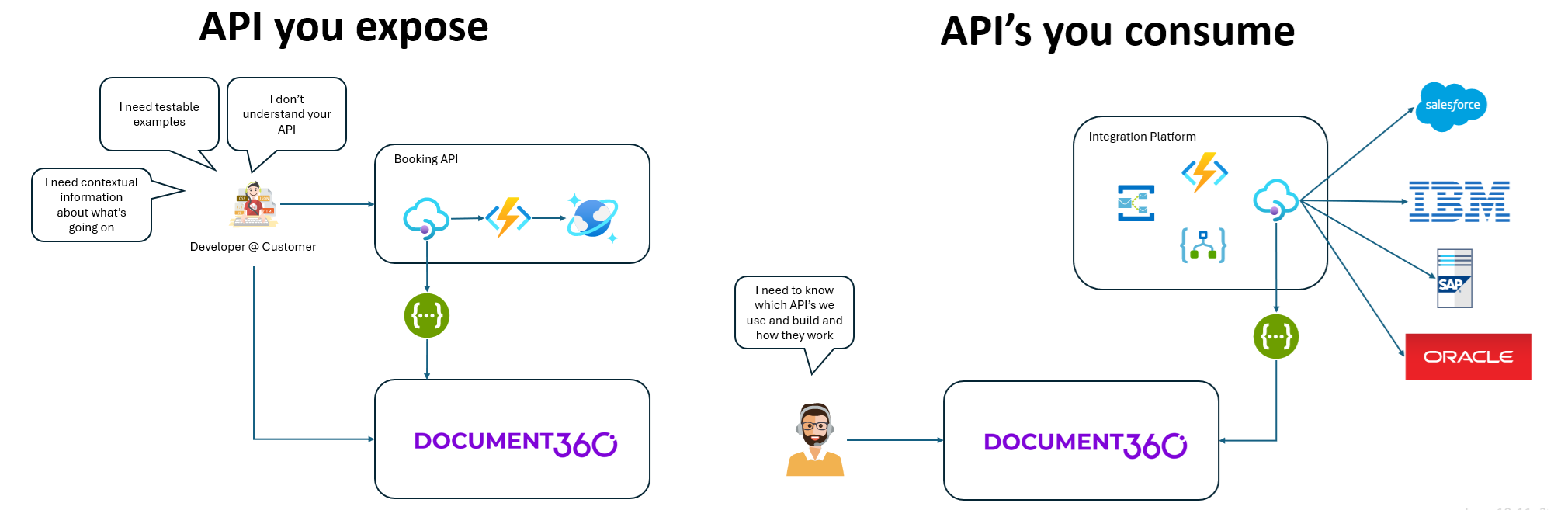

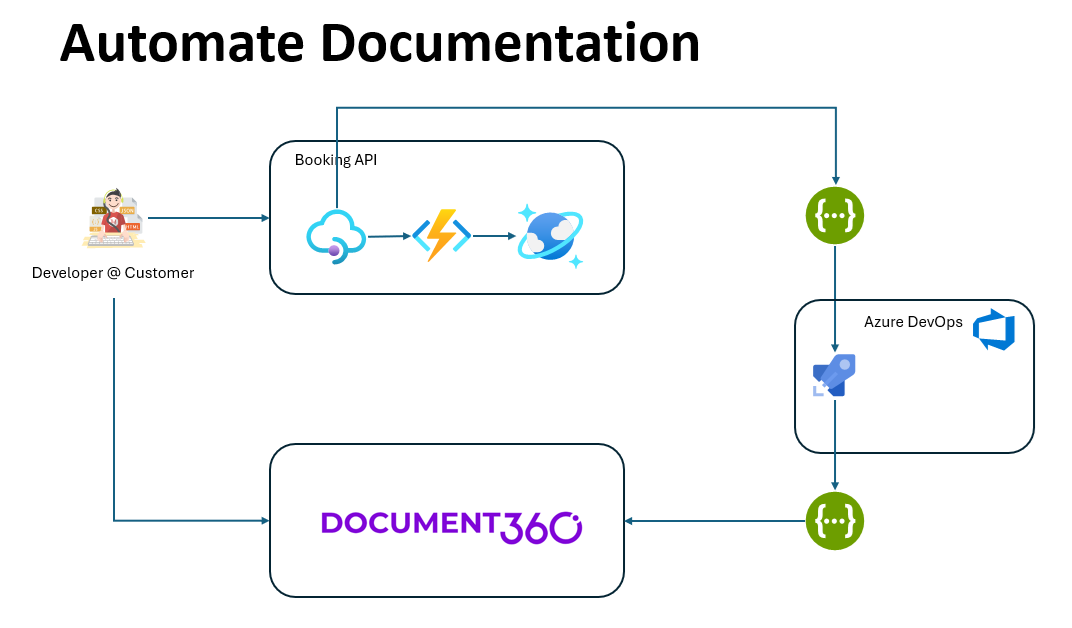

Mike then demonstrated how to generate API documentation with Document360, seamlessly and with minimal effort, for the sample integration he created for this demo.

He then demonstrated how Document360 can be integrated with Azure DevOps to generate automated documentation, and highlighted the importance of the collaborative documentation that can be achieved. He listed a few of the supported documentation types:

- Support Runbook

- Release Notes

- Developer Documentation

- Project Documentation

- Architecture Documentation

- Cost Reviews

- Team Successes

- Level 10 meetings / Sprint Retrospectives

Following this, he emphasized the importance of automatic document generation with Turbo360 and demonstrated how documents can be generated automatically. He highlighted the document types that Turbo360 can generate automatically:

- Network diagrams

- Change Audit Reports

- Detailed Documentation

- Executive summary

- RBAC permissions

- Cost Overview Reports

Finally, he summarized how to use both Document360 and Turbo360 for effective knowledge management of enterprise integration platforms.

#11: Azure API Center: What’s new in the Microsoft API Space!

Hey techies! There were a lot of mind-blowing sessions on the first day of Integrate 2024, where Julia Kasper, Program Manager at Microsoft and an API developer, presented the session “What’s new in the Microsoft API Space in Azure API Center”.

Topics covered:

- API Endpoints – Discussed various API endpoints and how the API Center facilitates generating API clients that can be integrated into other projects.

- Support for generating HTTP files.

What do API administrators do?

API administrators normally have full control over the APIs built within the organization.

Since API admins are busy, they won’t always know when APIs come and go, especially those with warnings and errors. So it is better to implement a self-sustaining application that does not depend on an API administrator.

Key Points to remember:

- Metadata – web APIs can be customized through metadata.

- In terms of governance, API analysis helps notify people about warnings and errors related to APIs. Additionally, error messages can be sent to the API administrator for resolution.

- Using GitHub Copilot to resolve API errors also aids in addressing bug issues in the API Center.
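The kind of API analysis described in the points above can be pictured as a set of lint rules run over an API definition, each producing a warning or an error. The Python sketch below is a toy version of that idea: the two rules and the sample definition are hypothetical, and they are not the rule set that Azure API Center applies.

```python
def analyze_api(definition):
    """Run two illustrative lint rules over an OpenAPI-style dictionary."""
    findings = []

    # Rule 1 (warning): the API should describe itself.
    if not definition.get("info", {}).get("description"):
        findings.append(("warning", "info.description is missing"))

    # Rule 2 (error): every operation needs an operationId.
    for path, operations in definition.get("paths", {}).items():
        for method, operation in operations.items():
            if "operationId" not in operation:
                findings.append(("error", f"{method.upper()} {path} has no operationId"))

    return findings


# Hypothetical API definition with one issue of each severity.
sample = {
    "info": {"title": "Orders API"},
    "paths": {
        "/orders": {
            "get": {"operationId": "listOrders"},
            "post": {},
        }
    },
}
findings = analyze_api(sample)
```

Findings like these are what would be surfaced to API owners, or to the API administrator for resolution.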

Future Plans:

- The team is currently investigating shadow APIs and how they can be used to identify API faults with ease.

Conclusion:

This session marked the end of Day 1 of Integrate 2024. Day 1 was very insightful for integration users in Azure.